Cursor's Bugbot evolution showcases how AI-driven code review agents are overcoming early limitations to achieve measurable impact on software quality.

As AI-assisted development accelerates coding velocity, the bottleneck has shifted downstream to code review. Cursor's journey with Bugbot illustrates how specialized AI agents are evolving from novelty to necessity in maintaining quality at scale. Unlike broad-coverage coding assistants, Bugbot focuses exclusively on identifying logic bugs, performance issues, and security vulnerabilities before they reach production - a deliberate specialization that required solving unique technical challenges.

Early iterations faced fundamental limitations. "When we first tried to build a code review agent, the models weren't capable enough for the reviews to be helpful," explains the Cursor team. Initial versions relied on qualitative assessments where engineers manually evaluated false positive rates. The breakthrough came through parallel processing: running eight randomized passes of the same diff followed by majority voting to filter spurious findings. This approach leveraged model variability while mitigating individual hallucinations.
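The voting step can be illustrated with a minimal sketch. It assumes each pass returns a set of findings and that "randomized" means shuffling the order of the diff hunks. The `Finding` tuple, the `review_pass` callable, and the strict-majority threshold are hypothetical stand-ins, not Cursor's actual implementation, and the passes run sequentially here rather than in parallel.

```python
import random
from collections import Counter
from typing import Callable, Iterable

# Hypothetical finding shape: (file, line, category).
Finding = tuple[str, int, str]


def majority_vote(passes: list[set[Finding]]) -> set[Finding]:
    """Keep only findings reported by more than half of the passes."""
    counts = Counter(f for findings in passes for f in findings)
    needed = len(passes) / 2
    return {f for f, n in counts.items() if n > needed}


def review_with_voting(
    hunks: list[str],
    review_pass: Callable[[list[str]], Iterable[Finding]],
    n_passes: int = 8,
    seed: int = 0,
) -> set[Finding]:
    """Run several passes over shuffled copies of the diff, then vote.

    `review_pass` stands in for a single model call (an assumption).
    """
    rng = random.Random(seed)
    results = []
    for _ in range(n_passes):
        shuffled = hunks[:]      # copy so the caller's list is untouched
        rng.shuffle(shuffled)    # randomize hunk order for this pass
        results.append(set(review_pass(shuffled)))
    return majority_vote(results)
```

A finding that appears in only one or two of the eight passes is dropped as likely spurious, while one that most passes agree on survives.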

The transition from prototype to production demanded infrastructure most teams overlook. Cursor rebuilt their Git integration in Rust for performance, implemented proxy-based infrastructure to navigate GitHub's rate limits, and developed Bugbot Rules - a domain-specific language allowing teams to encode project-specific invariants like unsafe migrations or internal API misuse. These foundations enabled scaling but revealed a critical gap: without quantitative metrics, quality improvements plateaued.

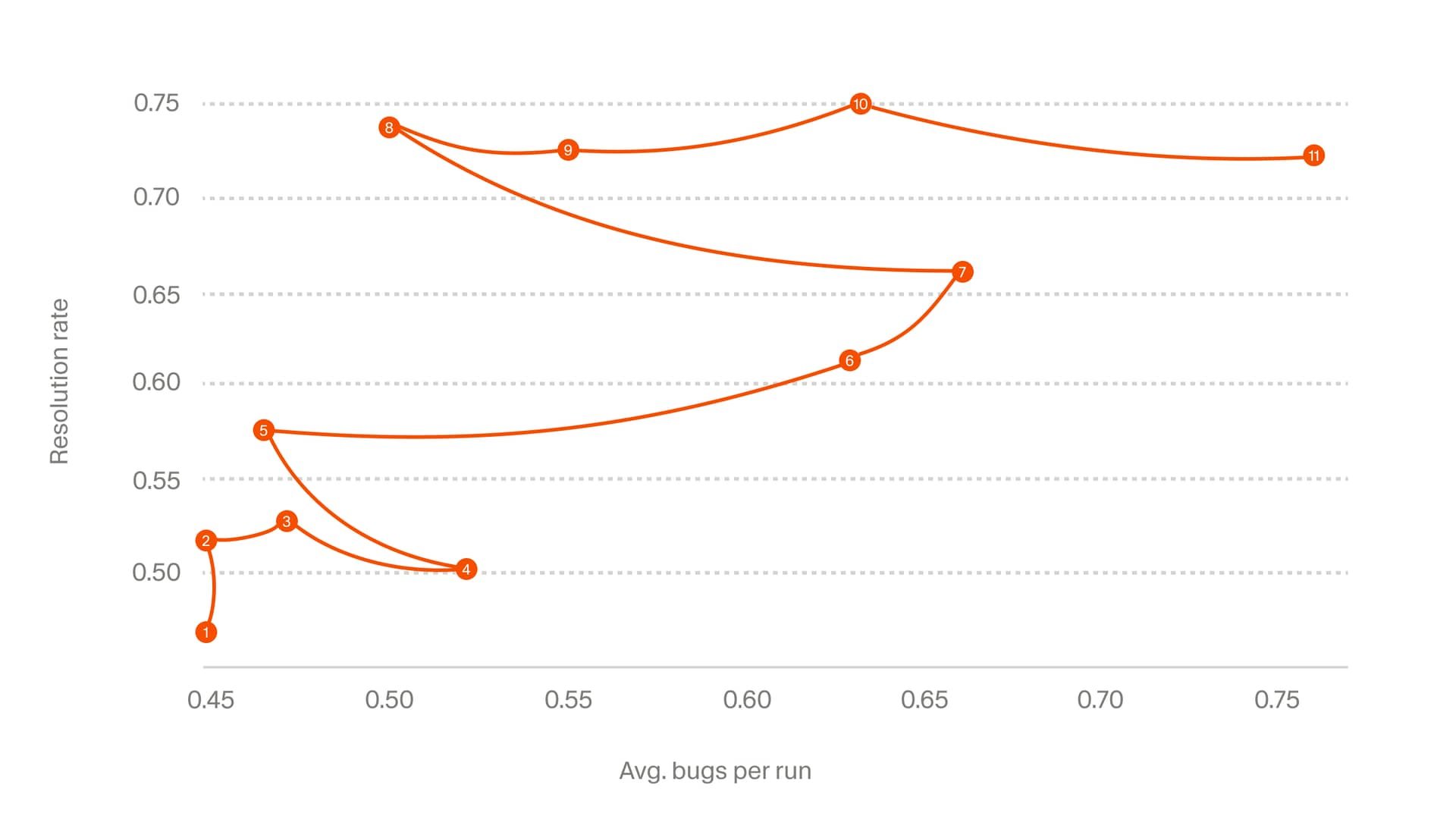

Cursor's solution was the Resolution Rate metric - an AI-powered system that determines which flagged bugs were actually fixed before merging. "Resolution rate directly answers whether Bugbot is finding real issues that engineers fix," notes the team. This became the north star for optimization, enabling data-driven experimentation across 40 major iterations.
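As a rough sketch of the arithmetic behind such a metric, assume each flagged bug carries a boolean saying whether it was fixed before merge. In Bugbot that judgment comes from an AI-powered system; here it is simply given as input. The `FlaggedBug` class and its field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FlaggedBug:
    pr_id: str                    # hypothetical identifier for the pull request
    description: str
    resolved_before_merge: bool   # assumed to be decided upstream


def resolution_rate(bugs: list[FlaggedBug]) -> float:
    """Fraction of flagged bugs that were fixed before the PR merged."""
    if not bugs:
        return 0.0
    resolved = sum(1 for b in bugs if b.resolved_before_merge)
    return resolved / len(bugs)
```

Under this definition, 7 resolved bugs out of 10 flagged gives a resolution rate of 0.7.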

Quantitative measurement revealed surprising insights. Many intuitively promising changes actually regressed performance, which suggested that the team's earlier qualitative judgments had been sound. The most significant leap came from switching to an agentic architecture in which the AI dynamically investigates suspicious patterns rather than following rigid passes. This required flipping the prompting strategy from restraint to aggression: encouraging the model to err toward flagging potential issues.

Agentic design unlocked deeper optimizations. By shifting context from static to dynamic loading, the system reduced overhead while allowing the model to request precisely what it needed during analysis. Tooling interfaces became leverage points where minor adjustments yielded disproportionate improvements in accuracy.
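The difference between static and dynamic context loading can be sketched as follows. This is an illustration under stated assumptions, not Bugbot's design: the repository is modeled as a dictionary of path to contents, and `next_request` is a hypothetical stand-in for the model's tool call that names the next file it wants to read.

```python
from typing import Callable, Optional


def static_context(repo: dict[str, str]) -> str:
    """Static loading: hand over every file up front, needed or not."""
    return "\n".join(repo.values())


def dynamic_context(
    diff: str,
    repo: dict[str, str],
    next_request: Callable[[str, dict[str, str]], Optional[str]],
    max_steps: int = 5,
) -> dict[str, str]:
    """Dynamic loading: fetch a file only when the agent asks for it.

    `next_request` returns a path to load, or None when the agent is done.
    """
    loaded: dict[str, str] = {}
    for _ in range(max_steps):       # bound the loop so a bad agent cannot spin
        path = next_request(diff, loaded)
        if path is None or path not in repo:
            break
        loaded[path] = repo[path]
    return loaded
```

With an agent that asks for a single related file and then stops, the loaded context contains just that file instead of the whole repository.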

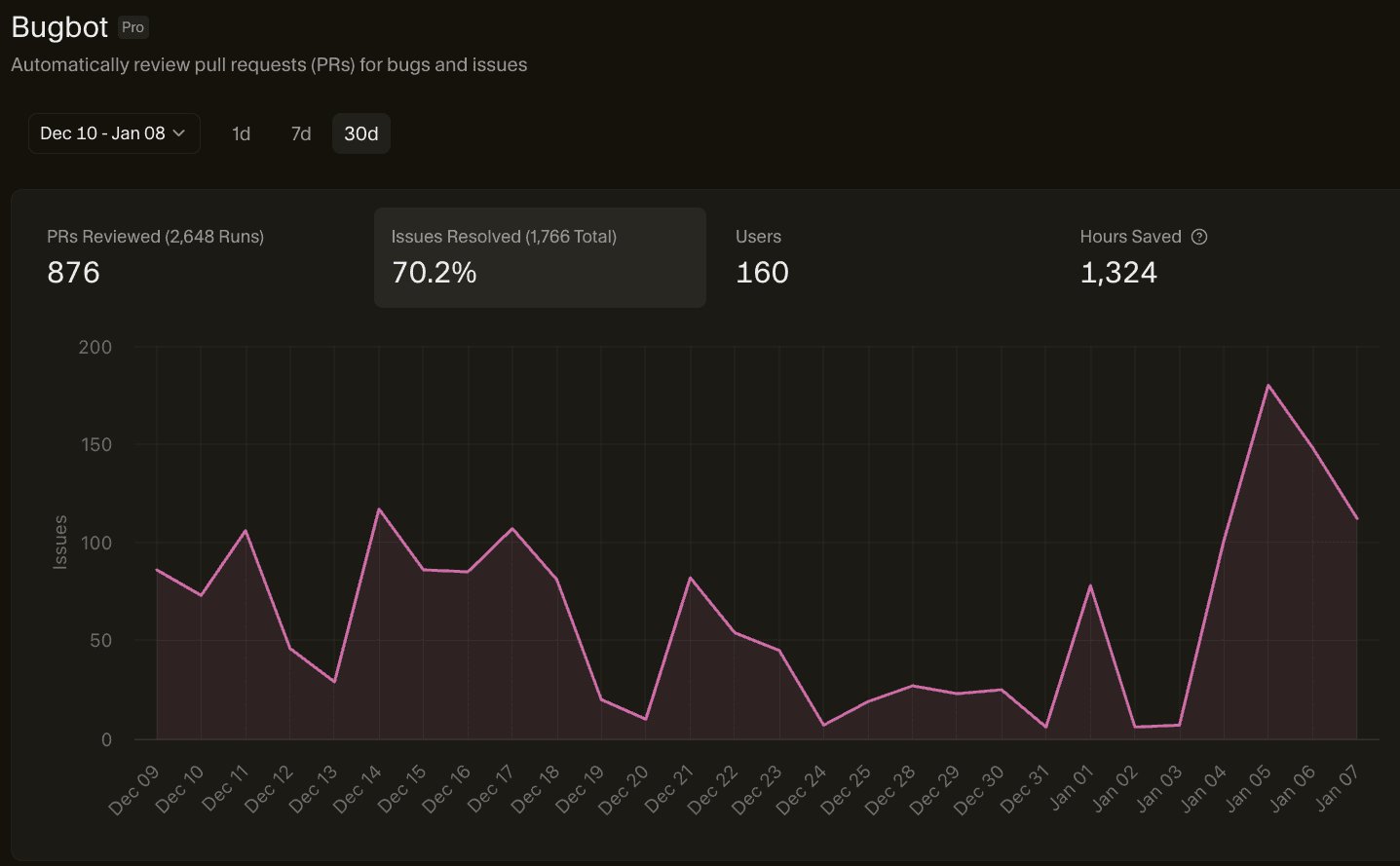

Today, Bugbot analyzes over two million PRs monthly for clients including Discord and Airtable, achieving a 70% resolution rate with 0.7 bugs identified per run - a 75% improvement since launch. Yet some engineers express skepticism about false positive rates and integration overhead. Cursor's data shows newer versions increased true positives without comparable growth in false flags, while their Rust-based Git layer minimizes performance impact.

Looking ahead, Bugbot Autofix enters beta with plans for self-verification through code execution and always-on codebase scanning. As one engineer commented: "The real test is whether it catches what humans miss without creating new bottlenecks." With documentation now publicly available, teams can evaluate whether Bugbot's specialized approach delivers on its promise of scaling quality assurance alongside AI-powered development.
