Security researchers have uncovered two high-severity vulnerabilities in the popular Chainlit AI framework that could allow attackers to steal sensitive data and perform server-side request forgery attacks, potentially leading to lateral movement within compromised organizations.

Chainlit, a popular open-source framework for building conversational chatbots, contains two high-severity security flaws that could let attackers steal sensitive data and, when chained, compromise the systems around it. Discovered by researchers at Zafran Security, the vulnerabilities, collectively dubbed ChainLeak, allow arbitrary file reads and server-side request forgery (SSRF) against AI applications built with the framework.

Chainlit has seen significant adoption in the AI development community, with over 7.3 million total downloads according to the Python Software Foundation. The framework's popularity makes these vulnerabilities particularly concerning, as they could affect a wide range of AI applications deployed in production environments.

The Vulnerabilities in Detail

The two vulnerabilities affect the framework's "/project/element" update flow and have been assigned the following CVE identifiers:

CVE-2026-22218 (CVSS 7.1): Arbitrary File Read. This vulnerability stems from inadequate validation of user-controlled fields in the file update functionality. An authenticated attacker can exploit this flaw to read any file accessible to the service process, including sensitive configuration files, environment variables, and application source code.

The researchers demonstrated that an attacker could read the /proc/self/environ file to extract valuable information such as API keys, database credentials, and internal file paths. In setups using SQLAlchemy with an SQLite backend, the vulnerability could also be used to leak database files directly.
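The general defense against this class of bug is to resolve any user-supplied path and confirm it still sits inside the directory the application intends to serve. The sketch below illustrates that idea only; it is not Chainlit's actual patch, and the function name `resolve_inside` and the upload directory are hypothetical.

```python
from pathlib import Path


def resolve_inside(base_dir: str, user_path: str) -> Path:
    """Return the resolved path if it stays inside base_dir, else raise ValueError.

    Hypothetical helper for illustration; not taken from the Chainlit codebase.
    """
    base = Path(base_dir).resolve()
    # Joining with an absolute user_path silently discards base, and ".."
    # segments can climb out of it, so resolve first and check containment after.
    candidate = (base / user_path).resolve()
    if not candidate.is_relative_to(base):  # requires Python 3.9+
        raise ValueError(f"path escapes allowed directory: {user_path!r}")
    return candidate
```

With a base of `/srv/app/uploads` (an assumed location), a request for `/proc/self/environ` or `../../etc/passwd` raises `ValueError`, while `a/b.txt` resolves normally.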

CVE-2026-22219 (CVSS 8.3): Server-Side Request Forgery. When Chainlit is configured with the SQLAlchemy data layer backend, this SSRF vulnerability allows attackers to make arbitrary HTTP requests from the Chainlit server. This could be used to target internal network services or cloud metadata endpoints, potentially leading to privilege escalation and data exfiltration.
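A common application-level guard against SSRF is to resolve the target host and refuse any address that is not publicly routable before the server fetches anything. The following is a minimal sketch under that assumption, using only the Python standard library; the function names are hypothetical and this is not how Chainlit fixed the issue.

```python
import ipaddress
import socket
from urllib.parse import urlsplit


def is_internal_address(ip_text: str) -> bool:
    """True for loopback, private, link-local and other non-public addresses."""
    return not ipaddress.ip_address(ip_text).is_global


def is_url_allowed(url: str, resolve=socket.getaddrinfo) -> bool:
    """Allow only http(s) URLs whose host resolves exclusively to public IPs.

    `resolve` is injectable so the check can be exercised without real DNS.
    """
    parts = urlsplit(url)
    if parts.scheme not in ("http", "https") or not parts.hostname:
        return False
    try:
        infos = resolve(parts.hostname, parts.port or 443, proto=socket.IPPROTO_TCP)
        addresses = [info[4][0] for info in infos]
        # Reject if nothing resolved or if any resolved address is internal.
        return bool(addresses) and not any(is_internal_address(a) for a in addresses)
    except (OSError, ValueError):
        return False
```

A check like this is only one layer. It does not cover redirects, and a hostname can resolve differently between the check and the actual fetch, so network-level egress controls remain necessary.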

Attack Chain and Impact

Zafran researchers Gal Zaban and Ido Shani warn that the two flaws are considerably more dangerous when chained:

"The two Chainlit vulnerabilities can be combined in multiple ways to leak sensitive data, escalate privileges, and move laterally within the system," the researchers explained. "Once an attacker gains arbitrary file read access on the server, the AI application's security quickly begins to collapse. What initially appears to be a contained flaw becomes direct access to the system's most sensitive secrets and internal state."

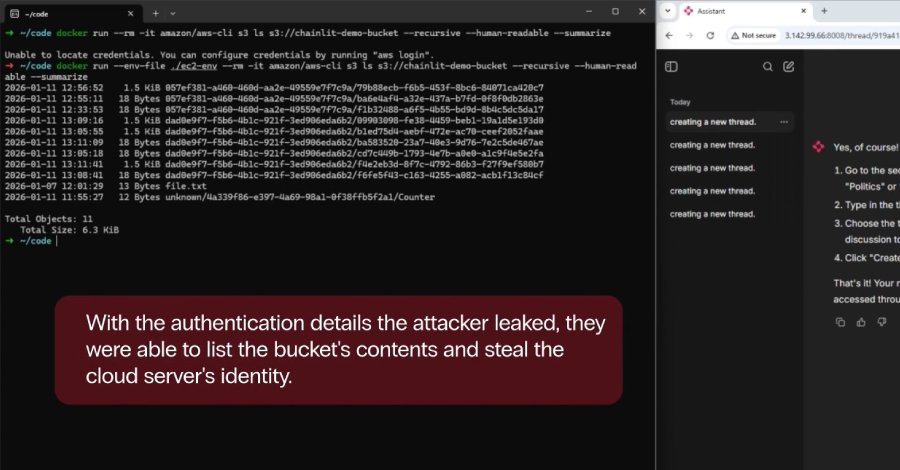

The attack scenario typically begins with an attacker gaining authenticated access to the Chainlit application. From there, they can:

- Use the file read vulnerability to extract environment variables containing cloud service credentials

- Leverage SSRF to query cloud metadata services (like AWS IMDS) for additional credentials

- Use stolen credentials to pivot to other systems within the network

- Exfiltrate sensitive data from the AI application's database or connected systems
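The first step in that chain depends on what the service keeps in its process environment. As a rough self-audit, a team could list which variable names in an environment dump look like secrets. The sketch below assumes the NUL-separated format of `/proc/self/environ` on Linux; the name pattern is an illustrative guess, not an exhaustive rule.

```python
import re

# Illustrative pattern only: real deployments may use other naming schemes.
SECRET_NAME = re.compile(
    r"KEY|SECRET|TOKEN|PASSWORD|PASSWD|CREDENTIAL|DATABASE_URL", re.IGNORECASE
)


def secret_like_names(environ_blob: bytes) -> list[str]:
    """Return sorted variable names in a NUL-separated environ dump that look sensitive."""
    names = []
    for entry in environ_blob.split(b"\0"):
        if b"=" not in entry:
            continue  # skip empty trailing entries and malformed fragments
        name = entry.split(b"=", 1)[0].decode("utf-8", errors="replace")
        if SECRET_NAME.search(name):
            names.append(name)
    return sorted(names)
```

On a Linux host this can be run against the service's own environment with `secret_like_names(open("/proc/self/environ", "rb").read())`. Only names are returned, so the output can be shared without exposing values.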

Broader Context: MCP Server Vulnerabilities

The Chainlit disclosure coincides with another significant vulnerability in Microsoft's MarkItDown Model Context Protocol (MCP) server, discovered by BlueRock. Dubbed "MCP fURI," this flaw enables arbitrary URI resource calling, exposing organizations to similar SSRF, privilege escalation, and data leakage attacks.

BlueRock's analysis of over 7,000 MCP servers found that approximately 36.7% are likely exposed to similar SSRF vulnerabilities. The MarkItDown vulnerability specifically affects servers running on AWS EC2 instances using IMDSv1, allowing attackers to query instance metadata and obtain AWS credentials.

Mitigation and Remediation

Following responsible disclosure on November 23, 2025, Chainlit addressed both vulnerabilities in version 2.9.4, released on December 24, 2025. Organizations using Chainlit should update to version 2.9.4 or later immediately.

For the MarkItDown MCP server vulnerability, BlueRock recommends:

- Upgrade to IMDSv2: Use AWS Instance Metadata Service Version 2, which provides better protection against SSRF attacks

- Implement private IP blocking: Block requests to private and link-local IP ranges so that untrusted input cannot reach metadata services or other internal endpoints

- Create allowlists: Prevent data exfiltration by restricting outbound connections to approved destinations

- Network segmentation: Isolate AI application servers from sensitive internal networks
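IMDSv2 raises the bar for SSRF because credentials can no longer be fetched with a single GET: the caller must first obtain a session token through a PUT request carrying a custom header, then present that token on every metadata request. The sketch below only builds the two requests with Python's standard library so the difference is visible; it sends nothing, and the helper names are hypothetical.

```python
import urllib.request

IMDS_BASE = "http://169.254.169.254"


def build_token_request(ttl_seconds: int = 21600) -> urllib.request.Request:
    """Step 1: PUT request for a session token (TTL may be 1 to 21600 seconds)."""
    return urllib.request.Request(
        f"{IMDS_BASE}/latest/api/token",
        method="PUT",
        headers={"X-aws-ec2-metadata-token-ttl-seconds": str(ttl_seconds)},
    )


def build_metadata_request(path: str, token: str) -> urllib.request.Request:
    """Step 2: GET request for metadata that must carry the session token."""
    return urllib.request.Request(
        f"{IMDS_BASE}/latest/meta-data/{path.lstrip('/')}",
        headers={"X-aws-ec2-metadata-token": token},
    )
```

An SSRF primitive that can only issue plain GET requests with no attacker-controlled headers cannot complete step 1, which is why enforcing IMDSv2 blunts this class of attack. It does not help if the vulnerable component lets the attacker choose the method and headers.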

The AI Security Challenge

These vulnerabilities highlight a growing concern in the AI security landscape. As organizations rapidly adopt AI frameworks and third-party components, traditional software vulnerabilities are being embedded directly into AI infrastructure.

"These frameworks introduce new and often poorly understood attack surfaces, where well-known vulnerability classes can directly compromise AI-powered systems," Zafran noted. The rapid adoption of AI technologies has led to many organizations deploying these frameworks without fully understanding their security implications.

Practical Recommendations for Organizations

- Inventory and Update: Immediately identify all Chainlit deployments and update to version 2.9.4 or later

- Audit MCP Servers: Review any Model Context Protocol servers in use and assess their exposure to SSRF vulnerabilities

- Implement Defense in Depth: Apply network segmentation, restrict egress traffic, and monitor for suspicious file access patterns

- Review Cloud Configurations: Ensure cloud metadata services are properly secured (IMDSv2 for AWS)

- Monitor for Exploitation: Implement logging and alerting for unusual file access or outbound connection patterns
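For the inventory step, a quick check of the installed package version against the patched release can be scripted. This is a minimal sketch using only the standard library: the helper names are hypothetical, and the comparison looks at the numeric release segment only, so pre-release suffixes such as `rc1` are ignored.

```python
import re
from importlib import metadata
from typing import Optional

PATCHED = (2, 9, 4)  # first fixed Chainlit release, per the advisory


def release_tuple(version: str) -> tuple:
    """Extract the leading numeric release segment, e.g. '2.9.4rc1' -> (2, 9, 4)."""
    match = re.match(r"\d+(?:\.\d+)*", version)
    if not match:
        raise ValueError(f"unrecognized version string: {version!r}")
    return tuple(int(part) for part in match.group().split("."))


def is_patched(version: str) -> bool:
    """True if the numeric release is at least 2.9.4."""
    parts = release_tuple(version)
    padded = parts + (0,) * (3 - len(parts))  # treat '2.10' as 2.10.0
    return padded >= PATCHED


def installed_chainlit_version() -> Optional[str]:
    """Return the installed chainlit version, or None if it is not installed."""
    try:
        return metadata.version("chainlit")
    except metadata.PackageNotFoundError:
        return None
```

Run inside each deployment's Python environment, `installed_chainlit_version()` followed by `is_patched(...)` gives a yes-or-no answer per host. It will not find copies vendored into container images that are not currently running.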

The ChainLeak vulnerabilities serve as a reminder that AI frameworks are not immune to traditional security flaws. As AI adoption continues to accelerate, organizations must apply the same rigorous security practices to AI infrastructure as they do to other critical systems.

For more information on these vulnerabilities and mitigation strategies, organizations should consult the Zafran Security advisory and the Chainlit GitHub repository for official updates and patch information.
