Researchers have unveiled DAAAM, a spatio-temporal memory framework that targets key limitations of real-time 4D scene analysis. By combining an optimization-based frontend with hierarchical scene graphs, the system reports substantial accuracy gains in object grounding and semantic understanding while still running in real time.

Current computer vision systems for robotics and augmented reality face a fundamental dilemma: the more detailed their semantic understanding of environments becomes, the harder it is to maintain real-time performance. This tradeoff has constrained applications ranging from warehouse robotics to AR navigation, where both rich environmental context and instantaneous processing are essential.

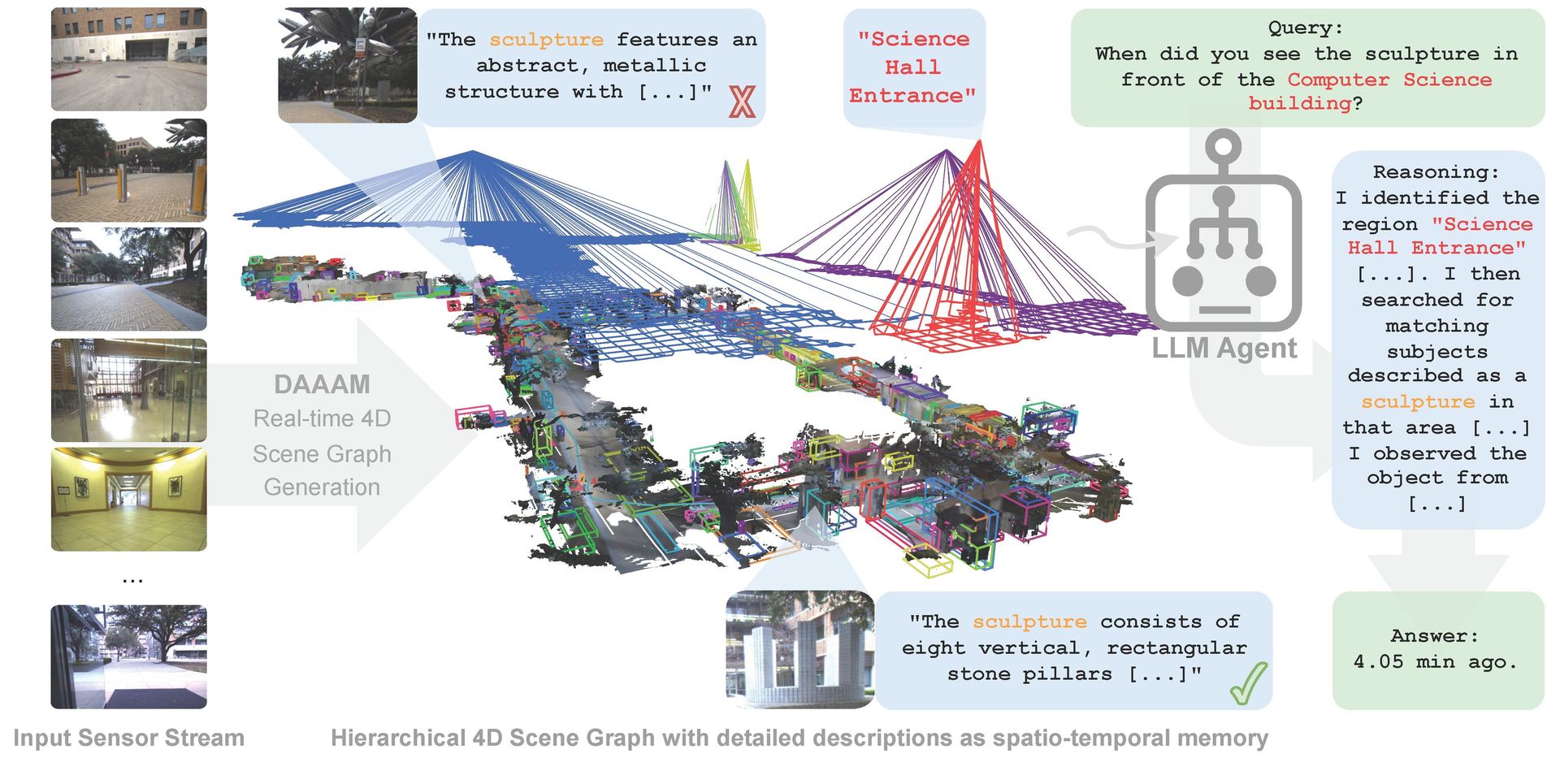

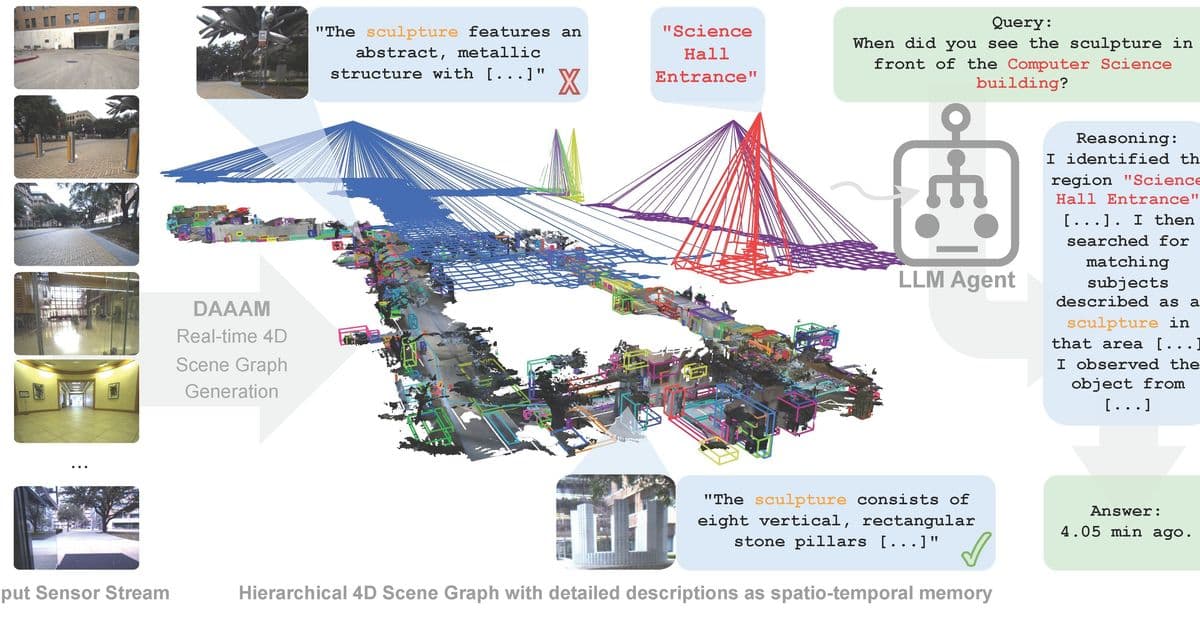

Enter Describe Anything, Anywhere, at Any Moment (DAAAM), a framework designed to resolve this tension. DAAAM introduces an optimization-based frontend that generates descriptions with localized captioning models such as the Describe Anything Model (DAM), and it speeds up inference substantially by processing segments in batches. According to the researchers, this makes semantic analysis about 10x faster than conventional approaches while preserving rich open-vocabulary descriptions.
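The article does not describe DAAAM's batching interface, so here is a minimal Python sketch of the general idea under stated assumptions. `SegmentCrop`, `describe_all`, and the `describe_batch` callable are hypothetical names standing in for a localized captioner like DAM. The sketch groups segment crops so the captioner runs once per batch rather than once per segment, which is where batching typically saves time.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterator, List, Sequence, Tuple

@dataclass
class SegmentCrop:
    """Hypothetical: one tracked segment as seen in one frame."""
    segment_id: int
    frame_id: int

def batched(items: Sequence[SegmentCrop], batch_size: int) -> Iterator[List[SegmentCrop]]:
    """Yield consecutive chunks of at most batch_size items."""
    if batch_size < 1:
        raise ValueError("batch_size must be positive")
    for start in range(0, len(items), batch_size):
        yield list(items[start:start + batch_size])

def describe_all(
    crops: Sequence[SegmentCrop],
    describe_batch: Callable[[List[SegmentCrop]], List[str]],
    batch_size: int = 16,
) -> Dict[Tuple[int, int], str]:
    """Caption every crop, calling the (hypothetical) captioner once per batch."""
    captions: Dict[Tuple[int, int], str] = {}
    for batch in batched(crops, batch_size):
        outputs = describe_batch(batch)
        if len(outputs) != len(batch):
            raise RuntimeError("captioner must return one caption per crop")
        for crop, text in zip(batch, outputs):
            captions[(crop.segment_id, crop.frame_id)] = text
    return captions

# Usage with a stub captioner that records how many crops each call receives.
calls: List[int] = []

def fake_describe_batch(batch: List[SegmentCrop]) -> List[str]:
    calls.append(len(batch))
    return [f"segment {c.segment_id}" for c in batch]

crops = [SegmentCrop(segment_id=i, frame_id=0) for i in range(40)]
result = describe_all(crops, fake_describe_batch, batch_size=16)
print(len(result), calls)  # 40 [16, 16, 8]
```

With 40 crops and a batch size of 16, the stub is called three times instead of forty. The actual speed-up on a GPU depends on the model and hardware.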

At DAAAM's core lies a hierarchical 4D scene graph—a spatio-temporal memory structure that maintains geometric and semantic consistency across time and space. As explained in the research paper, the system processes RGB-D video streams by:

- Segmenting scenes into tracked fragments

- Building metric-semantic maps

- Selecting the most informative frames through an optimization step

- Batch-processing segments through DAM for description generation

- Constructing clustered scene graphs with semantic region grouping
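The list does not spell out the frame-selection objective. One plausible reading, offered only as an illustrative assumption, treats it as a coverage problem: pick a small set of frames so that every tracked fragment is seen at least once, which limits how many inputs the captioner must process. The sketch below substitutes the classic greedy set-cover heuristic. `select_frames` is a hypothetical name, and DAAAM's real objective may also weigh view quality, occlusion, or other terms that this sketch ignores.

```python
from typing import Dict, List, Set

def select_frames(visibility: Dict[int, Set[int]]) -> List[int]:
    """Greedy set cover over frames (illustrative stand-in, not DAAAM's method).

    visibility maps a frame id to the set of tracked segment ids visible in it.
    Returns frame ids chosen so every segment appears in at least one chosen frame.
    """
    uncovered: Set[int] = set().union(*visibility.values())
    chosen: List[int] = []
    while uncovered:
        # max() keeps the first maximal element, so ties go to the lowest frame id.
        best = max(sorted(visibility), key=lambda f: len(visibility[f] & uncovered))
        chosen.append(best)
        uncovered -= visibility[best]
    return chosen

visibility = {0: {1, 2, 3}, 1: {3, 4}, 2: {4, 5, 6}, 3: {1, 6}}
print(select_frames(visibility))  # [0, 2]
```

Greedy set cover is a standard choice because it is simple and its solution size stays within a logarithmic factor of the optimum.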

"This architecture allows robots to build persistent environmental understanding that evolves over time," the researchers note. The 4D scene graph serves as a queryable memory backbone, enabling complex spatio-temporal reasoning previously impossible at real-time speeds.
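To make the idea of a queryable spatio-temporal memory concrete, here is a minimal Python sketch of a two-level graph. Objects keep a timestamped history of observations, and each observation carries the label of its enclosing region. All class and method names are hypothetical, and the structure is far simpler than the paper's hierarchical 4D scene graph. It shows only how storing history lets the memory answer "where was everything at time t?".

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

Vec3 = Tuple[float, float, float]

@dataclass
class Observation:
    t: float        # timestamp in seconds
    position: Vec3  # object centroid in the map frame
    caption: str    # description generated for this observation
    region: str     # label of the enclosing region (upper layer of the hierarchy)

@dataclass
class ObjectNode:
    object_id: int
    history: List[Observation] = field(default_factory=list)

    def state_at(self, t: float) -> Optional[Observation]:
        """Most recent observation at or before time t, or None if not yet seen."""
        past = [o for o in self.history if o.t <= t]
        return max(past, key=lambda o: o.t) if past else None

@dataclass
class SceneGraph4D:
    objects: Dict[int, ObjectNode] = field(default_factory=dict)

    def observe(self, object_id: int, t: float, position: Vec3, caption: str, region: str) -> None:
        """Append a timestamped observation, creating the object node on first sight."""
        node = self.objects.setdefault(object_id, ObjectNode(object_id))
        node.history.append(Observation(t, position, caption, region))

    def objects_in_region(self, region: str, t: float) -> List[int]:
        """Ids of objects whose latest known state at time t lies in the given region."""
        hits = []
        for object_id, node in sorted(self.objects.items()):
            state = node.state_at(t)
            if state is not None and state.region == region:
                hits.append(object_id)
        return hits

g = SceneGraph4D()
g.observe(1, 0.0, (1.0, 0.0, 0.0), "wooden chair", "kitchen")
g.observe(2, 0.0, (2.0, 1.0, 0.0), "red mug", "kitchen")
g.observe(1, 60.0, (8.0, 3.0, 0.0), "wooden chair", "living room")
print(g.objects_in_region("kitchen", 30.0))  # [1, 2]
print(g.objects_in_region("kitchen", 90.0))  # [2]
```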

Validation across three challenging benchmarks demonstrates DAAAM's capabilities:

- 53.6% improvement in question-answering accuracy on OC-NaVQA

- 21.9% reduction in positional errors

- 27.8% boost in sequential task grounding accuracy on SG3D

These gains stem from DAAAM's ability to ground language descriptions in precise geometric contexts while maintaining temporal coherence across long sequences. The framework's open-source release promises immediate applications in autonomous navigation systems, where understanding "the chair that was moved 5 minutes ago" requires both historical context and spatial precision.
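A query like "the chair that was moved 5 minutes ago" needs both an object's history and its geometry. The following self-contained Python sketch assumes a flat list of timestamped, labeled observations. The `Obs` tuple, the `moved_objects` function, and the 0.5 m threshold are hypothetical and are not DAAAM's query interface. It flags a move whenever consecutive observations of the same object differ by more than a distance threshold and the later observation falls inside a recent time window.

```python
import math
from typing import Dict, List, NamedTuple, Tuple

class Obs(NamedTuple):
    object_id: int
    label: str
    t: float                           # timestamp in seconds
    position: Tuple[float, float, float]

def moved_objects(observations: List[Obs], label: str, now: float,
                  window_s: float, min_shift_m: float = 0.5) -> List[Tuple[int, float]]:
    """Return (object_id, time_of_move) for objects with the given label whose
    position changed by more than min_shift_m between consecutive observations,
    where the later observation lies in [now - window_s, now]."""
    by_object: Dict[int, List[Obs]] = {}
    for o in observations:
        if o.label == label:
            by_object.setdefault(o.object_id, []).append(o)
    moves = []
    for object_id, obs in sorted(by_object.items()):
        obs.sort(key=lambda o: o.t)
        for prev, cur in zip(obs, obs[1:]):
            in_window = now - window_s <= cur.t <= now
            if in_window and math.dist(prev.position, cur.position) > min_shift_m:
                moves.append((object_id, cur.t))
    return moves

obs = [
    Obs(7, "chair", 0.0, (1.0, 0.0, 0.0)),
    Obs(7, "chair", 400.0, (1.1, 0.0, 0.0)),  # small jitter, not a move
    Obs(7, "chair", 700.0, (4.0, 2.0, 0.0)),  # moved, 300 s before "now"
    Obs(9, "chair", 100.0, (5.0, 5.0, 0.0)),
    Obs(9, "chair", 900.0, (5.0, 5.0, 0.0)),  # seen again, same place
    Obs(3, "table", 650.0, (0.0, 0.0, 0.0)),
]
print(moved_objects(obs, "chair", now=1000.0, window_s=600.0))  # [(7, 700.0)]
```

The distance threshold filters out small pose jitter, so an object is reported as moved only when its position changes meaningfully.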

As robotics and AR systems operate in increasingly complex environments, DAAAM marks a shift from episodic perception toward continuous environmental understanding. Its scene graph architecture answers complex queries today and lays the foundation for AI agents that build persistent world models through lived experience.
