Google's Gemini now offers Personal Intelligence – an opt-in beta feature that analyzes user data from Gmail, Photos, Drive and other Google services to deliver hyper-personalized responses.

Google has begun rolling out its "Personal Intelligence" beta for Gemini subscribers, marking a significant evolution in how AI assistants interact with user data. This experimental feature enables Gemini to analyze content across your Google ecosystem—including Gmail conversations, Calendar events, Drive documents, Photos albums, YouTube history, and Maps activity—to generate responses tailored to your personal context.

How Personal Intelligence Works

The system goes beyond simple chat memory by actively cross-referencing multiple data sources. For example, when you ask about tire specifications for a minivan, Gemini might:

- Pull vehicle details from past Gmail receipts

- Reference road trip photos in Google Photos

- Suggest tire types based on weather conditions from Maps data

- Provide pricing comparisons using Shopping history

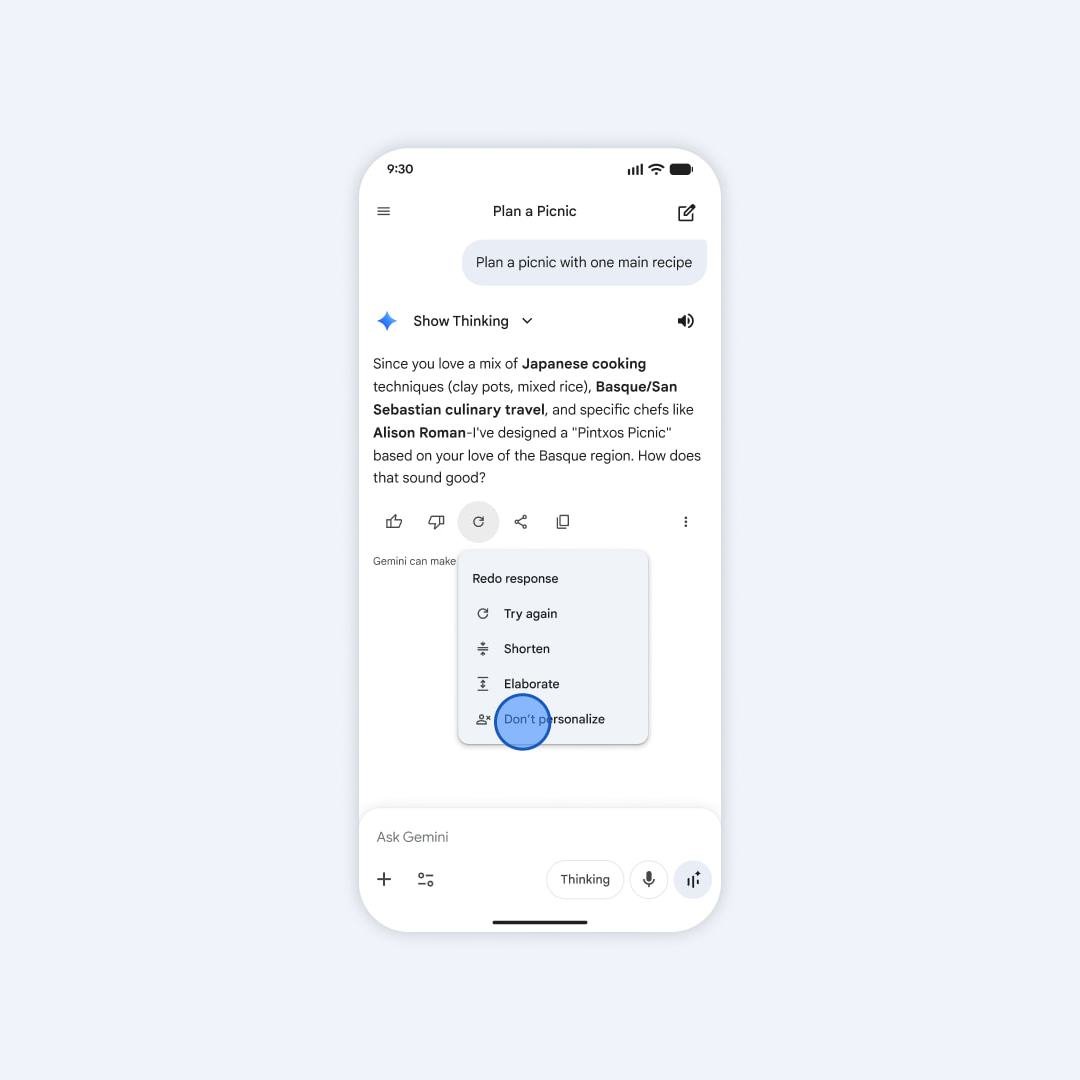

Unlike standard chatbots that retrieve generic information, Personal Intelligence constructs responses using your specific digital footprint. While it generates an answer, Gemini displays an "Answer now" button in place of "Skip," shows its reasoning process, and cites sources such as "From your June 2025 email receipt" or "Based on photos from your Utah trip."
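To make the cross-referencing idea concrete, here is a minimal sketch of gathering snippets from several connected sources while keeping a citation for each one. It is purely illustrative and not Google's implementation: the `Snippet` type, the `gather_context` and `format_answer_context` functions, the sample data, and the naive keyword matching are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Snippet:
    source: str    # hypothetical connected app name, e.g. "Gmail"
    citation: str  # human-readable provenance shown alongside the answer
    text: str      # content that might be relevant to a query


def gather_context(query: str, snippets: list[Snippet]) -> list[Snippet]:
    """Return snippets that share at least one word with the query.

    Naive keyword overlap stands in for whatever retrieval a real system uses.
    """
    query_words = set(query.lower().split())
    return [s for s in snippets if query_words & set(s.text.lower().split())]


def format_answer_context(matches: list[Snippet]) -> str:
    """Render each matched snippet with its citation, one per line."""
    return "\n".join(f"{s.text} [{s.citation}]" for s in matches)


# Made-up sample data, loosely following the minivan example in the text.
SAMPLE = [
    Snippet("Gmail", "From your June 2025 email receipt",
            "minivan tire rotation invoice"),
    Snippet("Photos", "Based on photos from your Utah trip",
            "minivan parked at a snowy trailhead"),
    Snippet("Calendar", "From your calendar", "dentist appointment"),
]

matches = gather_context("tire specifications for my minivan", SAMPLE)
print(format_answer_context(matches))
```

The point of the design is that every piece of context carries its provenance from retrieval through to the final answer, which is what allows a response to say where a detail came from.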

Privacy Architecture

Google emphasizes privacy safeguards:

- Opt-in requirement: Disabled by default in Gemini settings

- Granular controls: Users select specific apps to connect (Gmail, Photos, etc.)

- Training isolation: The model trains only on prompts and responses, not on the connected source data

- Data compartmentalization: Your license plate photos or email contents aren't used for model training

As Google explains: "We don't train our systems to learn your license plate number; we train them to understand that when you ask for one, we can locate it." Sensitive data remains within Google's existing security infrastructure without external transfers.
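For developers thinking about a similar pattern in their own apps, the opt-in and per-app controls listed above can be sketched as a small settings object. This is a hypothetical illustration, not Gemini's actual settings model: the `PersonalizationSettings` class and its `connect` and `can_read` methods are names invented for this example.

```python
from dataclasses import dataclass, field


@dataclass
class PersonalizationSettings:
    # Opt-in: personalization stays off until the user turns it on.
    enabled: bool = False
    # Granular control: only explicitly connected apps may be read.
    connected_apps: set[str] = field(default_factory=set)

    def connect(self, app: str) -> None:
        """Record that the user has connected a specific app."""
        self.connected_apps.add(app)

    def can_read(self, app: str) -> bool:
        """Allow access only if the feature is on AND the app is connected."""
        return self.enabled and app in self.connected_apps


settings = PersonalizationSettings()
settings.connect("Gmail")
before_opt_in = settings.can_read("Gmail")    # feature still disabled

settings.enabled = True
after_opt_in = settings.can_read("Gmail")     # enabled and connected
photos_allowed = settings.can_read("Photos")  # never connected

print(before_opt_in, after_opt_in, photos_allowed)
```

Requiring both conditions in `can_read` means that connecting an app does nothing on its own, and turning the feature on exposes nothing the user has not individually selected.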

Practical Applications

The feature shines in contextual scenarios:

- Planning trips using past travel patterns from Photos/Gmail

- Discovering niche restaurants matching your culinary history

- Getting product recommendations based on shopping receipts

- Getting career suggestions based on professional correspondence

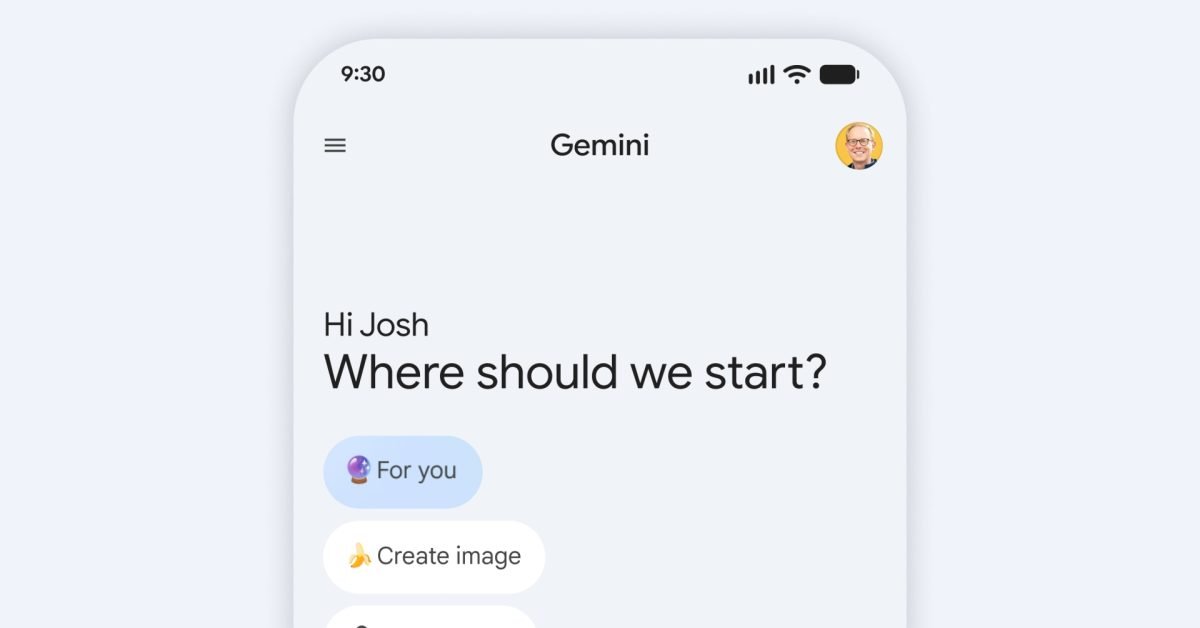

New "For you" prompt chips suggest personalized queries like:

- "Recommend hiking boots I might like based on my REI purchases"

- "Suggest board games for our family using last vacation's photos"

Developer Implications

This advancement signals important shifts for developers:

- Data permission expectations: Users may anticipate similar personalization in third-party apps

- Contextual API design: Google's approach demonstrates multi-source data synthesis techniques

- Privacy patterns: The combination of opt-in and compartmentalization sets a precedent for responsible AI implementation

- Integration opportunities: Developers could leverage Gemini APIs for personalized features

Beta Limitations

Google acknowledges current challenges:

- Over-personalization: May connect unrelated data (e.g., assuming golf enthusiasm from event photos)

- Temporal blind spots: Struggles with recent life changes like job transitions

- Sensitivity filters: Avoids health/financial assumptions unless explicitly asked

Users can correct inaccuracies with direct feedback ("I don't like golf") or regenerate responses without personalization.

Availability

The beta launches today for Gemini Pro/Ultra subscribers with personal accounts in the US. Enable via:

- Gemini Settings > Personal Intelligence

- Select Connected Apps

- Activate desired services

It works across Android, iOS, and web using Gemini 3 models. Google plans expansion to free tiers, more countries, and Search AI Mode soon.

This represents Google's most ambitious step toward its vision of a "proactive assistant," transforming passive data into active intelligence—while navigating the tightrope between utility and privacy with notable transparency.
