GitHub Security Lab has developed an AI-powered system using their Taskflow Agent framework to automate vulnerability triage, reducing false positives in security alerts by leveraging LLMs for pattern recognition tasks that traditionally required human intervention.

GitHub Security Lab has unveiled a new approach to vulnerability management that combines traditional code analysis with AI-powered automation. Their recently open-sourced Taskflow Agent framework now enables automated triage of security alerts by breaking down complex analysis into discrete tasks managed by large language models.

Why This Matters to Developers

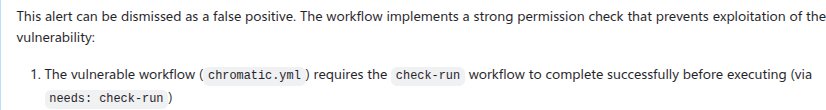

Security alert fatigue is a real problem. Static analyzers like CodeQL generate valuable findings but often flood teams with false positives that require manual review. GitHub's approach targets exactly these repetitive triage tasks, where human judgment excels but conventional tools struggle, such as recognizing contextual security controls in GitHub Actions workflows or identifying custom sanitization patterns in JavaScript code.

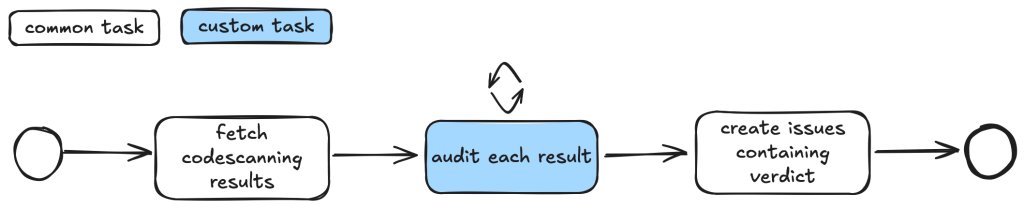

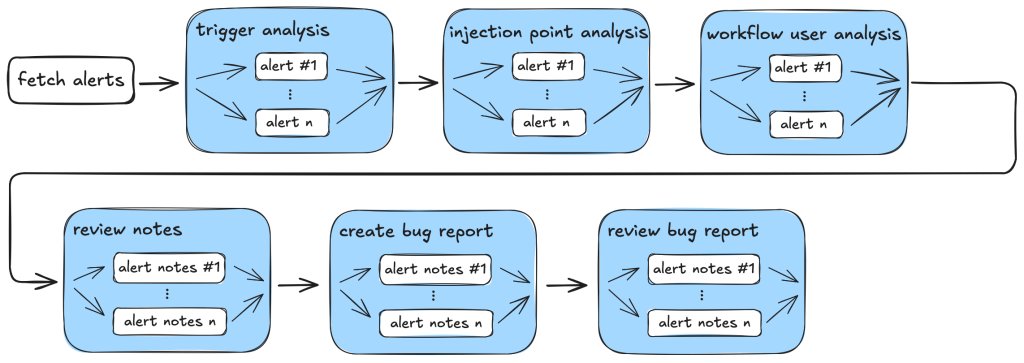

The system works through specialized "taskflows" - YAML-defined sequences where LLMs (primarily Claude Sonnet 3.5) perform specific audit steps with constrained toolsets. Each task focuses on a discrete element like checking workflow triggers, permission contexts, or input validation patterns. Results from each step are stored in a database, allowing subsequent tasks to build on previous findings without recomputation.
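
To make the persistence idea concrete, here is a minimal Python sketch of a task sequence that stores each step's output in SQLite and reuses it on a rerun. It is not the Taskflow Agent's actual schema or API: the table layout, the `run_taskflow` helper, and the two stand-in tasks (plain functions where a real taskflow would call an LLM) are all assumptions made for illustration.

```python
import json
import sqlite3
from typing import Callable

# Hypothetical task signature: receives earlier results, returns a JSON-serializable dict.
Task = Callable[[dict], dict]


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open the database and create the results table if it is missing."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS results ("
        "alert_id TEXT, task TEXT, output TEXT, "
        "PRIMARY KEY (alert_id, task))"
    )
    return conn


def run_taskflow(conn: sqlite3.Connection, alert_id: str,
                 tasks: list[tuple[str, Task]]) -> dict:
    """Run tasks in order, reusing any result already stored for this alert."""
    context: dict = {}
    for name, task in tasks:
        row = conn.execute(
            "SELECT output FROM results WHERE alert_id = ? AND task = ?",
            (alert_id, name),
        ).fetchone()
        if row is None:
            output = task(context)  # in a real taskflow this would be an LLM step
            conn.execute(
                "INSERT INTO results (alert_id, task, output) VALUES (?, ?, ?)",
                (alert_id, name, json.dumps(output)),
            )
            conn.commit()
        else:
            output = json.loads(row[0])  # cached: no recomputation
        context[name] = output
    return context


calls: list[str] = []  # records which stand-in tasks actually ran


def check_triggers(context: dict) -> dict:
    calls.append("check_triggers")
    return {"triggers": ["pull_request_target"]}


def check_permissions(context: dict) -> dict:
    calls.append("check_permissions")
    triggers = context["check_triggers"]["triggers"]
    return {"needs_review": "pull_request_target" in triggers}


TASKS = [("check_triggers", check_triggers), ("check_permissions", check_permissions)]

conn = open_store()
first = run_taskflow(conn, "alert-1", TASKS)
second = run_taskflow(conn, "alert-1", TASKS)  # served entirely from the database
```

Keying results by alert and task name means a failed or revised later step can be rerun without repeating the earlier, already stored steps.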

Proven Results

Since August, this system has:

- Triaged thousands of CodeQL alerts

- Identified ~30 real vulnerabilities in open-source projects

- Reduced false positives by recognizing patterns that are hard to encode in traditional SAST rules

- Generated actionable bug reports with code references

Notably, the system doesn't perform dynamic validation or exploit creation - it focuses on providing auditors with enriched context for faster decision-making.

Taskflow Architecture

The taskflows are organized into three stages:

- Information Gathering: LLMs collect contextual data (permissions, triggers, call chains) with strict instructions to cite code locations

- Audit Stage: Dedicated checks for common false positive patterns using both LLMs and traditional tools

- Report Generation: Structured bug reports become GitHub issues for human review
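
The three stages above can be sketched roughly as follows. This is an illustrative Python outline under stated assumptions, not the Security Lab's implementation: the `Note` and `Alert` types, the rule label, the file paths, and the single false-positive check are all hypothetical. It shows one way to enforce the citation requirement (uncited notes are dropped) before a report is rendered.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Note:
    claim: str
    locations: list[str] = field(default_factory=list)  # "path:line" citations


@dataclass
class Alert:
    rule: str
    location: str
    notes: list[Note] = field(default_factory=list)


def gather(alert: Alert, model_notes: list[Note]) -> Alert:
    """Stage 1: keep only notes that cite at least one code location."""
    alert.notes = [note for note in model_notes if note.locations]
    return alert


def audit(alert: Alert, checks: dict[str, Callable[[Alert], bool]]) -> list[str]:
    """Stage 2: return the names of the false-positive checks that matched."""
    return [name for name, check in checks.items() if check(alert)]


def report(alert: Alert, matched: list[str]) -> Optional[str]:
    """Stage 3: render an issue body, or None when a check dismissed the alert."""
    if matched:
        return None
    lines = [f"## {alert.rule} at {alert.location}", ""]
    for note in alert.notes:
        lines.append(f"- {note.claim} ({', '.join(note.locations)})")
    return "\n".join(lines)


# Hypothetical alert and notes; a real run would get the notes from an LLM step.
alert = gather(
    Alert("code-injection", ".github/workflows/ci.yml:12"),
    [
        Note("Runs on pull_request_target", [".github/workflows/ci.yml:3"]),
        Note("Token is probably read-only"),  # no citation, so it is dropped
    ],
)
checks = {
    "no privileged trigger": lambda a: not any(
        "pull_request_target" in note.claim for note in a.notes
    ),
}
body = report(alert, audit(alert, checks))
```

Here `body` holds Markdown that a separate step could file as an issue for human review; when any check matches, `report` returns `None` and nothing is filed.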

Community Impact

Both the Taskflow Agent framework and example taskflows are open source. The team actively encourages contributions and provides development guidelines based on their experience:

- Database State Management: Persisting results between tasks enables efficient reruns

- Task Decomposition: Smaller, focused tasks reduce LLM errors

- Hybrid Approach: Offload deterministic checks to traditional tools via MCP servers

- Reusable Components: Shared prompts and tasks maintain consistency across workflows
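
As an illustration of the hybrid guideline, the sketch below answers a question deterministically when it can and only consults a model when it cannot. Everything here is an assumption for demonstration: the workflow is passed as an already parsed dictionary, `ask_model` is a stand-in callable rather than a real MCP or LLM client, and the trigger set is illustrative, not exhaustive. Dismissing on triggers alone is also a simplification.

```python
from typing import Callable

# Illustrative, non-exhaustive set of triggers that run in the base repository's context.
PRIVILEGED_TRIGGERS = {"pull_request_target", "workflow_run", "issue_comment"}


def triggers_of(workflow: dict) -> set[str]:
    """Return trigger names; `on` may be a string, a list, or a mapping."""
    on = workflow.get("on", [])
    if isinstance(on, str):
        return {on}
    return set(on)  # a list yields its items, a mapping yields its keys


def triage_trigger_context(workflow: dict, ask_model: Callable[[str], str]) -> str:
    """Decide deterministically when possible; otherwise ask a narrowed question."""
    risky = sorted(triggers_of(workflow) & PRIVILEGED_TRIGGERS)
    if not risky:
        return "dismissed: no privileged trigger (deterministic check)"
    question = (
        f"The workflow runs on {', '.join(risky)}. "
        "Can untrusted input reach the flagged step? Cite path:line for each claim."
    )
    return ask_model(question)


prompts: list[str] = []  # records what the stand-in model was asked


def fake_model(prompt: str) -> str:
    prompts.append(prompt)
    return "needs review"


push_only = {"on": "push"}
pr_target = {"on": {"pull_request_target": {"types": ["opened"]}, "push": {}}}

verdict_a = triage_trigger_context(push_only, fake_model)  # model is never called
verdict_b = triage_trigger_context(pr_target, fake_model)  # model sees a narrowed question
```

One practical caveat when feeding real workflow files into a check like this: YAML 1.1 parsers such as PyYAML may load an unquoted `on` key as the boolean `True`, so the key may need normalizing after parsing.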

Real-World Applications

The Security Lab's write-up details two practical implementations:

- GitHub Actions Alerts: Triaging code injection and untrusted checkout vulnerabilities by analyzing workflow triggers, permissions, and caller contexts

- JavaScript/TypeScript XSS: Identifying false positives from custom sanitizers or unreachable execution paths
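
For the GitHub Actions case, the permission context is one piece of information that can be collected without a model. The following Python sketch summarizes, per job, whether the workflow file grants the token any write scope. It assumes the workflow has already been parsed into a dictionary; the helper names are hypothetical and this is not taken from the published taskflows.

```python
from typing import Optional, Union

Permissions = Union[str, dict, None]


def token_can_write(permissions: Permissions) -> Optional[bool]:
    """True or False when the file decides it; None when repository defaults apply."""
    if permissions is None:
        return None  # no `permissions` key: depends on repository or organization settings
    if permissions == "write-all":
        return True
    if permissions == "read-all":
        return False
    if isinstance(permissions, dict):
        # An empty mapping grants nothing, so any() correctly yields False.
        return any(level == "write" for level in permissions.values())
    return None  # unrecognized value: leave the decision to a reviewer


def write_access_by_job(workflow: dict) -> dict[str, Optional[bool]]:
    """A job-level `permissions` block replaces the workflow-level one for that job."""
    default = workflow.get("permissions")
    return {
        name: token_can_write(job.get("permissions", default))
        for name, job in workflow.get("jobs", {}).items()
    }


workflow = {
    "permissions": "read-all",
    "jobs": {
        "build": {"steps": []},
        "release": {"permissions": {"contents": "write"}, "steps": []},
    },
}
access = write_access_by_job(workflow)
```

A `None` result is deliberately kept distinct from `False`: when the file is silent, the effective permissions cannot be determined from the workflow alone, which is the kind of case worth passing to a reviewer or a later task.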

Developers can extend this approach to any repetitive security analysis task matching these criteria:

- Involves recognizable patterns that resist formal encoding

- Requires semantic understanding of code context

- Generates many similar review tasks

As Man Yue Mo and Peter Stöckli note: "The taskflows may create GitHub Issues. Please be considerate and seek the repo owner’s consent before running them on somebody else’s repo."

Future Directions

The Security Lab hints at ongoing work in AI-assisted code auditing and vulnerability hunting. For teams drowning in security alerts, this represents a practical path toward more intelligent tooling that augments rather than replaces human expertise.
