OpenAI's GPT-5.2-Codex is now generally available through Azure OpenAI in Microsoft Foundry Models, offering enterprise developers a reasoning engine for complex codebases with built-in security awareness and multimodal capabilities.

Enterprise development teams face a constant tension between velocity and security. Legacy systems accumulate technical debt, dependency chains grow increasingly complex, and security reviews often become bottlenecks that delay releases. The challenge isn't just writing code—it's maintaining quality and safety while moving fast enough to meet business demands.

OpenAI's GPT-5.2-Codex, now generally available through Azure OpenAI in Microsoft Foundry Models, attempts to resolve this tension. Rather than functioning as simple autocomplete, it operates as a reasoning engine designed specifically for enterprise software engineering realities: large repositories, evolving requirements, and security constraints that cannot be compromised.

What Makes GPT-5.2-Codex Different

The model represents OpenAI's most advanced agentic coding system, bringing sustained reasoning and security-aware assistance directly into development workflows. What sets it apart is its ability to handle imperfect, incomplete inputs—legacy code with missing documentation, screenshots of UI designs, architecture diagrams—and work through multi-step changes while preserving context and intent.

Core Capabilities

Multimodal Reasoning Over Code and Artifacts

GPT-5.2-Codex doesn't just parse text. It can reason across source code, visual diagrams, UI mocks, and natural language requirements in a single request. When a developer provides an architecture diagram alongside legacy code, for example, the model can relate the visual design to the implementation, helping keep the code structure aligned with the design intent.

Extended Context Management

With a 128K token context window, large enough to hold many thousands of lines of source code at once (the exact figure depends on language and formatting), the model maintains continuity across substantial refactoring efforts. This is critical in enterprise scenarios where changes span multiple modules and developers need to keep track of requirements that evolved weeks into a project.
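
A rough way to reason about what fits in a 128K-token window is to budget by lines of code. The sketch below assumes an average of about 10 tokens per line and reserves 16,000 tokens of the same window for the model's reply. Both figures are illustrative assumptions: the tokens-per-line ratio varies widely by language and formatting, so a real budget should use counts from a tokenizer run on the team's own code. `SourceFile`, `estimate_tokens`, `fits_in_context`, and `max_lines` are hypothetical helpers.

```python
from dataclasses import dataclass

# Context window size stated in the article.
CONTEXT_WINDOW_TOKENS = 128_000

# Assumption: rough average tokens per line of source code.
# Real values depend on language, naming, and formatting.
ASSUMED_TOKENS_PER_LINE = 10

# Assumption: input and the model's reply share the same window.
ASSUMED_OUTPUT_RESERVE = 16_000


@dataclass
class SourceFile:
    path: str
    line_count: int


def estimate_tokens(files: list[SourceFile], tokens_per_line: int = ASSUMED_TOKENS_PER_LINE) -> int:
    """Rough token estimate for sending these files as context."""
    return sum(f.line_count for f in files) * tokens_per_line


def fits_in_context(
    files: list[SourceFile],
    reserved_output_tokens: int = ASSUMED_OUTPUT_RESERVE,
    window: int = CONTEXT_WINDOW_TOKENS,
    tokens_per_line: int = ASSUMED_TOKENS_PER_LINE,
) -> bool:
    """True if the estimated input plus the reserved output budget fits the window."""
    return estimate_tokens(files, tokens_per_line) + reserved_output_tokens <= window


def max_lines(
    reserved_output_tokens: int = ASSUMED_OUTPUT_RESERVE,
    window: int = CONTEXT_WINDOW_TOKENS,
    tokens_per_line: int = ASSUMED_TOKENS_PER_LINE,
) -> int:
    """Approximate number of code lines that fit alongside the reserved output budget."""
    return (window - reserved_output_tokens) // tokens_per_line
```

Passing a `tokens_per_line` value measured on an actual repository makes the estimate far more useful than the default.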

Language and Platform Coverage

The model supports over 50 programming languages, including Python, JavaScript/TypeScript, C#, Java, Go, and Rust. It functions as a drop-in replacement for existing Codex API calls, reducing adoption friction for teams already using AI-assisted development tools.

Enterprise Use Cases Where It Excels

Legacy Modernization with Guardrails

Migrating "untouchable" legacy systems requires preserving exact behavior while improving structure. GPT-5.2-Codex helps teams refactor critical systems safely by analyzing existing behavior, suggesting modern patterns, and minimizing regression risk. Instead of wholesale rewrites, it enables incremental improvements that maintain business continuity.
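
One common way to put guardrails around a legacy refactor, whether the new code is written by a developer or proposed by the model, is a characterization (golden-master) test: run the legacy and refactored implementations on the same inputs and require identical outputs. Here is a minimal sketch; `legacy_shipping_cost` and `refactored_shipping_cost` are hypothetical stand-ins for real code.

```python
import itertools


def legacy_shipping_cost(weight_kg, express):
    # Hypothetical legacy implementation with deeply nested branches.
    if express:
        if weight_kg <= 1:
            return 15
        else:
            if weight_kg <= 10:
                return 25
            else:
                return 25 + (weight_kg - 10) * 2
    else:
        if weight_kg <= 1:
            return 5
        elif weight_kg <= 10:
            return 10
        else:
            return 10 + (weight_kg - 10) * 1


def refactored_shipping_cost(weight_kg: int, express: bool) -> int:
    # Hypothetical modernized version: table-driven, intended to behave identically.
    base_small, base_medium, per_kg = (15, 25, 2) if express else (5, 10, 1)
    if weight_kg <= 1:
        return base_small
    if weight_kg <= 10:
        return base_medium
    return base_medium + (weight_kg - 10) * per_kg


def find_behavior_differences(inputs):
    """Return every input where the refactor disagrees with the legacy code."""
    return [
        (w, e)
        for w, e in inputs
        if legacy_shipping_cost(w, e) != refactored_shipping_cost(w, e)
    ]


# Cover the boundaries (0, 1, 10, 11) plus a spread of ordinary values.
CASES = list(itertools.product(range(0, 50), [True, False]))
```

An empty result means the two versions agree on every sampled input. In practice, the inputs would come from captured production traffic or existing test fixtures rather than a synthetic range.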

Large-Scale Refactors Without Intent Loss

Cross-module updates often introduce inconsistencies when developers lose track of original intent. The model helps execute systematic refactors—like updating authentication patterns across an entire microservices architecture—while maintaining consistency and avoiding the typical "one step forward, two steps back" churn.

AI-Assisted Code Review That Raises Standards

Beyond catching syntax errors, GPT-5.2-Codex identifies risky patterns, proposes safer alternatives, and improves consistency across large teams. For organizations with hundreds of developers working on long-lived codebases, this provides a first line of defense that catches issues before human reviewers spend time on them.
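
As a sketch of how such a review step might be wired up, the function below sends a unified diff to a model deployment through the Responses API of the `openai` Python SDK and returns the review text. The client is passed in, so the same code can take an `AzureOpenAI` client in production or a stub in tests. The review instructions, the `MAX_DIFF_CHARS` limit, and any deployment name such as `gpt-5.2-codex` are assumptions for illustration.

```python
from typing import Any

# Assumed instructions; tune these to the team's own review standards.
REVIEW_INSTRUCTIONS = (
    "You are a code reviewer. For the unified diff provided, list risky patterns, "
    "likely bugs, and security issues. For each finding, give the file, the problem, "
    "and a safer alternative. Reply 'No findings.' if nothing needs attention."
)

# Assumed guard so very large diffs don't crowd out the rest of the request.
MAX_DIFF_CHARS = 200_000


def build_review_input(diff: str) -> str:
    """Wrap a unified diff in a prompt, truncating very large diffs."""
    if len(diff) > MAX_DIFF_CHARS:
        diff = diff[:MAX_DIFF_CHARS] + "\n[diff truncated]"
    return f"Review this diff:\n\n{diff}"


def request_review(client: Any, deployment: str, diff: str) -> str:
    """Ask the model for a review; `client` is an openai.AzureOpenAI (or compatible) client."""
    response = client.responses.create(
        model=deployment,  # On Azure OpenAI, this is the deployment name.
        instructions=REVIEW_INSTRUCTIONS,
        input=build_review_input(diff),
    )
    return response.output_text
```

Because the function only returns text, the surrounding pipeline decides what to do with it, for example posting it as a pull request comment for a human reviewer to confirm.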

Defensive Security Workflows

Security teams face a constant stream of vulnerability reports, dependency issues, and path analysis requests. GPT-5.2-Codex accelerates triage by analyzing unfamiliar code paths, understanding dependency relationships, and suggesting remediation strategies. Speed matters in security response, but precision matters more; the model aims to balance both.
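
Much of this dependency analysis comes down to walking a dependency graph. The sketch below runs a plain breadth-first search over a hypothetical package graph (not a real manifest format). It finds every package that transitively depends on a vulnerable one, along with one shortest dependency chain for each, which is the kind of information a triage write-up needs.

```python
from collections import deque

# Hypothetical graph: package -> packages it depends on directly.
DEPENDS_ON: dict[str, list[str]] = {
    "web-app": ["auth-lib", "http-client"],
    "auth-lib": ["crypto-utils", "json-parser"],
    "http-client": ["json-parser"],
    "billing-svc": ["http-client"],
    "crypto-utils": [],
    "json-parser": [],
}


def reverse_edges(graph: dict[str, list[str]]) -> dict[str, list[str]]:
    """Invert the graph so each package maps to its direct dependents."""
    dependents: dict[str, list[str]] = {name: [] for name in graph}
    for pkg, deps in graph.items():
        for dep in deps:
            dependents.setdefault(dep, []).append(pkg)
    return dependents


def affected_by(vulnerable: str, graph: dict[str, list[str]]) -> dict[str, list[str]]:
    """Map each package that transitively depends on `vulnerable` to one shortest chain."""
    dependents = reverse_edges(graph)
    chains = {vulnerable: [vulnerable]}
    queue = deque([vulnerable])
    while queue:
        current = queue.popleft()
        for parent in dependents.get(current, []):
            if parent not in chains:  # First visit in BFS gives a shortest chain.
                chains[parent] = [parent] + chains[current]
                queue.append(parent)
    del chains[vulnerable]  # The vulnerable package itself is not "affected by" itself.
    return chains
```

For example, `affected_by("json-parser", DEPENDS_ON)["web-app"]` yields the chain `["web-app", "auth-lib", "json-parser"]`, showing how the vulnerability reaches the application.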

Sustained Multi-Step Development Sessions

Complex builds require planning, implementation, validation, and iteration. Maintaining context across multi-hour development sessions is cognitively demanding. GPT-5.2-Codex acts as a persistent reasoning partner, keeping track of decisions, trade-offs, and next steps as requirements evolve.
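
For API-based workflows, the Responses API in the `openai` Python SDK offers a `previous_response_id` parameter that links a request to an earlier response, so the service can carry the conversation forward without the client resending every prior turn. `CodingSession` below is a hypothetical wrapper around that parameter, not an SDK class; it assumes a client compatible with the `openai` SDK and a deployment name supplied by the caller.

```python
from typing import Any, Optional


class CodingSession:
    """Hypothetical helper that chains Responses API turns into one session."""

    def __init__(self, client: Any, deployment: str) -> None:
        self.client = client
        self.deployment = deployment
        self.last_response_id: Optional[str] = None

    def ask(self, message: str) -> str:
        kwargs: dict[str, Any] = {"model": self.deployment, "input": message}
        if self.last_response_id is not None:
            # Link this turn to the previous one so earlier context carries forward.
            kwargs["previous_response_id"] = self.last_response_id
        response = self.client.responses.create(**kwargs)
        self.last_response_id = response.id
        return response.output_text
```

A session might then run `ask("Plan the migration")`, `ask("Implement step 1")`, and so on, with each turn building on the previous one.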

Security-Aware by Design

AI adoption in enterprise environments faces one critical question: Can it be trusted in security-sensitive workflows? GPT-5.2-Codex advances the Codex lineage with meaningful improvements in this area.

General reasoning improvements in larger models tend to carry over to specialized domains, including defensive cybersecurity. With GPT-5.2-Codex, this shows up as:

- Improved analysis of unfamiliar code paths and dependencies – The model can trace through complex dependency chains to identify potential security implications of changes.

- Stronger secure coding assistance – It recognizes insecure patterns and suggests remediation aligned with industry standards.

- More dependable code review support – During vulnerability investigations and incident response, it provides consistent analysis that helps security teams scale their efforts.
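
As a concrete illustration of the kind of insecure pattern and remediation meant here (our own example, not output from the model), consider SQL built by string formatting versus a parameterized query, using Python's built-in `sqlite3` module. The table and data are made up.

```python
import sqlite3


def setup_db() -> sqlite3.Connection:
    """In-memory database with a small, made-up users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "admin"), ("bob", "viewer")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # Insecure: user input is spliced into the SQL text (SQL injection risk).
    return conn.execute(f"SELECT name, role FROM users WHERE name = '{name}'").fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # Remediation: a parameterized query passes input as data, never as SQL.
    return conn.execute("SELECT name, role FROM users WHERE name = ?", (name,)).fetchall()
```

With the input `x' OR '1'='1`, the unsafe version returns every row in the table, while the parameterized version treats the whole string as a name and returns nothing.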

Microsoft deploys these capabilities with platform-level controls that balance access, safeguards, and enterprise governance requirements.

Why Microsoft Foundry?

Powerful models require equally powerful infrastructure. Microsoft Foundry provides the enterprise foundation that makes GPT-5.2-Codex adoptable at scale:

Integrated Security and Compliance

Deploy within existing Azure security boundaries, identity systems, and compliance frameworks. No need to reinvent controls or create separate governance models for AI workloads.

Enterprise-Ready Orchestration

Build, evaluate, monitor, and scale AI-powered developer experiences using the same platform teams already rely on for production workloads. This includes observability, versioning, and rollback capabilities.

Unified Path from Experimentation to Production

Foundry bridges the gap between proof-of-concept and real deployment. Teams can validate value with small experiments, then scale to production without changing platforms, vendors, or operating assumptions.

Platform-Level Trust

For regulated or security-critical environments, Foundry and Azure provide assurances that extend beyond the model itself. This includes data residency controls, audit logging, and compliance certifications.

Pricing Model

Transparent pricing helps teams plan adoption and scale usage predictably:

| Model | Input price / 1M tokens | Cached input price / 1M tokens | Output price / 1M tokens |
|---|---|---|---|
| GPT-5.2-Codex | $1.75 | $0.175 | $14.00 |

Cached input is billed at one tenth of the standard input rate, which cuts costs in scenarios where the same context is reused across multiple queries, as is common in iterative development workflows.
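
To see how the three rates combine, here is a small cost estimator using the per-million-token prices from the table above. It assumes cached input tokens are billed at the cached rate and all remaining input tokens at the standard rate; `request_cost` is a hypothetical helper, and the token counts in the example are made up.

```python
from decimal import Decimal

# USD per 1M tokens for GPT-5.2-Codex, from the pricing table.
INPUT_PER_M = Decimal("1.75")
CACHED_INPUT_PER_M = Decimal("0.175")
OUTPUT_PER_M = Decimal("14.00")
ONE_MILLION = Decimal(1_000_000)


def request_cost(input_tokens: int, cached_tokens: int, output_tokens: int) -> Decimal:
    """Estimated USD cost; `cached_tokens` is the portion of `input_tokens` served from cache."""
    if not 0 <= cached_tokens <= input_tokens:
        raise ValueError("cached_tokens must be between 0 and input_tokens")
    uncached = input_tokens - cached_tokens
    return (
        uncached * INPUT_PER_M
        + cached_tokens * CACHED_INPUT_PER_M
        + output_tokens * OUTPUT_PER_M
    ) / ONE_MILLION
```

For a request with 100,000 input tokens (80,000 of them cached) and 5,000 output tokens, `request_cost(100_000, 80_000, 5_000)` gives $0.119. Without caching, the input portion alone would be $0.175 instead of $0.049, while the $0.07 of output stays the same. `Decimal` is used so the per-token arithmetic doesn't pick up floating-point rounding noise.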

Getting Started

Teams can adopt GPT-5.2-Codex incrementally based on their current workflows:

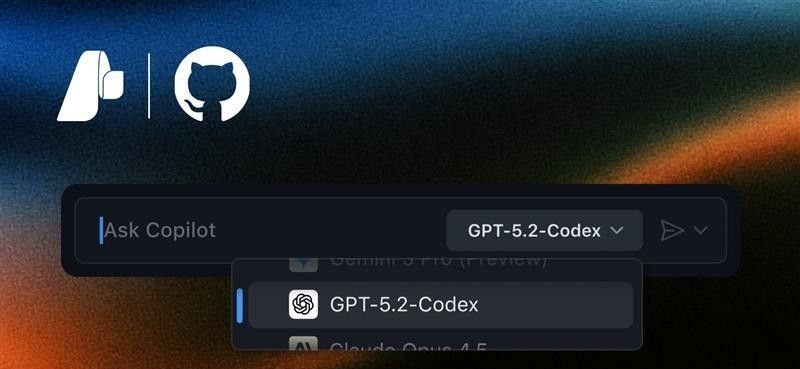

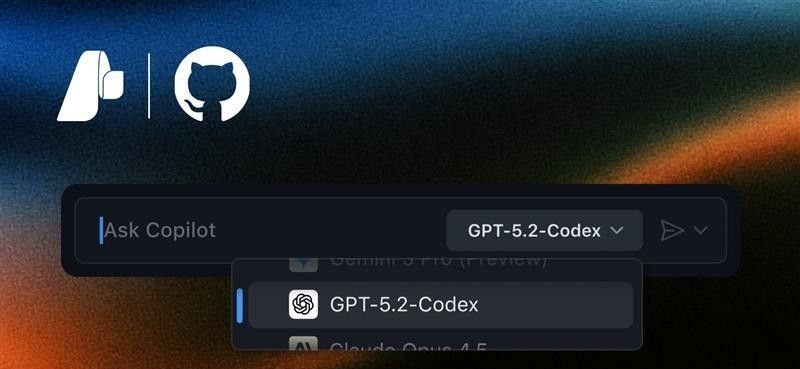

Start in GitHub Copilot

Try GPT-5.2-Codex for everyday coding tasks directly in the IDE. This provides immediate value for individual developers while building familiarity with the model's capabilities.

Scale with Azure OpenAI in Microsoft Foundry

Once value is proven, scale to larger workflows, including automated code review pipelines, legacy migration tooling, and security analysis systems.

Integrate with Existing Tooling

Because it uses standard APIs, GPT-5.2-Codex integrates with existing CI/CD pipelines, code review systems, and development workflows.
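
In a CI job, a common pattern is to keep the endpoint, key, and deployment name in pipeline secrets and build the client from environment variables. The sketch below does that with the `openai` Python SDK's `AzureOpenAI` client. The environment variable names are assumptions, and the API version is read from configuration rather than hard-coded because the versions that support a given model change over time.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional

# Assumed variable names; map them to the pipeline's secret store.
REQUIRED_VARS = (
    "AZURE_OPENAI_ENDPOINT",
    "AZURE_OPENAI_API_KEY",
    "AZURE_OPENAI_API_VERSION",
    "AZURE_OPENAI_DEPLOYMENT",
)


@dataclass(frozen=True)
class FoundryConfig:
    endpoint: str
    api_key: str
    api_version: str
    deployment: str


def load_config(env: Optional[Mapping[str, str]] = None) -> FoundryConfig:
    """Read settings from the environment, failing fast if any are missing."""
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    return FoundryConfig(
        endpoint=env["AZURE_OPENAI_ENDPOINT"],
        api_key=env["AZURE_OPENAI_API_KEY"],
        api_version=env["AZURE_OPENAI_API_VERSION"],
        deployment=env["AZURE_OPENAI_DEPLOYMENT"],
    )


def make_client(config: FoundryConfig):
    """Create an Azure OpenAI client; requires the `openai` package."""
    from openai import AzureOpenAI  # Imported lazily so config checks run without the SDK.

    return AzureOpenAI(
        azure_endpoint=config.endpoint,
        api_key=config.api_key,
        api_version=config.api_version,
    )
```

A pipeline step would then call `make_client(load_config())` and pass `config.deployment` as the `model` argument of each request. Failing fast on missing variables surfaces misconfigured secrets at the start of the job rather than partway through a review run.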

The Strategic Implication

GPT-5.2-Codex represents a shift from AI as code suggestion to AI as reasoning partner. For enterprise organizations, this means:

- Reduced cognitive load on senior developers who can delegate complex analysis while maintaining oversight

- Accelerated onboarding for new team members who get context-aware guidance rather than generic suggestions

- Improved security posture through consistent application of secure coding patterns across all contributions

- Faster time-to-market for features that require substantial refactoring or integration with legacy systems

The model becomes particularly valuable in organizations where:

- Codebases exceed hundreds of thousands of lines

- Multiple teams contribute to shared repositories

- Security and compliance requirements are stringent

- Technical debt requires systematic modernization

Availability

GPT-5.2-Codex is generally available starting today through Azure OpenAI in Microsoft Foundry Models. Teams can begin exploring capabilities immediately through GitHub Copilot integration and scale to production workflows using Microsoft's enterprise cloud infrastructure.

For organizations already invested in Azure ecosystems, this represents an opportunity to extend existing AI investments into the development lifecycle with minimal friction. For those evaluating cloud providers, it demonstrates how integrated AI capabilities can differentiate platform value beyond raw compute and storage.

The question for enterprise leaders is no longer whether AI will transform software development, but how to adopt it responsibly while maintaining the security, compliance, and quality standards their businesses require. GPT-5.2-Codex on Microsoft Foundry provides one answer to that question.

Updated January 14, 2026

Version 2.0

Categories: ARTIFICIAL INTELLIGENCE, AZURE OPENAI, MICROSOFT FOUNDRY

Posted by Naomi Moneypenny, Microsoft
