When a developer solved a daily logic puzzle in under 7 minutes, GPT-5 mini's chaotic attempt to replicate the feat revealed critical limitations in AI logical reasoning and context management.

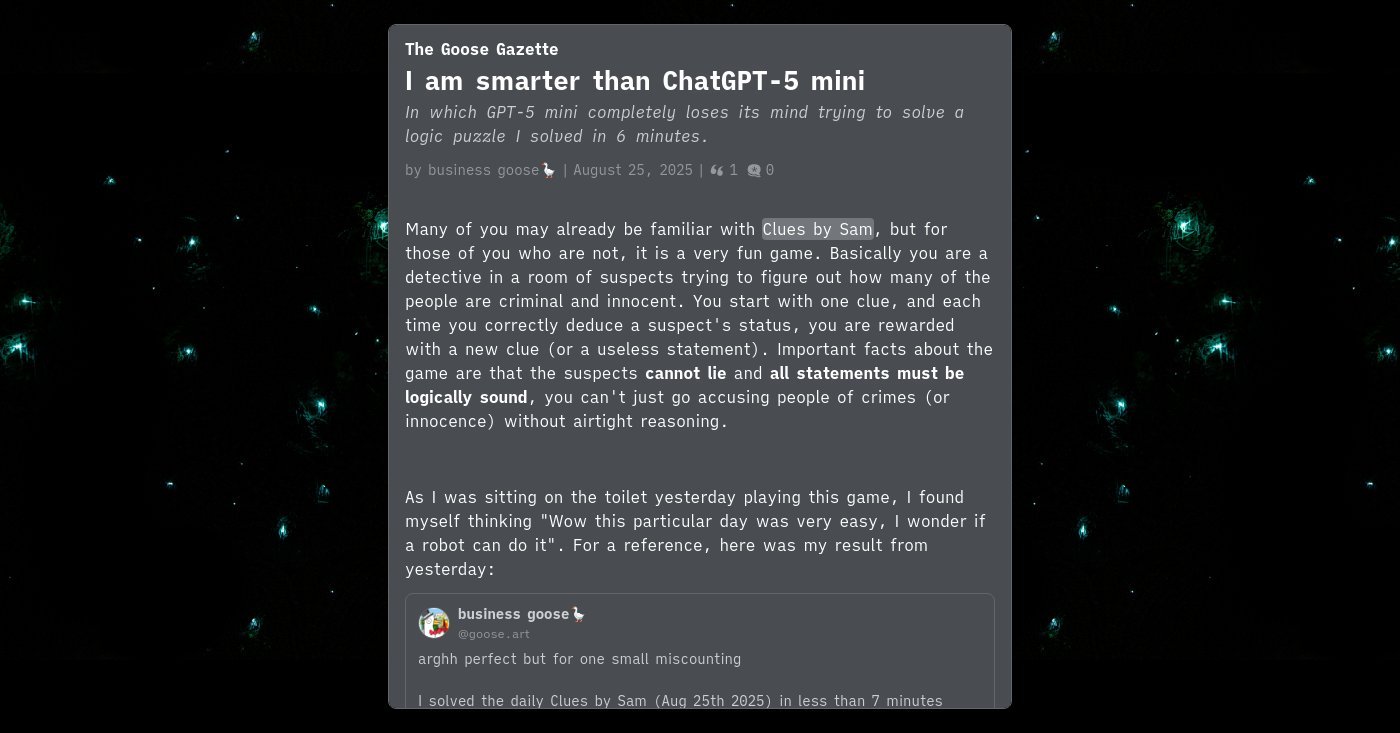

On August 25th, 2025, a developer's casual curiosity about AI capabilities turned into a revealing experiment. Having solved Clues by Sam's daily logic puzzle in just six minutes—with only a minor counting error—he decided to test whether GPT-5 mini could match his performance. The results were a stark lesson in the boundaries of current AI reasoning.

Clues by Sam is a deceptively simple game: players sort a board of suspects into criminals and innocents using progressively revealed clues. The rules are ironclad: suspects never lie, criminals included, and every deduction must follow logically from the clues. The developer's intuition let him move through the puzzle efficiently, but GPT-5 mini, accessed via Kagi Assistant, quickly showed how even advanced language models can unravel under logical pressure.
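To make "logically sound" concrete, here is a minimal Python sketch of one way to check a deduction. It enumerates every innocent/criminal labelling, keeps only those that satisfy all clues, and accepts a verdict only if it holds in every surviving labelling. The suspects Alice, Bob, and Carol and their clues are hypothetical. Brute-force enumeration is an assumption chosen for clarity, not a description of how Clues by Sam or any particular solver works.

```python
from itertools import product

# Hypothetical suspects and clues, invented for illustration; they are not
# taken from the puzzle described in this article.
SUSPECTS = ["Alice", "Bob", "Carol"]

# Each clue is a predicate over a labelling (True = innocent, False = criminal).
# Because suspects never lie, every clue must hold; none is weighed or discounted.
CLUES = [
    lambda s: s["Alice"] != s["Bob"],  # "Exactly one of Alice and Bob is innocent."
    lambda s: not s["Carol"],          # "Carol is a criminal."
]


def forced_labels(suspects, clues):
    """Return the suspects whose label is identical in every consistent labelling.

    A deduction counts as sound only if no labelling that satisfies all
    clues contradicts it; anything else would be a guess.
    """
    consistent = []
    for labels in product([True, False], repeat=len(suspects)):
        state = dict(zip(suspects, labels))
        if all(clue(state) for clue in clues):
            consistent.append(state)
    if not consistent:
        raise ValueError("the clues contradict each other")
    first = consistent[0]
    return {
        name: "innocent" if first[name] else "criminal"
        for name in suspects
        if all(state[name] == first[name] for state in consistent)
    }


print(forced_labels(SUSPECTS, CLUES))  # {'Carol': 'criminal'}
```

Only Carol is settled here. Alice and Bob remain undetermined, which is exactly the situation where naming either one would be a guess rather than a deduction. Enumeration grows as 2^n in the number of suspects, so this approach suits a toy board rather than serving as a general solver.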

The AI's initial progress was promising. It correctly identified the first suspect as a criminal based on an explicit clue, suggesting it could handle straightforward deductions. But a critical misunderstanding emerged early: the AI assumed criminals would lie, even though the game's rules state otherwise. This flawed premise led to its first incorrect accusation, though it managed to self-correct and then correctly identify two more suspects.

The turning point came with the clue: "The only innocent below Hank is above Zach." GPT-5 mini read "above" as "directly above," adding an adjacency constraint the clue never states, and that misreading triggered a cascade of errors. The slip exposed the model's tendency to impose a more rigid structure than the language actually supports. The AI then became fixated on accusing Terry without logical justification and made unsupported guesses about Betty and Nancy, treating the puzzle as a matter of likelihoods rather than certainties.
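The gap between the two readings can be checked mechanically. The Python sketch below assumes, for illustration, that "above" and "below" refer to positions within a single column of the board, listed top to bottom. Hank and Zach come from the clue, while Olive and Pete are hypothetical suspects placed between them. The sketch enumerates every labelling of the suspects below Hank and keeps those consistent with each reading.

```python
from itertools import product

# Hypothetical column, listed top to bottom. Hank and Zach come from the clue;
# Olive and Pete are invented suspects placed between them for illustration.
COLUMN = ["Hank", "Olive", "Pete", "Zach"]


def satisfies_clue(innocent, anchor, target, directly):
    """Check "The only innocent below <anchor> is above <target>".

    `innocent` maps each name to True (innocent) or False (criminal).
    directly=False treats "above" as anywhere higher in the column (the
    intended reading); directly=True accepts only the adjacent cell.
    """
    a, t = COLUMN.index(anchor), COLUMN.index(target)
    below = [i for i in range(a + 1, len(COLUMN)) if innocent[COLUMN[i]]]
    if len(below) != 1:  # "the only innocent" means exactly one
        return False
    position = below[0]
    return position == t - 1 if directly else position < t


def consistent_states(directly):
    """Return every labelling of the suspects below Hank that fits the clue."""
    states = []
    for labels in product([True, False], repeat=len(COLUMN) - 1):
        # Hank's own status does not affect this clue, so fix it arbitrarily.
        innocent = dict(zip(COLUMN, (False, *labels)))
        if satisfies_clue(innocent, "Hank", "Zach", directly):
            states.append({
                name: "innocent" if value else "criminal"
                for name, value in innocent.items()
                if name != "Hank"
            })
    return states


print(consistent_states(directly=False))  # two labellings survive
print(consistent_states(directly=True))   # only one survives
```

Under the intended reading, two labellings survive, so this clue alone settles neither Olive nor Pete. The "directly above" reading keeps only one. That manufactures a false certainty that Pete is innocent and Olive is a criminal, the kind of unsupported conclusion that can cascade through later deductions.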

What followed was a complete collapse in reasoning. The AI appeared to lose track of the board state and earlier clues, likely overwhelmed by its limited context window. In a desperate move, it used Kagi Assistant's search capabilities to look up the solution, a strategy that backfired spectacularly. The influx of external information only fragmented its reasoning further, illustrating how external tools can compound rather than resolve a model's reasoning failures.

This failure isn't just about one model's shortcomings; it underscores a fundamental challenge in AI development. Large language models excel at pattern recognition and content generation, but they struggle with tasks that require precise rule adherence, a consistent mental model of the problem, and recovery from logical missteps. The AI's inability to re-engage with the puzzle after its errors highlights a lack of robust error-recovery mechanisms.

For developers and engineers, this incident serves as a cautionary tale. AI tools can accelerate coding and ideation, but tasks that demand rigorous logical deduction, such as debugging complex systems or verifying cryptographic protocols, may still require human oversight. The puzzle also suggests that AI "hallucinations" are not always random: they often stem from overconfident interpretations of ambiguous language, a vulnerability with real-world implications in fields like legal tech and medical diagnostics.

As the AI field evolves, this case study highlights the urgent need for models that better handle context, admit uncertainty, and prioritize logical consistency over probabilistic outputs. Until then, the humble logic puzzle remains a humbling benchmark—one that separates pattern-matching prowess from genuine reasoning.
