As AI workloads explode in scale, traditional encryption fails to protect data during GPU computation, leaving models and inputs exposed to hypervisors, admins, and snooping attacks. GPU Trusted Execution Environments (TEEs) solve this by embedding hardware-enforced security directly into accelerators like NVIDIA's H100, enabling confidential AI with near-zero performance overhead. Phala Network's open-source stack is now making this accessible, allowing developers to deploy verifiable, tamper-p

In 2024, AI isn't just a feature—it's the engine powering Web3 agents, trading bots, and real-time analytics. But this explosion hinges on GPUs, where a critical vulnerability lurks: once data enters a GPU for computation, it's naked to attack. Traditional encryption safeguards data at rest or in transit but evaporates during processing, exposing model weights, user inputs, and outputs to threats like malicious hypervisors, rogue admins, or PCIe snooping. The stakes? Altered medical diagnoses, censored chatbot responses, or stolen proprietary models.

Why CPUs Can't Save AI

"Training a transformer model on CPUs would take weeks; GPUs accomplish the same in hours."

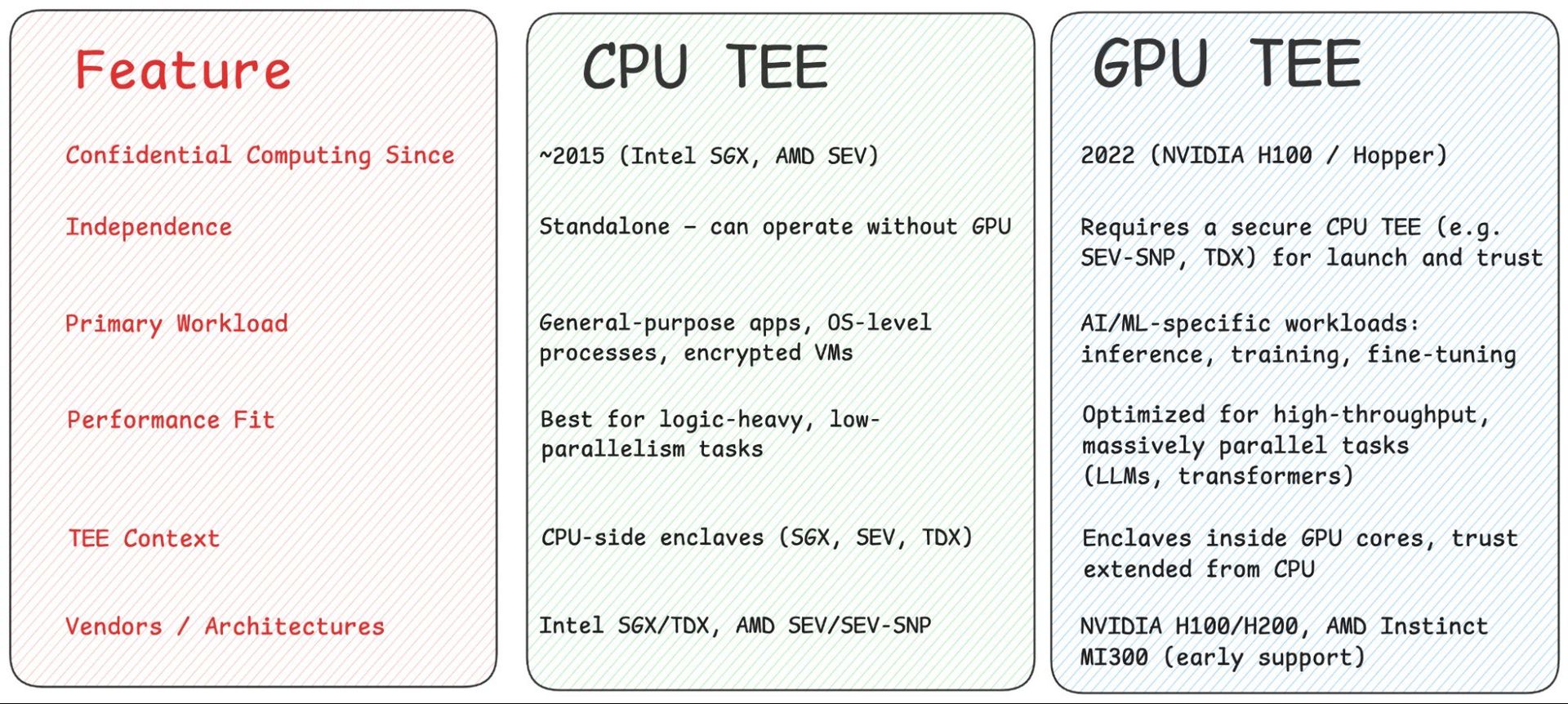

CPU-based TEEs (like Intel SGX) have long secured general-purpose workloads but buckle under AI's demands. Large models like Llama-3-70B require thousands of parallel threads and massive memory bandwidth—far beyond CPU capabilities. By 2030, over 70% of new compute capacity will be "GPU-class," dominated by accelerators like NVIDIA's H100. Yet, without hardware-level protection, these powerhouses become liability magnets.

Enter GPU TEEs: Confidential Computing at Accelerator Speed

A GPU TEE is a hardware-isolated enclave within the GPU itself, enforcing three pillars:

- Confidentiality: Data remains encrypted even during processing.

- Integrity: Code and outputs can't be tampered with.

- Attestation: Proof that the system is genuine and secure.

Modern implementations—NVIDIA's CC-On, AMD's Infinity Guard, and Intel's TDX Connect—activate these with minimal overhead. Recent benchmarks show sub-2% performance loss for Llama-class models, making secure AI practical.

How GPU TEEs Work: A Step-by-Step Breakdown

Using NVIDIA's H100 as a blueprint:

- Hardware Root of Trust: A cryptographic identity burned into the chip at manufacture verifies authenticity.

- Secure Boot: Firmware is signature-checked and locked against tampering.

- Confidential VM Launch: A CPU TEE (e.g., Intel TDX) isolates memory and sets up a secure base.

- GPU Enters CC Mode: Internal state is scrubbed, with firewalls blocking side-channel attacks.

- Secure Session Setup: Cryptographic keys are exchanged via SPDM, and an attestation report is generated.

- Attestation Verification: The report is validated locally or through NVIDIA's Remote Attestation Service (NRAS).

- Encrypted Data Transfer: AES-GCM encryption protects data shuttled via bounce buffers.

- Secure Execution: AI workloads run privately, with end-to-end protection.

Phala Network: Democratizing GPU TEEs for Developers

Phala bridges this hardware to Web3 with two open-source tools:

Private ML SDK: Package models into Docker images for deployment in GPU TEEs. It handles attestation, encrypted memory, and near-native performance—no infrastructure expertise needed.

"It turns an ordinary Docker workflow into a fully verifiable, privacy-preserving AI deployment."

Redpill: An API gateway for consuming TEE-hosted models (e.g., DeepSeek R1). Responses come signed with attestation proofs, verifiable onchain or via tools like Etherscan.

Benchmarks: Performance Meets Security

Phala's tests on H100/H200 GPUs reveal:

- <9% average overhead for models like Llama 70B and Phi-3 14B.

- Near-identical throughput/latency during inference.

- Startup delays (~25%) from attestation, but no code changes required.

The bottleneck? PCIe transfers—not GPU computation—proving GPU TEEs are ready for production.

Real-World Use Cases: From Healthcare to DeFi

- Healthcare: Run genomics models on shared GPU clusters while keeping patient data encrypted and HIPAA-compliant.

- Federated Learning: Train across siloed datasets (e.g., hospitals) without exposing raw data.

- Web3: Smart contracts validate attested AI outputs to prevent spoofing—critical for decentralized agents or ZK-acceleration.

Early adopters include a16z (private model hosting) and Flashbots X (secure DeFi transaction processing).

The Future: Onchain, Elastic, and Vendor-Agnostic

Phala's roadmap aims to turn GPU TEEs into a Web3 utility:

- Onchain Governance: Enclave-based AI apps upgraded via community votes, not vendors.

- Marketplace: Rent H100-class TEEs by the hour, with usage settled onchain.

- Hardware Expansion: Support for AMD's MI300 and Intel accelerators in 2025, enabling workload portability.

This evolution promises a world where confidential AI scales as seamlessly as blockchain services—secure by default, open by design.

Source: Phala Network GPU TEE Deep Dive

Comments

Please log in or register to join the discussion