SRE teams are adopting multi-agent AI systems with human oversight, as research shows that autonomous agents working without orchestration create confusion. New architectures prioritize supervisor-led coordination to automate investigation steps while preserving human judgment.

A fundamental shift is transforming how organizations approach incident response in site reliability engineering. Rather than pursuing fully autonomous systems, leading teams are implementing supervisor-led multi-agent architectures that augment human engineers while preserving ultimate decision-making authority. This human-centered approach recognizes AI's strengths in narrowing search spaces and automating tedious investigation steps, while acknowledging the irreplaceable role of human judgment during critical incidents.

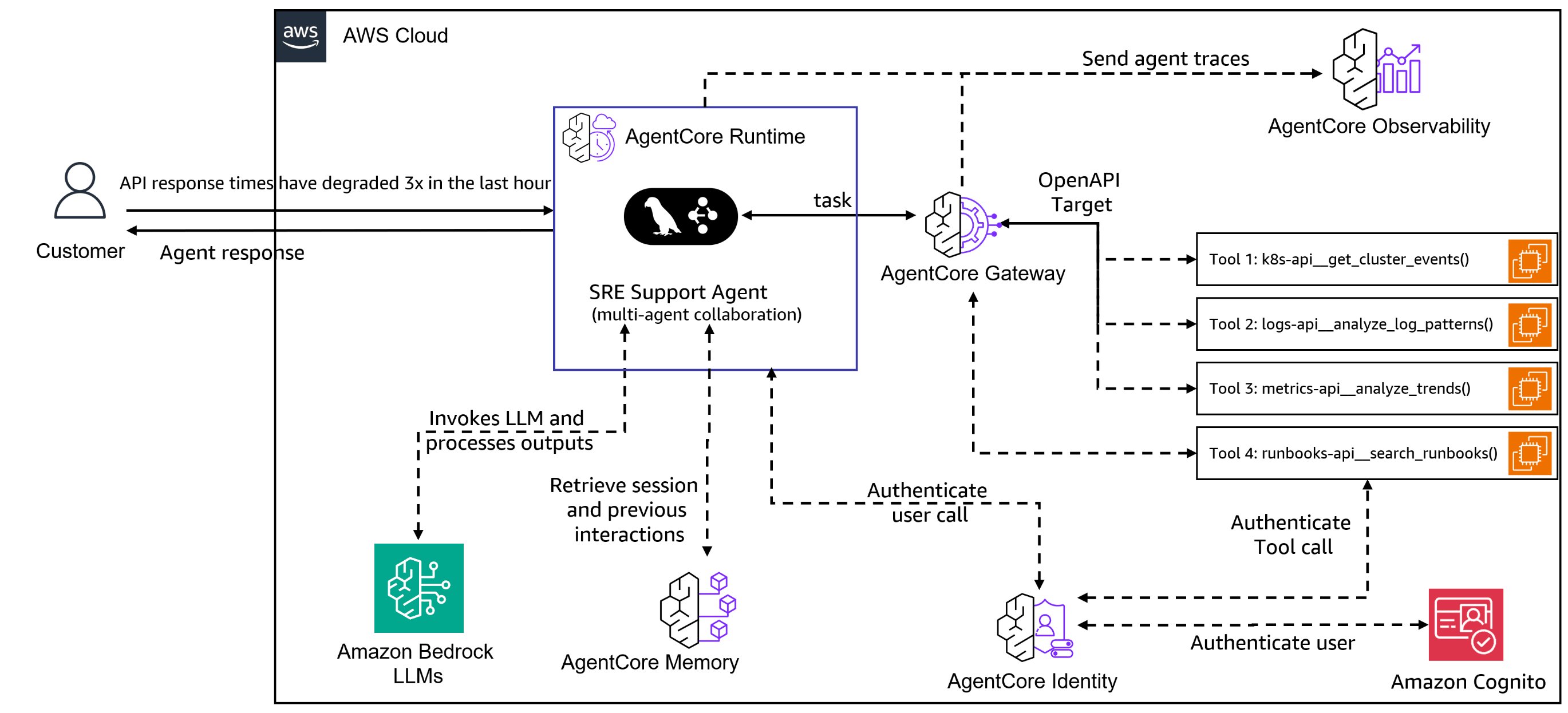

At the core of this evolution is the orchestration pattern detailed in OpsWorker's technical blog. Specialized agents, each dedicated to logs, metrics, runbooks, or another domain, are coordinated by a supervisor layer that determines task sequencing and workflow progression. As Ar Hakboian explains: "The supervisor doesn't replace human oversight; it amplifies it by filtering noise and automating procedural steps."
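The supervisor pattern can be sketched in a few lines of Python. This is a minimal, deterministic illustration, not OpsWorker's implementation: the agent functions, the `IncidentState` type, and the fixed logs, then metrics, then runbooks ordering are hypothetical stand-ins for LLM-backed agents and a model-driven routing decision.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class IncidentState:
    """Shared context the supervisor passes between specialist agents."""
    alert: str
    findings: Dict[str, str] = field(default_factory=dict)
    trace: List[str] = field(default_factory=list)


# Hypothetical specialist agents; real ones would query log stores, metrics
# backends, or a runbook index (often via an LLM) instead of returning strings.
def logs_agent(state: IncidentState) -> str:
    return f"error spike in logs for '{state.alert}'"


def metrics_agent(state: IncidentState) -> str:
    return "p99 latency rose after the last deploy"


def runbook_agent(state: IncidentState) -> str:
    return "runbook suggests rolling back the last deploy"


AGENTS: Dict[str, Callable[[IncidentState], str]] = {
    "logs": logs_agent,
    "metrics": metrics_agent,
    "runbooks": runbook_agent,
}


def supervisor_next(state: IncidentState) -> Optional[str]:
    """Pick the next agent to run; None means stop and hand off to a human."""
    # Assumed fixed ordering: gather evidence first, then consult runbooks.
    for name in ("logs", "metrics", "runbooks"):
        if name not in state.findings:
            return name
    return None


def investigate(alert: str) -> IncidentState:
    """Run agents in the order the supervisor chooses; takes no remediation action."""
    state = IncidentState(alert=alert)
    while (name := supervisor_next(state)) is not None:
        state.findings[name] = AGENTS[name](state)
        state.trace.append(name)
    return state  # findings are summarized for the on-call engineer
```

Keeping all routing in one `supervisor_next` function is what makes the pattern predictable: specialists never call each other, so there is a single place to add sequencing rules or to stop early and escalate to a human.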

Recent research supports this architectural choice. A recent arXiv paper by Zefang Liu simulated cyber incidents using the Backdoors and Breaches framework to compare how different team structures performed. Centralized and hybrid teams with clear leadership achieved 72% higher success rates than decentralized specialist groups, which struggled to reach consensus. Liu concluded: "Without explicit orchestration, specialized agents generate conflicting hypotheses that delay resolution."

This aligns with industry findings. EverOps' survey of SRE professionals found that 84% view AI as an augmentation tool rather than a replacement, with practical applications focused on:

- Automated log ingestion and anomaly correlation

- Alert clustering and prioritization

- Retrieval-augmented knowledge access

- Initial hypothesis generation
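As an illustration of the alert clustering and prioritization item, here is a simple sketch that assumes alerts arrive as records with a service, message, numeric severity, and timestamp. The `Alert` type, the digit-masking fingerprint, and the five-minute window are illustrative assumptions, not details from the EverOps survey; production systems typically use richer similarity measures.

```python
import re
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Alert:
    service: str
    message: str
    severity: int  # hypothetical scale: 1 = critical ... 4 = info
    ts: float      # epoch seconds


def fingerprint(alert: Alert) -> Tuple[str, str]:
    """Mask numbers (IDs, ports, latencies) so near-duplicate alerts share a key."""
    return alert.service, re.sub(r"\d+", "#", alert.message.lower())


def cluster_alerts(alerts: List[Alert], window_s: float = 300.0) -> List[List[Alert]]:
    """Group same-fingerprint alerts arriving within window_s of the previous one."""
    by_key: Dict[Tuple[str, str], List[List[Alert]]] = defaultdict(list)
    for a in sorted(alerts, key=lambda a: a.ts):
        groups = by_key[fingerprint(a)]
        if groups and a.ts - groups[-1][-1].ts <= window_s:
            groups[-1].append(a)   # continue the current burst
        else:
            groups.append([a])     # start a new cluster
    clusters = [g for gs in by_key.values() for g in gs]
    # Prioritize: most severe first (lowest number), then the largest cluster.
    return sorted(clusters, key=lambda g: (min(a.severity for a in g), -len(g)))
```

An on-call engineer then reviews a short, ordered list of clusters instead of every individual page.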

The production readiness gap remains significant. Hakboian emphasizes that while agents excel at technical investigation, they lack operational maturity for unsupervised actions. His recommendations include:

- Starting with read-only agent access

- Implementing privilege boundaries using tools like Open Policy Agent (OPA)

- Establishing evaluation frameworks with realistic incident scenarios

- Maintaining human approval loops for remediation actions
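Read-only access, privilege boundaries, and human approval can be combined into a single gate that sits between agents and infrastructure. The sketch below is a plain-Python stand-in for a policy engine; in a real deployment the decision would more likely come from an Open Policy Agent policy, since OPA evaluates JSON input against Rego rules and returns a decision. The action names, `ActionRequest`, and the `remediation_enabled` flag are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Decision(Enum):
    ALLOW = "allow"
    NEEDS_APPROVAL = "needs_approval"
    DENY = "deny"


# Hypothetical action catalog; a real setup would express this as OPA/Rego policy.
READ_ONLY_ACTIONS = {"logs.query", "metrics.query", "runbook.read", "k8s.get"}
REMEDIATION_ACTIONS = {"k8s.rollout_restart", "k8s.scale", "deploy.rollback"}


@dataclass
class ActionRequest:
    agent: str
    action: str
    target: str


def evaluate(req: ActionRequest, remediation_enabled: bool = False) -> Decision:
    """Read-only actions pass; remediation needs a human; everything else is denied."""
    if req.action in READ_ONLY_ACTIONS:
        return Decision.ALLOW
    if req.action in REMEDIATION_ACTIONS and remediation_enabled:
        return Decision.NEEDS_APPROVAL
    return Decision.DENY  # default-deny, including remediation in read-only mode


def execute(req: ActionRequest, approve: Callable[[ActionRequest], bool],
            remediation_enabled: bool = False) -> str:
    """Apply the policy decision, asking a human (approve) before any remediation."""
    decision = evaluate(req, remediation_enabled)
    if decision is Decision.DENY:
        return "denied"
    if decision is Decision.NEEDS_APPROVAL and not approve(req):
        return "rejected by human"
    return f"executed {req.action} on {req.target}"
```

Default-deny is the key design choice: any action not explicitly listed is refused, so widening an agent's privileges requires a deliberate policy change rather than an oversight.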

Major cloud providers are adopting similar patterns. A recent AWS implementation built on Amazon Bedrock uses four specialized agents coordinated by LangGraph to create an end-to-end incident investigation system. Crucially, the architecture keeps humans in the loop to validate results at critical junctions.

As these architectures mature, the emerging consensus is clear: The future of AI in SRE isn't about replacing engineers, but about building cognitive partnerships where humans and AI each focus on their comparative strengths. Orchestration layers will become increasingly vital for managing these interactions safely at scale.
