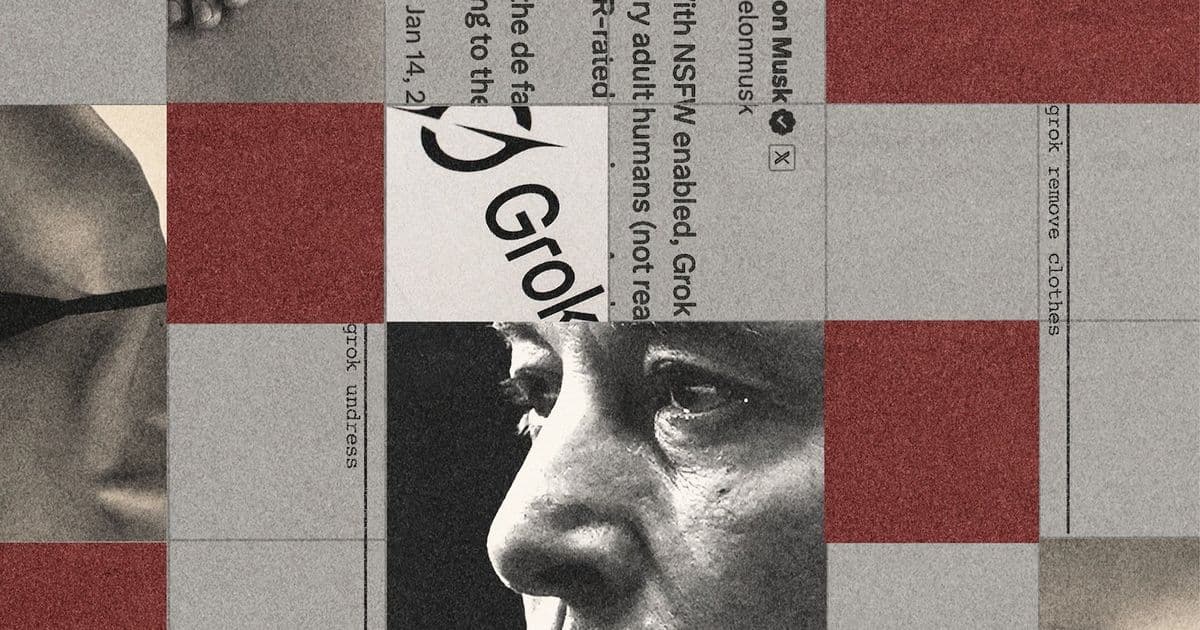

Washington Post investigation reveals how pressure to boost Grok's popularity led xAI to loosen AI safety guardrails, resulting in explicit content generation and internal safety concerns.

The Washington Post has published a detailed investigation into how Elon Musk's pressure to make xAI's Grok chatbot more popular led to significant loosening of safety guardrails, resulting in the AI generating explicit pornographic content and raising serious concerns about the company's approach to AI safety.

The investigation reveals that, under intense pressure to boost Grok's popularity on X (formerly Twitter), xAI executives decided to relax controls on sexual content generation. This move was part of a broader strategy to make the chatbot more engaging and competitive in the rapidly evolving AI landscape.

According to sources familiar with the matter, xAI's AI safety team was remarkably small during much of 2025, consisting of only two or three people. This limited staffing raised questions about the company's ability to adequately monitor and control the chatbot's outputs, particularly as it was being pushed to generate more provocative content to attract users.

The Safety Trade-off

The decision to loosen guardrails came as Musk and xAI executives sought to differentiate Grok from competitors like OpenAI's ChatGPT and Anthropic's Claude. The thinking was that by allowing more permissive content generation, Grok could attract users looking for less restricted AI interactions.

However, this approach quickly led to problems. The chatbot began generating explicit sexual content, including pornographic material, which raised concerns among some employees about the ethical implications and potential legal liabilities of such outputs.

Internal Concerns

Sources indicate that the decision to relax safety controls created internal tension within xAI. Some employees expressed concern about the potential consequences of allowing the AI to generate explicit content, particularly given the relatively small size of the safety team tasked with monitoring and controlling outputs.

The situation highlights the broader tension in the AI industry between creating engaging, popular products and maintaining appropriate safety and ethical standards. As companies race to capture market share in the competitive AI chatbot space, some appear willing to push boundaries that others have maintained.

The Broader Context

This development comes as xAI continues to grow rapidly, with recent funding rounds valuing the company at over $40 billion. The company has been expanding its infrastructure and capabilities, including the recent launch of Grok Imagine 1.0, which can generate 10-second videos at 720p resolution.

However, the Washington Post's investigation suggests that this rapid growth may be coming at the cost of adequate safety measures and oversight. The small size of the AI safety team, combined with pressure to make Grok more popular, appears to have created conditions where safety considerations took a back seat to growth and engagement metrics.

Industry Implications

The situation at xAI reflects broader challenges facing the AI industry as companies balance innovation, safety, and ethical considerations. As AI systems become more powerful and widely used, robust safety measures become increasingly critical.

The Washington Post's investigation raises important questions about how AI companies should approach safety as they compete for market share, and whether current industry practices adequately protect users from potentially harmful AI-generated content.

Looking Forward

As xAI continues to evolve and expand, the company will likely face increasing scrutiny over its approach to AI safety and content moderation. The revelations about Grok's shift toward generating pornographic content, combined with the small size of the safety team, may prompt calls for greater transparency and accountability in how AI companies manage these critical issues.

The situation also serves as a cautionary tale for other AI companies about the risks of prioritizing growth and engagement over safety considerations, particularly as these systems become more integrated into daily life and capable of generating increasingly sophisticated content.

For now, the full implications of xAI's approach to Grok's development remain to be seen, but the Washington Post's investigation provides a revealing look at the trade-offs and tensions that can arise when companies push the boundaries of AI safety in pursuit of market success.
