A developer's deep dive reveals how moving from Python to C++ static autograd with tracing delivers 11x speedups on Tenstorrent's Wormhole hardware, while exploring the surprising limitations of parallelism at GPT-2 scale. The benchmarks expose a fundamental divide between dispatch-bound and compute-bound workloads.

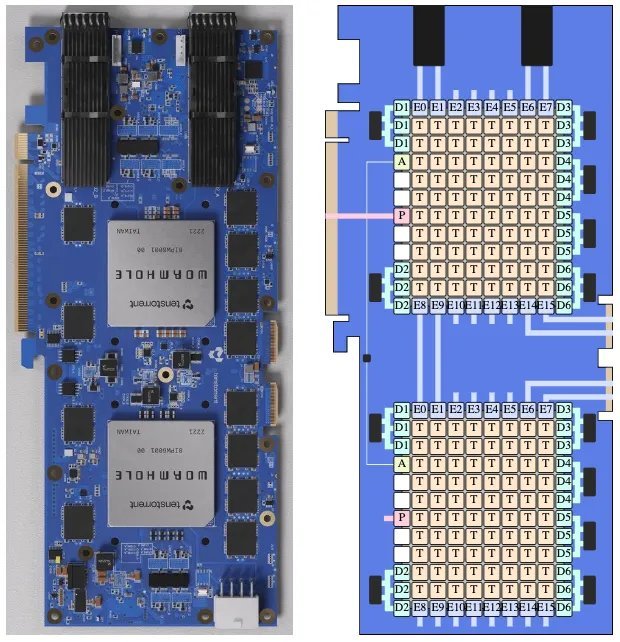

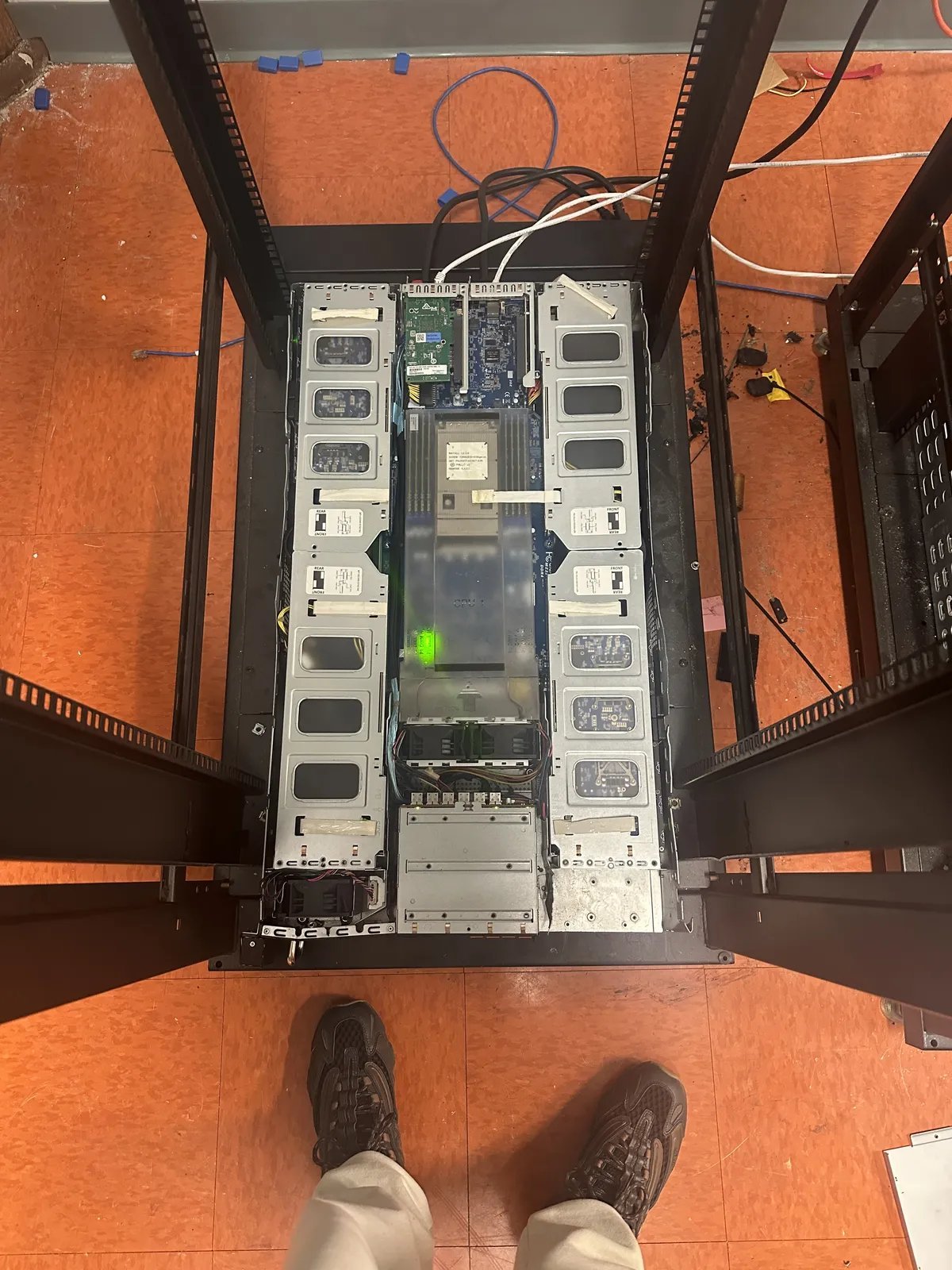

When Jim Keller appeared in a developer's dream chastising idle hardware resources, it sparked a deep technical journey into optimizing Tenstorrent's Wormhole architecture. The setup—dubbed 'Howard'—features 4 N300 accelerator cards housing 8 Wormhole B0 chips, totaling 512 Tensix RISC-V cores and 96GB GDDR6 memory hosted by an AMD EPYC CPU. This hardware became the testbed for pushing autograd performance beyond conventional approaches.

The Autograd Evolution: From Python Overhead to C++ Efficiency

The initial Python-based autograd implementation proved counterproductive—9.5ms per iteration for a simple MLP benchmark, slower than PyTorch on CPU due to Python's object allocation and GIL overhead. Transitioning to C++ dynamic autograd delivered an immediate 6.34x speedup (0.984ms) by eliminating interpreter bottlenecks.

The breakthrough came with static autograd: pre-allocating persistent buffers for activations and gradients, plus an "overwrite-on-first-write" gradient accumulation technique. This reduced iterations to 0.673ms (9.27x faster than CPU) by eliminating per-operation device allocations.

Tracing: When Hardware Utilization Matters

Integrating Tenstorrent's trace API—which captures and replays operation sequences without host dispatch overhead—yielded dramatic gains for smaller workloads:

| Batch | Dim | Static (ms) | Traced (ms) | Speedup |

|-------|-----|------------|------------|---------|

| 256 | 256 | 1.36 | 0.17 | 8.1x |

| 2048 | 2048| 4.12 | 3.31 | 1.2x |

At GPT-2 scale, however, tracing provided no measurable benefit (323ms vs 323ms). Why? The model became compute-bound—each 50ms+ operation dwarfed trace dispatch overhead.

Parallelism: The Core Utilization Challenge

With Wormhole chips underutilized on small tensors (just 2.9% peak FLOPs on 512×512 matmuls), two parallelism approaches were tested:

- Core grid partitioning (single command queue): Slowed performance by 32% due to sequential dispatch constraints.

- Subdevice API with dual command queues: Delivered 1.71x speedups for 256×256 tensors but hurt performance on larger workloads by splitting compute resources.

The verdict hinges on workload size:

- Dispatch-bound (small ops): Trace + multi-CQ wins

- Compute-bound (large ops): Full-core utilization wins

GPT-2 Reality Check

Against Tenstorrent's reference ML library (ttml), the static autograd implementation ran 1.58x faster for identical GPT-2 architectures—proving that eliminating graph-rebuilding overhead matters even at scale. Gradient verification against PyTorch confirmed numerical equivalence within bfloat16 tolerances.

The Hardware-Software Balance

This exploration reveals a crucial lesson: optimization strategies must align with workload characteristics. While tracing and parallelism shine when dispatch overhead dominates, large models like GPT-2 benefit most from memory-efficient autograd architectures. As accelerators grow more complex, understanding the interplay between tensor dimensions, core utilization, and dispatch mechanisms becomes paramount for unlocking peak performance.

Source: Benchmark data and technical analysis derived from mewtwo.bearblog.dev

Comments

Please log in or register to join the discussion