An experiment using Claude, Codex, and Gemini agents to collaboratively build a SQLite-compatible database engine reveals surprising insights about AI coordination overhead and the critical role of testing in multi-agent development.

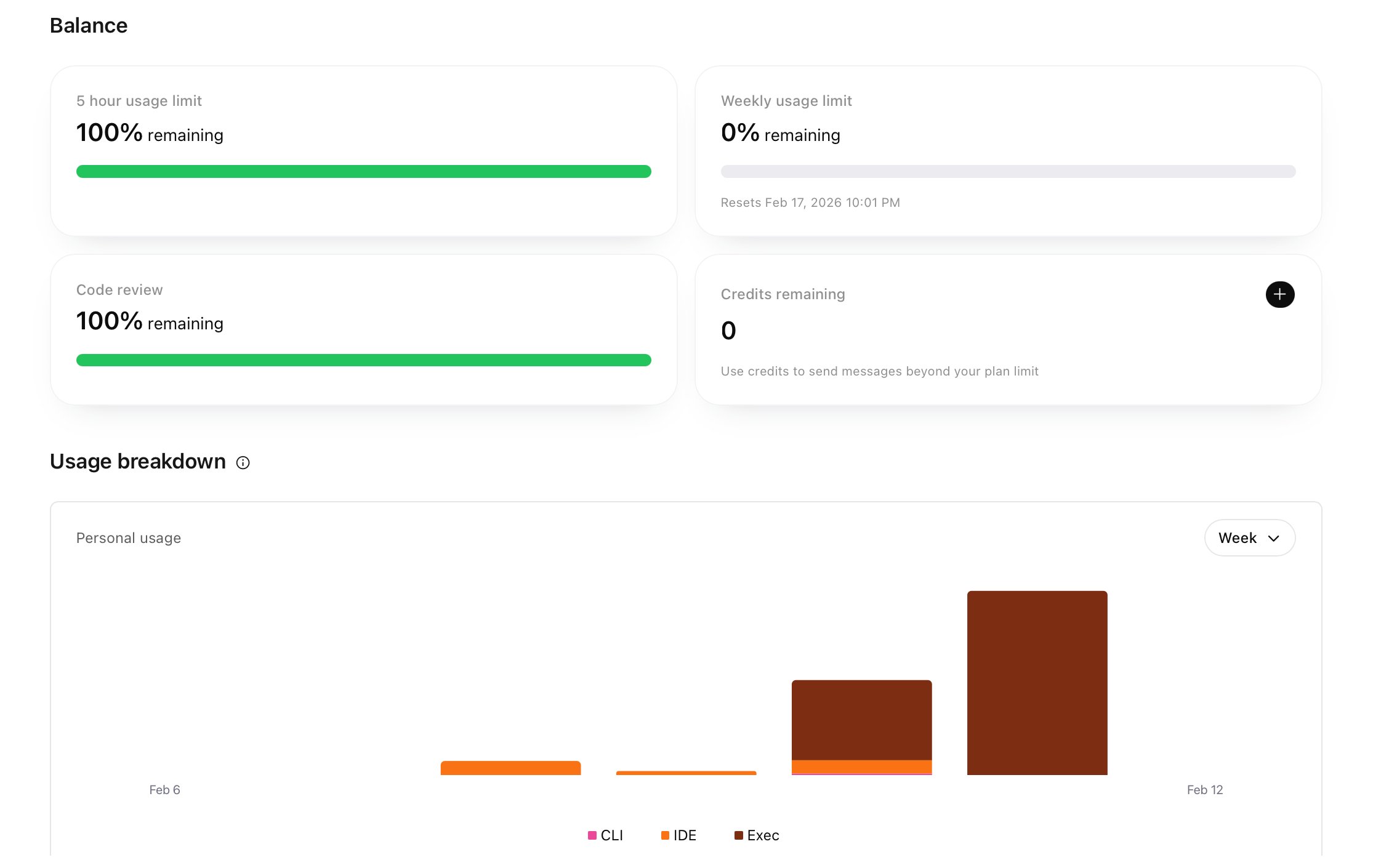

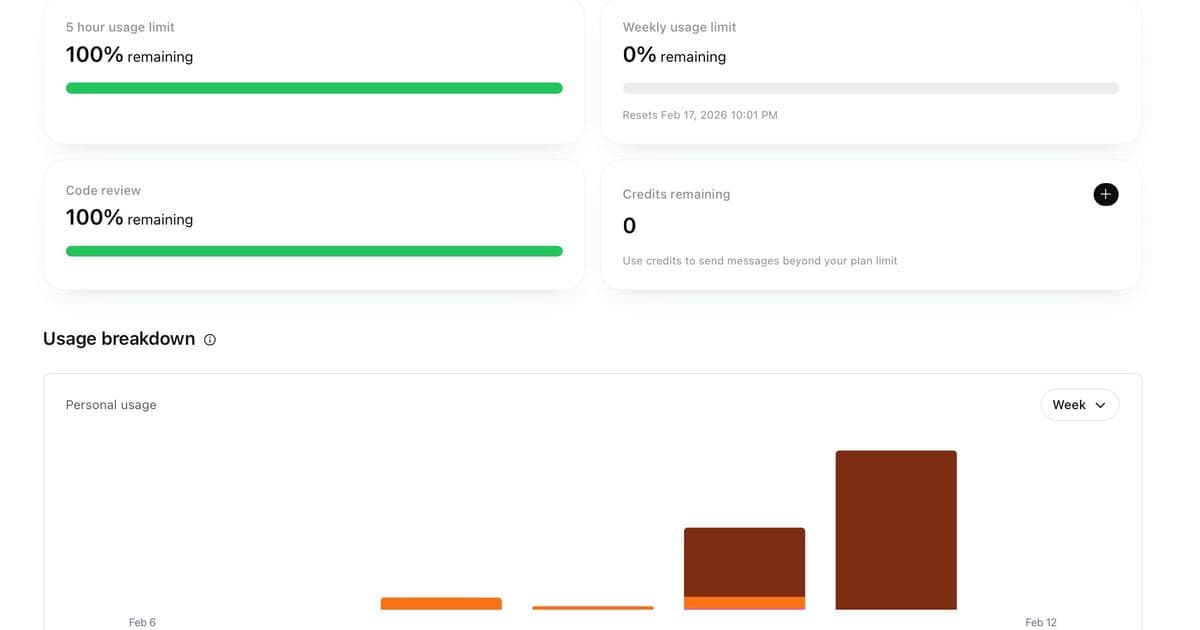

When Kian Kyars tasked six AI agents with building a SQLite-compatible database engine in Rust, the results weren't just about the 18,650 lines of functional code. The real story emerged in the commit history: 84 of 154 commits (54.5%) were purely coordination overhead—lock management, stale file cleanup, and task claiming. This experiment reveals both the promise and pitfalls of multi-agent software development.

Project structure showing agent workspaces and coordination files

The project followed a distributed systems approach: Two Claude, two Codex, and two Gemini agents operated in isolated workspaces, coordinated through Git, lock files, and a shared progress tracker. Agents would:

- Pull the latest `main` branch
- Claim a task via lock files
- Implement features against SQLite as an oracle
- Run tests (282 unit tests via `cargo test` and custom validation scripts)
- Update shared documentation
- Push changes
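The claim step in that loop can be sketched as an atomic file creation. This is a minimal illustration, not the experiment's actual protocol: the function names, the `.lock` suffix, and the lock file contents are all hypothetical. In the experiment the lock files presumably traveled through Git, so the real race would be settled at push time; the sketch only covers a claim on a single shared filesystem.

```python
import os
import tempfile
import time


def try_claim(lock_dir: str, task_id: str, agent: str) -> bool:
    """Try to claim a task by atomically creating its lock file."""
    path = os.path.join(lock_dir, f"{task_id}.lock")
    try:
        # O_CREAT | O_EXCL fails if the file already exists, so only one
        # process on the same filesystem can win the claim.
        fd = os.open(path, os.O_CREAT | os.O_EXCL | os.O_WRONLY)
    except FileExistsError:
        return False
    with os.fdopen(fd, "w") as f:
        # Record who holds the claim and when (hypothetical format).
        f.write(f"{agent} {time.time():.0f}\n")
    return True


def release(lock_dir: str, task_id: str) -> None:
    """Release a claim by removing the lock file, if present."""
    try:
        os.remove(os.path.join(lock_dir, f"{task_id}.lock"))
    except FileNotFoundError:
        pass


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as locks:
        print(try_claim(locks, "hash-join", "claude-1"))  # first claimant
        print(try_claim(locks, "hash-join", "codex-2"))   # task already held
        release(locks, "hash-join")
        print(try_claim(locks, "hash-join", "codex-2"))   # free again
```

A lock left behind by an agent that stopped mid-task is never released under this scheme, which is one plausible source of the stale-file cleanup commits counted in the overhead figure.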

The bootstrap phase (initialization prompt) generated foundational architecture docs and a test harness. During the worker phase, agents implemented a full database stack: parser, B+tree indexing, WAL-based recovery, join algorithms, and statistics-aware query planning. All tests passed—a testament to rigorous validation against SQLite's behavior.

Agent workflow showing test-driven validation loop

But the coordination tax proved staggering. "Shared state docs like PROGRESS.md and design notes became part of the runtime, not documentation," Kyars noted. The coalescer agent, designed to deduplicate code, failed mid-run after hitting token limits, leaving drift between the agents' work unmanaged. Documentation ballooned: PROGRESS.md grew to 490 lines, alongside volumes of inter-agent notes.

Three factors proved decisive:

- Oracle Validation: Continuous testing against SQLite provided ground truth

- Module Boundaries: Clear separation between parser, planner, and executor minimized merge conflicts

- Test Cadence: Fast feedback loops caught errors early
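Oracle validation can be sketched as a differential test: run the same statements through real SQLite and through the engine under test, then compare the rows. The sketch below uses Python's standard `sqlite3` module as the oracle. The `engine` callable and the helper names are hypothetical stand-ins; the article does not describe how the experiment's validation scripts were actually structured.

```python
import sqlite3
from typing import Callable, List, Tuple

Rows = List[Tuple]


def run_oracle(setup: List[str], query: str) -> Rows:
    """Run the query on a fresh in-memory SQLite database (the oracle)."""
    conn = sqlite3.connect(":memory:")
    try:
        for stmt in setup:
            conn.execute(stmt)
        return conn.execute(query).fetchall()
    finally:
        conn.close()


def matches_oracle(engine: Callable[[List[str], str], Rows],
                   setup: List[str], query: str,
                   ordered: bool = False) -> bool:
    """Compare a candidate engine's rows with SQLite's rows."""
    expected = run_oracle(setup, query)
    actual = engine(setup, query)
    if ordered:
        return actual == expected
    # Without ORDER BY, row order is unspecified, so compare as multisets.
    return sorted(actual, key=repr) == sorted(expected, key=repr)


if __name__ == "__main__":
    setup = [
        "CREATE TABLE t (id INTEGER PRIMARY KEY, name TEXT)",
        "INSERT INTO t VALUES (1, 'a'), (2, 'b')",
    ]

    # Stand-in for the engine under test: correct rows, different order.
    def fake_engine(_setup: List[str], _query: str) -> Rows:
        return [(2, "b"), (1, "a")]

    print(matches_oracle(fake_engine, setup, "SELECT id, name FROM t"))
```

Comparing unordered results as multisets avoids false failures when two engines legitimately return rows in different orders. Queries with `ORDER BY` should pass `ordered=True`.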

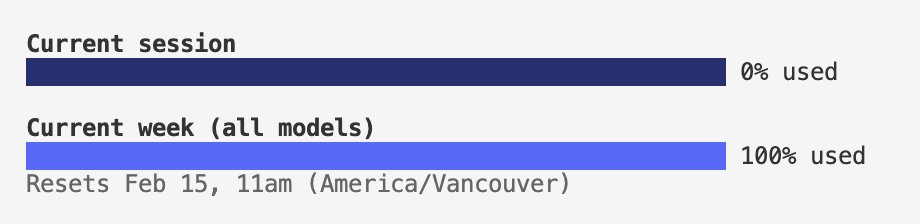

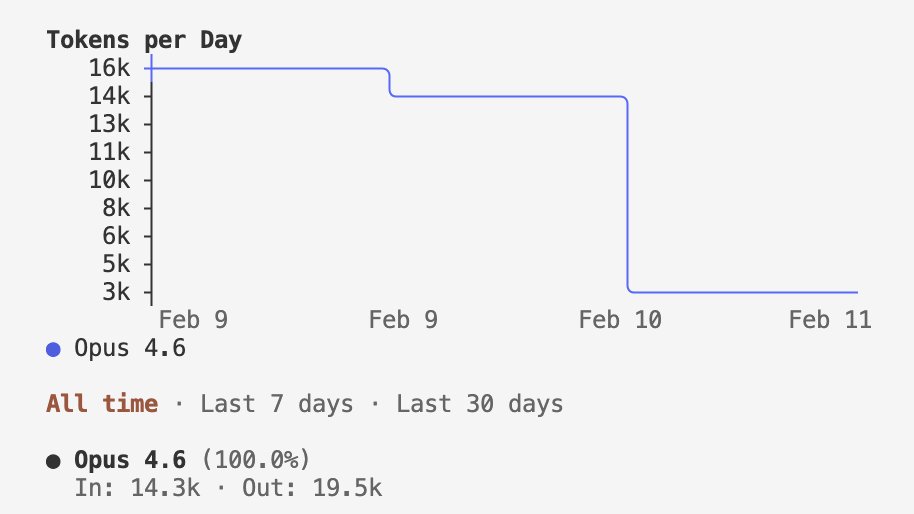

Yet limitations loom: Token usage tracking was impossible across providers (Gemini lacks usage metrics; Codex exhausted its quota). Rate limits caused partial commits when agents hit quota walls mid-task. Attribution was inconsistent—only Claude added co-author tags to commits.

Codebase statistics showing 18,849 lines across Rust and shell

This experiment challenges the "more agents = faster progress" assumption. As Kyars observes: "Parallelism is great, but only with strict task boundaries." The 54.5% coordination overhead suggests diminishing returns once the agent count grows past what the task boundaries can support. Interestingly, this mirrors human team dynamics: Brooks's Law states that adding manpower to a late software project makes it later.

Counter-intuitively, the most successful components weren't those with the flashiest AI-generated code, but those with the tightest feedback loops. The storage layer (B+trees, WAL) saw fewer merge conflicts because tests immediately validated page formats and recovery semantics. Meanwhile, the query planner required more coordination due to interconnected statistics and cost models.

Replication instructions showing agent restart and coalesce scripts

The replication instructions reveal setup complexity: Isolated workspaces, lockfile protocols, and periodic coalescing are mandatory. Future work needs better "substantive run rate" tracking to distinguish actual progress from coordination noise.
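One way to approximate a "substantive run rate" is to classify commit subjects and report the share that is not coordination. The sketch below is only an illustration: the keyword pattern is a hypothetical guess based on the three categories named in the article (lock management, stale file cleanup, task claiming), not the wording of the project's real commit messages.

```python
import re
from typing import Iterable

# Hypothetical pattern: matches words starting with lock/unlock/stale/claim,
# e.g. "lockfile", "claims". It does not match "block" or "clock".
COORDINATION_PATTERN = re.compile(r"\b(lock|unlock|stale|claim)\w*", re.IGNORECASE)


def is_coordination(subject: str) -> bool:
    """Return True if a commit subject looks like coordination work."""
    return COORDINATION_PATTERN.search(subject) is not None


def substantive_rate(subjects: Iterable[str]) -> float:
    """Fraction of commits that are NOT coordination overhead."""
    subjects = list(subjects)
    if not subjects:
        return 0.0
    coordination = sum(is_coordination(s) for s in subjects)
    return (len(subjects) - coordination) / len(subjects)


if __name__ == "__main__":
    sample = [
        "Claim task: hash join",
        "Implement hash join",
        "Remove stale lock for parser",
        "Add WAL recovery test",
    ]
    print(f"substantive rate: {substantive_rate(sample):.1%}")
```

Commit subjects could be fed in from `git log --format=%s`. With the article's figures, 84 coordination commits out of 154 leave 70 substantive ones, a rate of about 45.5%.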

This experiment validates that AI agents can build complex systems—but also proves they inherit distributed computing's hard problems: consensus, drift, and coordination. As Kyars concludes: "Tests are the anti-entropy force." The real breakthrough wasn't the SQLite clone, but demonstrating that validation mechanisms matter more than raw generative power in multi-agent systems.

Inspiration: Scaling Agents | Building a C Compiler

Full experiment details: Building SQLite With a Small Swarm

Source code: parallel-ralph
