Meta is deploying Nvidia's Grace and upcoming Vera CPUs at scale, marking a significant departure from industry trends toward custom Arm processors and highlighting a deepening partnership between the social media giant and the GPU leader.

Meta is deploying Nvidia's standalone CPUs at scale, a shift in the company's hardware strategy that deepens its partnership with a vendor traditionally known for its graphics processing units. The deployment is one of the first major hyperscaler adoptions of Nvidia's CPU technology.

Meta's CPU Deployment Strategy

The social media giant has already deployed Nvidia's Grace processors in CPU-only systems at scale and is working with Nvidia to field its upcoming Vera CPUs beginning next year. This move is particularly noteworthy because Meta joins a select group of scientific institutions as one of the few organizations to deploy Nvidia's nearly three-year-old Grace CPUs in standalone configurations.

Unlike previous deployments where Meta used Nvidia's Grace-Hopper Superchips as part of its Andromeda recommender system, the company is now leveraging Nvidia's CPU-only Grace systems to power both general-purpose and agentic AI workloads that don't require GPU acceleration. According to Ian Buck, Nvidia's VP and General Manager of Hyperscale and HPC, "What we found is that Grace is an excellent backend datacenter CPU. It can actually deliver 2x the performance per watt on those backend workloads."

Technical Specifications and Advantages

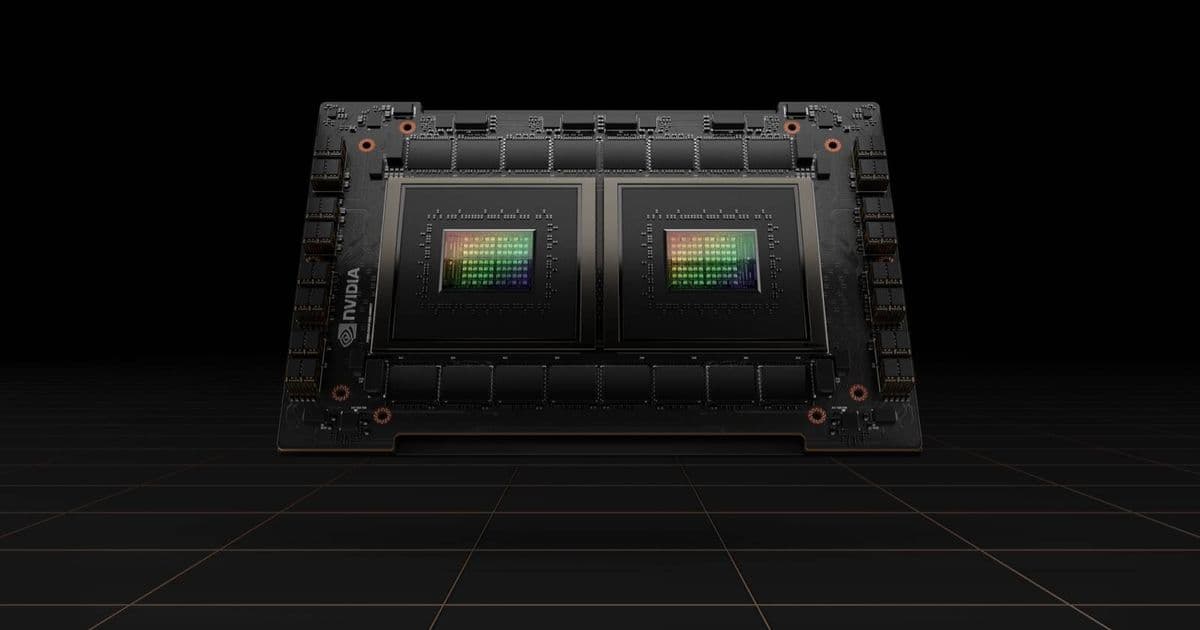

Nvidia's Grace CPUs are equipped with 72 Arm Neoverse V2 cores clocked at up to 3.35 GHz. The chip is available in a standalone configuration with up to 480 GB of memory, or it can be paired with a second Grace processor to form the Grace-CPU Superchip with up to 960 GB of LPDDR5x memory. While LPDDR5x memory isn't typically seen in server platforms, it offers significant advantages in terms of space and bandwidth, with the Grace-CPU Superchip boasting up to 1 TB/s of memory bandwidth across the two dies.
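To put those figures in per-core terms, here is a rough back-of-the-envelope sketch. It assumes the Superchip has exactly twice the 72 cores of a single Grace die, reads the quoted bandwidth figure as 1 TB/s (taken as 1,000 GB/s), and treats the "up to" capacities as the installed amounts; the variable names are illustrative only.

```python
# Per-core memory and bandwidth for Grace, using the figures quoted above.
# Assumptions (not stated in the article): the Superchip has 2 x 72 cores,
# and 1 TB/s is treated as 1,000 GB/s.

CORES_PER_GRACE = 72
STANDALONE_MEMORY_GB = 480
SUPERCHIP_MEMORY_GB = 960
SUPERCHIP_BANDWIDTH_GBS = 1000  # 1 TB/s, decimal units

superchip_cores = 2 * CORES_PER_GRACE

# Memory capacity available per core in each configuration.
standalone_gb_per_core = STANDALONE_MEMORY_GB / CORES_PER_GRACE
superchip_gb_per_core = SUPERCHIP_MEMORY_GB / superchip_cores

# Peak memory bandwidth per core if all Superchip cores share it evenly.
superchip_gbs_per_core = SUPERCHIP_BANDWIDTH_GBS / superchip_cores

print(f"Standalone: {standalone_gb_per_core:.2f} GB per core")
print(f"Superchip:  {superchip_gb_per_core:.2f} GB per core")
print(f"Superchip:  {superchip_gbs_per_core:.2f} GB/s per core")
```

Under these assumptions, both configurations offer the same memory capacity per core, since the Superchip doubles cores and memory together.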

Looking ahead, Nvidia's Vera CPU, officially unveiled at CES earlier this year, boosts core counts to 88 custom Arm cores and adds support for simultaneous multi-threading (SMT, the technique Intel brands as Hyper-Threading) and confidential computing functionality. Meta plans to leverage this confidential computing capability for private processing and AI features in its WhatsApp encrypted messaging service.

Industry Context and Strategic Implications

Meta's adoption of Nvidia CPUs runs counter to the broader industry trend, in which hyperscalers have increasingly pivoted toward custom Arm CPUs designed in-house, like Amazon's Graviton or Google's Axion. Grace and Vera are also Arm-based, so the departure lies in buying the processors from Nvidia rather than in the instruction set. The decision highlights Meta's confidence in Nvidia's CPU technology and suggests the company sees value in the performance per watt that Grace and Vera offer for specific workloads.

The timing of this announcement coincides with Meta's aggressive capital expenditure plans, with the company targeting $115 billion to $135 billion in capex for 2026. This massive investment will fund the deployment of "millions" of Nvidia GB300 and Vera Rubin Superchips, alongside additional GPUs and Spectrum-X networking equipment.

Financial Scale and Market Impact

While Nvidia has been tight-lipped about the exact scale of the expanded collaboration, the company has indicated that the deal will contribute tens of billions of dollars to its bottom line. Industry analysts estimate that at over $3.5 million per rack, and assuming 72 GPUs per rack as in Nvidia's NVL72 systems, a million GPUs would represent approximately $48 billion in hardware investment. That is a substantial figure even for Nvidia, which earned $31.9 billion in net income on revenues of $57 billion in the quarter ended Oct. 26.
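The arithmetic behind that estimate can be reproduced with a short sketch. The article does not say how many GPUs go into a rack, so the 72-GPU figure below is an assumption based on Nvidia's NVL72 rack designs, and the function name is purely illustrative. The per-rack price is the quoted floor of $3.5 million, so the result is a lower bound under these assumptions.

```python
import math

# Assumption (not stated in the article): 72 GPUs per rack, as in NVL72 systems.
GPUS_PER_RACK = 72
COST_PER_RACK_USD = 3_500_000  # "over $3.5 million per rack", used as a floor
TOTAL_GPUS = 1_000_000


def estimate_rack_spend(total_gpus, gpus_per_rack, cost_per_rack_usd):
    """Return (racks needed, total hardware cost in USD)."""
    # Round up: a partially filled rack still has to be bought.
    racks = math.ceil(total_gpus / gpus_per_rack)
    return racks, racks * cost_per_rack_usd


racks, total_usd = estimate_rack_spend(TOTAL_GPUS, GPUS_PER_RACK, COST_PER_RACK_USD)
print(f"{racks:,} racks, about ${total_usd / 1e9:.1f} billion")
```

With these inputs the estimate comes to roughly 13,900 racks and a little under $49 billion, in line with the analysts' figure of approximately $48 billion.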

This partnership represents a significant diversification for Nvidia beyond its core GPU business and validates the company's strategy of expanding into the CPU market. It also signals potential challenges for traditional CPU vendors like Intel and AMD, as well as custom Arm CPU developers, as one of the world's largest technology companies places a major bet on Nvidia's CPU technology.

Competitive Landscape

It's worth noting that Meta isn't exclusively partnering with Nvidia. The company maintains a significant fleet of AMD Instinct GPUs in its datacenters and was directly involved in the design of AMD's Helios rack systems, which are due out later this year. Meta is widely expected to deploy AMD's competing rack systems, though no formal commitment has yet been made.

This multi-vendor approach suggests that Meta is taking a pragmatic stance, selecting the best technology for specific workloads while maintaining flexibility in its infrastructure strategy. The company's willingness to work with both Nvidia and AMD on large-scale deployments demonstrates the competitive nature of the AI infrastructure market and the importance of having multiple technology options available.

The deployment of Nvidia's standalone CPUs by Meta represents more than just a hardware purchase – it's a strategic signal about the future of AI infrastructure and the growing importance of specialized computing solutions in the era of large-scale AI deployment. As the industry continues to evolve, Meta's decision may influence other hyperscalers to reconsider their CPU strategies and potentially open new opportunities for Nvidia in the datacenter CPU market.
