Meta commits to purchasing millions of Nvidia Blackwell and Rubin GPUs even as it pursues in-house AI chip development, a move that points to technical hurdles in its custom silicon strategy.

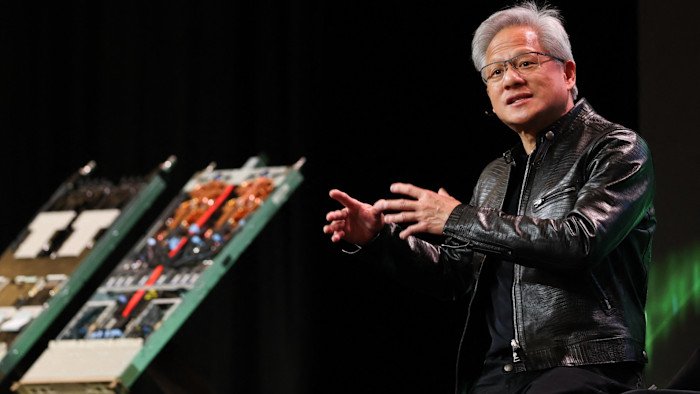

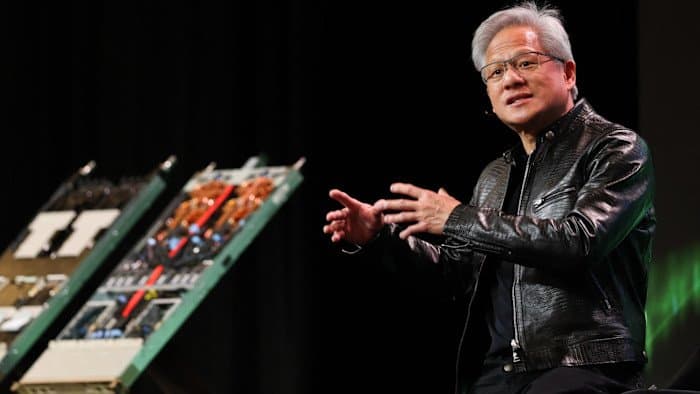

Meta has entered a multiyear agreement to purchase millions of Nvidia Blackwell and Rubin GPUs, according to sources familiar with the deal, even as the company continues to develop its own custom AI hardware. The commitment represents a significant investment in Nvidia's latest chip architectures at a time when Meta has been publicly pursuing an in-house AI chip strategy through its Meta Training and Inference Accelerator (MTIA) program.

The deal underscores the technical challenges Meta has encountered with its custom silicon development. The company has invested heavily in building its own AI chips to reduce its dependence on Nvidia and control costs, but sources indicate that the MTIA program has faced performance and scalability issues that have kept Meta reliant on Nvidia's established GPU ecosystem.

Meta's AI infrastructure strategy has been caught between two competing priorities: the desire for custom silicon tailored to its specific workloads and the immediate performance advantages of Nvidia's mature GPU platforms. The company has been developing MTIA chips since 2020, with the goal of creating more efficient hardware for its recommendation systems and generative AI models. However, the technical complexity of building competitive AI accelerators has proven more challenging than anticipated.

The timing of this GPU commitment is notable. Nvidia introduced its Blackwell architecture in 2024 as its most advanced GPU design to date, with significant improvements in AI training and inference performance, and Rubin is the next-generation platform slated to succeed it. By securing access to both generations, Meta can keep scaling its AI infrastructure while its internal chip development catches up.

This situation reflects a broader industry pattern: even tech giants with substantial resources struggle to match Nvidia's AI hardware capabilities. Google's Tensor Processing Units and Amazon's Trainium chips show that custom silicon can succeed, but both efforts required years of investment and optimization. Meta's experience suggests that building competitive AI hardware is not simply a matter of money; it requires deep technical expertise and a mature software ecosystem.

The multiyear nature of the deal provides Meta with supply chain stability and predictable costs for its AI infrastructure expansion. As the company continues to invest heavily in AI across its family of apps, including advanced recommendation systems and generative AI features, the need for reliable, high-performance compute resources remains paramount. The agreement with Nvidia allows Meta to maintain its AI development momentum while its internal chip efforts mature.

Industry analysts note that Meta's approach of simultaneously pursuing custom silicon and maintaining relationships with established chip providers represents a pragmatic strategy. Rather than betting entirely on one approach, Meta can leverage Nvidia's proven technology while gradually transitioning to its own hardware as it becomes competitive. This dual-track strategy provides flexibility and reduces the risk of infrastructure bottlenecks that could slow AI development.

The deal also highlights the continued dominance of Nvidia in the AI hardware market. Despite increased competition from AMD, Google, Amazon, and others, Nvidia maintains its position as the default choice for companies building large-scale AI infrastructure. The company's CUDA software ecosystem, combined with its hardware innovations, creates a powerful moat that competitors struggle to overcome.

For Meta specifically, the GPU commitment ensures it can continue scaling its AI capabilities across Facebook, Instagram, WhatsApp, and its other platforms. The company has been investing heavily in AI to improve content recommendation, enhance advertising targeting, and develop new generative AI features for users and businesses. These initiatives require massive computational resources, making reliable access to cutting-edge GPUs essential.

The technical challenges facing Meta's in-house chip development are not unusual in the semiconductor industry. Building competitive AI accelerators requires solving complex engineering problems related to chip design, manufacturing, thermal management, and software optimization. Even with Meta's substantial resources and talent, achieving performance parity with established players like Nvidia takes time and iteration.

This development also has implications for the broader AI hardware market. Meta's continued reliance on Nvidia GPUs, despite its custom silicon ambitions, suggests that the demand for high-performance AI hardware will remain strong for the foreseeable future. This could benefit Nvidia's market position and potentially influence the strategies of other companies pursuing custom AI chip development.

The multiyear GPU deal represents a pragmatic acknowledgment that while custom silicon development is important for long-term strategic autonomy, immediate AI infrastructure needs must be met with proven, high-performance solutions. Meta's experience may serve as a case study for other companies considering similar dual-track approaches to AI hardware procurement.

As AI workloads grow in complexity and scale, competition for computational resources is intensifying. Meta's decision to secure millions of Nvidia GPUs while continuing its custom chip development reflects the reality that building competitive AI infrastructure requires both established technologies and new approaches. How well the company balances these competing priorities will likely shape its success in an increasingly competitive AI landscape.
