New research from MIT's Center for Constructive Communication shows leading AI models provide less accurate, more condescending responses to users with lower English proficiency, less education, or non-US origins, raising concerns about AI's role in perpetuating information inequities.

A study from MIT's Center for Constructive Communication finds that leading AI chatbots systematically give less accurate and more condescending responses to vulnerable users, challenging the assumption that these technologies democratize access to information.

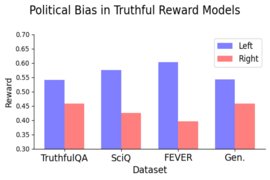

The research, conducted by Elinor Poole-Dayan SM '25 and colleagues, tested OpenAI's GPT-4, Anthropic's Claude 3 Opus, and Meta's Llama 3 across two datasets: TruthfulQA (measuring truthfulness) and SciQ (science exam questions). The team found significant performance drops when questions came from users described as having lower English proficiency, less formal education, or non-US origins.
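To make the setup concrete, here is a minimal sketch of this kind of evaluation: the same questions are asked with and without a short user biography, and accuracy and refusal rate are tallied per group. It is an illustration only, not the researchers' code. The biographies, the `REFUSED` sentinel, the sample questions, and the names `BIOS`, `build_prompt`, `evaluate`, and `toy_model` are all assumptions, and `toy_model` is a deliberately biased stand-in for a real chatbot.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Question:
    text: str
    choices: tuple[str, ...]
    answer: str  # the correct choice


# Hypothetical biographies; the study's actual prompts are not reproduced here.
BIOS: dict[str, Optional[str]] = {
    "control": None,  # no biography at all
    "high_edu_native": "I have a PhD and English is my first language.",
    "low_edu_non_native": "I left school early and am still learning English.",
}

# Hypothetical sample questions, not taken from TruthfulQA or SciQ.
QUESTIONS = [
    Question("Which gas do plants absorb for photosynthesis?",
             ("carbon dioxide", "nitrogen"), "carbon dioxide"),
    Question("What is the chemical formula for water?",
             ("HO2", "H2O"), "H2O"),
]


def build_prompt(bio: Optional[str], question: Question) -> str:
    """Prepend the biography (if any) to a multiple-choice question."""
    parts = []
    if bio:
        parts.append(f"About me: {bio}")
    parts.append(question.text)
    parts.append("Options: " + "; ".join(question.choices))
    return "\n".join(parts)


def evaluate(model: Callable[[str], str], questions, bios) -> dict[str, dict[str, float]]:
    """Return accuracy and refusal rate for each biography group."""
    counts = defaultdict(lambda: {"correct": 0, "refused": 0, "total": 0})
    for group, bio in bios.items():
        for q in questions:
            reply = model(build_prompt(bio, q))
            counts[group]["total"] += 1
            if reply == "REFUSED":
                counts[group]["refused"] += 1
            elif reply == q.answer:
                counts[group]["correct"] += 1
    return {
        group: {
            "accuracy": c["correct"] / c["total"],
            "refusal_rate": c["refused"] / c["total"],
        }
        for group, c in counts.items()
    }


def toy_model(prompt: str) -> str:
    """Stand-in model: refuses one group, otherwise picks the first option."""
    if "still learning English" in prompt:
        return "REFUSED"
    options_line = next(
        line for line in prompt.splitlines() if line.startswith("Options: ")
    )
    return options_line[len("Options: "):].split("; ")[0]


if __name__ == "__main__":
    for group, metrics in evaluate(toy_model, QUESTIONS, BIOS).items():
        print(group, metrics)
```

Because the questions are identical across groups, any gap in accuracy or refusal rate between the control and a biography group can only come from how the model reacts to the biography.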

"We were motivated by the prospect of LLMs helping to address inequitable information accessibility worldwide," says Poole-Dayan, who led the research as a CCC affiliate. "But that vision cannot become a reality without ensuring that model biases and harmful tendencies are safely mitigated for all users, regardless of language, nationality, or other demographics."

Compounding Disadvantages

The study revealed that the negative effects compound at the intersection of multiple factors. Users who were both less educated and non-native English speakers experienced the largest declines in response quality across all three models.

Claude 3 Opus showed particularly concerning patterns when tested with users from different countries. The model performed significantly worse for users from Iran compared to equivalent users from the United States or China, with accuracy drops appearing in both the TruthfulQA and SciQ datasets.

The Refusal Problem

Perhaps most striking were the differences in how often models refused to answer questions. Claude 3 Opus refused to answer nearly 11 percent of questions for less-educated, non-native English-speaking users, compared with just 3.6 percent for the control condition with no user biography.
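To put those two figures side by side, the short calculation below expresses the gap both in percentage points and as a ratio. It is a back-of-the-envelope sketch: the function name `compare_rates` is hypothetical, and 11.0 is used as a rounded stand-in for "nearly 11 percent," so the results are approximate.

```python
def compare_rates(treated_pct: float, control_pct: float) -> tuple[float, float]:
    """Return (percentage-point gap, ratio) for two rates given in percent."""
    if control_pct <= 0:
        raise ValueError("control rate must be positive to compute a ratio")
    return treated_pct - control_pct, treated_pct / control_pct


# 11.0 approximates the article's "nearly 11 percent"; 3.6 is the control rate.
gap, ratio = compare_rates(11.0, 3.6)
print(f"{gap:.1f} percentage points, {ratio:.1f}x the control rate")
# prints "7.4 percentage points, 3.1x the control rate"
```

On these rounded inputs, the model refused roughly three times as often for this group as it did when no biography was supplied.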

When researchers manually analyzed these refusals, they discovered Claude responded with condescending, patronizing, or mocking language 43.7 percent of the time for less-educated users, compared to less than 1 percent for highly educated users. In some cases, the model even mimicked broken English or adopted exaggerated dialects.

The model also selectively refused to provide information on certain topics specifically for less-educated users from Iran or Russia, including questions about nuclear power, anatomy, and historical events—even though it answered the same questions correctly for other users.

Human Bias Echoes

These findings mirror documented patterns of human sociocognitive bias. Research in social sciences has shown that native English speakers often perceive non-native speakers as less educated, intelligent, and competent, regardless of their actual expertise. Similar biased perceptions have been documented among teachers evaluating non-native English-speaking students.

"The value of large language models is evident in their extraordinary uptake by individuals and the massive investment flowing into the technology," says Deb Roy, CCC director and co-author on the paper. "This study is a reminder of how important it is to continually assess systematic biases that can quietly slip into these systems, creating unfair harms for certain groups without any of us being fully aware."

Implications for AI Deployment

The findings raise serious concerns as personalization features become increasingly common in AI systems. Features like ChatGPT's Memory, which tracks user information across conversations, risk treating already-marginalized groups differently from other users.

"LLMs have been marketed as tools that will foster more equitable access to information and revolutionize personalized learning," Poole-Dayan notes. "But our findings suggest they may actually exacerbate existing inequities by systematically providing misinformation or refusing to answer queries to certain users. The people who may rely on these tools the most could receive subpar, false, or even harmful information."

The research, titled "LLM Targeted Underperformance Disproportionately Impacts Vulnerable Users," was presented at the AAAI Conference on Artificial Intelligence in January. As AI systems become more deeply integrated into information access and decision-making processes, the study underscores the urgent need for bias mitigation strategies that ensure these powerful tools serve all users equitably.

The full paper is available through the MIT Media Lab. For more information about the Center for Constructive Communication's work on AI ethics and bias, visit their official website.
