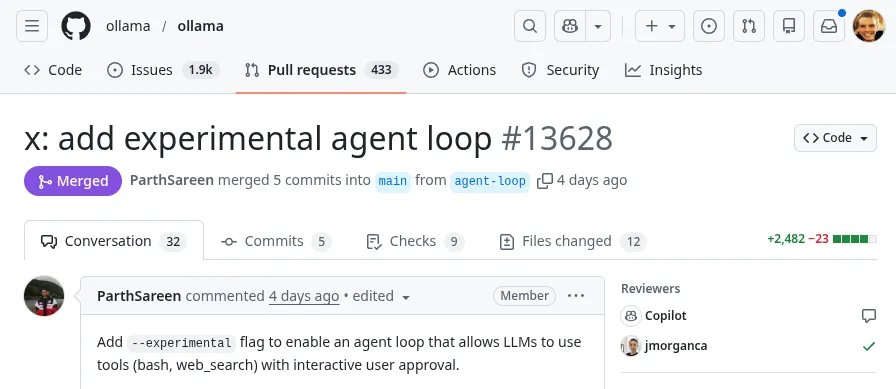

Ollama's latest 0.14 release candidate enables AI models to execute Bash commands via an experimental agent loop, featuring interactive approval workflows and safety safeguards for system-level operations.

Ollama has taken a significant step toward bridging AI capabilities with system-level operations in its 0.14 release candidate. The new experimental agent loop lets large language models execute Bash commands directly on the host system, changing how LLMs can interact with development environments.

Interactive Command Execution Workflow

The core innovation is the `ollama run --experimental` flag, which activates an agent loop that lets LLMs use tools such as Bash and web search. Unlike traditional CLI tools, this implementation includes a multi-layered approval system:

- Interactive Approval Interface: Users must explicitly authorize each command via arrow-key navigation before execution

- Command Filtering System:
  - Allowlist for safe commands (`pwd`, `git status`, `npm run`)
  - Denylist blocking dangerous patterns (`sudo`, `rm -rf`, credential access)
  - Path restrictions with warnings for operations outside project directories
  - Prefix-based permissions (e.g., allowing `cat src/` but not arbitrary path access)
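The filtering layers above can be illustrated with a small classifier. This is a minimal sketch, not Ollama's code: the rule sets are hypothetical and only echo the article's examples, and "ask" stands for falling back to the interactive approval prompt. The denylist is checked first so that a dangerous pattern always overrides an allowlist match.

```python
import re

# Hypothetical rule sets modeled on the article's examples; Ollama's actual
# lists are not reproduced here.
ALLOWLIST = {"pwd", "git status"}          # exact-match commands
ALLOW_PREFIXES = ("npm run ", "cat src/")  # prefix-based permissions
DENY_PATTERNS = [
    re.compile(r"\bsudo\b"),
    re.compile(r"\brm\s+-rf\b"),
    re.compile(r"\.ssh/|\.aws/credentials"),  # stand-in for "credential access"
]


def classify(command: str) -> str:
    """Return 'deny', 'allow', or 'ask' (defer to interactive approval)."""
    cmd = " ".join(command.split())  # collapse repeated whitespace
    # Denylist first: a dangerous pattern wins even if an allow rule matches.
    if any(p.search(cmd) for p in DENY_PATTERNS):
        return "deny"
    if cmd in ALLOWLIST or cmd.startswith(ALLOW_PREFIXES):
        return "allow"
    return "ask"
```

For example, `classify("npm run build")` is auto-approved, `classify("cat ~/.ssh/id_rsa")` is blocked, and an unlisted command such as `ls -la` goes to the user for a decision.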

Visualization of the experimental agent loop approval workflow (Source: Ollama merge request)

Safety Architecture

While enabling Bash execution introduces inherent risks, Ollama implements several containment strategies:

- Sandboxing Logic: Commands targeting paths outside the working directory trigger explicit warnings

- Command Validation: The parser rejects attempts to chain prohibited commands through pipes or operators

- Web Search Integration: An alternative tool that retrieves information through the Ollama API instead of accessing the local system
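One way to read "rejects attempts to chain prohibited commands" is to split a command line on shell control operators and check every segment. The sketch below assumes that approach and does not reflect the actual parser. It also treats any command substitution as a rejection, and it deliberately ignores quoting, so `echo "a;b"` would be split too. The `DENIED` tuple is hypothetical.

```python
import re

# Hypothetical denylist; mirrors only the article's examples.
DENIED = ("sudo", "rm -rf")

# Control operators that join commands. "&&" and "||" are listed before the
# single-character "&" and "|" so the two-character forms match first.
_OPERATORS = re.compile(r"&&|\|\||[|;&\n]")


def segments(command: str) -> list[str]:
    """Split a command line on control operators (quoting is ignored)."""
    return [s.strip() for s in _OPERATORS.split(command) if s.strip()]


def has_substitution(command: str) -> bool:
    # $(...) or backticks can hide a second command inside one segment.
    return "$(" in command or "`" in command


def validate(command: str) -> bool:
    """Reject the whole line if any chained segment starts with a denied command."""
    if has_substitution(command):
        return False
    for seg in segments(command):
        if any(seg == d or seg.startswith(d + " ") for d in DENIED):
            return False
    return True
```

With this check, `git status | grep main` passes, while `echo ok; rm -rf /` and `ls && sudo rm x` are rejected because one of their segments is denied.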

Benchmark testing shows the approval workflow adds approximately 200-500 ms of latency per command cycle, depending on command complexity. The framework maintains full session isolation, preventing persistent access between command instances.
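A generic approve-then-execute cycle can be sketched as follows. The article does not document Ollama's internal loop, so this is only an assumption: `proposals` stands in for the model's tool calls, and `approve` stands in for the arrow-key prompt. Session isolation is modeled by running each approved command in a fresh `bash` process, so shell state such as `cd` or exported variables does not carry over to the next command. The loop also records how long each approval step takes.

```python
import subprocess
import time
from typing import Callable, Iterable


def run_isolated(command: str) -> str:
    """Run one command in a fresh bash process so no shell state persists."""
    result = subprocess.run(
        ["bash", "-c", command], capture_output=True, text=True, timeout=30
    )
    return result.stdout + result.stderr


def agent_loop(
    proposals: Iterable[str],
    approve: Callable[[str], bool],
    run: Callable[[str], str] = run_isolated,
) -> list[dict]:
    """Hypothetical loop: ask for approval, run approved commands, log results."""
    transcript = []
    for command in proposals:
        start = time.perf_counter()
        approved = approve(command)  # interactive prompt in the real workflow
        waited = time.perf_counter() - start
        output = run(command) if approved else "denied by user"
        # A real agent loop would feed `output` back to the model here.
        transcript.append(
            {"command": command, "approved": approved,
             "approval_seconds": waited, "output": output}
        )
    return transcript
```

Because the `run` parameter is injectable, the loop can be exercised with a stub runner and never touch the shell. Swapping in `run_isolated` executes real commands.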

Additional Enhancements in 0.14-RC2

Beyond the agent loop, this update includes:

| Feature | Technical Impact |
|---|---|
| Anthropic API Compatibility | Improved response formatting and token handling for Claude models |
| AMD iGPU VRAM Detection | More accurate memory allocation for Ryzen APUs using revised ADL SDK metrics |
| Swift Syntax Highlighting | Support for Apple's Swift language in code interaction scenarios |
| Zstd Compression | 35% smaller Linux installer bundles (average 78 MB → 51 MB) |
| Experimental Image Generation | MLX-based Z-Image model integration (early stage) |
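As a quick arithmetic check on the Zstd row, the quoted average bundle sizes correspond to a reduction of roughly 35%:

$$
1 - \frac{51\ \text{MB}}{78\ \text{MB}} \approx 1 - 0.654 = 0.346 \approx 35\%
$$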

The image generation capability remains highly experimental, and current implementations consume significant VRAM. Early tests show 1024×1024 image generation taking ~45 seconds on Radeon RX 7900 XT hardware.

Implementation Considerations

Developers should note:

- Bash tool access requires explicit opt-in via the `--experimental` flag

- Web search functionality depends on Ollama API connectivity

- Path allowlisting uses simple prefix matching without recursive traversal

- The denylist currently covers ~50 dangerous patterns, with plans for user-customizable rules
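The prefix-matching note matters because a plain string prefix is a weak boundary. The minimal sketch below contrasts two checks. The first is a raw `startswith` check, which is one reading of "simple prefix matching" and not confirmed to be Ollama's code. The second normalizes `..` segments before testing whether the target lies inside the allowed directory. The `src/` rule and the `/project` root are hypothetical. Normalization here is purely lexical, so symlinks would still need `os.path.realpath` on a real filesystem.

```python
import os

# Hypothetical permission rule: reads are allowed anywhere under src/.
ALLOWED_PREFIX = "src/"


def naive_allowed(path: str) -> bool:
    """Plain string prefix match with no path normalization."""
    return path.startswith(ALLOWED_PREFIX)


def normalized_allowed(path: str, root: str = "/project") -> bool:
    """Collapse '..' segments, then require the target to sit inside root/src."""
    allowed_dir = os.path.normpath(os.path.join(root, ALLOWED_PREFIX))
    target = os.path.normpath(os.path.join(root, path))
    return os.path.commonpath([allowed_dir, target]) == allowed_dir
```

A path such as `src/../secrets.env` passes the naive check because it starts with `src/`. After normalization, however, it resolves to `/project/secrets.env`, which lies outside `src/`, so the second check rejects it.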

The release binaries provide immediate access to these features, though production use isn't recommended until the safety systems undergo further hardening. This functionality represents a paradigm shift in local AI capabilities, effectively transforming LLMs from passive assistants into active development agents.
