OpenAI is deploying an age prediction system for ChatGPT that uses behavioral signals to estimate whether an account belongs to a user under 18, applying additional content safeguards for teens while maintaining full access for adults. The model combines signals such as account age, usage patterns, and stated age, with a selfie-based verification fallback through Persona.

OpenAI has begun rolling out an age prediction model for ChatGPT consumer plans, a system designed to automatically estimate whether an account likely belongs to someone under 18. This isn't a simple age gate: it's a behavioral analysis tool that aims to apply appropriate safeguards for teens while preserving the full experience for adults. OpenAI frames the move as part of its broader Teen Safety Blueprint, a framework built on the premise that young people deserve technology that expands opportunity while protecting their well-being.

How the Model Actually Works

The age prediction system relies on a combination of behavioral and account-level signals. It examines how long an account has existed, typical times of day when the user is active, usage patterns over time, and any stated age information the user provides. This multi-signal approach attempts to infer age without requiring explicit verification for every user, which would create friction and privacy concerns.
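OpenAI has not published how its model weighs these signals, so the following is only a minimal sketch of the general idea: several account-level signals folded into one probability with a logistic (sigmoid) model, a stand-in technique chosen for illustration. The feature names (`account_age_days`, `after_school_share`, `stated_age`), the 30-day cutoff, and every weight below are hypothetical values invented here, not learned parameters.

```python
import math
from dataclasses import dataclass
from typing import Optional

# Hypothetical, hand-picked weights for illustration only; a production model
# would learn its parameters from labeled data and use far richer features.
BIAS = -1.0
W_STATED_MINOR = 3.0    # user said they are under 18
W_STATED_ADULT = -2.0   # user said they are 18 or older
W_NEW_ACCOUNT = 0.5     # account created in the last 30 days (hypothetical cutoff)
W_AFTER_SCHOOL = 1.5    # scales with share of activity on weekday afternoons


@dataclass
class AccountSignals:
    account_age_days: int        # how long the account has existed
    after_school_share: float    # hypothetical: fraction of activity on weekday afternoons (0..1)
    stated_age: Optional[int]    # None if the user never gave an age


def minor_probability(s: AccountSignals) -> float:
    """Combine signals into an estimated probability that the account belongs to a minor."""
    z = BIAS
    if s.stated_age is not None:
        z += W_STATED_MINOR if s.stated_age < 18 else W_STATED_ADULT
    if s.account_age_days < 30:
        z += W_NEW_ACCOUNT
    z += W_AFTER_SCHOOL * s.after_school_share
    return 1.0 / (1.0 + math.exp(-z))  # logistic (sigmoid) link


teen_like = AccountSignals(account_age_days=10, after_school_share=0.7, stated_age=15)
adult_like = AccountSignals(account_age_days=900, after_school_share=0.1, stated_age=35)
print(round(minor_probability(teen_like), 3), round(minor_probability(adult_like), 3))
```

With these made-up weights the teen-like account scores about 0.97 and the adult-like account about 0.05. No single signal decides the outcome; each one shifts the score, which is what lets the approach work without asking every user for verification.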

OpenAI is treating this as a learning system. As the model rolls out, the company will gather data on which signals prove most predictive and refine the model over time. This iterative approach is common in machine learning deployments but carries inherent risks: early accuracy will shape the user experience, and errors could either expose teens to inappropriate content or impose unnecessary restrictions on adults.

When the model estimates an account may belong to someone under 18, ChatGPT automatically applies additional protections designed to reduce exposure to sensitive content. These restrictions target material that could be particularly harmful or inappropriate for minors, including graphic violence or gory content, viral challenges that might encourage risky behavior, sexual or violent role play, depictions of self-harm, and content promoting extreme beauty standards, unhealthy dieting, or body shaming.

These restrictions are guided by input from experts and by academic literature on child development, which recognizes that teens process risk differently from adults because of developmental differences in impulse control, susceptibility to peer influence, and emotional regulation. The company notes that these safeguards reduce exposure to sensitive material rather than eliminate it, and says it is focused on continually improving them, especially against attempts to bypass them.

The Verification Fallback

A critical component of the system is the handling of incorrect predictions. Users who are incorrectly placed in the under-18 experience can confirm their age and restore full access through a selfie verification process using Persona, a secure identity-verification service. The process is available under Settings > Account, where users can also check at any time which safeguards have been applied to their account.

The system defaults to a safer experience when OpenAI is not confident about someone's age or has incomplete information. This conservative approach prioritizes safety over convenience, though it may create friction for users who are incorrectly categorized.
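The routing described in this section can be sketched as a small decision function: a verified age overrides the prediction, and anything short of a confident adult score falls back to the under-18 experience. This is a hypothetical illustration; the `Experience` enum, `choose_experience`, and the 0.2 threshold are invented here and are not OpenAI's. Treating a verified age under 18 as binding is also an assumption, since the article only describes verification restoring access for adults.

```python
from enum import Enum
from typing import Optional


class Experience(Enum):
    ADULT = "adult"
    UNDER_18 = "under_18"


# Hypothetical operating point: serve the adult experience only when the
# predicted probability of being a minor is at or below this value.
ADULT_MAX_MINOR_PROB = 0.2


def choose_experience(minor_prob: Optional[float], verified_age: Optional[int]) -> Experience:
    """Choose which experience to serve for an account (illustrative only).

    minor_prob: estimated probability that the account belongs to a minor, or
        None when there is too little information to score the account.
    verified_age: age confirmed through an identity check (for example a selfie
        check with a provider such as Persona), or None if the user never verified.
    """
    if verified_age is not None:
        # Assumption: a confirmed age overrides the behavioral prediction either way.
        return Experience.ADULT if verified_age >= 18 else Experience.UNDER_18
    if minor_prob is None:
        # Incomplete information: default to the safer experience.
        return Experience.UNDER_18
    if minor_prob <= ADULT_MAX_MINOR_PROB:
        # The model is fairly confident the user is an adult.
        return Experience.ADULT
    # Likely minor, or simply uncertain: default to the safer experience.
    return Experience.UNDER_18


print(choose_experience(0.5, None))  # Experience.UNDER_18
print(choose_experience(0.5, 34))    # Experience.ADULT
```

The asymmetry is deliberate: an uncertain score such as 0.5 lands in the safer experience, and only a confident low score or a completed verification unlocks adult access. That is the "safety over convenience" trade-off expressed as a threshold.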

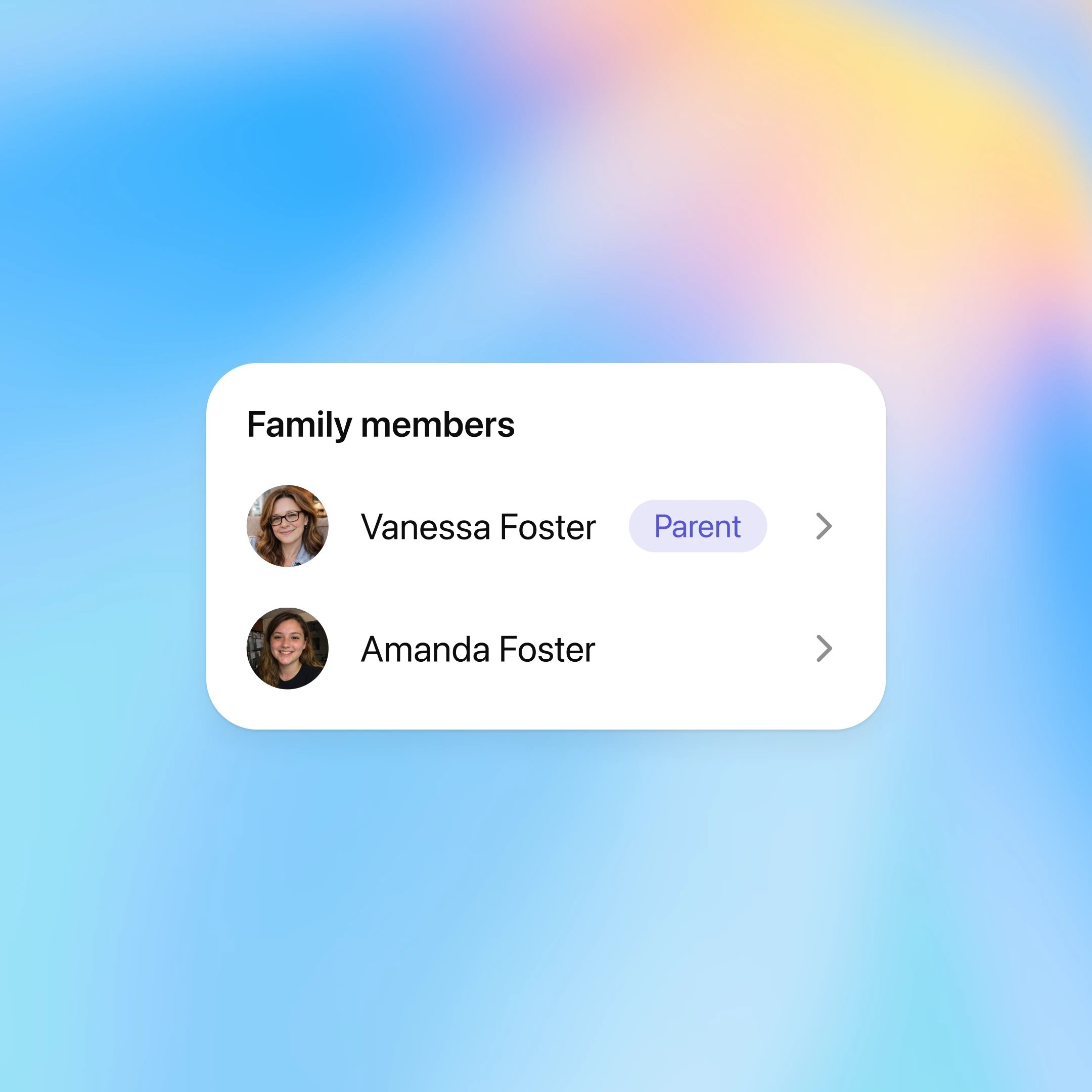

Parental Controls and Customization

Beyond the automated age prediction, OpenAI offers parental controls that allow parents to customize their teen's experience further. These controls include setting quiet hours when ChatGPT cannot be used, controlling features such as memory or model training, and receiving notifications if signs of acute distress are detected.
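Quiet hours reduce to a time-window check, but windows that cross midnight (for example 22:00 to 07:00) need care. The following is a minimal sketch, assuming quiet hours are stored as a local start and end time; `in_quiet_hours` and this representation are hypothetical and do not reflect ChatGPT's actual settings format.

```python
from datetime import time


def in_quiet_hours(now: time, start: time, end: time) -> bool:
    """Return True if `now` falls inside the quiet-hours window [start, end).

    Handles windows that wrap past midnight, such as 22:00-07:00.
    """
    if start == end:
        return False  # assumption: an empty window means "no quiet hours"
    if start < end:
        return start <= now < end      # same-day window, e.g. 13:00-15:00
    return now >= start or now < end   # window wraps past midnight


print(in_quiet_hours(time(23, 30), time(22, 0), time(7, 0)))  # True
print(in_quiet_hours(time(12, 0), time(22, 0), time(7, 0)))   # False
```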

This layered approach—automated age prediction plus parental controls—reflects a recognition that different families have different needs and comfort levels with technology. It also acknowledges that automated systems alone cannot solve the complex challenge of keeping teens safe online.

Regional Considerations and Ongoing Development

The rollout is happening in phases, with the EU receiving the age prediction system in the coming weeks to account for regional requirements. This geographic consideration is important, as different jurisdictions have varying regulations around age verification and data privacy.

OpenAI is tracking the rollout closely and using the signals it observes to guide ongoing improvements. The company is working with experts including the American Psychological Association, ConnectSafely, and the Global Physicians Network to inform its approach.

The Broader Context

This age prediction system represents a significant technical and ethical challenge. On one hand, there's clear value in protecting teens from potentially harmful content. On the other, automated systems that infer age from behavior raise questions about accuracy, bias, and privacy.

The model's effectiveness will depend heavily on its error rates in both directions. If too many adults are incorrectly flagged as teens (false positives, treating "minor" as the positive class), the system could create frustration and undermine trust. If too many teens slip through (false negatives), the safeguards fail their primary purpose. The verification fallback helps only with the first kind of error, and it places the burden on users to correct it.
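This trade-off is usually quantified with two error rates on a labeled evaluation set: the share of adults wrongly placed in the teen experience (false positive rate) and the share of minors who are missed (false negative rate), both depending on the score threshold. The following is a minimal sketch; the function name, the threshold values, and the toy data are made up for illustration and say nothing about how OpenAI's model actually performs.

```python
from typing import Sequence


def error_rates(probs: Sequence[float], is_minor: Sequence[bool], threshold: float) -> tuple[float, float]:
    """Return (false_positive_rate, false_negative_rate), treating "minor" as the positive class.

    An account is flagged as a minor when its predicted probability is >= threshold.
    FPR = adults wrongly flagged / all adults; FNR = minors missed / all minors.
    """
    if len(probs) != len(is_minor):
        raise ValueError("probs and is_minor must have the same length")
    false_pos = false_neg = adults = minors = 0
    for p, minor in zip(probs, is_minor):
        flagged = p >= threshold
        if minor:
            minors += 1
            if not flagged:
                false_neg += 1  # a teen who slips through
        else:
            adults += 1
            if flagged:
                false_pos += 1  # an adult placed in the under-18 experience
    fpr = false_pos / adults if adults else 0.0
    fnr = false_neg / minors if minors else 0.0
    return fpr, fnr


# Toy, made-up evaluation data: three minors followed by three adults.
probs = [0.9, 0.6, 0.3, 0.1, 0.7, 0.28]
is_minor = [True, True, True, False, False, False]
for t in (0.5, 0.25):
    fpr, fnr = error_rates(probs, is_minor, t)
    print(f"threshold={t}: FPR={fpr:.2f}, FNR={fnr:.2f}")
```

On this toy data, lowering the threshold from 0.5 to 0.25 cuts the false negative rate from 0.33 to 0.00 but raises the false positive rate from 0.33 to 0.67. Fewer teens slip through, and more adults end up needing the verification fallback.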

The behavioral signals used also raise questions about what patterns truly correlate with age. Usage times, for instance, might correlate with school schedules for teens, but adults also have work schedules that create similar patterns. The model must distinguish between these contexts, which is a complex machine learning problem.

Technical and Ethical Trade-offs

OpenAI's approach reflects a broader trend in tech: using machine learning to solve complex social problems. The company is attempting to automate a decision that traditionally required explicit user input or parental oversight. This automation offers scale but introduces the risks inherent in any AI system—bias, error, and opacity.

The system also highlights the tension between safety and autonomy. Teens receive additional protections, but adults maintain full access. This distinction is reasonable but requires careful implementation to avoid overreach or under-protection.

The use of Persona for verification adds another layer. While it provides a secure way to confirm age, it also requires users to share biometric data—a significant privacy consideration. The company must balance the need for verification with respect for user privacy.

What Comes Next

OpenAI acknowledges this is an important milestone but not the final solution. The company will continue to share updates on progress and what it learns from the rollout. This transparency is crucial, especially given the sensitive nature of age-related systems.

The age prediction model will likely evolve as more data is collected and the system is refined. Future iterations might incorporate additional signals, improve accuracy, or adjust the balance between automated safeguards and user control.

For now, the system represents a significant step in OpenAI's approach to responsible AI deployment. It's an attempt to use technology to solve a technology-related problem—how to keep young people safe while using powerful AI tools—while acknowledging that no automated system is perfect and human oversight remains essential.

The success of this rollout will be measured not just by technical accuracy but by how well it protects teens without unnecessarily restricting adults. It's a complex balance that will require ongoing attention and refinement from OpenAI and the broader AI community.
