Cisco researchers uncovered widespread security lapses in large language model deployments, identifying over 1,100 publicly accessible Ollama servers vulnerable to unauthorized access and prompt injection. The study leverages Shodan scanning to reveal how default configurations enable risks like model theft and resource hijacking, demanding urgent industry-wide security reforms.

The democratization of large language models (LLMs) through tools like Ollama has revolutionized AI accessibility—but at a steep security cost. A new study by Cisco Talos reveals that over 1,100 Ollama servers are exposed to the public internet, with 20% actively hosting models vulnerable to exploitation. This isn't theoretical: attackers can execute unauthorized prompts, steal proprietary models, or even hijack computational resources, all due to basic misconfigurations that prioritize convenience over security.

The Invisible Epidemic of Exposed AI Endpoints

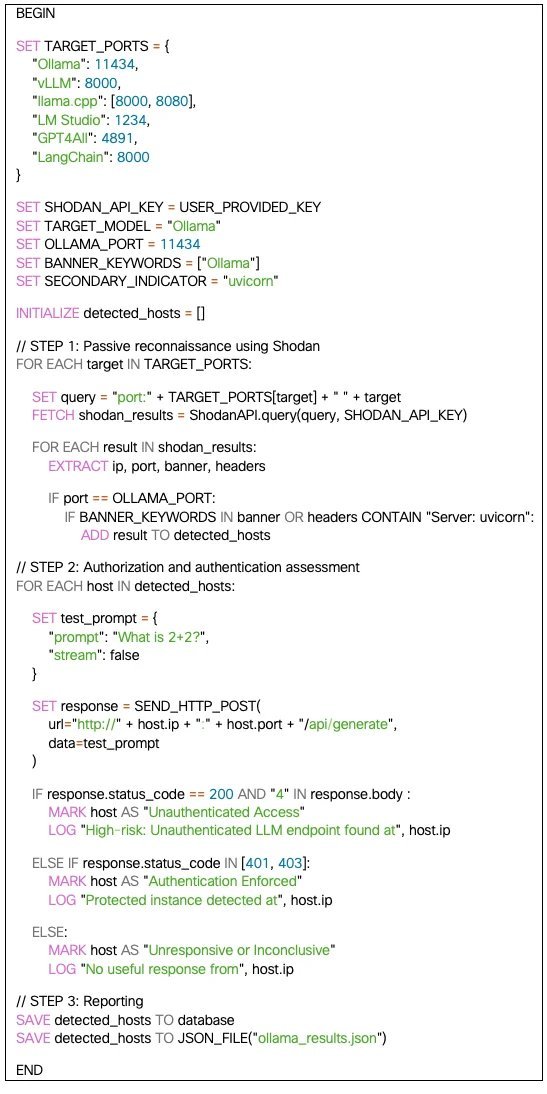

Researchers Dr. Giannis Tziakouris and Elio Biasiotto developed a Python-based tool that leveraged Shodan—a search engine for internet-connected devices—to scan for Ollama instances. By targeting default port 11434 and signature banners like Server: "uvicorn", they identified servers lacking authentication. The approach avoided active probing to minimize ethical concerns, instead relying on Shodan's indexed data. As Dr. Tziakouris notes:

"These deployments often reflect a 'deploy first, secure later' mindset. Many organizations don't realize their LLM APIs are as exposed as an unpatched WordPress site."

{{IMAGE:5}}

Stark Findings: Global Exposure and Live Threats

The results were alarming:

- 1,139 exposed servers detected, with 214 actively serving models like Mistral and LLaMA.

- Geographically concentrated in the U.S. (36.6%), China (22.5%), and Germany (8.9%).

- 88.89% used OpenAI-compatible API routes (e.g.,

v1/chat/completions), creating a uniform attack surface for automated exploits.

A simple test prompt—What is 2+2?—returned 4 with an HTTP 200 status on vulnerable servers, proving trivial unauthorized access. Dormant servers posed equal risk, allowing attackers to upload malicious models or trigger resource-intensive operations.

Why Developers Should Care

This isn't just about data leaks. The implications cascade across the AI stack:

- Model Extraction: Repeated queries can reconstruct proprietary models.

- Prompt Injection: Unsecured endpoints enable jailbreaking for malware generation or disinformation.

- Resource Hijacking: Attackers abuse free computation, inflating cloud costs.

- Supply Chain Risks: Compromised models could poison downstream applications.

The homogeneity of OpenAI-style APIs means a single exploit script could target thousands of endpoints—a bonanza for malicious actors.

Mitigating the Crisis: Practical Steps for Teams

The report prescribes layered defenses:

- Enforce Authentication: Mandate API keys or OAuth 2.0 (reference: OWASP API Security Top 10).

- Isolate Networks: Deploy LLMs behind firewalls or VPCs, never on public IPs.

- Obfuscate Signatures: Change default ports and suppress server banners (e.g., disable

uvicornidentifiers). - Adopt Rate Limiting: Use tools like Kong to throttle suspicious requests.

# Example: Basic Ollama auth check using Python requests

import requests

response = requests.post("http://ollama-host:11434/api/generate",

json={"model": "mistral", "prompt": "2+2"})

if response.status_code == 200:

print("VULNERABLE: No authentication enforced")

Beyond Ollama: A Broader Call to Action

While focused on Ollama, the study underscores a systemic failure in AI deployment hygiene. As open-source LLMs proliferate, security can't remain an afterthought. Future work must expand to frameworks like Hugging Face and vLLM, integrating Censys or Nmap for broader scans. For now, every exposed server is a ticking clock—and the time for complacency is over.

Source: Detecting Exposed LLM Servers: A Shodan Case Study on Ollama by Dr. Giannis Tziakouris and Elio Biasiotto, Cisco Talos.

Comments

Please log in or register to join the discussion