Security researchers warn that viral AI-generated work caricatures shared on social media could expose users and their employers to social engineering attacks, data theft, and account takeovers.

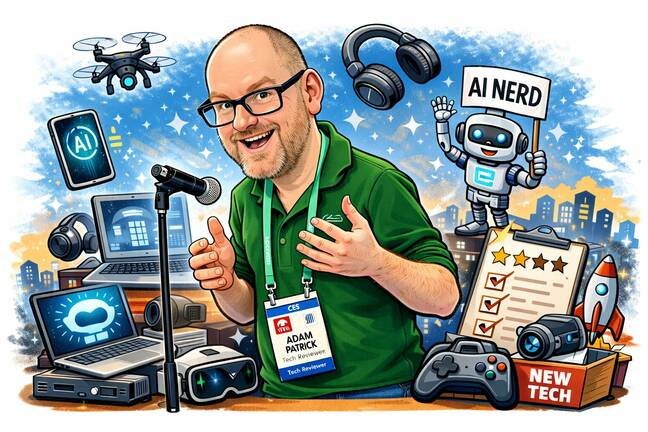

If you've seen the viral AI work pic trend where people are asking ChatGPT to "create a caricature of me and my job based on everything you know about me" and sharing it to social, you might think it's harmless. You'd be wrong.

Fortra security analyst Josh Davies says it puts people and their employers at risk of social engineering attacks, LLM account takeovers, and sensitive data theft. "At the time of writing, this is a hypothetical risk," Davies told The Register. "But given the scale of participants publicly posting this trend, we believe it is highly likely that some could be exploited in this way with the LLM account takeover. The fact that users are posting this personal work information publicly and using a prompt that said 'based on everything you know about me' it is feasible that sensitive information related to their employer could be viewable in the prompt history if takeover is successful."

As of February 8, Davies says 2.6 million of these images have been added to Instagram with links to users' profiles, including both private and public accounts. "I am currently looking through different posts, and have identified a banker, a water treatment engineer, HR employee, a developer and a doctor in the last 5 posts I viewed," he said in a Wednesday blog.

Sometimes the model will ask the user for more context before it creates their cartoon image. But even without those extra details, these caricatures signal to an attacker that the person uses an LLM at work - meaning there's a chance they input company data into a publicly available model. As The Register has previously reported, many employees use personal chatbot accounts to help them do their jobs, and most companies have no idea how many AI agents and other systems have access to their corporate apps and data.

"Many users do not realise the risks of inputting sensitive data into prompts or may make mistakes when looking to use LLMs to augment their tasks," Davies wrote. "Even fewer understand that this data is saved in their prompt history and (although unlikely) could even be returned to another user, by accident, or intentionally in responses."

An attacker could combine the individual's social media username, profile information, and clues from the LLM-generated image to figure out the person's email address using search engine queries or open-source intelligence, he explained. "Account takeover would not require a sophisticated or especially technical actor," Davies told The Register. "Much of the information [in] the public images will support doxing and spear phishing, which would increase the ease and chances of a successful social engineering attack."

While LLM account takeover is the most likely risk and requires the least technical skill, prompt injection and jailbreaking are also possibilities, although Davies told us those require "a high level of sophistication....While not impossible, it is highly unlikely we would see this."

To prevent this type of unintentional data leakage, Davies says organizations first need visibility into LLM and AI usage by employees, and then governance policies to identify unapproved apps and limit their access to corporate systems and data. He also recommends monitoring for compromised credentials. While this example focuses on personal LLM accounts as these are more likely to be used for social media posts, "compromised corporate credentials would be even more damaging," he noted. ®
