A novel technique transforms photorealistic scenes into interactive 3D line drawings by combining 3D Gaussian Splatting with AI-powered image stylization. By swapping the original photos for generated line art at selected points in the reconstruction pipeline, developers can create view-dependent sketches that preserve geometry while introducing artistic styles. This hybrid approach opens new possibilities for stylized real-time visualization and computational art.

The quest to translate physical reality into expressive digital line art has taken a significant leap forward through an innovative fusion of neural rendering and computer graphics techniques. Researcher Amritansh Kwatra demonstrates how combining 3D Gaussian Splatting with AI-powered line drawing generation creates interactive 3D sketches that preserve both geometric accuracy and artistic expression.

The Technical Fusion

The method combines ideas from two influential papers:

- 3D Gaussian Splatting (Kerbl et al.): Turns multi-view images with known camera poses into a set of 3D Gaussians that can be rendered in real time

- Informative Line Drawings (Chan, Isola & Durand): Uses a GAN trained with geometry, semantics, and appearance losses to convert photos into stylized sketches
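
For context, Kerbl et al. render each pixel by alpha-compositing the depth-sorted Gaussians that overlap it, front to back:

```latex
C = \sum_{i \in \mathcal{N}} c_i \, \alpha_i \prod_{j=1}^{i-1} \left(1 - \alpha_j\right)
```

Here $c_i$ is the view-dependent color of Gaussian $i$ (encoded with spherical harmonics) and $\alpha_i$ is its learned opacity weighted by the projected 2D Gaussian at that pixel. Nothing in this formula is specific to photographs: the look of the result comes from the training images that supervise $c_i$ and $\alpha_i$, which is what makes an image swap possible.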

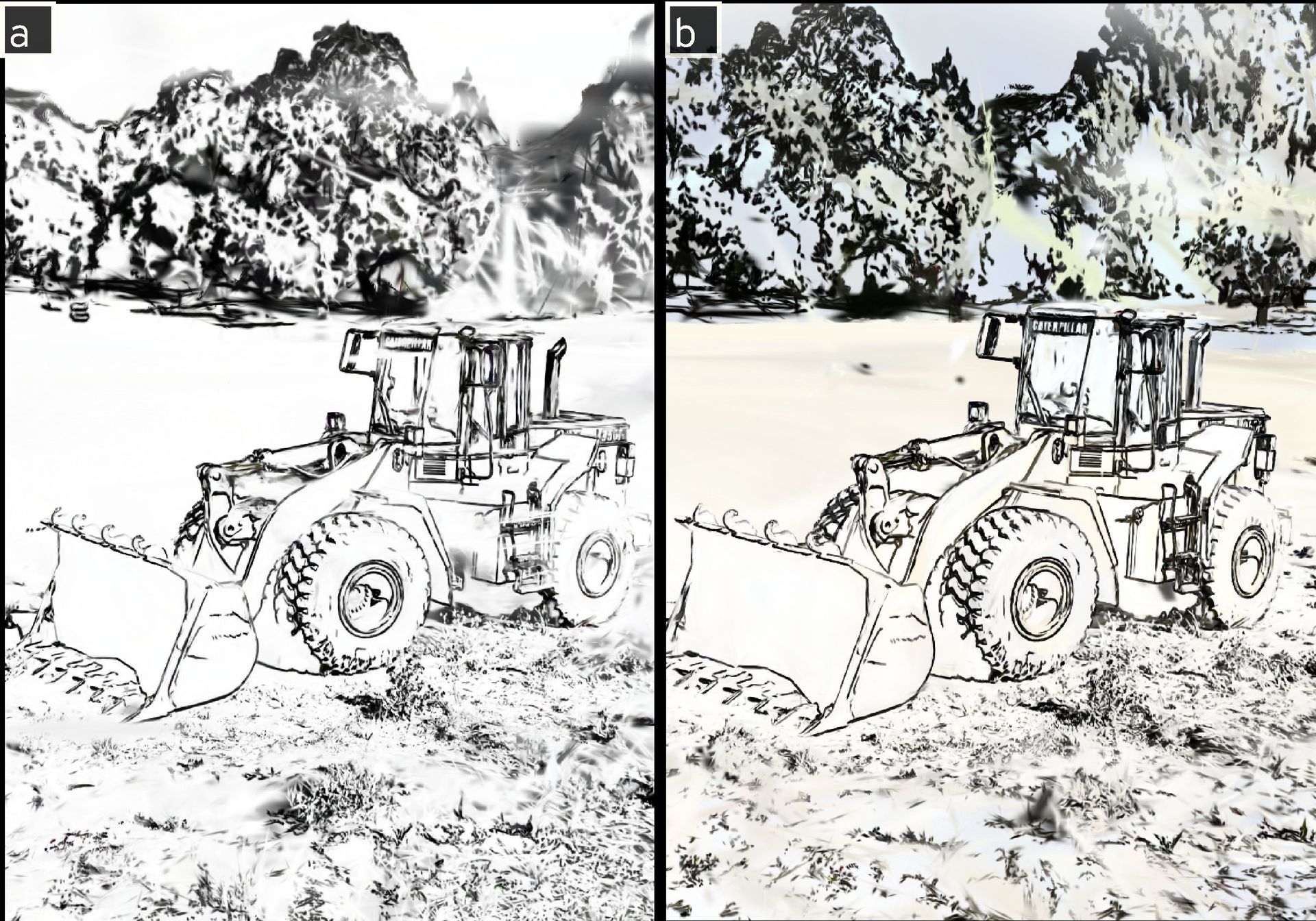

Figure 1: Original photo (a) vs. contour style (b) and anime style (c) line drawings generated using Chan et al.'s technique. (Source: Amritansh Kwatra)

The core idea is to replace the original photographs with line-drawn versions at a chosen point in the Gaussian Splatting pipeline. As Kwatra explains: "We simply swap the original images with the ones generated using Chan et al.'s method." The substitution can occur at two points:

- Pre-Structure from Motion (SfM): Replacing images before camera pose estimation

- Pre-Gaussian Training: Swapping images before the splatting optimization phase
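
A minimal sketch of the pre-training swap, assuming a COLMAP-style scene folder (`images/` plus a `sparse/` model, the layout the reference Gaussian Splatting code reads). The helper `swap_training_images` and the `stylize` callback are hypothetical: `stylize` stands in for whatever runs the line-drawing model on one image. The key detail is that stylized images keep their original file names, so the existing camera poses still match them.

```python
import shutil
from pathlib import Path
from typing import Callable, List

IMAGE_EXTS = {".jpg", ".jpeg", ".png"}


def swap_training_images(scene_dir: Path, out_dir: Path,
                         stylize: Callable[[Path, Path], None]) -> List[str]:
    """Build a copy of a COLMAP-style scene whose images are stylized.

    Assumed layout: scene_dir/images/* and scene_dir/sparse/ (SfM output).
    Returns the names of the images that were swapped.
    """
    src_images = scene_dir / "images"
    dst_images = out_dir / "images"
    dst_images.mkdir(parents=True, exist_ok=True)

    # Reuse the SfM output unchanged, so COLMAP does not rerun per style.
    sparse = scene_dir / "sparse"
    if sparse.exists():
        shutil.copytree(sparse, out_dir / "sparse", dirs_exist_ok=True)

    swapped = []
    for img in sorted(src_images.iterdir()):
        if img.suffix.lower() in IMAGE_EXTS:
            # Hypothetical stylizer: reads img, writes the line drawing.
            # Same file name, so the stored camera poses still apply.
            stylize(img, dst_images / img.name)
            swapped.append(img.name)
    return swapped
```

For the pre-SfM variant, the stylized `images/` folder would instead be fed to COLMAP before training, at the cost of rerunning pose estimation for every style.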

Figure 2: Image swap points in the Gaussian Splatting pipeline. (Adapted from Kerbl et al.)

Figure 2: Image swap points in the Gaussian Splatting pipeline. (Adapted from Kerbl et al.)

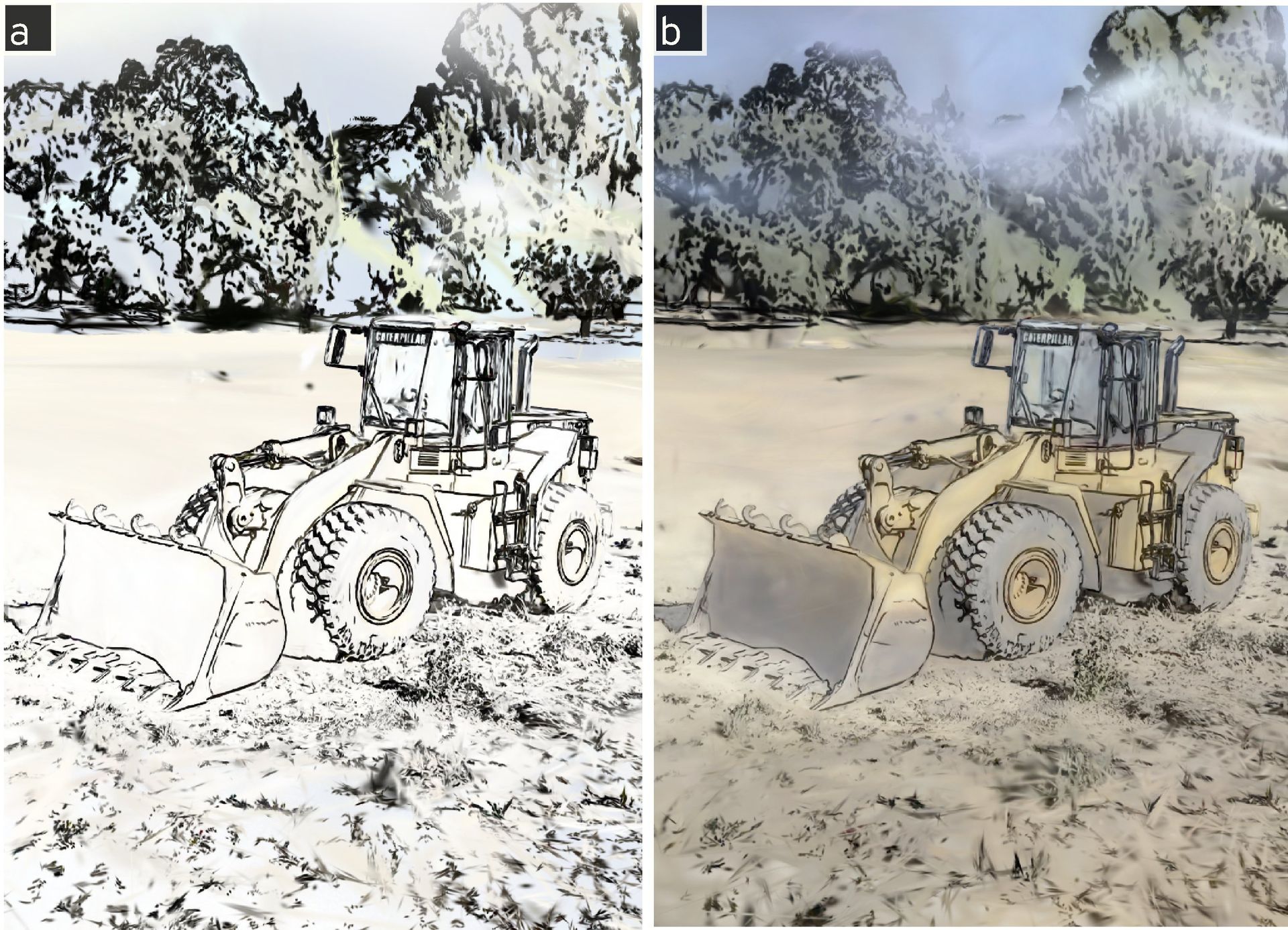

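Swapping before training proved more practical when iterating on styles, because the camera poses computed by COLMAP on the original photos can be reused instead of recomputed. However, Kwatra notes that swapping before SfM eliminates color artifacts: in the pre-training variant, the Gaussians are initialized from the SfM point cloud, whose colors come from the original photos.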

{{IMAGE:3}} Figure 3: Visual comparison of pre-SfM (a) vs. pre-training (b) swap approaches. (Source: Amritansh Kwatra)
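
Why the pre-training swap leaves color behind can be seen from how Gaussians are initialized. Assuming the reference 3DGS implementation, each SfM point's RGB color is converted into the degree-0 (DC) spherical-harmonic coefficient of the Gaussian created at that point; the sketch below reproduces that conversion. The function names are illustrative.

```python
# Degree-0 real spherical harmonic basis value, Y_0^0 = 1 / (2 * sqrt(pi)),
# as used by the reference 3DGS code to store base colors.
SH_C0 = 0.28209479177387814


def rgb_to_sh_dc(rgb):
    """Map an RGB color in [0, 1] to DC spherical-harmonic coefficients."""
    return tuple((c - 0.5) / SH_C0 for c in rgb)


def sh_dc_to_rgb(sh):
    """Inverse mapping: recover the view-independent base color."""
    return tuple(s * SH_C0 + 0.5 for s in sh)
```

For example, a Gaussian seeded from a brown wooden surface in the photo starts near `rgb_to_sh_dc((0.55, 0.35, 0.2))`, while the line drawing wants near-white paper there. Optimization has to pull it the whole way, and weakly supervised Gaussians can plausibly keep a tint. After a pre-SfM swap, the point cloud is triangulated from the line drawings themselves, so the initial colors already match the target style.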

Enhancing Artistic Expression

The basic swap also supports several creative extensions:

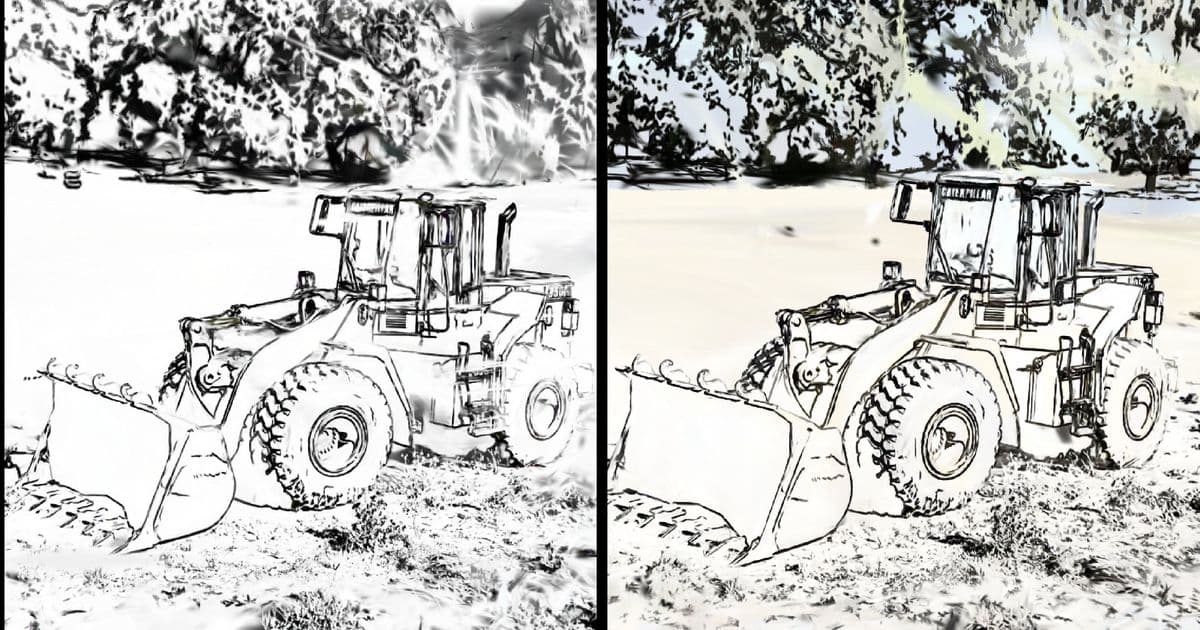

Color Integration: By blending low-frequency color data from original images into line drawings, Kwatra achieved watercolor-like effects:

{{IMAGE:4}} Figure 4: Pure contour (a) vs. color-blended (b) results. (Source: Amritansh Kwatra)
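
The article does not specify how the color was blended, so the following is only one plausible recipe, written in plain Python for clarity: low-pass the photo with a box blur to keep only coarse color, then multiply it into the drawing so that paper areas take on a soft wash while ink lines stay dark. The function names, the box blur, and the `strength` parameter are assumptions.

```python
def box_blur(channel, radius):
    """Box-blur a 2D list of floats (a crude low-pass filter).

    Near the borders, only in-bounds neighbours are averaged.
    """
    h, w = len(channel), len(channel[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        total += channel[yy][xx]
                        count += 1
            out[y][x] = total / count
    return out


def blend_color_wash(lines, photo_rgb, radius=2, strength=1.0):
    """Tint a line drawing with low-frequency color from the photo.

    lines: 2D list, 1.0 = white paper, 0.0 = ink.
    photo_rgb: three 2D lists (R, G, B) with values in [0, 1].
    strength: 0 keeps the pure drawing, 1 applies the full wash.
    Returns three 2D lists (R, G, B).
    """
    h, w = len(lines), len(lines[0])
    result = []
    for channel in photo_rgb:
        wash = box_blur(channel, radius)  # keep only coarse color
        result.append([
            # Multiplicative blend: ink (0) stays dark, paper takes the wash.
            [lines[y][x] * (1.0 - strength + strength * wash[y][x])
             for x in range(w)]
            for y in range(h)
        ])
    return result
```

A larger `radius` gives a looser, more watercolor-like wash; in practice an image library's Gaussian blur would replace the nested loops.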

Style Splicing: Because each Gaussian's color is view-dependent, different viewpoints can render distinct styles within the same scene. Views from one hemisphere might show photorealism while views from the other display line art, creating seamless transitions as users navigate.
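
One way to set up such a split, sketched under the assumption that training views are divided by a plane through the scene center: each camera gets the line drawing if it lies on one side and the original photo otherwise. `style_for_camera`, `assign_styles`, and the `split_axis` parameter are hypothetical; the article does not say how the hemispheres were chosen.

```python
from typing import Dict, Sequence


def style_for_camera(camera_center: Sequence[float],
                     scene_center: Sequence[float],
                     split_axis: Sequence[float]) -> str:
    """Return 'line' for cameras on the split_axis side of the plane, else 'photo'."""
    offset = [c - s for c, s in zip(camera_center, scene_center)]
    # Sign of the dot product tells which half-space the camera is in.
    side = sum(o * a for o, a in zip(offset, split_axis))
    return "line" if side > 0 else "photo"


def assign_styles(cameras: Dict[str, Sequence[float]],
                  scene_center: Sequence[float],
                  split_axis: Sequence[float]) -> Dict[str, str]:
    """Map each training image name to the style its camera should use."""
    return {name: style_for_camera(pos, scene_center, split_axis)
            for name, pos in cameras.items()}
```

Training on the resulting mix of photos and drawings leaves it to the view-dependent color model to reconcile the two styles between the hemispheres.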

Object-Specific Stylization: Using Meta's Segment Anything Model (SAM), Kwatra selectively replaced subjects with line drawings while preserving background realism:

{{IMAGE:5}} Figure 6: Object-specific stylization via segmentation. (Source: Amritansh Kwatra)
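
Per training image, this replacement reduces to a masked composite. A minimal sketch, assuming a per-pixel subject mask such as SAM produces (1 or `True` on the subject, 0 or `False` elsewhere) and single-channel images stored as nested lists; `composite_with_mask` is a hypothetical helper, and the composited images would then go through the same swap-and-train steps.

```python
def composite_with_mask(photo, lines, mask):
    """Use the line drawing inside the mask and keep the photo elsewhere.

    photo, lines: 2D lists of channel values in [0, 1].
    mask: 2D list of booleans or soft weights in [0, 1].
    """
    return [
        # Linear blend: weight m on the drawing, (1 - m) on the photo.
        [m * l + (1.0 - m) * p for p, l, m in zip(prow, lrow, mrow)]
        for prow, lrow, mrow in zip(photo, lines, mask)
    ]
```

Soft mask values (for example, a feathered mask edge) give a gradual hand-off between sketch and photo instead of a hard cut.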

Technical Observations

- Resolution Impact: Lower resolutions capture major contours, while higher resolutions preserve finer details

- Performance: Line drawing scenes require roughly twice as many splats as photorealistic equivalents, because Gaussians are inefficient at modeling thin strokes

- Training Times: 1080p scenes required ~15 minutes on an RTX 4080S, versus ~4 minutes at 460x256
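
Putting the reported timings next to pixel counts, assuming 1080p means 1920×1080:

```latex
\frac{1920 \times 1080}{460 \times 256} = \frac{2\,073\,600}{117\,760} \approx 17.6,
\qquad
\frac{15\ \text{min}}{4\ \text{min}} = 3.75
```

Roughly 17.6 times more pixels per image cost about 3.75 times more training time in this experiment, so time grew much more slowly than resolution. With only two data points, this is an observation about these runs rather than a scaling law.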

Implications for Developers

This technique demonstrates how neural rendering pipelines can be creatively repurposed for artistic applications. Its modular approach, which decouples capture (SfM), representation (Gaussians), and stylization (GANs), enables new workflows for:

- Stylized real-time visualization

- Computational art installations

- Non-photorealistic game assets

- Educational visualization tools
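
The decoupling can be sketched as a small orchestration layer. All stage functions here are hypothetical stand-ins (`run_sfm` for a COLMAP run, `train` for Gaussian optimization, `stylize` for the line-drawing model); the sketch only shows where the swap happens and which results can be cached. With a pre-training swap, SfM runs once per photo set and is reused across styles; a pre-SfM swap triggers a new SfM run for every style.

```python
from typing import Callable, Dict, List, Tuple


class StylizedScenePipeline:
    """Toy capture -> SfM -> stylize -> train pipeline with a pose cache."""

    def __init__(self, run_sfm: Callable[[List[str]], str],
                 train: Callable[[List[str], str], str]):
        self.run_sfm = run_sfm
        self.train = train
        self._pose_cache: Dict[Tuple[str, ...], str] = {}

    def _poses(self, images: List[str]) -> str:
        key = tuple(images)
        if key not in self._pose_cache:
            # Expensive step (e.g. COLMAP); cached per exact image set.
            self._pose_cache[key] = self.run_sfm(images)
        return self._pose_cache[key]

    def build(self, photos: List[str], stylize: Callable[[str], str],
              swap_point: str = "pre_training") -> str:
        stylized = [stylize(p) for p in photos]
        if swap_point == "pre_sfm":
            poses = self._poses(stylized)  # each new style needs its own SfM
        elif swap_point == "pre_training":
            poses = self._poses(photos)    # photo poses reused across styles
        else:
            raise ValueError(f"unknown swap point: {swap_point}")
        return self.train(stylized, poses)
```

Swapping in a different stylizer, or a different renderer behind `train`, leaves the other stages untouched, which is the practical payoff of the modular design.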

By revealing how view-dependent artistic rendering can emerge from photogrammetry pipelines, Kwatra's work invites further experimentation at the intersection of computer vision and computational art.

Source: Experiment by Amritansh Kwatra. Techniques based on '3D Gaussian Splatting for Real-Time Radiance Field Rendering' (Kerbl et al.) and 'Learning to Generate Line Drawings that Convey Geometry and Semantics' (Chan, Isola & Durand). Full details at amritkwatra.com
