Samsung's Device Solutions CTO says AI hyperscalers are driving unprecedented demand for HBM memory. HBM4 is now in mass production, and the company is developing hybrid bonding and zHBM technologies aimed at higher memory bandwidth and better efficiency.

Samsung is riding a massive wave of demand for memory chips that shows no signs of cresting, with the company's Device Solutions CTO Song Jai-hyuk revealing at Semicon Korea that AI hyperscalers are ordering memory at unprecedented levels. This surge in demand has sent prices skyrocketing and positioned Samsung at the center of the AI infrastructure boom that's reshaping the tech industry.

HBM4 Production and Market Response

The company is already mass-producing its next-generation HBM4 (High Bandwidth Memory), following strong performance from the previous HBM3E format. Samsung reported booming sales in Q3 last year, driven by robust demand for HBM3E, with this momentum continuing into Q4. The first quarter of this year marks the commercial launch of HBM4, and early feedback from corporate customers who received initial shipments has been overwhelmingly positive, with performance described as "very satisfactory."

This timing is critical as AI workloads continue to grow across cloud infrastructure, with companies like Microsoft, Google, and Amazon racing to build out massive data centers to support their AI ambitions. The memory requirements for these systems are staggering: each AI accelerator is packaged with multiple HBM stacks, and each server holds several accelerators, to feed the parallel processing demands of large language models and other AI workloads.

Hybrid Bonding Technology Breakthrough

Looking beyond immediate production, Samsung has developed a hybrid bonding technology for HBM that addresses one of the most critical challenges in high-performance memory: thermal management. The technology reduces thermal resistance in 12H and 16H stacks (12 and 16 dies high) by 20%, with testing showing an 11% lower temperature on the base die.

This thermal improvement is significant because as memory stacks get taller and denser, heat dissipation becomes increasingly challenging. Excessive heat not only limits performance but can also reduce the lifespan of memory modules and increase cooling costs in data centers. While Samsung hasn't announced a timeline for commercial availability of hybrid-bonded dies, this technology represents a crucial step forward in making higher-density memory stacks practical for widespread deployment.
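The link between thermal resistance and die temperature can be illustrated with a simple lumped model, T = T_ambient + P × R_th. The sketch below is a back-of-the-envelope illustration, not Samsung's test methodology: the ambient temperature, stack power, and baseline thermal resistance are hypothetical values picked to give round numbers. It shows why a 20% cut in thermal resistance produces a smaller percentage drop in absolute temperature, which is qualitatively in line with the 11% base-die figure.

```python
def die_temp_c(ambient_c: float, power_w: float, r_th_c_per_w: float) -> float:
    """Lumped steady-state model: temperature rise above ambient is power times thermal resistance."""
    return ambient_c + power_w * r_th_c_per_w


# All three inputs are hypothetical, chosen only for illustration.
AMBIENT_C = 45.0          # assumed coolant/ambient temperature
STACK_POWER_W = 30.0      # assumed power dissipated by the stack
R_TH_BASELINE = 1.5       # assumed baseline thermal resistance, degC per watt

# The 20% reduction is the figure reported for hybrid bonding.
r_th_hybrid = R_TH_BASELINE * (1 - 0.20)

t_baseline_c = die_temp_c(AMBIENT_C, STACK_POWER_W, R_TH_BASELINE)
t_hybrid_c = die_temp_c(AMBIENT_C, STACK_POWER_W, r_th_hybrid)

# Only the rise above ambient shrinks by 20%, so the drop in absolute
# temperature is a smaller percentage.
relative_drop = (t_baseline_c - t_hybrid_c) / t_baseline_c

print(f"baseline: {t_baseline_c:.1f} C, hybrid-bonded: {t_hybrid_c:.1f} C")
print(f"relative temperature drop: {relative_drop:.1%}")
```

With these assumed inputs the absolute temperature falls by roughly a tenth, even though thermal resistance falls by a fifth. The exact ratio depends on the ambient temperature and power, which the source does not give.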

zHBM: Revolutionizing Memory Architecture

Perhaps the most exciting development is Samsung's zHBM technology, which stacks dies in the Z-axis. This innovative approach promises to boost memory bandwidth by 4x while simultaneously reducing power consumption by 25%.

HBM already stacks DRAM dies vertically, so the novelty presumably lies in how the stack is integrated along the Z-axis rather than in stacking itself. The bandwidth gain could be particularly significant for AI workloads that must move large volumes of data quickly between memory and processing units, since memory bandwidth is often the bottleneck that limits AI system performance.
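To see why a 4x bandwidth gain matters, consider a memory-bound step such as generating one token with a large language model, where every weight must be read from memory once. The sketch below is a rough estimate under stated assumptions: the model size, weight precision, and baseline bandwidth are hypothetical, and only the 4x and 25% factors come from Samsung's claims. It also assumes both gains apply at the same operating point, which the source implies but does not spell out.

```python
def min_time_per_token_s(weight_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Lower bound on a memory-bound step: all weights are streamed from memory once."""
    return weight_bytes / bandwidth_bytes_per_s


# Hypothetical workload: 70 billion parameters stored at 2 bytes each.
WEIGHT_BYTES = 70e9 * 2
# Hypothetical baseline memory bandwidth: 1 TB/s.
BASE_BANDWIDTH = 1.0e12

# Claimed zHBM factors: 4x bandwidth, 25% lower power.
zhbm_bandwidth = BASE_BANDWIDTH * 4
relative_power = 1 - 0.25

t_token_base_s = min_time_per_token_s(WEIGHT_BYTES, BASE_BANDWIDTH)
t_token_zhbm_s = min_time_per_token_s(WEIGHT_BYTES, zhbm_bandwidth)
speedup = t_token_base_s / t_token_zhbm_s

# Energy per byte scales as power divided by bandwidth.
relative_energy_per_byte = relative_power / 4

print(f"baseline: {t_token_base_s * 1e3:.0f} ms/token, zHBM: {t_token_zhbm_s * 1e3:.0f} ms/token")
print(f"energy per byte relative to baseline: {relative_energy_per_byte:.4f}")
```

Under these assumptions the bandwidth-bound lower limit on step time falls by the same 4x factor, and energy per byte moved falls to under a fifth of the baseline. Real systems will see less than this once compute, latency, and interconnect limits are included.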

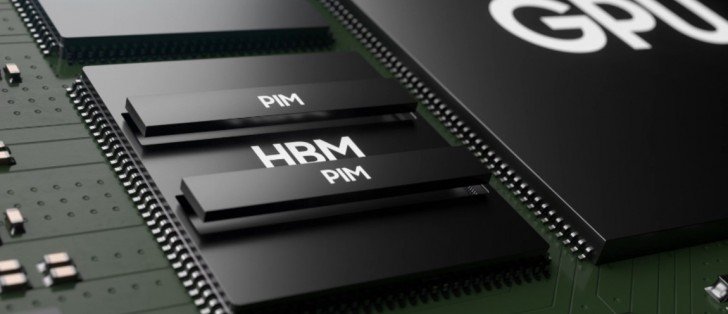

Processing-in-Memory: Bringing Compute to the Data

Samsung is also pioneering custom HBM designs with built-in compute capabilities, known as processing-in-memory (PIM). The company has already tested HBM-PIM memory in a custom AMD Instinct MI100 system, demonstrating the practical viability of this approach.

According to the CTO, these custom HBM designs can increase performance by 2.8x while maintaining the same power efficiency. This represents a fundamental rethinking of how memory and processing interact, moving compute closer to where data resides rather than shuttling data back and forth between separate memory and CPU/GPU units.

The implications for AI workloads are profound. Many AI operations, particularly those involving large matrix multiplications and other linear algebra operations, can be partially offloaded to memory units, reducing the burden on primary processors and potentially enabling more efficient, faster AI inference and training.
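The data-movement argument can be made concrete with a matrix-vector multiply, a core operation in AI inference. The sketch below is an idealized model, not a description of Samsung's HBM-PIM hardware: the function names, the row-interleaved bank layout, and the matrix size are all hypothetical. It counts the bytes that cross the memory bus when the matrix is shipped to the processor versus when the multiply runs next to the data and only the vectors travel.

```python
def bytes_moved_conventional(rows: int, cols: int, bytes_per_elem: int) -> int:
    """Processor-side compute: matrix and input vector go out, result vector comes back."""
    return (rows * cols + cols + rows) * bytes_per_elem


def bytes_moved_pim(rows: int, cols: int, bytes_per_elem: int) -> int:
    """In-memory compute (idealized): the matrix never leaves memory, only the vectors move."""
    return (cols + rows) * bytes_per_elem


def banked_matvec(matrix, vector, num_banks):
    """Toy model of near-data compute: rows are interleaved across hypothetical
    banks, and each bank computes the dot products for the rows it holds."""
    rows = len(matrix)
    result = [0] * rows
    for bank in range(num_banks):
        for r in range(bank, rows, num_banks):
            result[r] = sum(a * x for a, x in zip(matrix[r], vector))
    return result


# Hypothetical layer: 4096 x 4096 weights at 2 bytes per element.
conventional = bytes_moved_conventional(4096, 4096, 2)
pim = bytes_moved_pim(4096, 4096, 2)
print(f"conventional: {conventional} bytes, PIM: {pim} bytes")
print(f"bus traffic ratio: {conventional / pim:.0f}x")

# The banked version gives the same answer as an ordinary matrix-vector product.
print(banked_matvec([[1, 2], [3, 4], [5, 6]], [1, 1], num_banks=2))
```

The traffic ratio here is far larger than the 2.8x performance figure Samsung cites, and the two should not be compared directly. Real PIM units support a limited set of operations, and end-to-end performance depends on much more than bus traffic.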

Market Context and Future Outlook

Samsung's aggressive push into advanced memory technologies comes at a moment of both opportunity and strain. The unprecedented demand from AI hyperscalers has created a seller's market for memory, with prices surging, but supply is struggling to keep pace.

Samsung projects that this demand will extend well into 2027, which suggests the current boom is not a short-term phenomenon but reflects a fundamental shift in how computing infrastructure is being built out to support AI workloads.

The company's approach spans immediate production of HBM4, thermal improvements through hybrid bonding, architectural changes with zHBM, and longer-term concepts like PIM, which together position Samsung as a key supplier for AI infrastructure. As AI spreads from cloud services to edge devices, the memory technologies being developed today will form the foundation for tomorrow's AI capabilities.

For the broader tech industry, Samsung's advancements signal that the memory bottleneck that has long constrained computing performance may be starting to ease. The headline figures belong to separate technologies: zHBM is claimed to deliver 4x the bandwidth at 25% lower power, while custom HBM with PIM is claimed to improve performance by 2.8x. If those gains hold up in shipping products, the next generation of AI systems could be significantly more capable than current ones, enabling more complex models, faster training, and more efficient inference across a wide range of applications.
