As developers push LLMs into production workflows, one design philosophy is gaining ground: prioritize deterministic code and limit AI autonomy. This case study shows how shifting from an 'autonomy first' to an 'autonomy last' architecture addressed reliability problems in a chatbot with persistent memory.

The Hidden Cost of LLM Autonomy: Why Less Freedom Means More Reliability

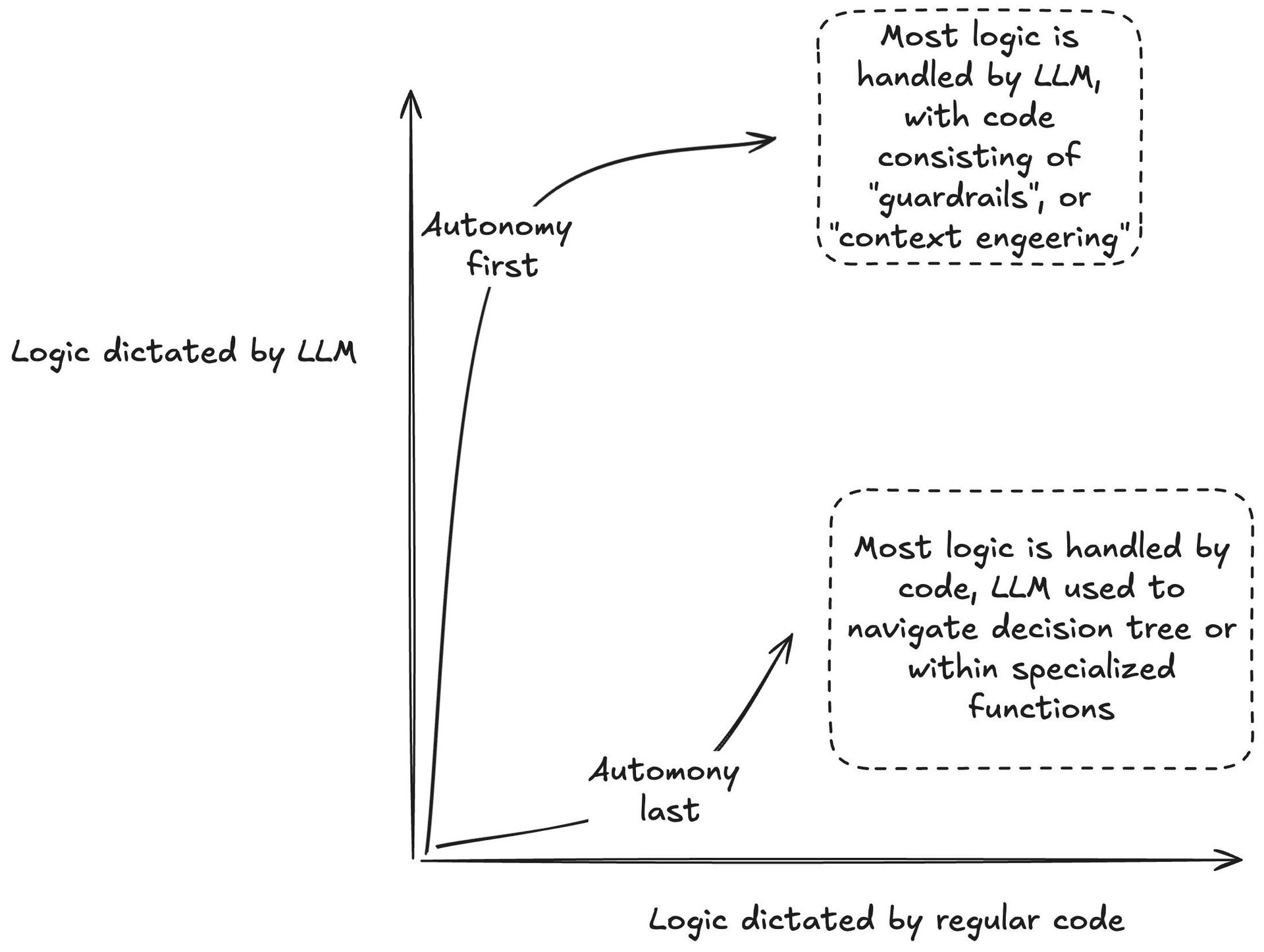

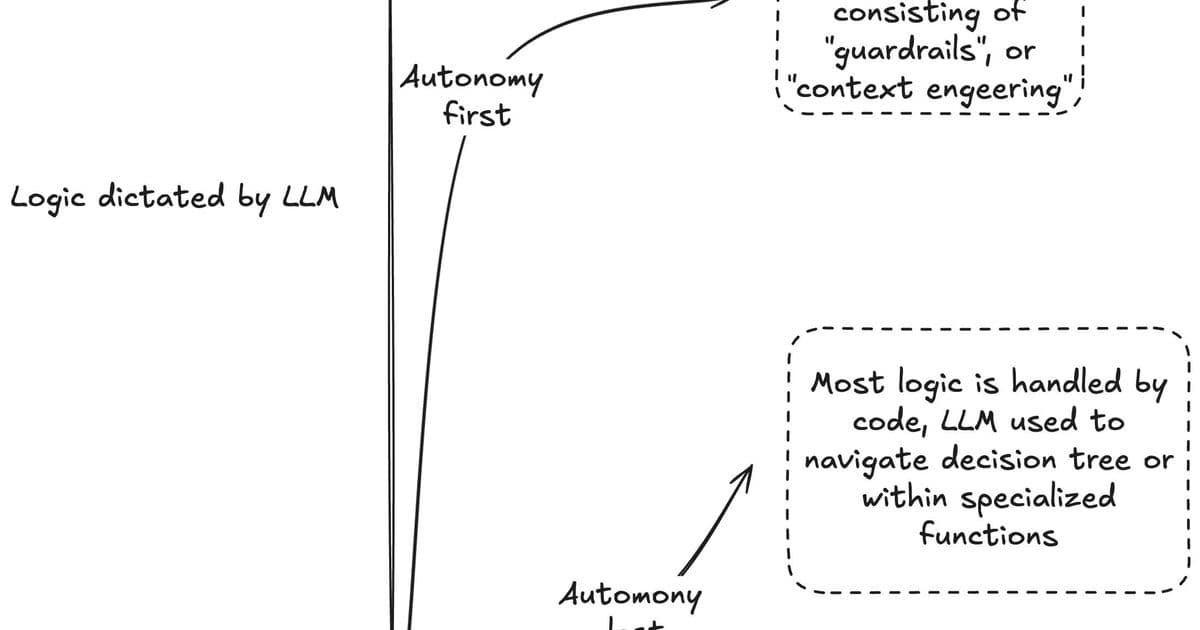

Building production systems with large language models presents a fundamental tension: how much decision-making authority should we delegate to inherently unpredictable neural networks? This dilemma is crystallizing into two competing philosophies—"autonomy first" versus "autonomy last"—with profound implications for system reliability and maintainability.

Autonomy first (left) vs. autonomy last (right) architectural approaches (Source: elroy.bot)


The Allure and Peril of Autonomous Agents

The dominant paradigm today—"autonomy first"—gives LLMs maximum freedom upfront. Techniques like agent frameworks, Model Context Protocol (MCP), and prompt engineering all share this core approach:

```python
# Classic autonomy-first pseudocode
while True:
    user_input = get_input()
    # LLM decides EVERYTHING: tools, memory, actions
    llm_response = llm.decide_actions(user_input, available_tools)
    execute_actions(llm_response)
```

These systems start with full LLM autonomy and attempt to constrain behavior through added guardrails and context engineering. But as systems scale, critical flaws emerge:

- Model sensitivity: Behavior radically changes when switching LLMs (GPT-4 vs. Claude Sonnet)

- Hallucination cascades: Misuse of tools or memory systems compounds errors

- Prompting fragility: Fixes for one model break others, creating maintenance nightmares

"Programmers tend to repair autonomy-first systems with more prompting—it's digital duct tape. This doesn't scale," observes the Elroy.bot developer behind a revealing case study.

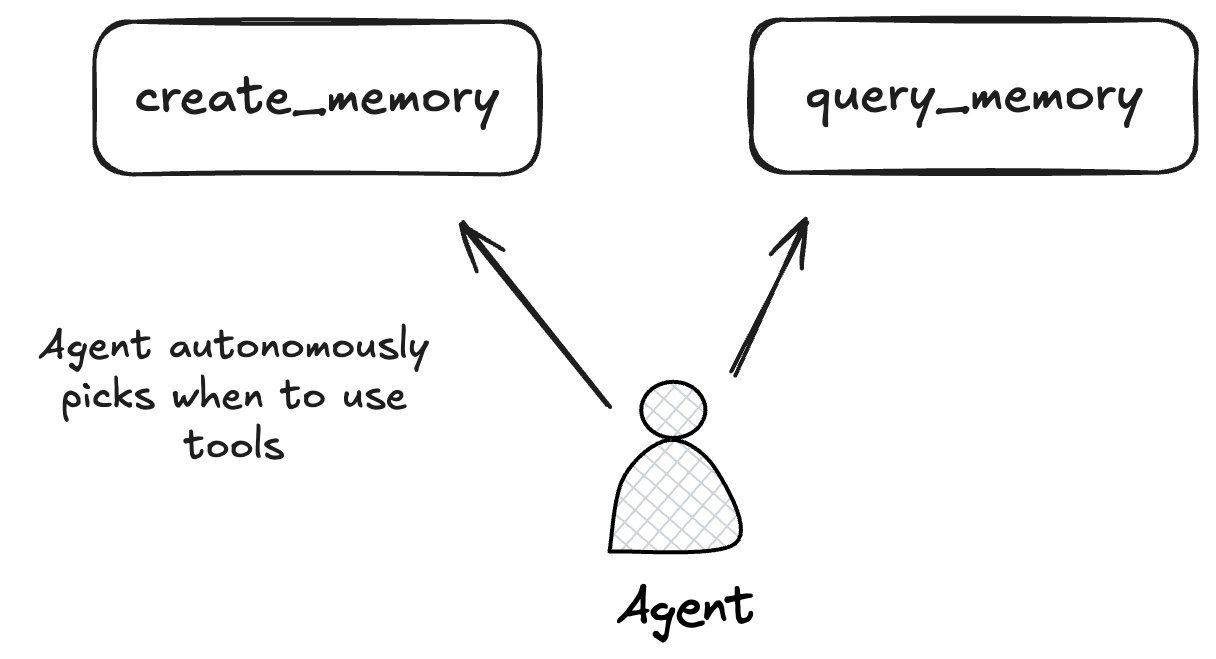

Case Study: Building a Chatbot That Remembers

The journey to build Elroy—a conversational AI with persistent memory—exposed these limitations starkly. The initial "agent with tools" architecture gave the LLM full control over memory operations:

Initial agent architecture with LLM-driven memory decisions (Source: elroy.bot)


When switching from GPT-4 to Claude Sonnet, the system went haywire—creating useless memories for trivial queries and over-referencing historical context. Prompt tuning couldn't solve the inconsistency.
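
To make that model sensitivity concrete, here is a minimal sketch of an agent whose memory writes are chosen by the model. The two policy functions (`cautious_model`, `eager_model`) and the `Agent` class are hypothetical stand-ins invented for illustration. They are not Elroy's code, and they do not reproduce how GPT-4 or Claude Sonnet actually behave.

```python
from dataclasses import dataclass, field
from typing import Callable, List


# Hypothetical stand-ins for two LLMs. Real models are not rule-based;
# these stubs only mimic "different tool-calling tendencies".
def cautious_model(message: str) -> List[str]:
    """Request a memory write only for messages that look like personal facts."""
    return ["create_memory"] if "my " in message.lower() else []


def eager_model(message: str) -> List[str]:
    """Request a memory write for every message, however trivial."""
    return ["create_memory"]


@dataclass
class Agent:
    decide_actions: Callable[[str], List[str]]  # the "LLM" picks the tools
    memories: List[str] = field(default_factory=list)

    def handle(self, message: str) -> None:
        # Autonomy first: whatever the model asks for gets executed.
        for action in self.decide_actions(message):
            if action == "create_memory":
                self.memories.append(message)


conversation = ["What time is it?", "My sister lives in Oslo.", "Thanks!"]

agent_a = Agent(cautious_model)
agent_b = Agent(eager_model)
for msg in conversation:
    agent_a.handle(msg)
    agent_b.handle(msg)

print(len(agent_a.memories))  # 1
print(len(agent_b.memories))  # 3
```

The conversation and the agent code are identical in both runs, yet the memory store ends up with one entry in one case and three in the other, because the write decision lives inside the model.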

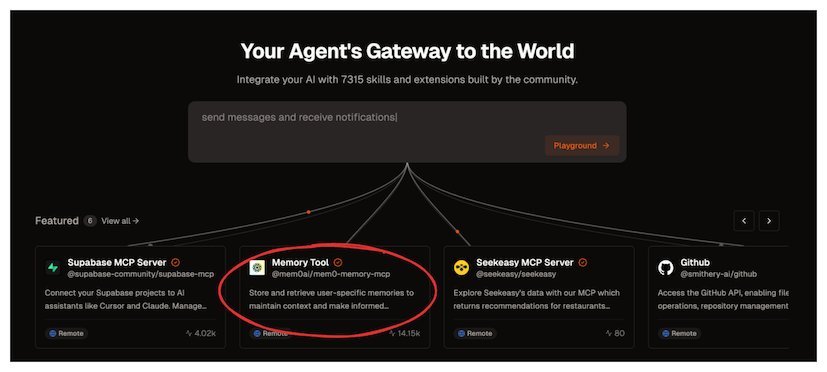

The MCP Experiment

Model Context Protocol (MCP) offered a promising alternative—a standardized interface for tool usage:

MCP implementations promise standardized tool integration (Source: smithery.ai)


Yet the core autonomy problem remained: the LLM still decided when to access memory, leading to the same model-specific inconsistencies.
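
The distinction between a standardized interface and a standardized decision can be sketched as follows. `ToolRegistry`, `run_turn`, and the two policy functions are assumptions made up for this example. The list/call shape is only loosely inspired by tool protocols such as MCP and is not the MCP SDK or its API.

```python
from typing import Callable, Dict, List, Optional


class ToolRegistry:
    """Uniform way to describe and invoke tools (illustrative, not the MCP SDK)."""

    def __init__(self) -> None:
        self._tools: Dict[str, Callable[[str], str]] = {}

    def register(self, name: str, handler: Callable[[str], str]) -> None:
        self._tools[name] = handler

    def list_tools(self) -> List[str]:
        return sorted(self._tools)

    def call_tool(self, name: str, argument: str) -> str:
        return self._tools[name](argument)


registry = ToolRegistry()
registry.register("search_memory", lambda query: f"memories matching {query!r}")

ToolChooser = Callable[[str, List[str]], Optional[str]]


def run_turn(choose_tool: ToolChooser, message: str) -> Optional[str]:
    # The registry fixes *how* a tool is called; the model callback still
    # decides *whether* one is called at all.
    tool_name = choose_tool(message, registry.list_tools())
    return registry.call_tool(tool_name, message) if tool_name else None


# Two hypothetical model policies looking at the same tool list.
def always_search(message: str, tools: List[str]) -> Optional[str]:
    return "search_memory"


def never_search(message: str, tools: List[str]) -> Optional[str]:
    return None


print(run_turn(always_search, "hello"))  # memories matching 'hello'
print(run_turn(never_search, "hello"))   # None
```

Both policies see the same tool description and the same calling convention, so the remaining variation comes entirely from the chooser.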

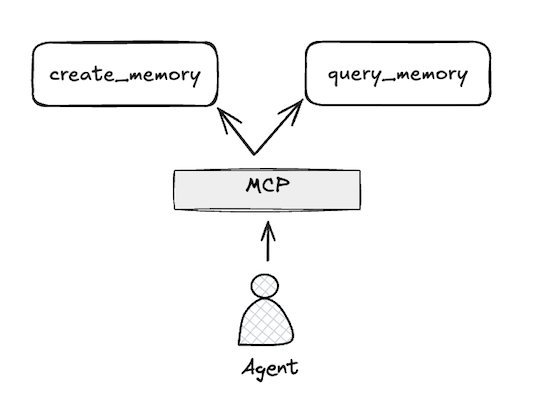

The Autonomy Last Breakthrough

The solution emerged by flipping the design paradigm:

- Deterministic code handles workflow: Fixed rules trigger memory operations

- LLMs handle narrow tasks: Only generate responses from pre-fetched context

- Encapsulated autonomy: LLMs operate within strictly bounded contexts

```python
# Autonomy-last pseudocode
message_count = 0

def handle_message(user_input):
    global message_count
    message_count += 1

    # DETERMINISTIC: Always search memories first
    memories = memory_search(user_input)

    # AUTONOMY: Generate response from context
    response = llm.generate(user_input, memories)

    # DETERMINISTIC: Create memory every 5 messages
    if message_count % 5 == 0:
        create_memory(user_input, response)

    return response
```
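
The flow above can be turned into a small runnable sketch. Everything here is an assumption for illustration: the `Chatbot` class, the keyword-overlap retrieval, the memory string format, and the `echo_model` stub standing in for a real LLM call. Only the five-message interval comes from the pseudocode.

```python
from dataclasses import dataclass, field
from typing import Callable, List

MEMORY_INTERVAL = 5  # from the pseudocode: write a memory every 5 messages


@dataclass
class Chatbot:
    generate: Callable[[str, List[str]], str]  # the only step delegated to a model
    memories: List[str] = field(default_factory=list)
    message_count: int = 0

    def search_memories(self, query: str) -> List[str]:
        # Placeholder retrieval: naive keyword overlap. A real system would
        # more likely use embeddings or full-text search.
        words = set(query.lower().split())
        return [m for m in self.memories if words & set(m.lower().split())]

    def handle_message(self, user_input: str) -> str:
        self.message_count += 1
        context = self.search_memories(user_input)      # deterministic
        response = self.generate(user_input, context)   # bounded autonomy
        if self.message_count % MEMORY_INTERVAL == 0:   # deterministic
            self.memories.append(f"user: {user_input} | bot: {response}")
        return response


def echo_model(user_input: str, context: List[str]) -> str:
    """Stub model: reports how much context it was given."""
    return f"({len(context)} memories) you said: {user_input}"


bot = Chatbot(generate=echo_model)
replies = [bot.handle_message(f"message {i}") for i in range(1, 11)]
print(len(bot.memories))  # 2
```

Swapping `echo_model` for a different callable changes the wording of the replies but not when memories are searched or written, which is the property the autonomy-last design is after.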

According to the case study, this approach delivered three benefits:

- Consistent behavior across LLM models

- Predictable scaling through encapsulated AI functions

- Reduced hallucination via context boundaries
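
One plausible reading of "context boundaries" is that deterministic code decides exactly what text the model sees on each turn. The sketch below assumes that reading. The caps (`MAX_MEMORIES`, `MAX_CONTEXT_CHARS`), the prompt wording, and `build_prompt` itself are hypothetical and not taken from Elroy.

```python
from typing import List

MAX_MEMORIES = 3         # hypothetical cap on retrieved items
MAX_CONTEXT_CHARS = 500  # hypothetical budget for memory text


def build_prompt(user_input: str, memories: List[str]) -> str:
    """Assemble the only text the model sees for this turn."""
    selected: List[str] = []
    used = 0
    for memory in memories[:MAX_MEMORIES]:
        if used + len(memory) > MAX_CONTEXT_CHARS:
            break  # stop once the character budget would be exceeded
        selected.append(memory)
        used += len(memory)
    context = "\n".join(f"- {m}" for m in selected) or "- (none)"
    return (
        "Answer using only the memories listed below.\n"
        f"Memories:\n{context}\n"
        f"User: {user_input}"
    )


prompt = build_prompt(
    "Where does my sister live?",
    ["Sister lives in Oslo.", "Likes tea.", "Has a dog.", "Owns a bike."],
)
print(prompt)
```

Because the cap is enforced in code rather than requested in a prompt, the fourth memory never reaches the model regardless of which LLM is plugged in.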

Engineering Wisdom for the LLM Era

The autonomy last principle represents a maturation of LLM engineering—recognizing that neural networks excel as specialized components rather than central controllers. As systems grow in complexity, delegating core program flow to deterministic code creates robust foundations, while strategically placed AI modules provide adaptive capabilities where truly needed.

This isn't about limiting potential, but about building systems that actually work tomorrow—not just in today's demo. The most powerful LLM applications may be those that know precisely when not to be autonomous.

Source: Add Autonomy Last
