Critical vulnerabilities in Anthropic's official Git MCP server could allow attackers to read arbitrary files, delete data, and execute code through prompt injection. The flaws affect the reference implementation used by developers across the ecosystem.

A set of three security vulnerabilities has been disclosed in mcp-server-git, the official Git Model Context Protocol (MCP) server maintained by Anthropic. These flaws could be exploited to read or delete arbitrary files and execute code under certain conditions. The vulnerabilities are particularly concerning because they can be triggered through prompt injection, meaning an attacker who can influence what an AI assistant reads—such as a malicious README, poisoned issue description, or compromised webpage—can weaponize these flaws without direct access to the victim's system.

mcp-server-git is a Python package and MCP server that gives large language models built-in tools to read, search, and manipulate Git repositories programmatically. The security issues were addressed in versions 2025.9.25 and 2025.12.18 following responsible disclosure in June 2025.

The Three Vulnerabilities

CVE-2025-68143 (CVSS 8.8/6.5) - A path traversal vulnerability in the git_init tool that accepts arbitrary file system paths during repository creation without validation. Fixed in version 2025.9.25.

CVE-2025-68144 (CVSS 8.1/6.4) - An argument injection vulnerability where git_diff and git_checkout functions pass user-controlled arguments directly to git CLI commands without sanitization. Fixed in version 2025.12.18.

CVE-2025-68145 (CVSS 7.1/6.3) - A path traversal vulnerability caused by missing path validation when using the --repository flag to limit operations to a specific repository path. Fixed in version 2025.12.18.
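
The two flaw classes described above, path traversal and argument injection, can be illustrated with a minimal Python sketch of the kind of checks that guard against them. This is not the code Anthropic shipped in the patched releases; the function names `ensure_within` and `reject_option_like` are hypothetical, and the sketch assumes a POSIX-style file system.

```python
from pathlib import Path


def ensure_within(allowed_root: str, requested: str) -> Path:
    """Return the resolved path, or raise if it escapes allowed_root."""
    root = Path(allowed_root).resolve()
    # Join and resolve first so that ".." segments, absolute paths, and
    # symlinks are collapsed before the containment check runs.
    target = (root / requested).resolve()
    if target != root and root not in target.parents:
        raise ValueError(f"path escapes allowed root: {requested!r}")
    return target


def reject_option_like(value: str) -> str:
    """Refuse values that the git CLI would parse as an option."""
    # Hypothetical guard: a ref or target such as "--output=/some/file"
    # must never reach the git command line as if it were a branch name.
    if value.startswith("-"):
        raise ValueError(f"refusing option-like argument: {value!r}")
    return value
```

A server built along these lines would call `ensure_within` on every repository path it receives and `reject_option_like` on every ref or target name before invoking git. Rejecting a leading dash is a deliberately blunt rule; a real implementation might instead verify that the value resolves to an existing ref.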

Attack Chain: From Prompt Injection to RCE

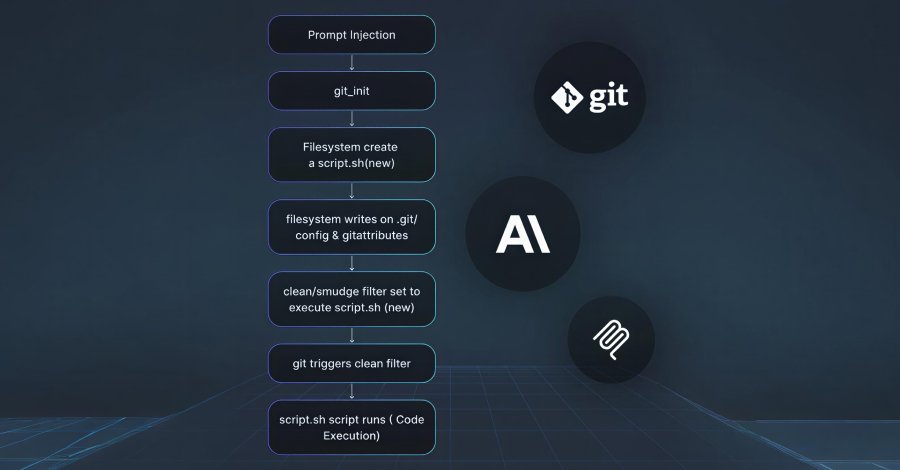

Cyata researcher Yarden Porat documented a sophisticated attack sequence that chains all three vulnerabilities with the Filesystem MCP server to achieve remote code execution:

- Use git_init to create a repository in a writable directory (exploiting CVE-2025-68143)

- Use the Filesystem MCP server to write a malicious .git/config file with a clean filter

- Write a .gitattributes file to apply the filter to certain files

- Write a shell script containing the payload

- Write a file that triggers the filter

- Call git_add, which executes the clean filter and runs the payload

This attack works because the .git/config file can contain filter definitions that execute arbitrary commands during Git operations. The clean filter runs when files are added to the repository, providing a code execution vector.
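
On the defensive side, a repository can be checked for this vector by looking for filter commands in its config before any Git operation runs. The following Python sketch shows one way to do that. The function name `find_filter_commands` is hypothetical, and the parser is deliberately simplified: it assumes one section header or key per line and ignores include directives, line continuations, and the older dotted section syntax.

```python
import re

# Matches headers such as [core] or [filter "name"].
SECTION_RE = re.compile(r'^\s*\[\s*([A-Za-z0-9.-]+)(?:\s+"([^"]*)")?\s*\]')
# Matches simple "key = value" lines.
KEY_RE = re.compile(r'^\s*([A-Za-z][A-Za-z0-9-]*)\s*=\s*(.*)$')
# Filter driver keys whose values git runs as commands.
COMMAND_KEYS = {"clean", "smudge", "process"}


def find_filter_commands(config_text: str) -> list[tuple[str, str, str]]:
    """Return (filter_name, key, command) for each filter command found."""
    findings = []
    in_filter = False
    name = ""
    for line in config_text.splitlines():
        section = SECTION_RE.match(line)
        if section:
            # Track whether the current section is a filter driver.
            in_filter = section.group(1).lower() == "filter"
            name = section.group(2) or ""
            continue
        key = KEY_RE.match(line)
        if in_filter and key and key.group(1).lower() in COMMAND_KEYS:
            findings.append((name, key.group(1).lower(), key.group(2).strip()))
    return findings


if __name__ == "__main__":
    sample = '[core]\n\tbare = false\n[filter "demo"]\n\tclean = sh ./payload.sh\n'
    for filter_name, key, command in find_filter_commands(sample):
        print(f"filter {filter_name!r} defines {key}: {command}")
```

Any finding in a repository the assistant created or modified itself is worth treating as suspicious, since legitimate filter drivers are usually configured by a developer rather than written through a file system tool.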

Why This Matters

Shahar Tal, CEO and co-founder of agentic AI security company Cyata, emphasized the broader implications: "This is the canonical Git MCP server, the one developers are expected to copy. If security boundaries break down even in the reference implementation, it's a signal that the entire MCP ecosystem needs deeper scrutiny. These are not edge cases or exotic configurations, they work out of the box."

The Model Context Protocol lets AI assistants interact with external tools and data sources. The Git MCP server provides a standard way for LLMs to work with version control, making it a fundamental component of AI-assisted development workflows. Because developers copy from the reference implementation, its vulnerabilities can carry over into the servers built on it.

The Prompt Injection Vector

What makes these vulnerabilities particularly dangerous is the prompt injection attack surface. Unlike traditional vulnerabilities that require direct system access, these flaws can be exploited through data that an AI assistant processes:

- Malicious README files in repositories that an assistant reads

- Poisoned issue descriptions on platforms like GitHub

- Compromised webpages that an assistant might browse

- Manipulated commit messages or code comments

An attacker doesn't need to compromise the developer's machine directly—they only need to influence what the AI assistant reads. Once the assistant processes the malicious input, it can trigger the vulnerabilities through normal Git operations.

Mitigation and Response

Anthropic responded to these findings by:

- Removing the git_init tool entirely from the package

- Adding extra validation to prevent path traversal primitives

- Releasing patched versions (2025.9.25 and 2025.12.18)

Users of the Python package should update to version 2025.12.18 or later, which includes the fixes for all three vulnerabilities.

Broader Ecosystem Implications

This disclosure highlights a critical challenge in the emerging MCP ecosystem. As AI assistants become more integrated into development workflows, the security of the tools they interact with becomes paramount. The reference implementations set the standard for how these tools should work, making their security posture essential for the entire community.

The vulnerabilities demonstrate that even well-maintained projects from reputable organizations like Anthropic can contain serious security flaws. This underscores the need for:

- Rigorous security review of all MCP server implementations

- Defense in depth when designing AI tool integrations

- Clear security boundaries between AI assistants and system resources

- Continuous monitoring of how AI tools interact with sensitive operations

Recommendations for Developers

If you're using mcp-server-git or similar MCP servers:

- Update immediately to version 2025.12.18 or later

- Audit your dependencies for other MCP servers that might have similar issues

- Implement sandboxing when running AI assistants with Git access

- Review repository contents before allowing AI assistants to process them

- Consider using read-only modes when possible
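
The first recommendation can be automated with a short check. The following Python sketch compares the installed version against the patched release. It assumes the distribution is installed under the name mcp-server-git and that its version string is purely numeric and dot-separated (as in 2025.12.18); the helper names are hypothetical, and a version with a suffix would need a proper version parser.

```python
from importlib.metadata import PackageNotFoundError, version

# Release named in the advisory as containing the last of the three fixes.
PATCHED = (2025, 12, 18)


def parse_calver(text: str) -> tuple[int, ...]:
    """Turn '2025.12.18' into (2025, 12, 18) for tuple comparison."""
    return tuple(int(part) for part in text.split(".")[:3])


def is_patched(installed: str, minimum: tuple[int, ...] = PATCHED) -> bool:
    """True when the installed version is at or above the patched release."""
    return parse_calver(installed) >= minimum


def check_installed(dist_name: str = "mcp-server-git") -> str:
    """Report the status of the package in the current environment."""
    try:
        installed = version(dist_name)
    except PackageNotFoundError:
        return f"{dist_name} is not installed in this environment"
    status = "patched" if is_patched(installed) else "VULNERABLE, upgrade"
    return f"{dist_name} {installed}: {status}"


if __name__ == "__main__":
    print(check_installed())
```

Running the script inside each virtual environment that hosts an MCP server gives a quick inventory of which installations still need the upgrade.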

The security community continues to analyze the MCP ecosystem for similar vulnerabilities. As AI assistants become more capable and integrated into development workflows, the attack surface they present will require ongoing scrutiny and robust security practices.

For more information about these vulnerabilities, visit Cyata's research blog and the Anthropic MCP documentation.

This article was based on research by Yarden Porat at Cyata. The vulnerabilities were responsibly disclosed to Anthropic in June 2025 and have been patched.
