Verbit's architecture team eliminated the headaches of integrating ML algorithms by running research code as activities inside Temporal workflows. The approach delivers algorithm updates to production automatically, without rewrites, intermediary ML engineers, or cross-team bottlenecks, and gets resilience and scale from Temporal essentially for free.

For machine learning teams, the journey from research prototype to production algorithm is often a nightmare of duplicated effort, dependency conflicts, and organizational friction. At Verbit, where I serve as Chief Architect, this pain reached a breaking point. Our researchers struggled to integrate ML algorithms into production services, triggering a cycle of re-implementation, cross-team meetings, and delayed deployments.

The Algorithm Integration Trap

Traditional approaches force painful trade-offs:

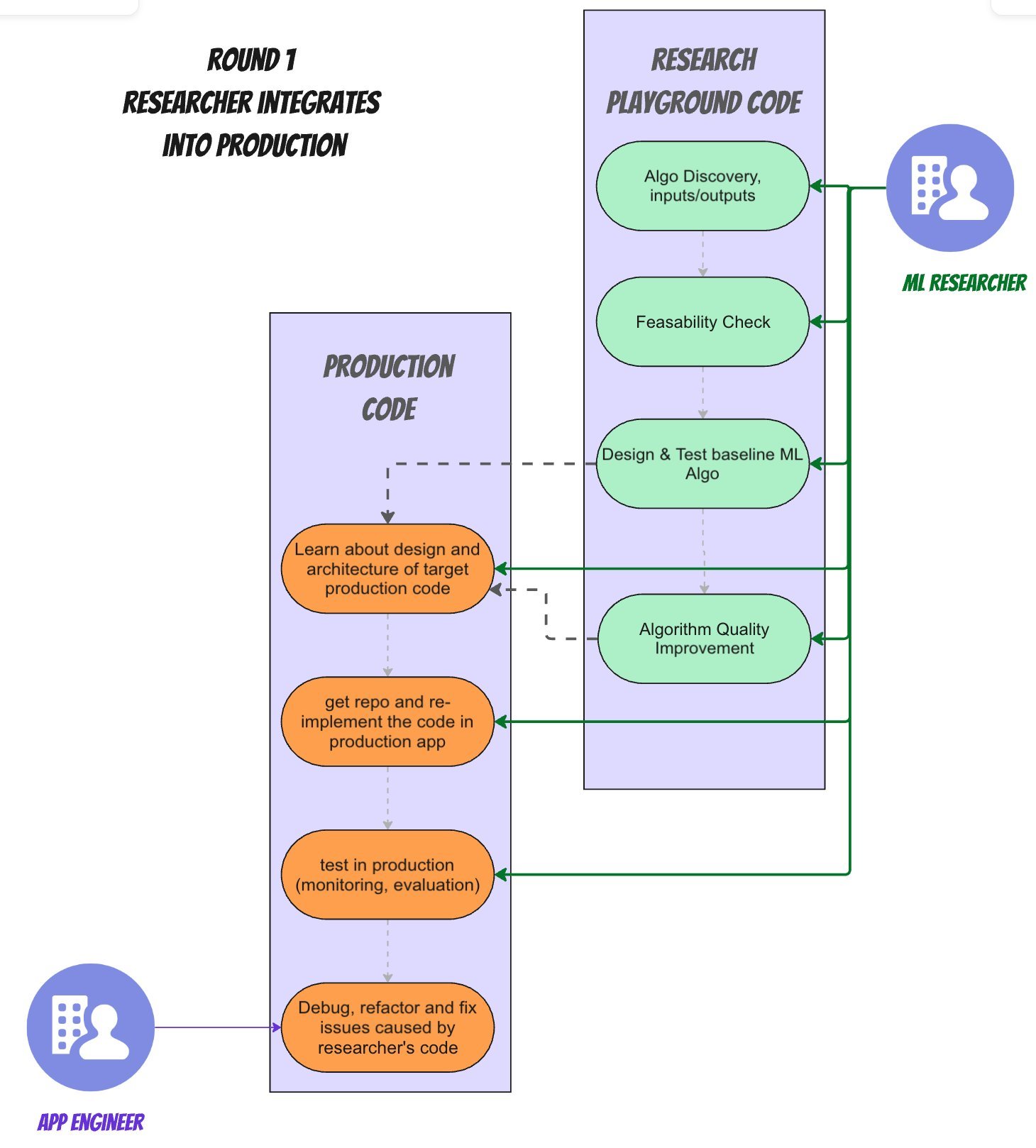

Direct Integration (Round 1): Researchers surgically embed algorithms into app code—a process requiring deep knowledge of production systems, dependency management, and ongoing maintenance across repositories.

The diagram above shows the chaos: minor algorithm updates demand changes in both the research and application codebases. Researchers become accidental infrastructure engineers, distracted from their core work.

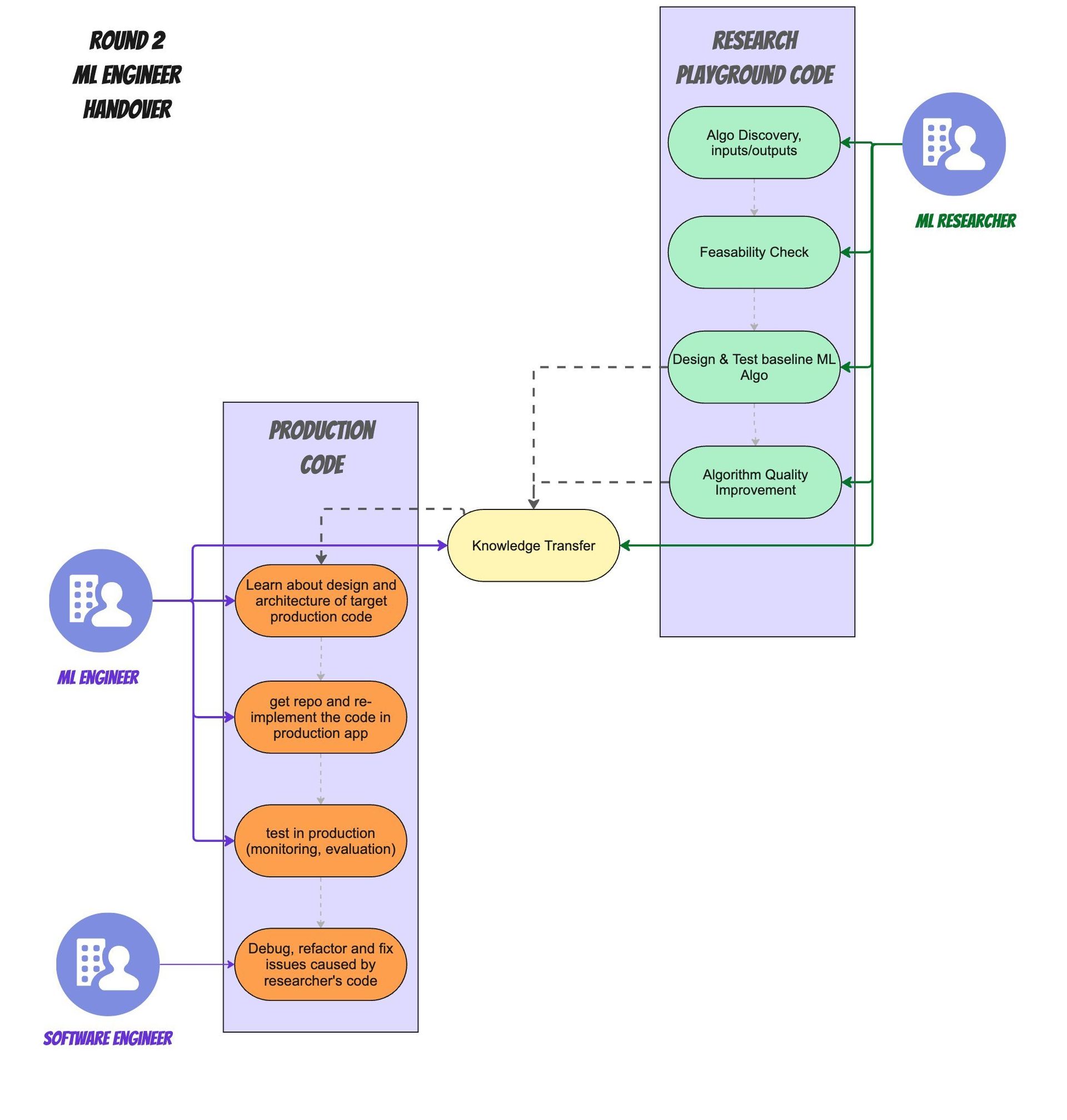

The ML Engineer Band-Aid (Round 2): Adding intermediary ML engineers creates new problems, including hiring costs, knowledge-transfer overhead, misaligned priorities, and persistent duplication of effort. Updates queue behind team backlogs, while the engineers grow frustrated with their limited involvement in research.

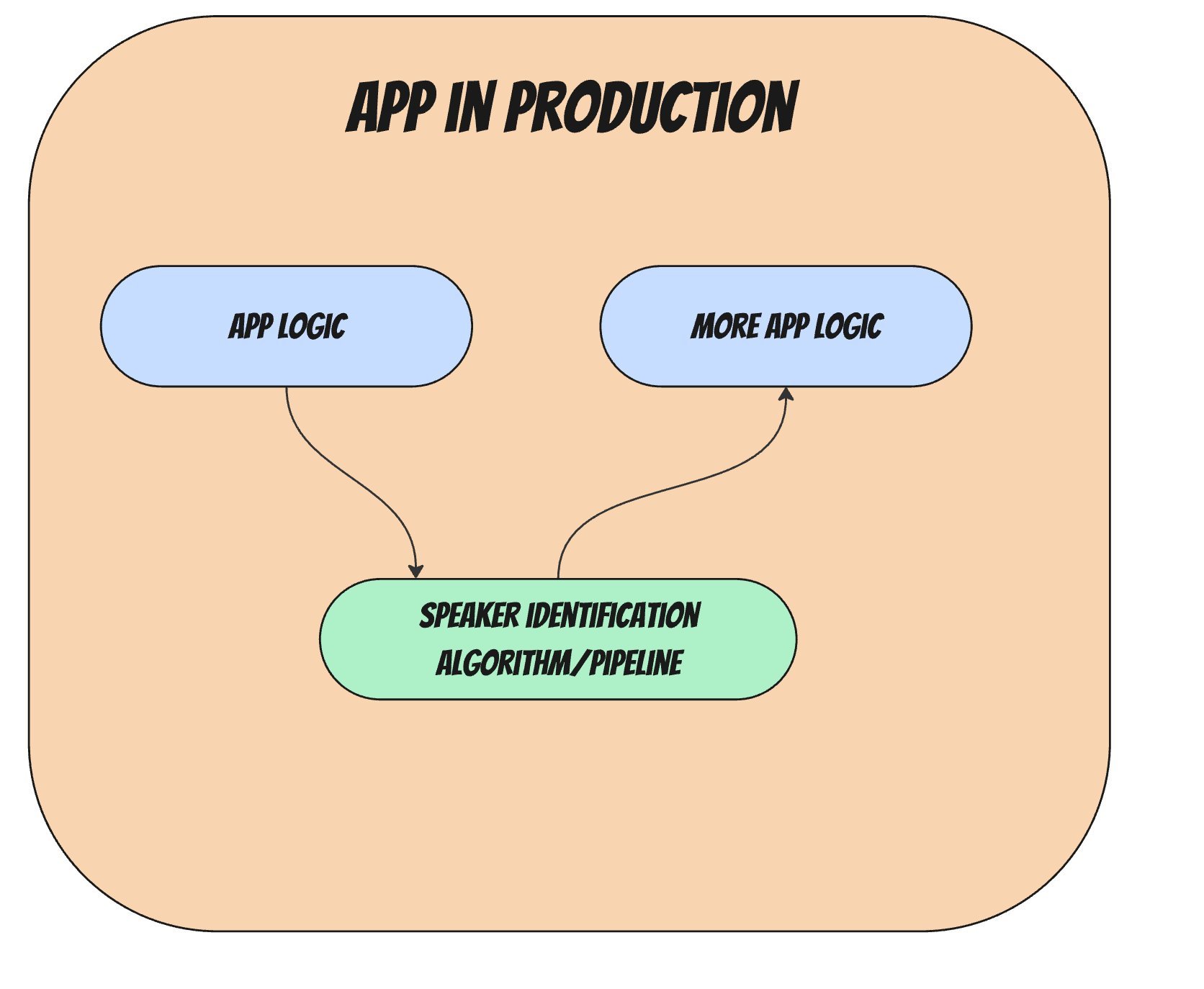

Worse, microservice architectures amplify these issues. The diagram above illustrates how algorithms spread like spaghetti across repositories, multiplying integration points and blurring ownership.


Temporal: The Workflow Wedge

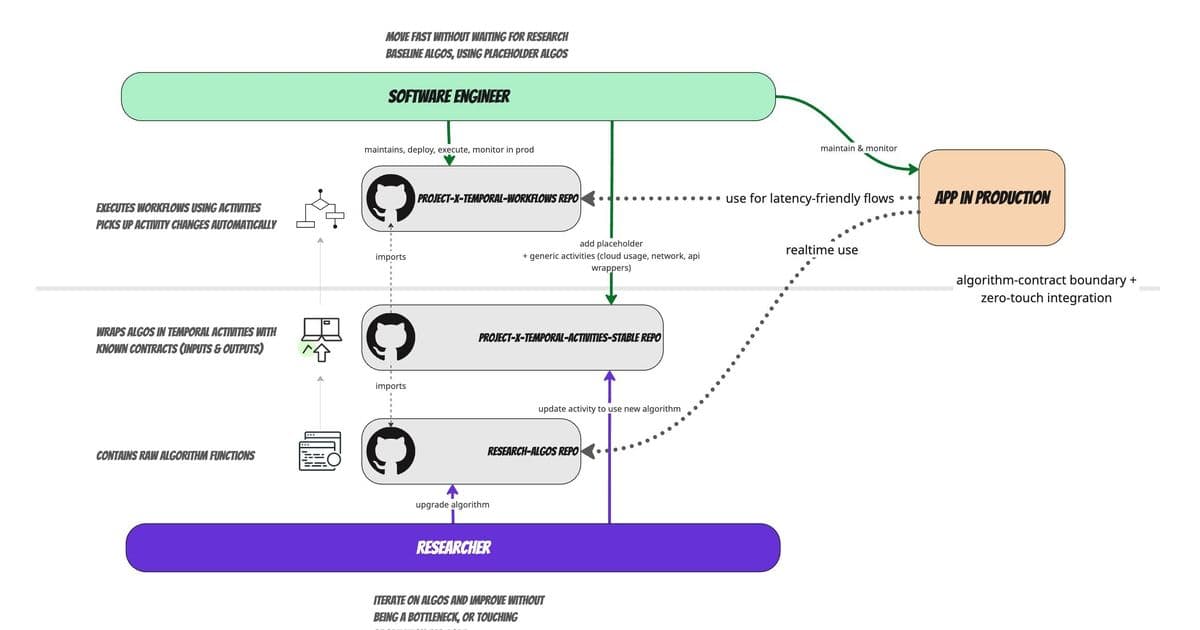

Our breakthrough came from treating algorithms as Temporal activities called from stateful workflows:

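```python
from datetime import timedelta

from temporalio import activity, workflow

# Pass the research package through the workflow sandbox unchanged.
# The module name `speaker_research` is hypothetical.
with workflow.unsafe.imports_passed_through():
    from speaker_research import AudioClip, SpeakerID, researcher_algorithm


# Workflow (Engineer-Owned)
@workflow.defn
class SpeakerIdentificationWorkflow:
    @workflow.run
    async def run(self, audio: AudioClip) -> SpeakerID:
        return await workflow.execute_activity(
            identify_speaker,  # Activity wrapping researcher's algorithm
            audio,
            start_to_close_timeout=timedelta(seconds=30),
        )


# Activity (Researcher-Owned)
# Synchronous activity: the worker must be given an activity_executor
# (for example, a ThreadPoolExecutor) to run it.
@activity.defn
def identify_speaker(audio: AudioClip) -> SpeakerID:
    return researcher_algorithm(audio)  # Direct research code
```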

How It Works

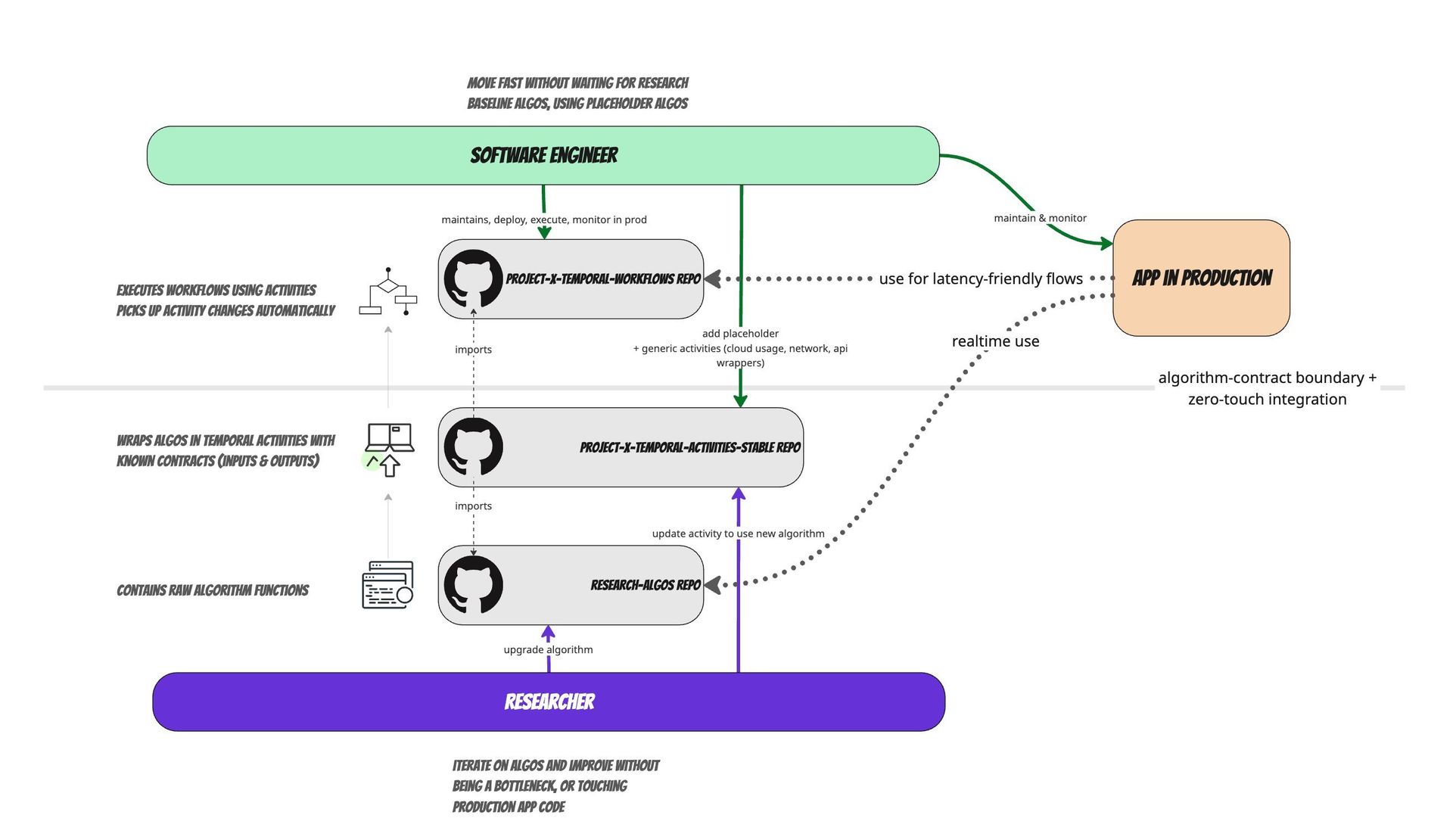

- Researchers maintain algorithms in their repositories, wrapping them as Temporal activities (~10 lines of code).

- Engineers write simple workflows calling these activities—abstracting away algorithm internals.

- On commit: New activity code deploys automatically. Workflows invoke the latest version without engineer intervention.

- Production Benefits: Temporal provides automatic retries, timeouts, and scalability without requiring researchers to implement them.
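The retries mentioned above are easiest to understand as a schedule. The sketch below is plain Python, not Temporal code. It reproduces the exponential backoff that Temporal's documentation describes for its default retry policy: a 1-second initial interval, a backoff coefficient of 2.0, an interval capped at 100 times the initial interval, and unlimited attempts. Verify those defaults against your SDK version. `BackoffPolicy` and `run_with_retries` are hypothetical names used only for illustration. In a real workflow you would instead pass a `temporalio.common.RetryPolicy` through the `retry_policy` argument of `workflow.execute_activity`.

```python
from dataclasses import dataclass
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


@dataclass
class BackoffPolicy:
    """Hypothetical mirror of Temporal's default retry settings (assumed values)."""

    initial_interval: float = 1.0  # seconds before the first retry
    backoff_coefficient: float = 2.0  # multiplier applied after each retry
    maximum_interval: Optional[float] = None  # None -> 100 x initial_interval
    maximum_attempts: int = 0  # total attempts allowed; 0 means unlimited

    def delay_before_retry(self, retry_number: int) -> float:
        """Seconds to wait before retry `retry_number` (1 = first retry)."""
        cap = (
            self.maximum_interval
            if self.maximum_interval is not None
            else 100 * self.initial_interval
        )
        delay = self.initial_interval * self.backoff_coefficient ** (retry_number - 1)
        return min(delay, cap)


def run_with_retries(
    fn: Callable[[], T],
    policy: BackoffPolicy,
    sleep: Callable[[float], None],
) -> T:
    """Call `fn` until it succeeds or the attempt budget is exhausted.

    `sleep` is injected so the schedule can be inspected without real waiting.
    """
    attempt = 1
    while True:
        try:
            return fn()
        except Exception:
            if policy.maximum_attempts and attempt >= policy.maximum_attempts:
                raise  # budget exhausted: surface the last error
            sleep(policy.delay_before_retry(attempt))
            attempt += 1
```

Under these defaults, a researcher's algorithm that fails twice with a transient error and then succeeds is retried after waits of 1.0 and 2.0 seconds. With Temporal, none of that loop lives in the research code.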

Why This Transforms Deployment

- Zero-Touch Updates: Algorithm improvements reach production as soon as they are committed, with no re-implementation or cross-team coordination.

- Decoupled Ownership: Researchers own activities; engineers own workflows. Neither needs deep knowledge of the other's domain.

- Eliminated Bottlenecks: No ML engineer handoffs. Workflows act as versioned "algorithm APIs."

- Resilience By Default: Temporal handles failures, retries, and scaling transparently.

- Parallel Development: Engineers can ship workflows with mock activities before algorithms exist, enabling incremental delivery.
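Engineers can ship a workflow against a mock and later pick up the real algorithm without touching the workflow. This works because a workflow refers to an activity only by its name and input/output contract, and whichever implementation is registered under that name does the work. The toy below models this with a plain dictionary in place of a Temporal task queue. `ActivityRegistry`, `identify_speaker_mock`, and `identify_speaker_v1` are hypothetical illustrations, not Temporal APIs. In the Temporal Python SDK, the closest equivalent is decorating a stand-in function with `@activity.defn(name="identify_speaker")` and running it on a worker until the researchers' worker takes over that name.

```python
from typing import Any, Callable, Dict


class ActivityRegistry:
    """Toy stand-in for a task queue: activity name -> currently deployed code."""

    def __init__(self) -> None:
        self._impls: Dict[str, Callable[..., Any]] = {}

    def register(self, name: str, fn: Callable[..., Any]) -> None:
        # Registering under an existing name simulates deploying a new build.
        self._impls[name] = fn

    def execute(self, name: str, *args: Any) -> Any:
        if name not in self._impls:
            raise LookupError(f"no implementation registered for {name!r}")
        return self._impls[name](*args)


def speaker_identification_workflow(registry: ActivityRegistry, audio: bytes) -> str:
    # Engineer-owned: knows only the activity's name and its contract.
    return registry.execute("identify_speaker", audio)


def identify_speaker_mock(audio: bytes) -> str:
    # Placeholder shipped before the research algorithm exists.
    return "speaker-unknown"


def identify_speaker_v1(audio: bytes) -> str:
    # Hypothetical stand-in for the researcher's real algorithm.
    return "speaker-long" if len(audio) > 4 else "speaker-short"


registry = ActivityRegistry()
registry.register("identify_speaker", identify_speaker_mock)
before = speaker_identification_workflow(registry, b"abcdef")  # "speaker-unknown"

registry.register("identify_speaker", identify_speaker_v1)  # researcher "commits"
after = speaker_identification_workflow(registry, b"abcdef")  # "speaker-long"
```

The workflow function is identical for both calls. Only the registration changes, which is the property that the zero-touch updates and parallel development described above depend on.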

Real-World Impact at Verbit

Setup took ~1 month (one architect full-time), plus two weeks for initial POCs. Results:

- Researchers push production-ready algorithms independently

- 95% of updates require zero engineer involvement

- Teams ship features faster using placeholder activities

- Monitoring is centralized in Temporal’s UI

The Road Ahead

While Temporal solved our core handoff problem, challenges remain for stateful and long-running algorithms, and we're exploring patterns for hybrid offline/online workflows. This approach, which we might call the Algorithm Conduit Pattern, shows that a clean separation between research and engineering isn't just possible: it unlocks velocity. As one researcher remarked: "I finally feel empowered to improve production without drowning in YAML."
