ZLUDA's latest update delivers complete Llama.cpp support on AMD GPUs with near-native ROCm performance, improved Windows stability, and ROCm 7 compatibility, advancing cross-platform CUDA translation.

The open-source ZLUDA project has reached critical milestones in its mission to enable unmodified CUDA applications on non-NVIDIA hardware. Recent developments significantly enhance AMD GPU viability for AI workloads, particularly through full compatibility with Llama.cpp's CUDA backend.

Technical Advancements

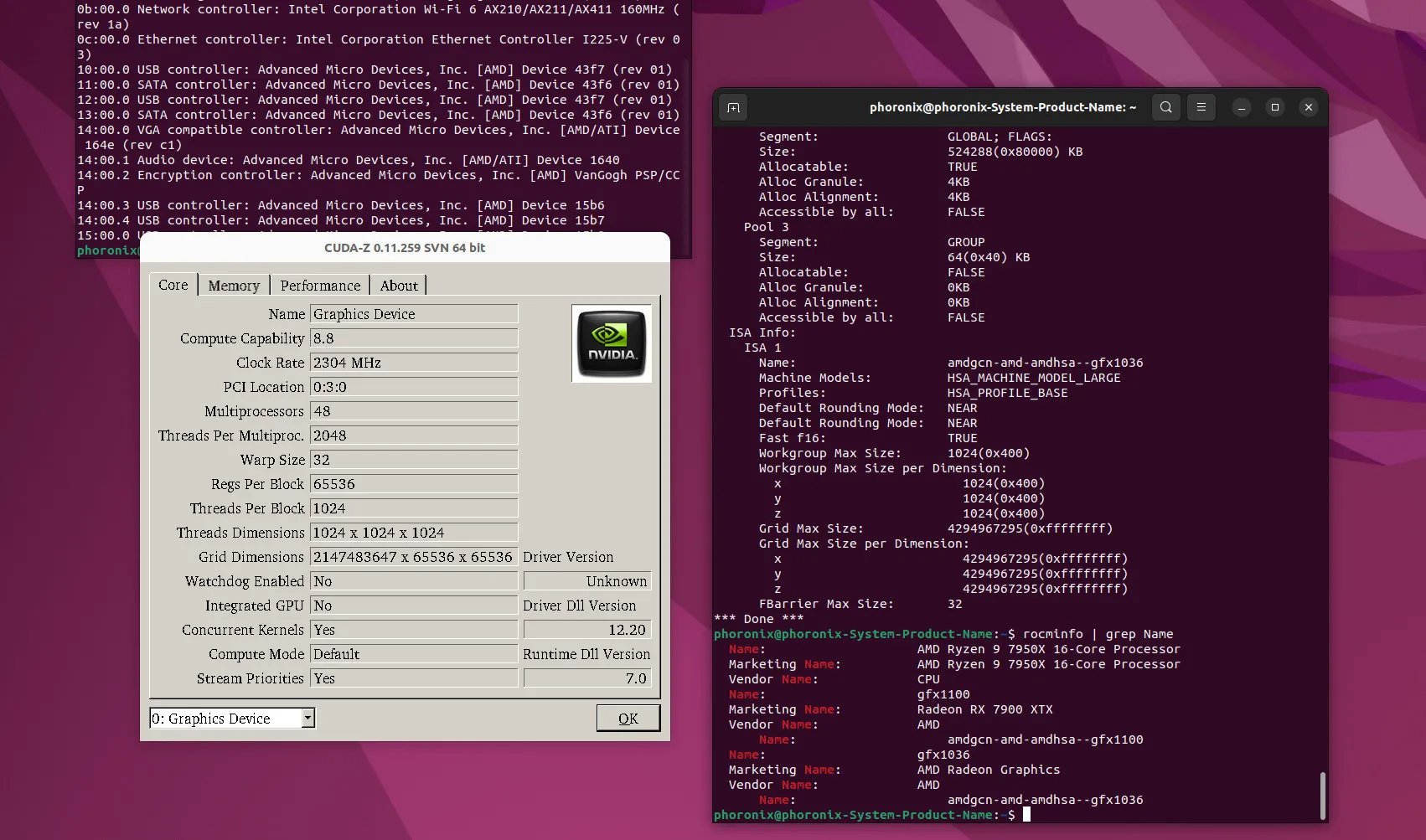

ZLUDA now achieves "nearly identical" performance to AMD's native ROCm backend when running Llama.cpp inference workloads. The comparison is meaningful because Llama.cpp already ships its own ROCm and Vulkan backends, so AMD users have native alternatives to measure ZLUDA against. The project also now bundles its own LLVM compiler instead of relying on comgr, AMD's Code Object Manager library. This reduces dependency conflicts and makes compilation more reliable across hardware configurations.

Windows support has also improved substantially. The zluda.exe launcher has reached what the developers call "acceptable quality", bringing it in line with Linux functionality. This widens deployment options for workstation users running AMD GPUs in mixed-OS environments.
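
For readers who want to try the launcher: ZLUDA's README has described two invocation patterns. On Windows you run `zluda.exe -- <APPLICATION> <APPLICATION_ARGUMENTS>`. On Linux you put the ZLUDA directory on `LD_LIBRARY_PATH` so the application loads ZLUDA's CUDA libraries. The sketch below assumes those patterns and only assembles the command line and environment. The helper name `build_zluda_launch` and the example paths are hypothetical. Check the exact syntax against the README of the release you install.

```python
import os
from typing import Dict, List, Optional, Tuple


def build_zluda_launch(
    platform: str,
    zluda_dir: str,
    app: str,
    app_args: List[str],
    base_env: Optional[Dict[str, str]] = None,
) -> Tuple[List[str], Dict[str, str]]:
    """Return (argv, env) for running a CUDA application through ZLUDA.

    Hypothetical helper: it only assembles the command line and environment,
    assuming the usage patterns from ZLUDA's README; it launches nothing.
    """
    # Copy the environment so the caller's mapping is never modified.
    env = dict(os.environ if base_env is None else base_env)
    if platform == "windows":
        # Windows (assumed syntax): zluda.exe takes the target program after "--".
        launcher = zluda_dir.rstrip("\\/") + "\\zluda.exe"
        return [launcher, "--", app, *app_args], env
    if platform == "linux":
        # Linux (assumed syntax): put the ZLUDA directory first on LD_LIBRARY_PATH
        # so the dynamic loader finds ZLUDA's CUDA libraries before any others.
        existing = env.get("LD_LIBRARY_PATH", "")
        env["LD_LIBRARY_PATH"] = zluda_dir + (":" + existing if existing else "")
        return [app, *app_args], env
    raise ValueError(f"unsupported platform: {platform!r}")


if __name__ == "__main__":
    # Hypothetical paths; adjust to where ZLUDA and llama.cpp are installed.
    argv, env = build_zluda_launch(
        "linux", "/opt/zluda", "./llama-cli", ["-m", "model.gguf"]
    )
    print(argv, env["LD_LIBRARY_PATH"])
    # To actually run it: subprocess.run(argv, env=env, check=True)
```

The helper returns `argv` and `env` instead of spawning a process. That keeps it pure and lets you log or inspect the exact command before handing it to `subprocess.run`.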

Architectural Implications

ROCm 7 compatibility keeps ZLUDA current with AMD's latest software stack, including its CDNA 3 optimizations and MI300 series support. ZLUDA works by compiling the PTX intermediate code carried by CUDA programs into AMD GPU code. The performance parity in Llama.cpp therefore suggests that this translation of CUDA kernels into AMD's instruction set architecture is efficient. Optimizations tuned for one vendor's microarchitecture, however, do not automatically carry over to the other's.

Market Impact

By narrowing the CUDA compatibility gap, ZLUDA could accelerate AMD GPU adoption in three key areas:

- AI Development: Researchers can benchmark CUDA-based models against native ROCm/Vulkan implementations

- Enterprise Deployment: Windows compatibility enables broader workstation deployment

- Cloud Economics: Datacenter operators gain flexibility in hardware procurement

Development Outlook

Although PyTorch support missed its 2025 target, ZLUDA continues to make steady progress. The project publishes quarterly updates on GitHub, and community testing shows less than 5% performance variance versus native ROCm in Llama.cpp workloads. With CUDA still the dominant platform for AI computing, ZLUDA's continued development could reshape competitive dynamics in the accelerator market.
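
A figure like "<5% performance variance" is usually a relative difference in throughput, for example tokens per second as reported by Llama.cpp's `llama-bench` tool. The article does not say how the figure was computed. The sketch below therefore assumes the gap is measured relative to the native ROCm result. The function names are hypothetical, and the input numbers are illustrative, not measurements.

```python
def relative_gap_percent(zluda_tps: float, rocm_tps: float) -> float:
    """Percent difference of ZLUDA throughput relative to native ROCm.

    Negative means ZLUDA is slower; positive means it is faster.
    Assumes native ROCm is the baseline (denominator).
    """
    if rocm_tps <= 0:
        raise ValueError("native ROCm throughput must be positive")
    return (zluda_tps - rocm_tps) / rocm_tps * 100.0


def within_tolerance(zluda_tps: float, rocm_tps: float, tolerance_percent: float = 5.0) -> bool:
    """True if the absolute gap is strictly below the tolerance (e.g. "<5%")."""
    return abs(relative_gap_percent(zluda_tps, rocm_tps)) < tolerance_percent


if __name__ == "__main__":
    # Illustrative inputs only, not benchmark results.
    rocm, zluda = 100.0, 96.0
    print(f"gap: {relative_gap_percent(zluda, rocm):+.1f}%")  # gap: -4.0%
    print(within_tolerance(zluda, rocm))  # True
```

Using ROCm as the denominator answers the question "how far is ZLUDA from native?". A symmetric measure, such as dividing by the mean of the two results, gives slightly different numbers near the 5% boundary.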
