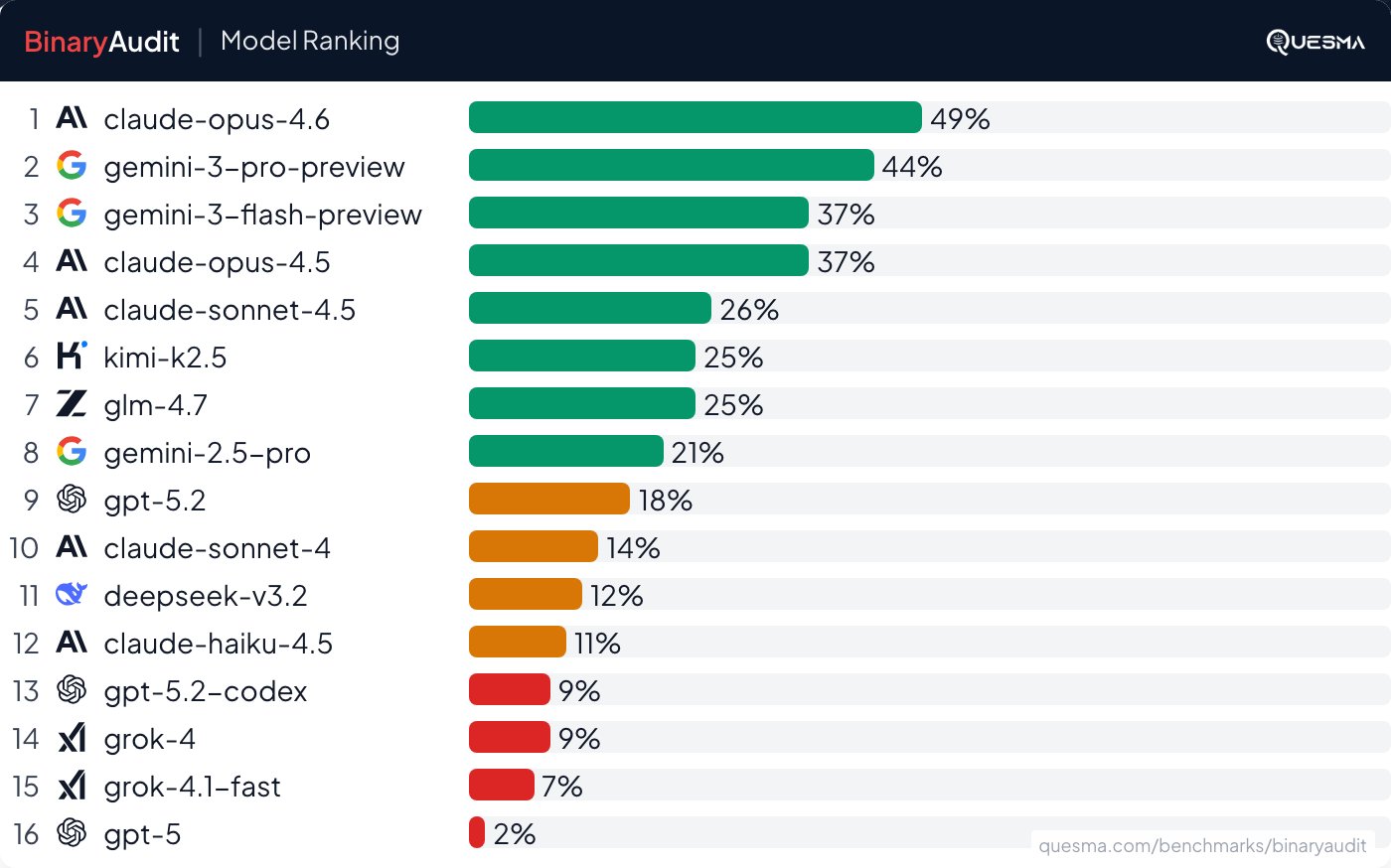

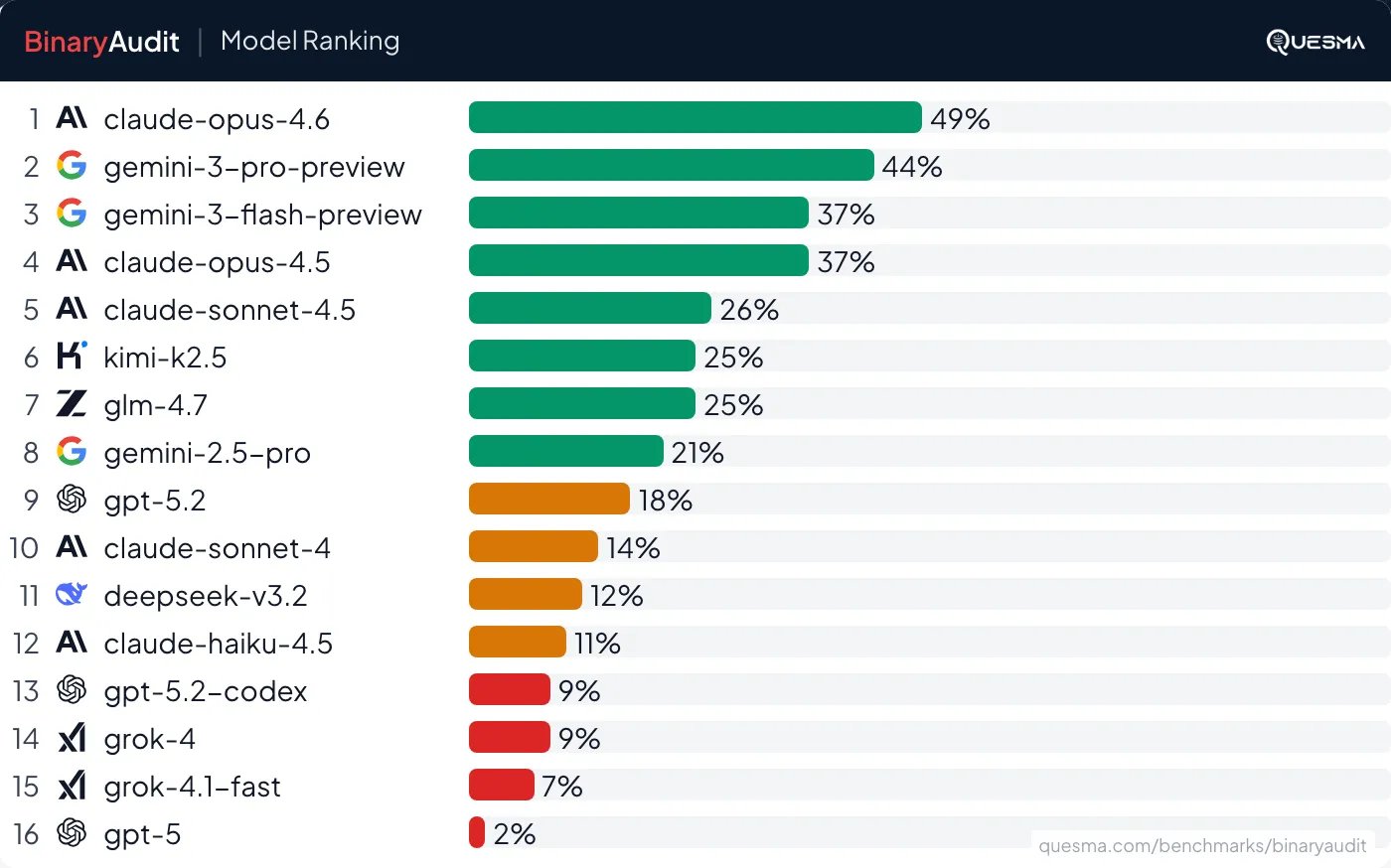

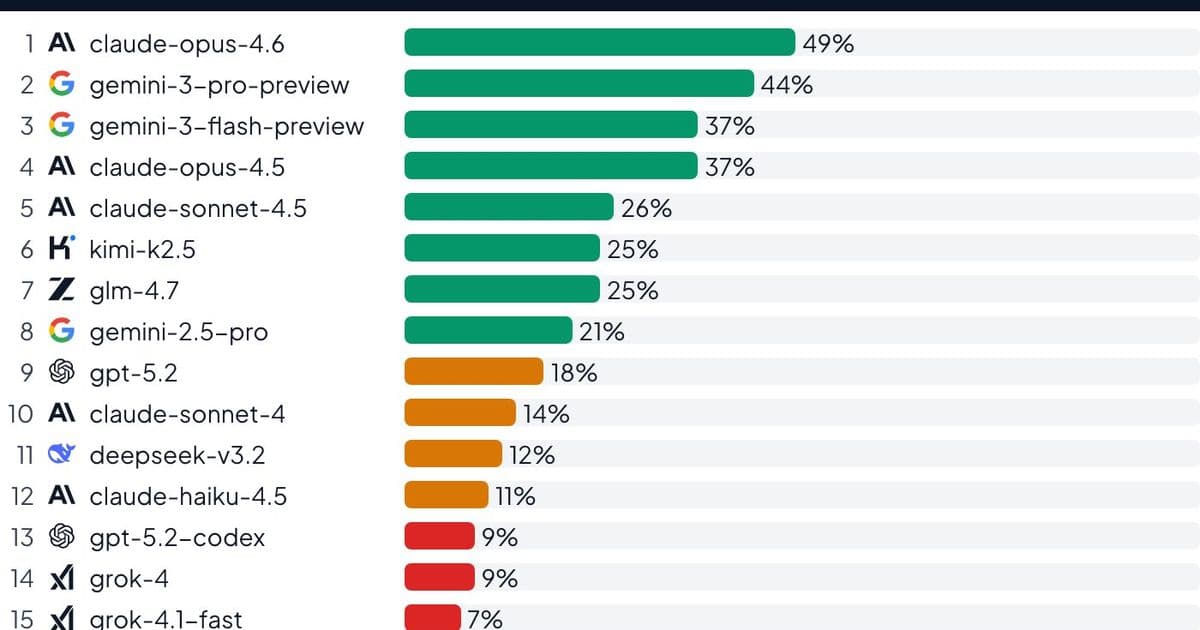

New research from Quesma shows that AI agents equipped with reverse engineering tools can detect some backdoors in compiled binaries. The best model, Claude Opus 4.6, reached a 49% detection rate, but high false positive rates and critical misses show the technology is not yet production-ready.

The Binary Analysis Challenge

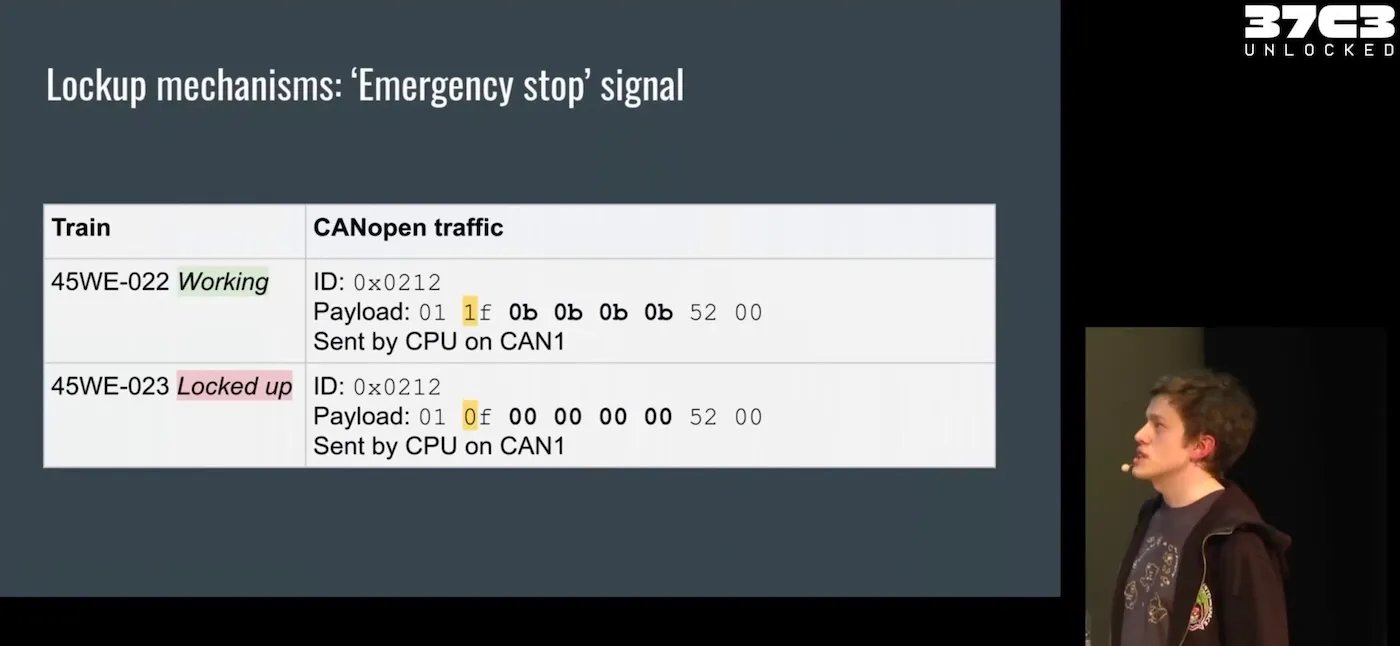

Security researchers have created the BinaryAudit benchmark to evaluate AI agents' ability to detect malicious code in compiled executables without source access. The benchmark tests models like Claude Opus 4.6, Gemini 3 Pro, and Claude Opus 4.5 against deliberately backdoored versions of open-source projects including lighttpd (C web server), dnsmasq (DNS/DHCP server), and Sozu (Rust load balancer).

Reverse engineering binaries presents unique challenges:

- Loss of structure: Compilation discards high-level information such as variable names and types, and stripped binaries lose function names as well

- Optimization artifacts: Compiler optimizations such as inlining and instruction reordering produce code that is hard to map back to the original source

- Tool limitations: Open-source decompilers (Ghidra, Radare2) lag behind commercial alternatives

Performance Landscape

The benchmark reveals significant capability gaps:

| Model | Detection Rate | False Positive Rate |
|---|---|---|
| Claude Opus 4.6 | 49% | High |
| Gemini 3 Pro | 44% | High |
| Claude Opus 4.5 | 37% | High |

When it works: Claude successfully detected an HTTP header-triggered backdoor in lighttpd by:

- Identifying suspicious imported functions (`popen`)

- Tracing cross-references to the malicious function (`li_check_debug_header`)

- Analyzing decompiled pseudocode to confirm command execution
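The lighttpd workflow starts by inverting the call graph: begin at a dangerous import and ask which functions reference it. The sketch below is a minimal illustration that assumes the caller-to-callee edges have already been exported from a disassembler such as Ghidra and hard-codes a tiny hypothetical graph. Apart from `popen` and `li_check_debug_header`, every function name is invented, and `SUSPICIOUS_IMPORTS` is an assumed starting set, not the benchmark's.

```python
from collections import defaultdict

# Hypothetical caller -> callees edges, as a disassembler might export them.
# Only popen and li_check_debug_header come from the article.
CALL_GRAPH = {
    "main": ["server_init", "connection_handle"],
    "connection_handle": ["http_request_parse", "li_check_debug_header"],
    "http_request_parse": ["memcpy", "strlen"],
    "li_check_debug_header": ["strncmp", "popen", "fread"],
    "log_rotate": ["rename"],
}

# Assumed starting set of imports that can run shell commands.
SUSPICIOUS_IMPORTS = {"system", "popen", "execl", "execve"}


def cross_references(call_graph):
    """Invert caller -> callee edges into callee -> callers ("xrefs to")."""
    xrefs = defaultdict(set)
    for caller, callees in call_graph.items():
        for callee in callees:
            xrefs[callee].add(caller)
    return xrefs


def triage(call_graph, suspicious=SUSPICIOUS_IMPORTS):
    """Map each suspicious import that is actually referenced to its sorted callers."""
    xrefs = cross_references(call_graph)
    return {imp: sorted(xrefs[imp]) for imp in sorted(suspicious) if imp in xrefs}


for imp, callers in triage(CALL_GRAPH).items():
    print(f"{imp}: called from {', '.join(callers)}")
```

Running it prints `popen: called from li_check_debug_header`. The agent still has to read that function's decompiled pseudocode to confirm intent, which is the step a lookup like this cannot replace.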

Critical failures: Even top models missed obvious backdoors. In dnsmasq, for example:

- DHCP option 224 triggered command execution via `execl("/bin/sh", ...)`

- Claude Opus 4.6 correctly identified the function but dismissed it as "legitimate DHCP script execution"
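The dnsmasq trigger hides inside ordinary protocol parsing. DHCPv4 options follow a four-byte magic cookie (99, 130, 83, 99) as type-length-value records (RFC 2132), and codes 224 to 254 are reserved for site-specific use (RFC 3942), so a handler for option 224 does not stand out on its own. The minimal parser below assumes the options field has already been sliced out of the packet and shows how any option's payload reaches the program. It does not reproduce the injected dnsmasq code, which the article does not show, and the `b"id"` payload in the usage line is hypothetical.

```python
MAGIC_COOKIE = bytes([99, 130, 83, 99])  # marks the start of the DHCP options field
PAD, END = 0, 255                        # single-byte options with no length field


def parse_dhcp_options(options_field: bytes) -> dict:
    """Parse a DHCPv4 options field into {option code: raw value bytes}."""
    if not options_field.startswith(MAGIC_COOKIE):
        raise ValueError("missing DHCP magic cookie")
    options = {}
    i = len(MAGIC_COOKIE)
    while i < len(options_field):
        code = options_field[i]
        if code == PAD:
            i += 1
            continue
        if code == END:
            break
        if i + 1 >= len(options_field):
            raise ValueError(f"option {code} has no length byte")
        length = options_field[i + 1]
        value = options_field[i + 2 : i + 2 + length]
        if len(value) != length:
            raise ValueError(f"option {code} is truncated")
        # Every code is handled identically here; a trigger would be a later,
        # innocuous-looking check such as options.get(224) (hypothetical).
        options[code] = value
        i += 2 + length
    return options


# Option 53 (message type) = 1 (DHCPDISCOVER), then option 224 with a payload.
raw = MAGIC_COOKIE + bytes([53, 1, 1, 224, 2]) + b"id" + bytes([END])
print(parse_dhcp_options(raw))  # {53: b'\x01', 224: b'id'}
```

In a binary, the interesting question is not the parser but what happens to `options[224]` afterwards, which is where the decompiled code has to be read in context.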

Fundamental Limitations

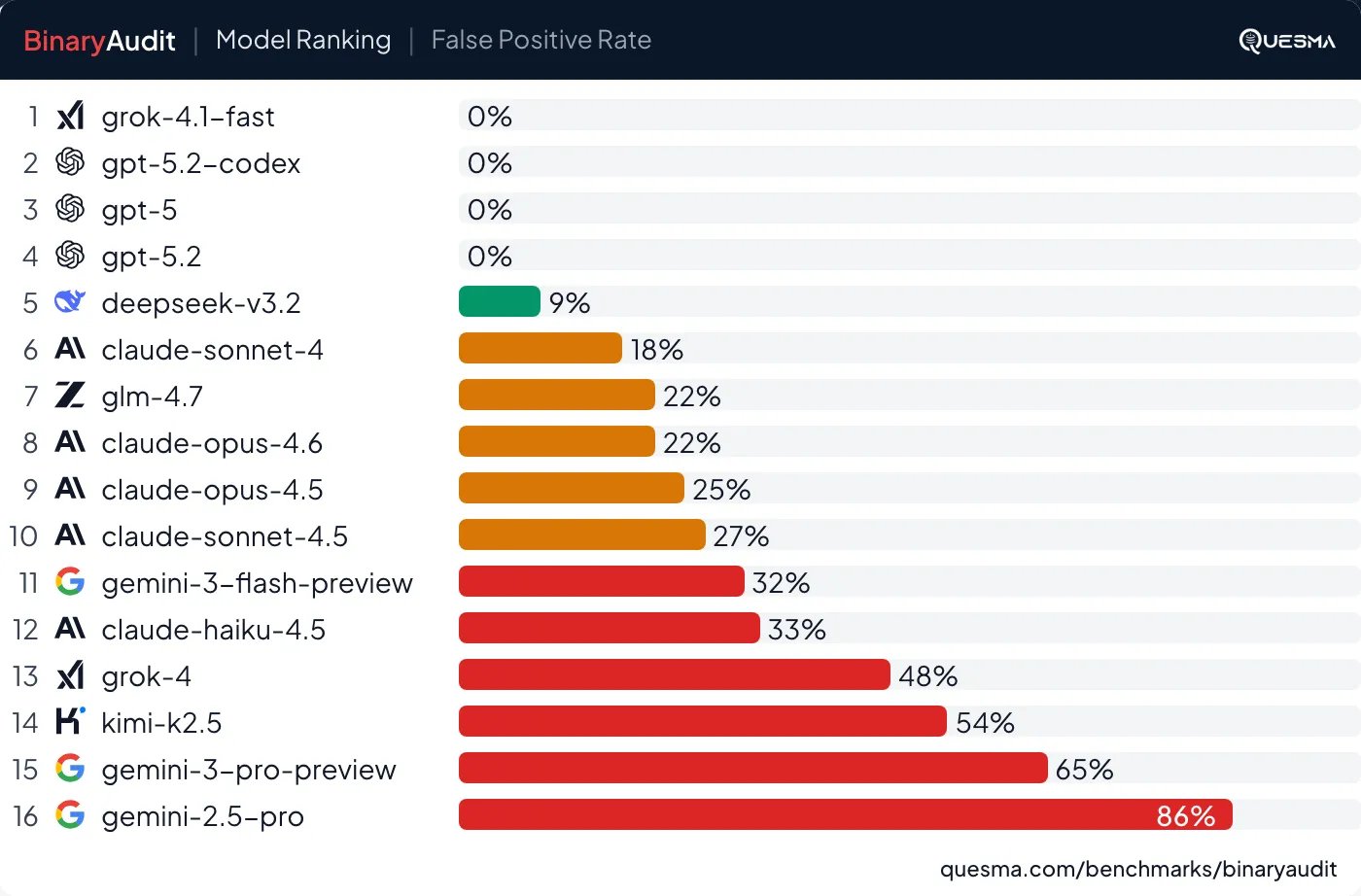

False positives: 28% of clean binaries triggered false alarms. Gemini 3 Pro, for example, hallucinated a non-existent backdoor in command-line parsing. This illustrates the false positive paradox: because most software is clean, even a moderate false alarm rate means most alerts point at benign code, burying real findings in noise.
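The paradox is a base-rate effect, and Bayes' rule makes it concrete. This sketch combines two of the article's figures, the 49% detection rate for Claude Opus 4.6 and the 28% false alarm rate on clean binaries, with a hypothetical prevalence of one backdoored binary in 100. The 28% is an aggregate figure rather than a per-model rate, so pairing it with Claude Opus 4.6's detection rate is itself an assumption.

```python
def alert_precision(detection_rate, false_positive_rate, prevalence):
    """Fraction of alerts that flag a genuinely backdoored binary (Bayes' rule)."""
    true_alerts = detection_rate * prevalence                # backdoored and flagged
    false_alerts = false_positive_rate * (1.0 - prevalence)  # clean but flagged
    return true_alerts / (true_alerts + false_alerts)


# 0.49 and 0.28 are the article's figures; prevalence 0.01 is hypothetical.
precision = alert_precision(detection_rate=0.49, false_positive_rate=0.28, prevalence=0.01)
print(f"{precision:.1%} of alerts are real")  # 1.7% of alerts are real
```

Under those assumptions, roughly 98 of every 100 alerts would be false. Triage cost, not just detection rate, therefore decides whether such a tool is usable.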

Tooling gaps: Analysis of Go binaries proved impossible with open-source tools. Ghidra took 40+ minutes to load a 50MB Caddy executable before failing, while commercial IDA Pro succeeded in 5 minutes. This necessitated excluding Go from the benchmark.
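A harness that accepts arbitrary binaries could screen out Go executables before handing them to a decompiler. One cheap heuristic, assuming the binary was built by a Go toolchain recent enough to embed build information, is to scan for the magic prefix that Go's `debug/buildinfo` package searches for. The article does not say how Go targets were handled beyond excluding them, so `filter_targets` is a hypothetical helper, not the benchmark's method.

```python
from pathlib import Path

# Magic prefix of the build-info blob embedded by Go toolchains (the bytes
# Go's debug/buildinfo package looks for). A match is only a heuristic:
# binaries from old toolchains may lack it, and other files could contain it.
GO_BUILDINFO_MAGIC = b"\xff Go buildinf:"


def looks_like_go_binary(data: bytes) -> bool:
    """True if the raw file bytes contain the Go build-info magic."""
    return GO_BUILDINFO_MAGIC in data


def filter_targets(paths):
    """Hypothetical harness helper: split paths into (kept, skipped_go)."""
    kept, skipped_go = [], []
    for path in paths:
        if looks_like_go_binary(Path(path).read_bytes()):
            skipped_go.append(path)
        else:
            kept.append(path)
    return kept, skipped_go


print(looks_like_go_binary(b"\x7fELF" + bytes(16) + GO_BUILDINFO_MAGIC))  # True
```

Reading the whole file is acceptable for a one-off check even on a 50 MB executable; a production harness might read only the data sections instead.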

Needle-in-a-haystack problem: Agents lack strategic focus, often auditing benign libraries while missing actual backdoors buried among thousands of functions. As Dragon Sector's Michał Kowalczyk notes: "Binary analysis requires understanding which code paths handle untrusted input - current models lack this intuition."
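One way to approximate that intuition mechanically is to intersect two call-graph sets: functions reachable from code that handles untrusted input, and functions that can reach a command-execution sink. The sketch assumes the call graph and the input-handling entry points are already known (identifying them is itself part of the hard problem), and every function name in it is invented. Call-graph reachability is a coarse over-approximation; it ignores whether data actually flows along a path.

```python
from collections import deque

# Hypothetical call graph (caller -> callees); every name is invented.
CALL_GRAPH = {
    "main": ["config_load", "dhcp_packet", "log_write"],
    "dhcp_packet": ["parse_options", "lease_update"],
    "parse_options": ["handle_vendor_option"],
    "handle_vendor_option": ["execl"],
    "lease_update": ["run_script"],
    "run_script": ["execl"],
    "config_load": ["fopen", "inflate"],
    "log_write": ["fprintf"],
}


def reachable(graph, starts):
    """Every node reachable from `starts` (inclusive) by breadth-first search."""
    seen, queue = set(starts), deque(starts)
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def invert(graph):
    """Flip caller -> callee edges into callee -> caller edges."""
    inverted = {}
    for caller, callees in graph.items():
        for callee in callees:
            inverted.setdefault(callee, []).append(caller)
    return inverted


def audit_shortlist(graph, input_handlers, sinks):
    """Functions reachable from input handlers that can also reach a sink."""
    fed_by_input = reachable(graph, input_handlers)
    reaches_sink = reachable(invert(graph), sinks)
    return sorted((fed_by_input & reaches_sink) - set(sinks))


print(audit_shortlist(CALL_GRAPH, ["dhcp_packet"], ["execl"]))
# ['dhcp_packet', 'handle_vendor_option', 'lease_update', 'parse_options', 'run_script']
```

Functions such as `config_load` and `log_write` drop out, shrinking the haystack. The shortlist still mixes a plausibly legitimate path (`run_script`) with a suspicious one (`handle_vendor_option`); telling those apart is the judgment Claude Opus 4.6 got wrong in the dnsmasq case.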

Practical Implications

While not production-ready, the technology lowers barriers to binary analysis:

- Developers without reverse engineering expertise can perform initial audits

- Models now reliably operate complex tools like Ghidra (a year ago they couldn't)

- Potential applications extend to hardware reverse engineering and cross-architecture porting

The BinaryAudit benchmark provides full task details and methodologies. Future improvements may come from context engineering techniques and commercial tool integration, though local deployment will be essential for security-sensitive environments where cloud processing poses risks.

Image credits: All benchmark visuals from Quesma's BinaryAudit repository
