The tech industry's push to replace professionals with AI isn't about capability—it's about eliminating workers who have the professional autonomy to tell their bosses 'no.'

The promise that AI will replace doctors, lawyers, and programmers has become a standard refrain in tech circles. But the real story isn't about technological capability—it's about power dynamics in the workplace. When we examine which professions are being targeted for AI replacement, a pattern emerges: these are precisely the workers whose professional codes of conduct give them the authority to refuse unreasonable or dangerous orders from management.

The Professional's Oath vs. The Boss's Ego

A professional isn't just someone paid to do a job. In its more meaningful sense, a professional is someone bound by a code of conduct that supersedes both employer demands and state authority. Consider the Hippocratic Oath: a doctor bound by the maxim "first do no harm" (a phrase popularly associated with the oath, though it does not appear in the classical text) is duty-bound to refuse orders to harm patients, whether those orders come from hospital administrators, police officers, or judges.

This same principle applies across what we call "professions": lawyers, accountants, medical professionals, librarians, teachers, and certain engineering disciplines. Each field has its own code of conduct, policed by professional associations with the power to bar members for misconduct. These codes exist for a reason—they protect the public, maintain standards, and give professionals the ethical backbone to push back against harmful directives.

Now consider which professions AI evangelists claim will be replaced by chatbots in the near future. It's the exact same list.

The Centaur vs. The Reverse Centaur

To understand why this matters, we need to distinguish between two ways AI can be used in professional work. A "centaur" is a human assisted by technology—think of a psychotherapist using AI to transcribe sessions so they can refresh their memory about exact phrases while making notes. The AI serves the professional's judgment.

A "reverse centaur" is the inverse: a machine assisted by people. This is what happens when a psychotherapist monitors 20 chat sessions with LLM "therapists," ready to intervene if the AI starts telling patients to kill themselves. The human becomes an "accountability sink"—installed to absorb blame when the AI harms someone, but unable to truly help patients.

The difference is crucial. In the centaur model, the professional retains agency and responsibility. In the reverse centaur model, the professional becomes a human shield for algorithmic failures, their expertise reduced to damage control.

Why Bosses Are Easy Marks

If AI can't actually do these jobs, why are bosses so eager to buy the hype? The answer reveals a fundamental conflict in modern workplaces.

First, there's a practical dimension: many AI systems can handle the bureaucratic overhead of management, such as answering emails, delegating tasks, and taking credit for successes while blaming failures on subordinates. If 90% of a boss's job is coordination and political maneuvering, an AI might indeed handle it, and a boss who watches a chatbot do most of their own job can easily conclude that it can do everyone else's too.

But there's a deeper psychological dimension. Bosses are especially susceptible to AI sales pitches when the promise is to replace workers who can tell them to fuck off. This explains the enthusiasm for replacing professionals with chatbots: it would eliminate the only workers professionally required to refuse stupid or dangerous orders.

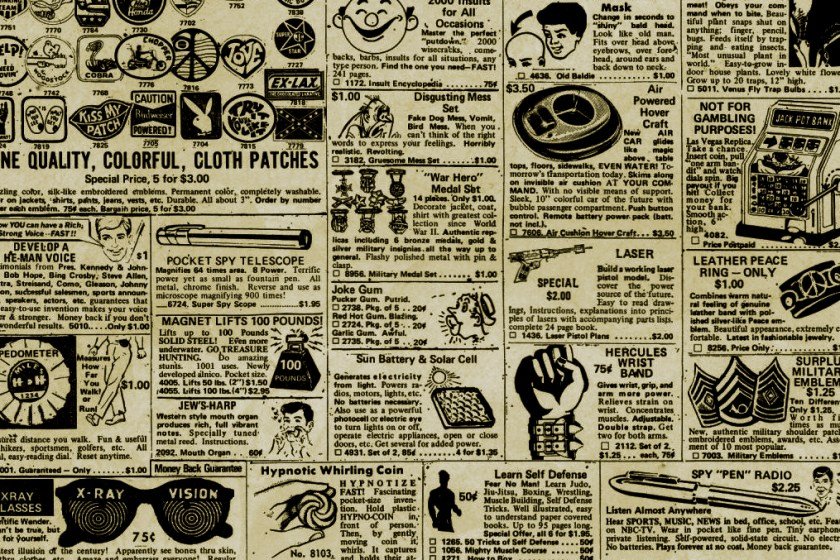

Consider screenwriters and actors. When a studio boss gives notes like "Give me ET, but make it about a dog, give it a love interest, and put a car chase in Act III," human writers will push back. They'll explain why those changes would ruin the story, demand the boss stop bothering them, and insist on creative autonomy. An LLM will cheerfully generate a terrible script to spec. The script's quality matters less than the fact that swapping writers for AI lets studio bosses escape ego-shattering conflicts with empowered workers.

The same dynamic plays out in programming. When tech companies faced a shortage of skilled developers, they lured talent with luxurious benefits, lavish pay, and collegial relationships. Engineering meetings allowed techies to tell bosses their strategies were stupid. Now that the supply of programmers has caught up with demand, bosses relish firing these "entitled" coders and replacing them with chatbots overseen by traumatized reverse centaurs who will never challenge authority.

The Union Connection

This pattern extends beyond white-collar professions. Bosses are equally eager to use AI to replace workers who might unionize: drivers, factory workers, warehouse workers. A union is fundamentally an institution that lets workers tell their boss to fuck off collectively. It is the collective counterpart of the professional's individual right to refuse.

The AI sales pitch promises a world where everyone—human and machine—follows orders to the letter and praises management for giving such clever orders. It's not about efficiency; it's about eliminating friction in the employer-employee relationship.

The Reality Check

AI salesmen aren't slick enough to hide their product's limitations. Chatbots can't actually perform professional work. They can't exercise judgment, maintain ethical boundaries, or take responsibility for outcomes. When a lawyer uses a chatbot to format a brief, that might work. When junior lawyers are required to write briefs at inhuman speed using AI, the result is briefs full of "hallucinated" citations—fabricated legal precedents that could destroy a case.

The problem isn't that AI is useless. It's that the business model driving AI adoption treats it as a replacement rather than an augmentation. Employers dream of reverse centaurs because they want machines that can't refuse orders, backed by humans who can be blamed for failures.

What This Means for Workers

The professional code of conduct—whether formal like a doctor's oath or implicit like a programmer's commitment to clean code—represents a form of workplace democracy. It gives workers the authority to say no when management demands something unethical, dangerous, or incompetent.

AI adoption in these fields isn't primarily about improving service or increasing efficiency. It's about dismantling the structures that give professionals autonomy. The chatbot doesn't need a Hippocratic Oath. It doesn't have a professional association that can revoke its license. It will generate whatever output the prompt demands, regardless of consequences.

This explains why the push for AI replacement is strongest in fields where professional autonomy has been most successfully defended. The tech industry's own programmers, with their history of engineering-led decision-making and pushback against bad management ideas, are now prime targets for AI replacement. The goal isn't to improve code quality; it's to eliminate workers who can say "this technical strategy is stupid" and be heard.

The Broader Pattern

What we're witnessing isn't a technological revolution but a labor counter-revolution. The same forces that have spent decades weakening unions, outsourcing work, and creating gig economy precarity are now using AI as their latest tool to eliminate worker power.

The professional code of conduct, whether formal or informal, represents a last line of defense for worker autonomy. It's what gives a doctor the right to refuse to harm a patient, a lawyer the right to refuse to present a frivolous case, and a programmer the right to refuse to ship insecure code. AI doesn't have these rights, and more importantly, it doesn't have the judgment to know when to exercise them.

The real question isn't whether AI can replace professionals. It's whether we're willing to sacrifice professional autonomy for the convenience of management. Every time we replace a professional with a chatbot, we're not just changing how work gets done—we're changing who has the authority to say no when things go wrong.

The AI salesmen promise a world of perfect compliance and efficiency. What they're really selling is a world without accountability, where no one has the professional standing to refuse a harmful order. That's not progress. That's the erosion of the very structures that make professional work valuable in the first place.

The Path Forward

Workers in these fields face a choice: accept the reverse centaur model and become human shields for algorithmic failures, or organize to defend professional autonomy in the age of AI. This might mean developing new codes of conduct specifically for AI-assisted work, or strengthening existing professional associations to address algorithmic management.

The alternative is a future where professionals are reduced to monitoring AI systems, taking responsibility for their failures without having the authority to prevent them. In that world, the only people who can truly tell their bosses to fuck off will be the ones whose jobs haven't been automated yet—and their number is shrinking every day.

The battle over AI in professional fields isn't really about technology. It's about power, autonomy, and the right to refuse. And that's a battle worth fighting.
