Researchers developed an agentic AI system using ChatGPT-4o to monitor 3D prints and catch errors in real time, potentially reducing failure rates from 7% to near-zero and making additive manufacturing more competitive.

Researchers from Carnegie Mellon University's Department of Mechanical Engineering have developed a system that uses multiple large language model (LLM) agents to monitor 3D printers and correct print errors in real time, an approach that could substantially improve quality control in additive manufacturing.

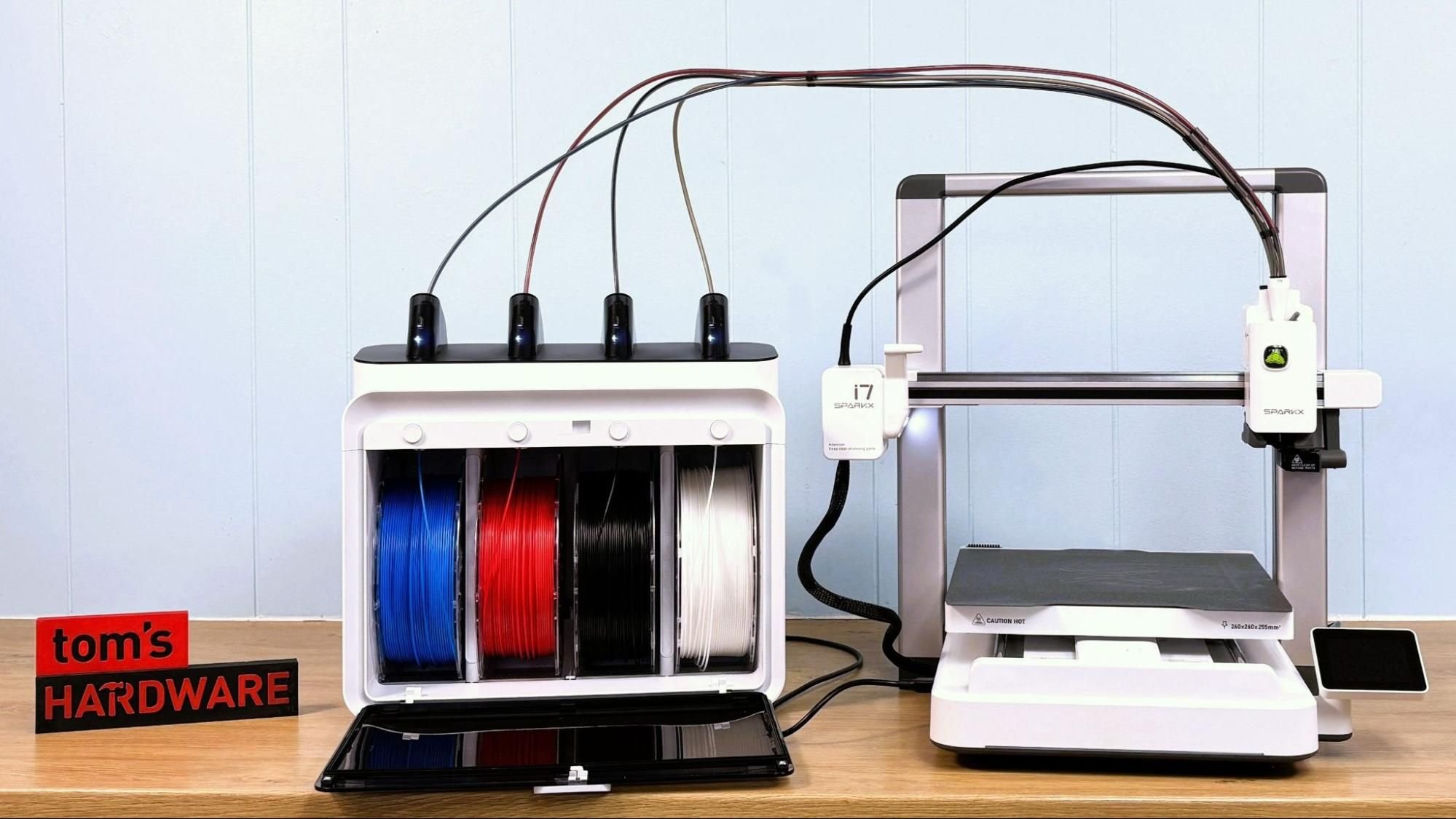

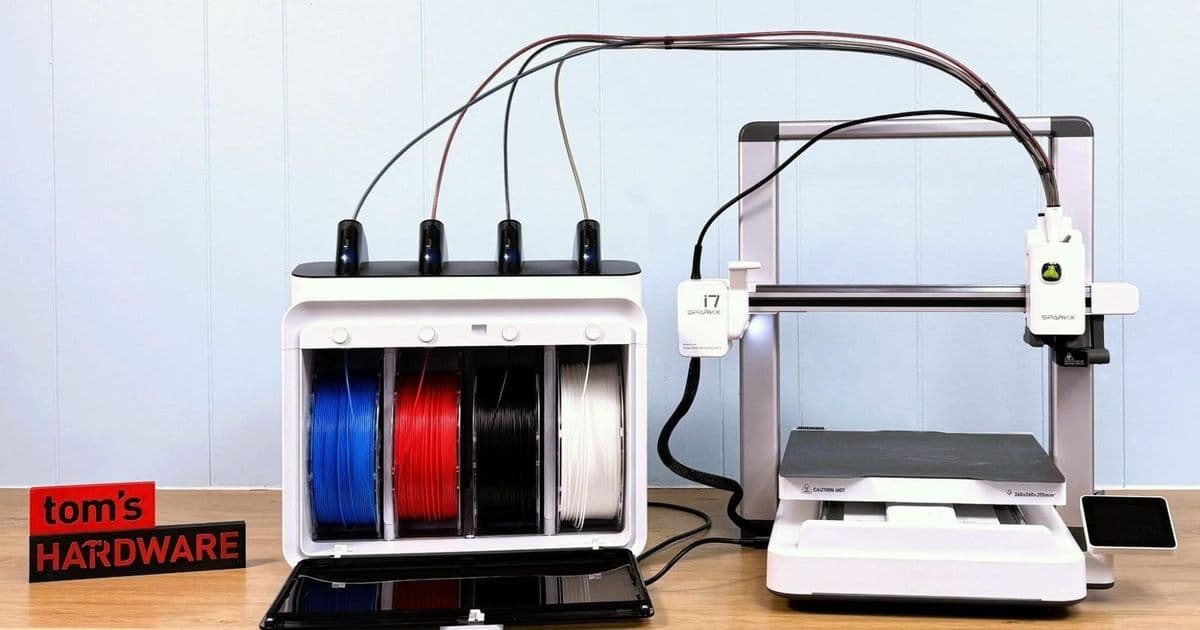

The system addresses a critical pain point in 3D printing: failure rates that remain stubbornly high despite decades of technological advancement. According to Prusa3D, approximately 7% of prints on its MMU2S printer fail completely, while another 19% require user intervention. Together, that means roughly one in four prints (about 26%) runs into problems that demand human attention.

For casual hobbyists running a single printer, manual monitoring might be manageable. For manufacturing operations, however, these failure rates mean significant waste and lost productivity. Manufacturing standards in the 1980s aimed for failure rates of around 5%; modern expectations have tightened to approximately 0.1%. A 7% failure rate is worse than even the 1980s benchmark and roughly 70 times the modern target, which makes 3D printing less competitive than traditional manufacturing processes wherever quality and reliability are paramount.
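The gap between these figures is easiest to see as expected failures per batch. The short Python calculation below uses only the rates quoted above; the 1,000-print batch size is a hypothetical value chosen for illustration.

```python
# Expected failures per batch, using the rates quoted in the text.
# BATCH is a hypothetical batch size chosen for illustration.
BATCH = 1000
FAIL_RATE = 0.07        # prints that fail completely (Prusa3D figure)
INTERVENE_RATE = 0.19   # prints that need user intervention (Prusa3D figure)
LEGACY_TARGET = 0.05    # ~5% target of 1980s manufacturing standards
MODERN_TARGET = 0.001   # ~0.1% modern manufacturing expectation


def expected_count(batch_size: int, rate: float) -> float:
    """Expected number of affected prints in a batch at a given rate."""
    return batch_size * rate


needs_attention = FAIL_RATE + INTERVENE_RATE   # 0.26, roughly one in four
gap = FAIL_RATE / MODERN_TARGET                # how far 7% is from 0.1%

print(f"Prints needing attention: {needs_attention:.0%}")
print(f"Failures per {BATCH} prints at 7%: {expected_count(BATCH, FAIL_RATE):.0f}")
print(f"Failures per {BATCH} at the 1980s target: {expected_count(BATCH, LEGACY_TARGET):.0f}")
print(f"Failures per {BATCH} at the modern target: {expected_count(BATCH, MODERN_TARGET):.0f}")
print(f"7% is about {gap:.0f}x the modern target")
```

For a 1,000-print batch, that works out to about 70 failed prints at 7%, against 50 under the 1980s target and just 1 under today's 0.1% expectation.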

How the AI Monitoring System Works

The Carnegie Mellon team's solution uses a multi-agent architecture built around ChatGPT-4o, which avoids the need for custom-trained models. At its core are four specialized agents working in concert:

Visual-Language Model Agent: Captures images after each printed layer and analyzes them for quality issues and defects. This agent serves as the system's eyes, catching defects that an operator might overlook, including problems that develop gradually over the course of a print.

Printer Settings Analysis Agent: Examines current printer configurations to identify what adjustments are needed to address detected issues. This agent understands the relationship between printer parameters and print quality.

Solution Planner Agent: Creates actionable plans based on the analysis, determining the optimal sequence of corrections needed to salvage or improve the print.

Executor Agent: Interfaces directly with the 3D printer through its API to implement the planned corrections, making real-time adjustments to temperature, speed, extrusion, or other parameters.

A fifth Supervisor Agent oversees the entire process, ensuring information flows correctly between agents and that all decisions remain relevant and up-to-date throughout the printing process.
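To make the division of labor concrete, the per-layer loop can be sketched in Python. This is a minimal, hypothetical sketch, not the Carnegie Mellon implementation: each agent is a plain function standing in for what would be a ChatGPT-4o call in the described system, the defect-to-parameter mapping, step sizes, and safety limits are invented for illustration, and the Executor is assumed to drive a Marlin-compatible printer using the standard `M104` (hotend temperature), `M220` (speed percentage), and `M221` (flow percentage) G-code commands. The source does not say which printer API or commands the team used.

```python
from dataclasses import dataclass, field

# Hypothetical sketch of one supervisor-driven monitoring step per printed layer.
# Each "agent" is a stub; in the described system each would be an LLM call.


@dataclass
class PrinterState:
    nozzle_temp_c: float = 210.0
    speed_pct: int = 100
    flow_pct: int = 100


@dataclass
class Context:
    """Shared state the supervisor keeps current between layers."""
    layer: int
    state: PrinterState
    log: list = field(default_factory=list)


# Assumed safe operating ranges (illustrative values only).
LIMITS = {"nozzle_temp_c": (190, 230), "speed_pct": (50, 150), "flow_pct": (90, 110)}
# G-code used to apply each parameter (assumes Marlin-style firmware).
GCODE = {"nozzle_temp_c": "M104", "speed_pct": "M220", "flow_pct": "M221"}


def vision_agent(image: bytes) -> list:
    """Stub for the vision-language agent: flags a defect in a fake image."""
    return ["under_extrusion"] if b"gaps" in image else []


def settings_agent(defects: list) -> dict:
    """Map each detected defect to the setting assumed to cause it."""
    causes = {"under_extrusion": "flow_pct", "stringing": "nozzle_temp_c"}
    return {d: causes[d] for d in defects if d in causes}


def planner_agent(diagnosis: dict, state: PrinterState) -> list:
    """Turn the diagnosis into (parameter, new_value) corrections."""
    steps = {"flow_pct": +5, "nozzle_temp_c": -5}  # hypothetical step sizes
    return [(p, getattr(state, p) + steps[p]) for p in diagnosis.values()]


def executor_agent(plan: list, state: PrinterState) -> list:
    """Clamp each correction to its safe range, update state, emit G-code."""
    commands = []
    for param, value in plan:
        lo, hi = LIMITS[param]
        value = min(max(value, lo), hi)
        setattr(state, param, value)
        commands.append(f"{GCODE[param]} S{value:.0f}")
    return commands


def supervisor_step(image: bytes, ctx: Context) -> list:
    """Route one layer's image through all four agents and log the outcome."""
    defects = vision_agent(image)
    if not defects:
        ctx.log.append(f"layer {ctx.layer}: ok")
        return []
    plan = planner_agent(settings_agent(defects), ctx.state)
    commands = executor_agent(plan, ctx.state)
    ctx.log.append(f"layer {ctx.layer}: {defects} -> {commands}")
    return commands


# Simulate four layers, three of which show under-extrusion.
ctx = Context(layer=0, state=PrinterState())
issued = []
for layer, image in enumerate([b"clean", b"gaps", b"gaps", b"gaps"]):
    ctx.layer = layer
    issued.append(supervisor_step(image, ctx))

for entry in ctx.log:
    print(entry)
```

Clamping each planned value to a fixed range in the Executor is one simple way to keep a mistaken plan from pushing the printer into unsafe settings: in this sketch, the third flagged layer would request 115% flow and is held at 110%.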

The Significance of Using Base ChatGPT-4o

What makes this system particularly noteworthy is its use of the base ChatGPT-4o model rather than specialized, custom-trained LLMs. The research team developed domain-specific, generalized structured prompts that enable the system to work effectively without requiring extensive training on specialized datasets.
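The article does not reproduce the team's prompts, but the general idea of a structured prompt can be sketched. In this hypothetical Python example, the prompt wording, the placeholder fields, and the JSON reply schema are all assumptions made for illustration. The point is that fixing the role, context, task, and output format lets a general-purpose model return answers that downstream agents can parse and check. The layer image itself would be sent alongside the text; that step is omitted here.

```python
import json
from string import Template

# Hypothetical structured prompt for the vision agent. The wording and the
# JSON schema are illustrative assumptions, not the CMU team's prompts.
DEFECT_PROMPT = Template("""\
ROLE: You are an expert in FDM 3D printing quality inspection.
CONTEXT: Material: $material. Layer $layer of $total_layers.
Current settings: nozzle $nozzle_temp C, speed $speed_pct%, flow $flow_pct%.
TASK: Inspect the attached image of the most recent layer and list any defects.
OUTPUT: Respond with JSON only, in the form
{"defects": [{"type": "<name>", "severity": "low|medium|high"}]}
""")

ALLOWED_SEVERITIES = {"low", "medium", "high"}


def build_prompt(**fields) -> str:
    """Fill every placeholder; Template.substitute raises KeyError if one is missing."""
    return DEFECT_PROMPT.substitute(**fields)


def parse_reply(reply: str) -> list:
    """Parse the model's JSON reply and reject anything off-schema."""
    data = json.loads(reply)
    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")
    defects = data.get("defects", [])
    for d in defects:
        if d.get("severity") not in ALLOWED_SEVERITIES:
            raise ValueError(f"unexpected severity: {d!r}")
    return defects


prompt = build_prompt(material="PLA", layer=12, total_layers=80,
                      nozzle_temp=210, speed_pct=100, flow_pct=100)
reply = '{"defects": [{"type": "stringing", "severity": "low"}]}'  # example reply
defects = parse_reply(reply)
print(prompt)
print(defects)
```

Validating the reply before passing it on means a malformed or off-schema answer is caught at the agent boundary rather than turning into a printer command.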

This approach offers several advantages:

- Simplicity of implementation: Organizations can deploy the system without the overhead of training custom models

- Ease of improvement: Improvements to the base model can carry over to the system without retraining

- Flexibility: The same architecture can potentially work across different printer makes and models

- Cost-effectiveness: Avoids the expense of developing and maintaining custom AI models

Industry Impact and Future Implications

"The future is adaptive," said Associate Professor Amir Barati Farimani from Carnegie Mellon's Department of Mechanical Engineering. "The integration of LLMs into the 3D printing process represents a significant advancement. As these models evolve, their ability to reason over richer, multimodal data will unlock even more capabilities. For now, this work provides a foundation for truly intelligent and autonomous manufacturing systems, capable of achieving unprecedented levels of precision and reliability."

The technology could fundamentally change how 3D printing is used in manufacturing. If failure rates can be pushed from 7% toward the modern industry standard of 0.1%, additive manufacturing becomes far more competitive for production runs where quality consistency is critical.

In the near term, this means manufacturers could deploy 3D printing for more applications without the overhead of constant human monitoring. In the longer term, as the technology matures, printer cameras might feed directly into AI control loops, eliminating the need for manual babysitting entirely.

Current Limitations and Next Steps

While promising, the system still faces challenges before widespread adoption. The research demonstrates proof of concept, but real-world deployment will require:

- Integration with various printer APIs and control systems

- Testing across different materials and printing technologies

- Validation of the system's ability to handle complex, multi-stage prints

- Development of fail-safe mechanisms for when AI corrections don't work as intended

For now, users will still need to monitor their prints manually and intervene when problems arise. But the Carnegie Mellon system represents a significant step toward autonomous, intelligent manufacturing that could make 3D printing a more viable option for quality-critical production environments.

The research team's work suggests that the combination of computer vision, language models, and real-time control systems could address one of additive manufacturing's most persistent challenges. As AI models continue to improve their reasoning capabilities and ability to process multimodal data, systems like this could become standard features on industrial 3D printers within the next few years.
