Chrome’s new Gemini‑powered agent introduces a multi‑layered safety net—an independent User Alignment Critic, origin‑set gating, and user‑prompt confirmations—to guard against indirect prompt injection and data exfiltration. The rollout showcases how browser security can evolve alongside generative AI, blending deterministic rules with on‑device AI checks.

Chrome’s New Gemini‑Powered Agent: A Security Playbook

In a bold move that blends browser security with generative AI, Google’s Chrome team unveiled a suite of safeguards designed to protect users from the emerging threat of indirect prompt injection in its upcoming Gemini‑powered agent. The new architecture, detailed in a recent Google Security Blog post, layers deterministic checks, a dedicated alignment critic, and origin‑set isolation to keep user data and transactions safe while still delivering the convenience of an AI assistant.

The Threat Landscape

Indirect prompt injection occurs when malicious content—whether from a compromised site, an iframe, or user‑generated reviews—manipulates an LLM to take unintended actions. In a browser context, that could mean the agent initiating a bank transfer or exfiltrating credentials. Chrome’s 15‑year legacy of safeguarding users now extends to this new frontier.

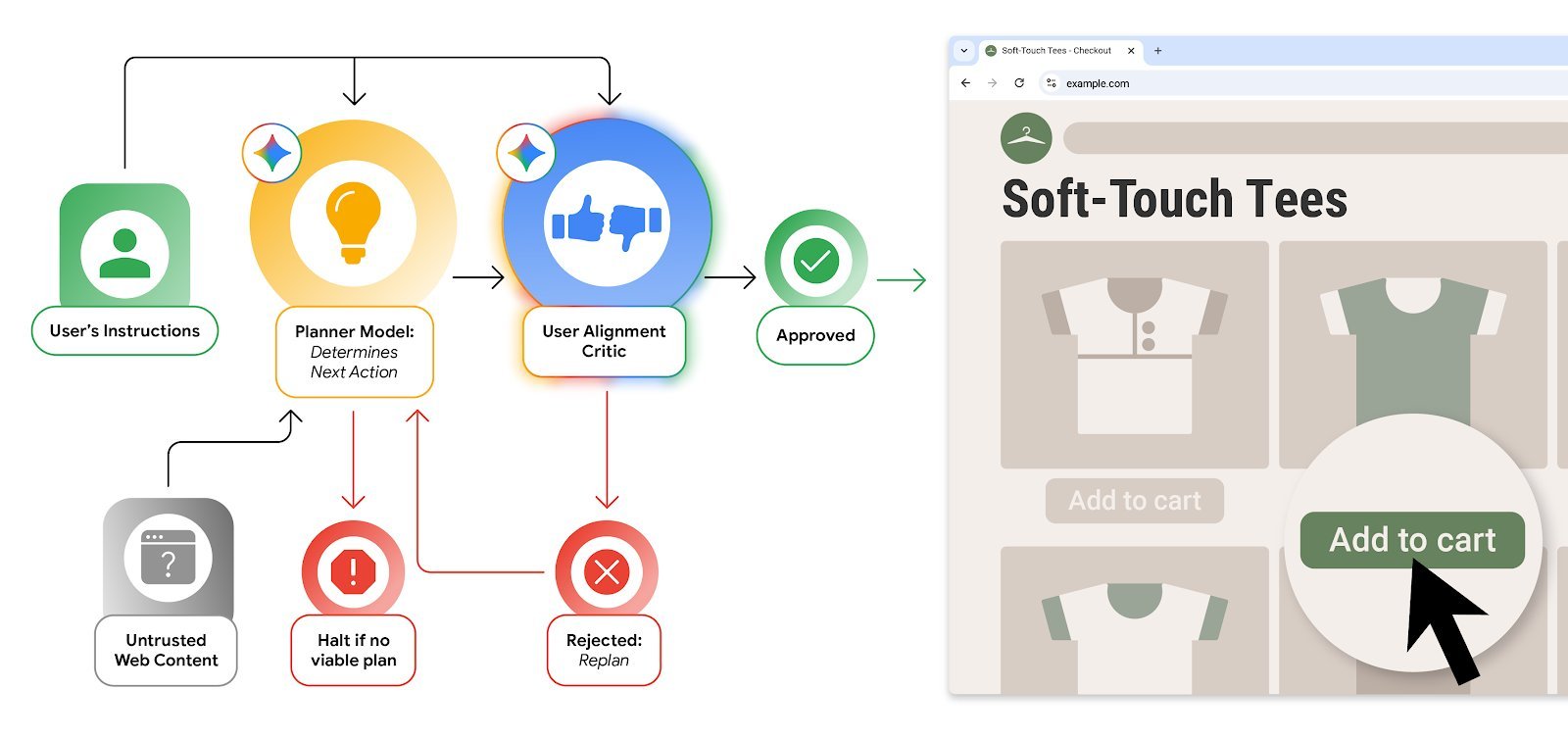

A Dual‑LLM Defense: The User Alignment Critic

The core of Chrome’s strategy is a User Alignment Critic, a lightweight Gemini instance that runs after the main planner has drafted an action. The critic receives only metadata about the proposed step—no raw page content—and votes to approve or veto it.

"The critic can see the action’s intent but not the untrusted web content that might poison it," explains Nathan Parker, a Chrome security engineer.

If the critic rejects an action, the planner is forced to re‑formulate the plan or hand control back to the user, creating a natural feedback loop that thwarts goal‑hijacking.

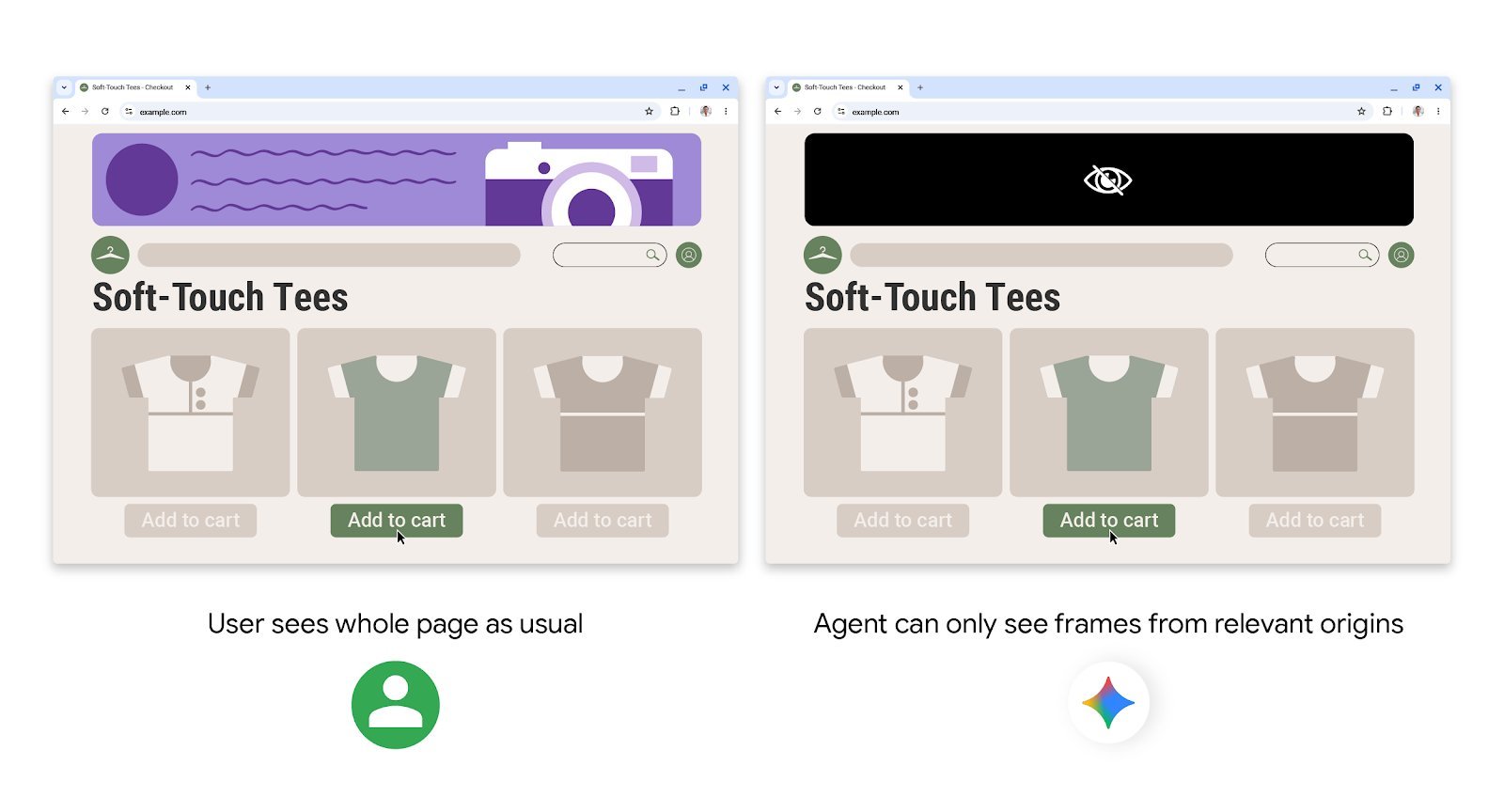

Origin‑Set Gating: Containing the Agent’s Reach

Because agents must cross site boundaries—e.g., gathering ingredients from one store and adding them to a cart on another—Chrome introduces Agent Origin Sets. These sets split origins into:

- Read‑only: The agent can consume content but cannot interact.

- Read‑writable: The agent may both read and perform actions.

A gating function, protected from untrusted input, determines which origins belong in each set for a given task. If the agent proposes a new origin, it must pass a User Alignment Critic check before being added.

Transparent, User‑Controlled Actions

Beyond automated checks, Chrome keeps the user in the loop for high‑stakes operations. The agent logs every step in a visible work log and pauses for confirmation before:

- Navigating to sensitive sites (banking, medical portals).

- Signing in via Google Password Manager.

- Completing payments or sending messages.

These deterministic guards are complemented by an on‑device prompt‑injection classifier that scans pages in real time, flagging content designed to trick the model.

Continuous Auditing and Community Collaboration

To validate the defenses, Chrome employs automated red‑teaming that generates sandboxed malicious sites and evaluates the agent’s resilience. The team also invites external researchers through an updated Vulnerability Rewards Program, offering up to $20,000 for breakthroughs that breach agentic security boundaries.

Looking Ahead

Chrome’s layered approach—combining deterministic policies, a trusted critic, and real‑time scanning—sets a new benchmark for browser‑based AI safety. While the field of web agents is still nascent, this architecture demonstrates that robust security can coexist with powerful generative capabilities.

As Gemini’s agentic features roll out, developers and security practitioners will watch closely to see how these safeguards perform in the wild and whether they can be adapted for other browser‑based AI deployments.

Source: Google Security Blog, “Architecting Security for Agentic Browsers,” 2025‑12‑08.

Comments

Please log in or register to join the discussion