ClickHouse has acquired Langfuse, the LLM engineering platform, in a move that brings together two complementary technologies. The acquisition aims to accelerate Langfuse's development while maintaining its open-source commitment and self-hosting capabilities.

Langfuse helps teams monitor, evaluate, and iterate on production AI applications. The deal, announced on January 16, 2026, marks a significant consolidation in the LLM observability space.

What Actually Changed

For existing Langfuse users, nothing changes in the near term. The product, pricing, support channels, and roadmap remain the same. Langfuse stays open source under its current license, and Langfuse Cloud continues to operate as before. The entire Langfuse team is joining ClickHouse and will keep building Langfuse.

What changes is the operational backing. ClickHouse brings infrastructure expertise, customer success playbooks, and engineering resources that Langfuse, as an independent startup, would have needed years to develop.

The Technical Foundation

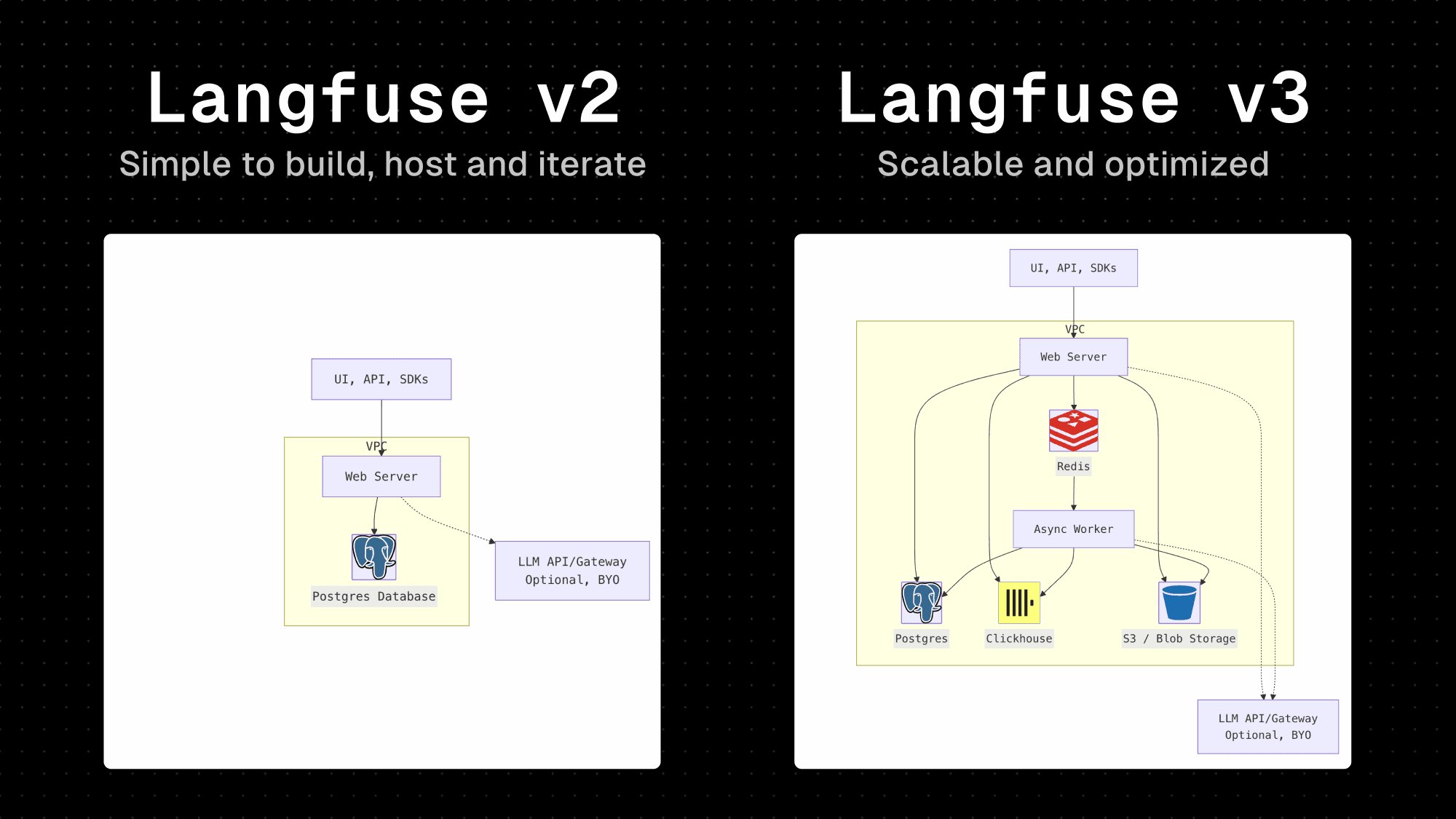

This acquisition didn't emerge from a cold outreach. Langfuse has been running on ClickHouse since the v3 release, which migrated the core data layer from Postgres to ClickHouse to handle high-throughput ingestion and fast analytical reads.

The migration addressed a critical bottleneck. As Langfuse adoption grew, Postgres couldn't efficiently support the workload: continuous trace ingestion from production LLM applications combined with real-time analytical queries for debugging and evaluation. ClickHouse's columnar storage and distributed architecture solved this.

Langfuse v3 architecture showing ClickHouse integration
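High-throughput ingestion into ClickHouse is usually batched: ClickHouse's documentation recommends fewer, larger inserts over many single-row ones, so pipelines buffer events and flush them in bulk. Below is a minimal, stdlib-only Python sketch of that pattern. It assumes a caller-supplied `flush_fn` that performs one bulk write. `TraceBatcher`, `max_rows`, `max_age_s`, and `tick` are hypothetical names, and this is not Langfuse's actual ingestion code.

```python
import time
from typing import Any, Callable, Dict, List, Optional

Event = Dict[str, Any]


class TraceBatcher:
    """Buffer trace events and hand them to `flush_fn` in batches (hypothetical helper).

    A flush happens when the buffer reaches `max_rows` rows or when the oldest
    buffered event is at least `max_age_s` seconds old, whichever comes first.
    """

    def __init__(
        self,
        flush_fn: Callable[[List[Event]], None],
        max_rows: int = 10_000,
        max_age_s: float = 1.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.flush_fn = flush_fn  # assumed to perform one bulk write, e.g. a single INSERT
        self.max_rows = max_rows
        self.max_age_s = max_age_s
        self.clock = clock
        self._buffer: List[Event] = []
        self._oldest: Optional[float] = None  # arrival time of the oldest buffered event

    def add(self, event: Event) -> None:
        if not self._buffer:
            self._oldest = self.clock()
        self._buffer.append(event)
        if len(self._buffer) >= self.max_rows or self._is_stale():
            self.flush()

    def tick(self) -> None:
        """Call periodically so a quiet stream still gets flushed."""
        if self._is_stale():
            self.flush()

    def _is_stale(self) -> bool:
        return self._oldest is not None and self.clock() - self._oldest >= self.max_age_s

    def flush(self) -> None:
        if self._buffer:
            batch, self._buffer, self._oldest = self._buffer, [], None
            self.flush_fn(batch)


# Usage: collect batches in a list instead of writing to a database.
batches: List[List[Event]] = []
batcher = TraceBatcher(batches.append, max_rows=3, max_age_s=60.0)
for i in range(7):
    batcher.add({"trace_id": f"t{i}", "latency_ms": 100 + i})
batcher.flush()  # drain the remainder, e.g. on shutdown
print([len(b) for b in batches])  # [3, 3, 1]
```

The age check runs only when `add` or `tick` is called, so a real pipeline would drive `tick` from a timer or a queue-backed worker. The two thresholds trade write efficiency against how quickly new traces become queryable.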

The companies had already been collaborating organically. ClickHouse Cloud is itself a Langfuse customer and uses it to optimize its own agentic applications. Langfuse users, in turn, are introduced to ClickHouse when they upgrade from v2 to v3. The two companies have also hosted joint meetups in Berlin, San Francisco, and Amsterdam.

Why This Makes Sense

LLM engineering platforms face unique infrastructure challenges:

Data volume: Production applications generate millions of traces, each containing prompts, responses, metadata, and user feedback. Storing and querying this efficiently requires a database built for high-volume writes and analytical reads, not a general-purpose transactional store.

Query patterns: Engineers need to slice trace data by model version, prompt template, error type, or user segment—often combining these filters in ad-hoc ways during debugging sessions.

Real-time requirements: Unlike batch ML training monitoring, LLM debugging requires sub-second query responses to maintain iteration velocity.
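The query-pattern point can be made concrete with a toy column-oriented table in plain Python. Each attribute lives in its own list, and an ad-hoc query combines any number of equality filters with a group-by while reading only the columns it references. This is a conceptual sketch of why a columnar layout suits this workload, assuming a uniform trace schema. It is not how ClickHouse is implemented, and the column names (`model`, `prompt_version`, `error_type`, `latency_ms`) and helpers (`to_columns`, `query`) are hypothetical.

```python
from collections import defaultdict
from typing import Any, Dict, List, Sequence

# A toy trace table stored column-wise: one Python list per attribute.
Columns = Dict[str, List[Any]]


def to_columns(rows: Sequence[Dict[str, Any]]) -> Columns:
    """Pivot row-shaped trace records (assumed to share one schema) into columns."""
    if not rows:
        return {}
    return {key: [row[key] for row in rows] for key in rows[0]}


def query(cols: Columns, filters: Dict[str, Any], group_by: str, metric: str) -> Dict[Any, float]:
    """Average `metric` per `group_by` value over rows matching every equality filter.

    Only the filter columns, the group-by column, and the metric column are read.
    """
    selected: List[int] = list(range(len(cols[metric])))
    # Narrow the candidate row indices one filter column at a time.
    for column, wanted in filters.items():
        values = cols[column]
        selected = [i for i in selected if values[i] == wanted]
    sums: Dict[Any, float] = defaultdict(float)
    counts: Dict[Any, int] = defaultdict(int)
    keys, metrics = cols[group_by], cols[metric]
    for i in selected:
        sums[keys[i]] += metrics[i]
        counts[keys[i]] += 1
    return {k: sums[k] / counts[k] for k in sums}


traces = [
    {"model": "model-a", "prompt_version": 3, "error_type": None, "latency_ms": 820},
    {"model": "model-a", "prompt_version": 4, "error_type": "timeout", "latency_ms": 5000},
    {"model": "model-b", "prompt_version": 4, "error_type": None, "latency_ms": 640},
    {"model": "model-a", "prompt_version": 4, "error_type": None, "latency_ms": 900},
]
cols = to_columns(traces)
# Ad-hoc debugging question: latency per model for prompt v4, successful calls only.
print(query(cols, {"prompt_version": 4, "error_type": None}, group_by="model", metric="latency_ms"))
# {'model-b': 640.0, 'model-a': 900.0}
```

A row-oriented layout would read whole trace records, including large prompt and response fields, to answer the same question. The columnar version never touches columns outside the query, which matters more as payloads grow.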

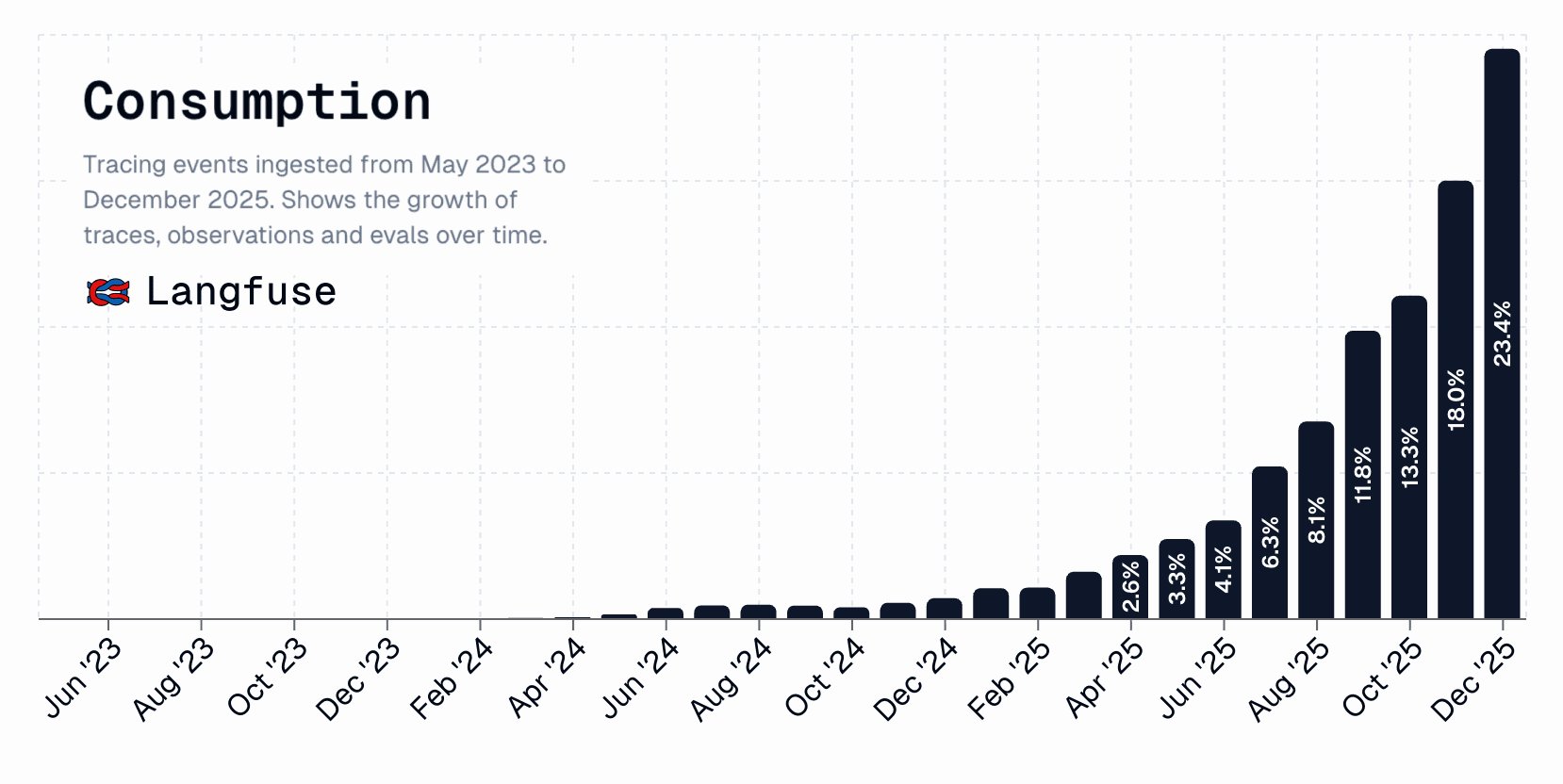

Langfuse consumption metrics interface

ClickHouse's expertise in high-performance analytics directly addresses these challenges. The acquisition means Langfuse can:

- Integrate ClickHouse features like compute-compute separation to reduce noisy-neighbor effects in multi-tenant Langfuse Cloud

- Access performance optimizations at the database level without waiting for public releases

- Leverage ClickHouse's experience running large-scale observability workloads

The LLM Engineering Problem

Langfuse started because its founders kept hitting the same wall while building agents during Y Combinator in early 2023. Demoing LLM applications is straightforward; running them in production is messy.

The problems are fundamental:

- Non-determinism: The same prompt produces different outputs, making debugging iterative rather than linear

- Evaluation gaps: Offline metrics (like BLEU or ROUGE) don't correlate well with production user satisfaction

- Iteration loops: Prompt changes require redeployment, A/B testing, and monitoring—each step adding friction

The initial solution was intentionally simple: tracing primitives that could be added to any LLM application with minimal code changes, plus evaluation tools that worked with production data. It ran on Postgres because shipping fast mattered more than theoretical scale.

That simplicity worked. Teams adopted it faster than expected, and that growth is what forced the v3 migration to ClickHouse to handle real production workloads.

What Gets Better

Performance and reliability: Direct collaboration with ClickHouse's database engineers means Langfuse can push performance boundaries further. For self-hosted deployments, this means better deployment templates and operational guidance.

Enterprise features: ClickHouse has experience with compliance requirements (SOC 2, HIPAA, etc.) and enterprise support models. Langfuse can accelerate these features without reinventing the wheel.

Scale: Large enterprises need to ingest traces from hundreds of LLM applications simultaneously. ClickHouse's infrastructure knowledge helps Langfuse support these deployments reliably.

What Stays the Same

The product philosophy remains unchanged:

- Open source first: The core platform stays OSS

- Self-hosting is first-class: Not an afterthought or enterprise-only feature

- Built for production: Not just for demos or offline experiments

The Broader Pattern

This acquisition reflects a maturation in the LLM tooling ecosystem. The early phase (2022–2024) saw a proliferation of point solutions: prompt managers, evaluation frameworks, tracing tools. The current phase involves consolidation around integrated platforms backed by infrastructure companies.

We've seen similar patterns:

- Vector database companies acquiring embedding services

- Cloud providers launching managed LLM platforms

- Observability companies adding LLM-specific features

The Langfuse-ClickHouse deal stands out because it's infrastructure-native. ClickHouse isn't just providing capital; it's providing the exact technical foundation Langfuse needs to scale.

For Langfuse Users

If you're running Langfuse in production today:

Self-hosted: Your deployment doesn't change. The ClickHouse backend you're already using (if on v3) gets better optimization over time. New deployment templates and performance tuning guides are coming.

Langfuse Cloud: No immediate changes. The same team, same SLAs. Long-term, you'll benefit from ClickHouse's infrastructure improvements.

Open source: The repository (github.com/langfuse/langfuse) continues as before. Contributions and issues follow the same process.

Support: No changes to channels or response times. The team stays intact.

What's Next

The roadmap focuses on closing the loop from production data to model improvements:

- Production monitoring: Moving beyond offline evaluations to real-time quality tracking for live agent systems

- Iteration workflows: Tighter integration between tracing, labeling, and experimentation to reduce cycle time

- Scale: Supporting enterprise deployments with thousands of concurrent LLM applications

- Developer experience: Polishing UI/UX and documentation as complexity grows

The Bottom Line

This is an infrastructure play, not a product pivot. Langfuse keeps doing what it's doing—helping teams debug and improve LLM applications—just with more engineering leverage.

For the LLM engineering ecosystem, it signals that observability platforms need deep infrastructure integration to handle production scale. The winners will be those that can combine domain-specific product insights with performance engineering at the database level.

Langfuse users get a more reliable, faster platform without sacrificing the open-source values that made it attractive in the first place.

