Cloudflare's SRE team built an event-driven observability pipeline that correlates Salt configuration failures with specific code changes, cutting release delays by over 5% and eliminating hours of manual triage work.

Cloudflare's global infrastructure operates at a scale where a single configuration error can cascade into widespread service disruption. Their recent engineering effort to debug SaltStack configuration management reveals a fundamental shift in how large-scale infrastructure operators approach operational complexity.

The "Grain of Sand" Problem

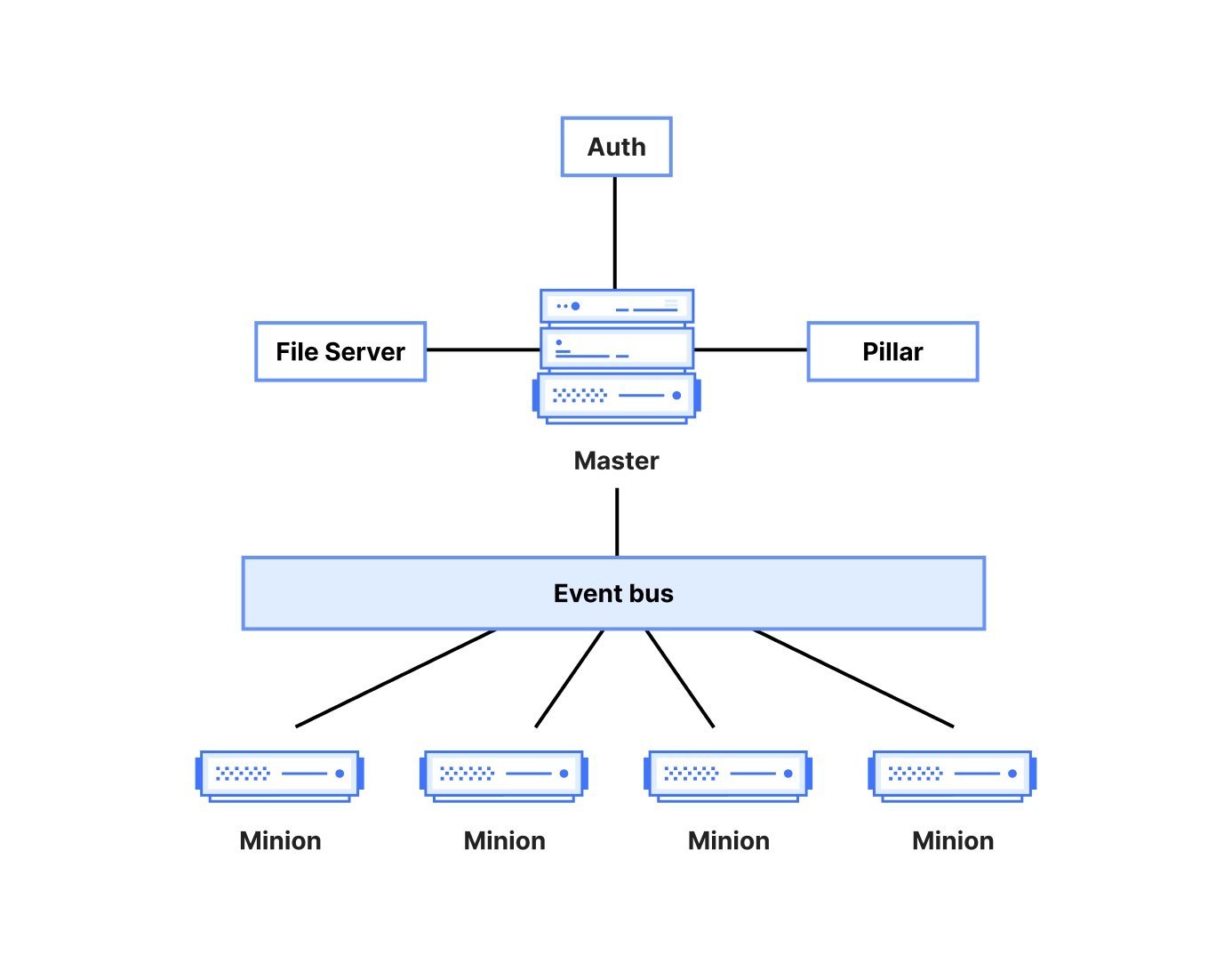

SaltStack (Salt) serves as Cloudflare's configuration management backbone, maintaining desired state across thousands of servers spanning hundreds of data centers. The tool's master/minion architecture with ZeroMQ messaging enables efficient state application, but at Cloudflare's scale, this creates a unique debugging challenge.

When a Salt run fails, the impact extends beyond individual servers. A syntax error in a YAML file or a transient network failure during a "Highstate" run can stall software releases across the entire edge network. The primary issue Cloudflare identified was configuration drift—the gap between intended state and actual system state.

Salt's architecture makes root cause analysis particularly difficult. When a minion (agent) fails to report status to the master, engineers must search through millions of state applications to find the single point of failure. Common failure modes that break this feedback loop include:

- Silent Failures: Minions crashing or hanging during state application, leaving masters waiting indefinitely for responses

- Resource Exhaustion: Heavy pillar data lookups or complex Jinja2 templating overwhelming master CPU/memory, causing dropped jobs

- Dependency Hell: Package state failures buried within thousands of log lines, often caused by unreachable upstream repositories

Manual Debugging at Scale

Before the new system, Salt failures required SRE engineers to manually SSH into candidate minions, chase job IDs across masters, and sift through logs with limited retention. Connecting errors to specific changes or environmental conditions became increasingly tedious as commit frequency grew. The process offered little lasting engineering value while consuming significant engineering time.

Event-Driven Observability Pipeline

Cloudflare's Business Intelligence and SRE teams collaborated to build a new internal framework that provides self-service root cause identification. The solution moves away from centralized log collection to an event-driven data ingestion pipeline (internally dubbed "Jetflow") that correlates Salt events with:

- Git Commits: Pinpointing exactly which configuration repository change triggered the failure

- External Service Failures: Determining if Salt failures stem from dependencies like DNS outages or third-party API issues

- Ad-Hoc Releases: Distinguishing between scheduled global updates and manual developer changes

This correlation engine creates a foundation for automated triage. The system can now automatically flag the specific "grain of sand"—the one line of code or server causing release blockage.

Measurable Impact

The shift from reactive to proactive management delivered concrete results:

- 5% Reduction in Release Delays: Faster error surfacing shortened the time between "code complete" and "running at the edge"

- Reduced Toil: SREs eliminated hours of repetitive triage work, freeing capacity for higher-level architectural improvements

- Improved Auditability: Every configuration change is now traceable through its entire lifecycle, from Git PR to final execution on edge servers

Architectural Trade-offs in Configuration Management

Cloudflare's experience highlights broader patterns in configuration management at scale. While Salt's master/minion push model enables rapid changes, alternative tools present different trade-offs:

Ansible operates agentless via SSH, simplifying deployment but facing sequential execution bottlenecks at scale. Puppet uses a pull-based model for predictable resource consumption but slows urgent changes. Chef offers flexibility through its Ruby DSL but carries a steeper learning curve.

Each tool encounters its own "grain of sand" problem at Cloudflare's scale. The critical lesson is that any system managing thousands of servers requires robust observability, automated failure correlation with code changes, and intelligent triage mechanisms.

Industry Implications

Cloudflare's approach demonstrates that configuration management must evolve beyond simple state enforcement. At internet scale, it becomes a data correlation problem requiring event-driven architectures and automated analysis. This transforms manual detective work into actionable insights, setting a precedent for other large infrastructure providers.

The engineering team's observation that Salt is a strong tool but needs smarter observability at scale reflects a growing industry recognition: operational excellence at scale demands systems that not only enforce state but also provide immediate, contextual feedback when that enforcement fails.

About the Author: Claudio Masolo is a Senior DevOps Engineer at Nearform with extensive experience in large-scale infrastructure management.

Comments

Please log in or register to join the discussion