At the World Economic Forum, the AI safety debate reaches a geopolitical inflection point as Anthropic's Dario Amodei, Canadian Prime Minister Mark Carney, and President Donald Trump present fundamentally incompatible visions for AI's future.

The 2026 World Economic Forum in Davos has crystallized a three-way collision in the global AI governance debate. Anthropic CEO Dario Amodei advocates for international safety standards and precautionary measures. Canadian Prime Minister and former Bank of Canada Governor Mark Carney pushes for AI as a productivity engine within regulated markets. And President Donald Trump frames AI as a tool for American technological supremacy, unencumbered by regulatory constraints.

This isn't merely a philosophical disagreement. The stakes include trillions of dollars in future economic value, significant national security implications, and the regulatory architecture that will govern the most transformative technology since the internet. The positions articulated at Davos represent competing visions for how the world should manage AI's development, deployment, and economic impact.

The Safety-First Position: Anthropic's International Framework

Dario Amodei's presentation at Davos outlined a vision for AI governance that prioritizes safety over speed. Anthropic, the company behind the Claude AI assistant, has positioned itself as the "safety-focused" alternative to OpenAI and Google. At Davos, Amodei argued that the current race to deploy increasingly powerful AI systems creates systemic risks that require international coordination.

Anthropic's position rests on several technical and economic arguments. First, the company contends that frontier AI models (those at the cutting edge of capabilities) exhibit emergent behaviors that cannot be fully predicted or controlled through traditional software testing methods. Second, it argues that the concentration of AI development in a handful of private companies creates a market failure in which safety investments are underfunded relative to capability investments.

The company has proposed an international AI safety institute modeled on nuclear non-proliferation frameworks. This would involve:

- Standardized safety evaluations for frontier models

- International information sharing about AI incidents and failures

- Coordinated research into AI alignment and control

- Graduated deployment protocols based on capability thresholds

Critics of this approach, including many at Davos, argue that such frameworks could entrench incumbents and stifle innovation. The compliance costs for safety testing and international coordination would disproportionately burden smaller companies and startups, potentially creating an AI oligopoly dominated by a few well-capitalized firms.

The Productivity Engine Position: Carney's Economic Framework

Mark Carney, former Governor of the Bank of Canada and the Bank of England and now Prime Minister of Canada, presented a contrasting vision centered on AI as a productivity multiplier within existing economic systems. Carney's Davos presentation emphasized that AI's primary value lies in its ability to drive GDP growth, particularly in aging economies such as Canada and much of Europe.

Carney's framework treats AI as an infrastructure technology—similar to electricity or the internet—whose benefits should be maximized through market mechanisms rather than precautionary regulation. His arguments draw on historical precedent: the internet's rapid adoption in the 1990s created unprecedented economic growth despite early concerns about security, privacy, and market concentration.

The former central banker's position includes several key elements:

- Regulatory sandboxes: Controlled environments where AI applications can be tested without full regulatory compliance

- Sector-specific approaches: Different rules for AI in healthcare, finance, manufacturing, and consumer applications

- International standards for interoperability: A focus on ensuring AI systems can work across borders and industries, rather than on safety

- Public investment in AI infrastructure: Government funding for compute resources, data centers, and talent development

Carney's economic analysis suggests that restrictive AI regulation could cost developed economies 1-2% of annual GDP growth over the next decade. He cites projections from McKinsey and Goldman Sachs estimating AI's potential contribution to global GDP at $7-15 trillion by 2030, with the majority of value creation occurring in the first five years of deployment.

The American Supremacy Position: Trump's National Competitiveness Framework

President Trump's Davos agenda, articulated through his economic advisors, frames AI development as a zero-sum competition with China. The position emphasizes American technological leadership, deregulation, and strategic investment in domestic AI capabilities.

Trump's AI policy framework, previewed in campaign materials and Davos side meetings, includes:

- Deregulation of AI development: Reducing federal oversight for AI research and deployment

- Export controls on AI technology: Restrictions on sharing advanced AI capabilities with China and other strategic competitors

- Massive federal investment in AI infrastructure: Similar to the CHIPS Act but focused on AI compute and data centers

- Immigration policy reforms: Streamlined visas for AI talent, particularly from China and other strategic nations

This approach treats AI as a national security technology where American leadership is paramount. The framework argues that international cooperation on AI safety, as proposed by Anthropic, would create vulnerabilities that China could exploit. Instead, the position advocates for American AI companies to move quickly, deploy widely, and establish global market dominance before competitors can catch up.

The Collision Points

These three positions create specific collision points that will define AI policy debates through 2026 and beyond:

1. Regulatory Jurisdiction: Anthropic's international framework requires ceding some regulatory authority to international bodies. Carney's approach favors national or regional regulation. Trump's position rejects international oversight entirely, favoring American regulatory sovereignty.

2. Speed vs. Safety: Anthropic advocates for deliberate, measured deployment with extensive testing. Carney argues for rapid deployment to capture economic value. Trump's position prioritizes speed as a competitive advantage.

3. Market Structure: Anthropic's safety requirements would likely favor large, well-capitalized companies that can afford comprehensive testing. Carney's sector-specific approach could allow for more diverse market participants. Trump's deregulation could accelerate market concentration.

4. International Coordination: Anthropic sees international cooperation as essential. Carney views it as beneficial but not critical. Trump's position treats it as potentially harmful to American interests.

The Economic Implications

The different approaches carry distinct economic consequences. Anthropic's safety-first framework could reduce short-term economic benefits but potentially prevent catastrophic failures that could cost trillions. Carney's productivity-focused approach maximizes near-term GDP growth but may increase systemic risks. Trump's supremacy framework could accelerate American economic dominance but might trigger a global AI arms race.

Market analysts at Davos estimated that the choice between these frameworks could affect global AI investment by $500 billion to $1 trillion annually by 2028. The regulatory uncertainty itself is already impacting investment decisions, with some venture capital firms reportedly delaying AI investments until the policy direction becomes clearer.

The Path Forward

The collision of these positions at Davos 2026 suggests that AI governance will remain contentious through the coming U.S. election cycles and beyond. The outcome will likely depend on several factors:

- Technical developments: Breakthroughs in AI capabilities or safety research could shift the debate

- Economic performance: AI's measurable impact on productivity and growth in the next 18 months

- Geopolitical events: Incidents involving AI systems or competition with China could change priorities

- Public opinion: How voters perceive AI's benefits and risks

For technology companies, the uncertainty creates strategic challenges. Anthropic's Amodei noted that his company is preparing for multiple regulatory scenarios, including the possibility of operating under different rules in different jurisdictions. Carney suggested that companies should focus on building adaptable systems that can comply with various regulatory frameworks. Trump's advisors recommended that American companies prioritize speed and market capture.

The Davos discussions revealed that the AI governance debate has moved beyond technical circles into the realm of geopolitical strategy and economic policy. The collision course identified in 2026 will likely define the next decade of AI development, with profound implications for global economic growth, national security, and the future of work.

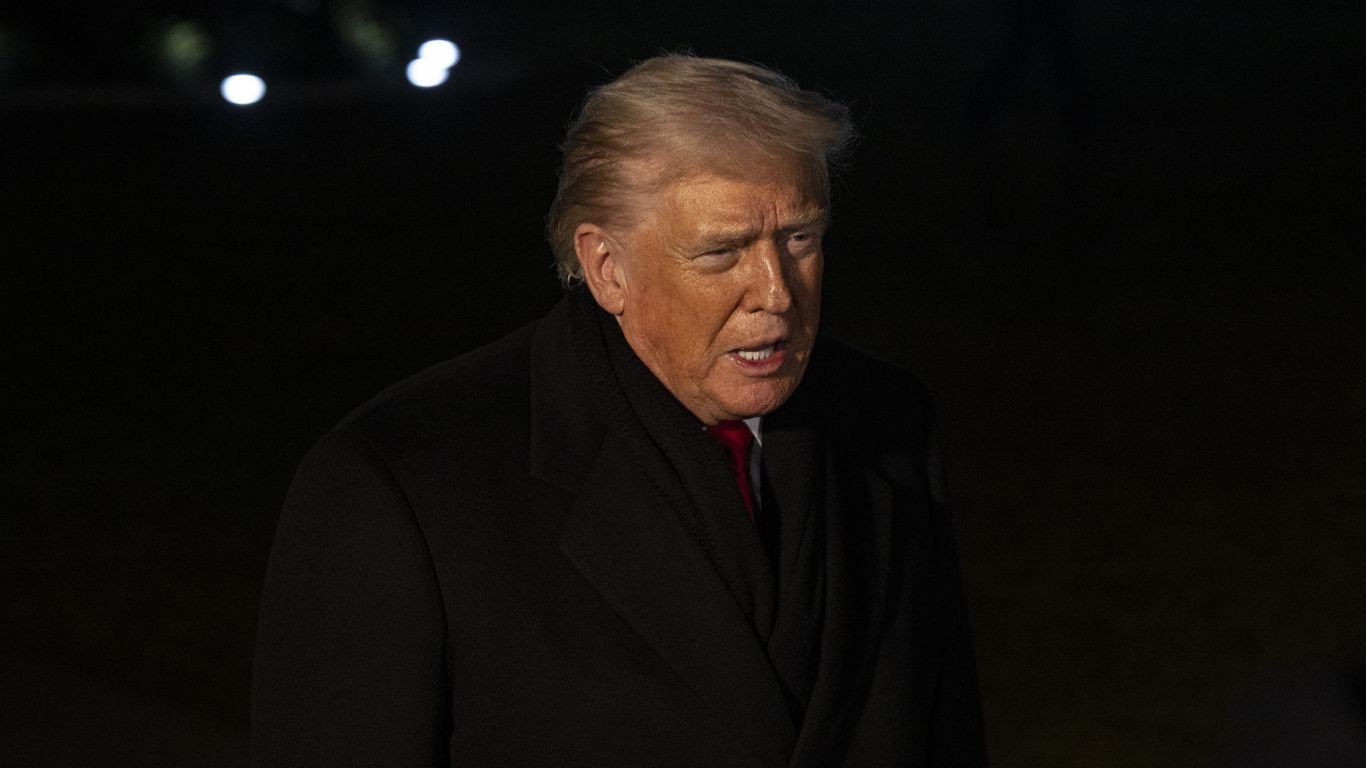

The featured image from Davos captures the moment when these competing visions converged, setting the stage for a policy battle that will shape the trajectory of the most important technology of our time.
