As AI research enters mainstream tech, poorly structured Jupyter notebooks and irreproducible experiments are no longer acceptable. Discover essential strategies for writing research code that's quick to set up, consistently reproducible, and modular enough for community adoption—featuring tools like uv, jsonargparse, and WandB.

For years, AI research code lived in obscurity—Jupyter notebooks sprawled across unversioned directories, experimental setups that only worked on specific laptops, and repositories that deterred collaboration. But as generative AI democratizes research, the stakes for code quality have skyrocketed. Akshit, an AI researcher, argues that writing maintainable, reproducible code is now the second-most critical skill for researchers, just behind research intuition. Here’s why it matters and how to achieve it.

The Three Pillars of Impactful Research Code

- Fast: Minimal setup (ideally zero-config), one-command execution

- Reproducible: Consistent results across environments, backed by pinned dependencies

- Attractive: Modular architecture encouraging extension and reuse

🚀 Writing Fast Code

Setup Acceleration

Ditch Conda and virtualenv headaches. uv, a Rust-based Python package and project manager, collapses setup into a single command:

uv run main.py # Installs dependencies automatically

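No manual requirements.txt juggling or environment activation. uv reads the dependencies declared in pyproject.toml (or in an inline metadata block at the top of the script), then resolves and installs them on the fly. It does not infer them from import statements, so they still have to be declared once.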

Experimentation Velocity

For hyperparameter tuning and multi-run experiments:

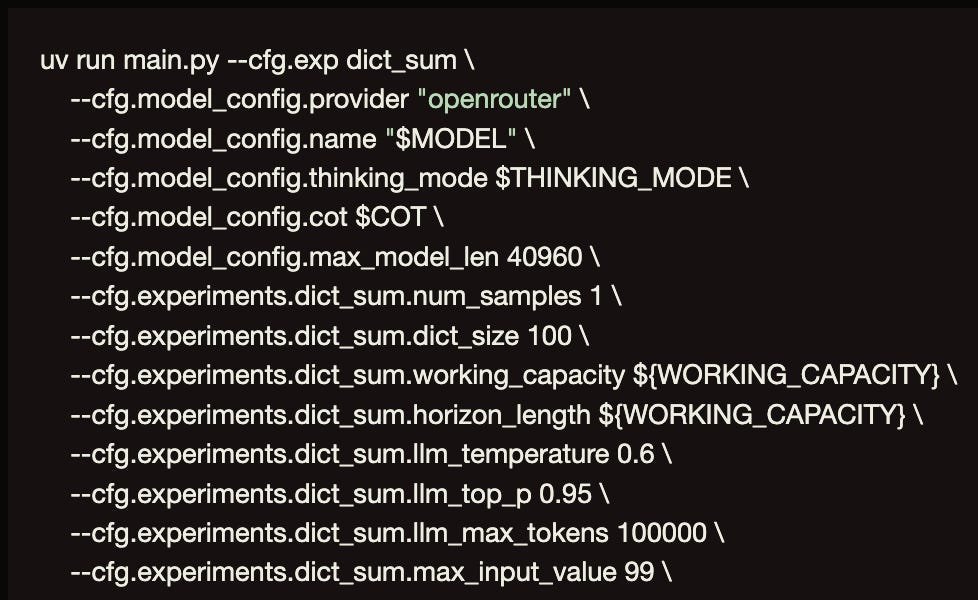

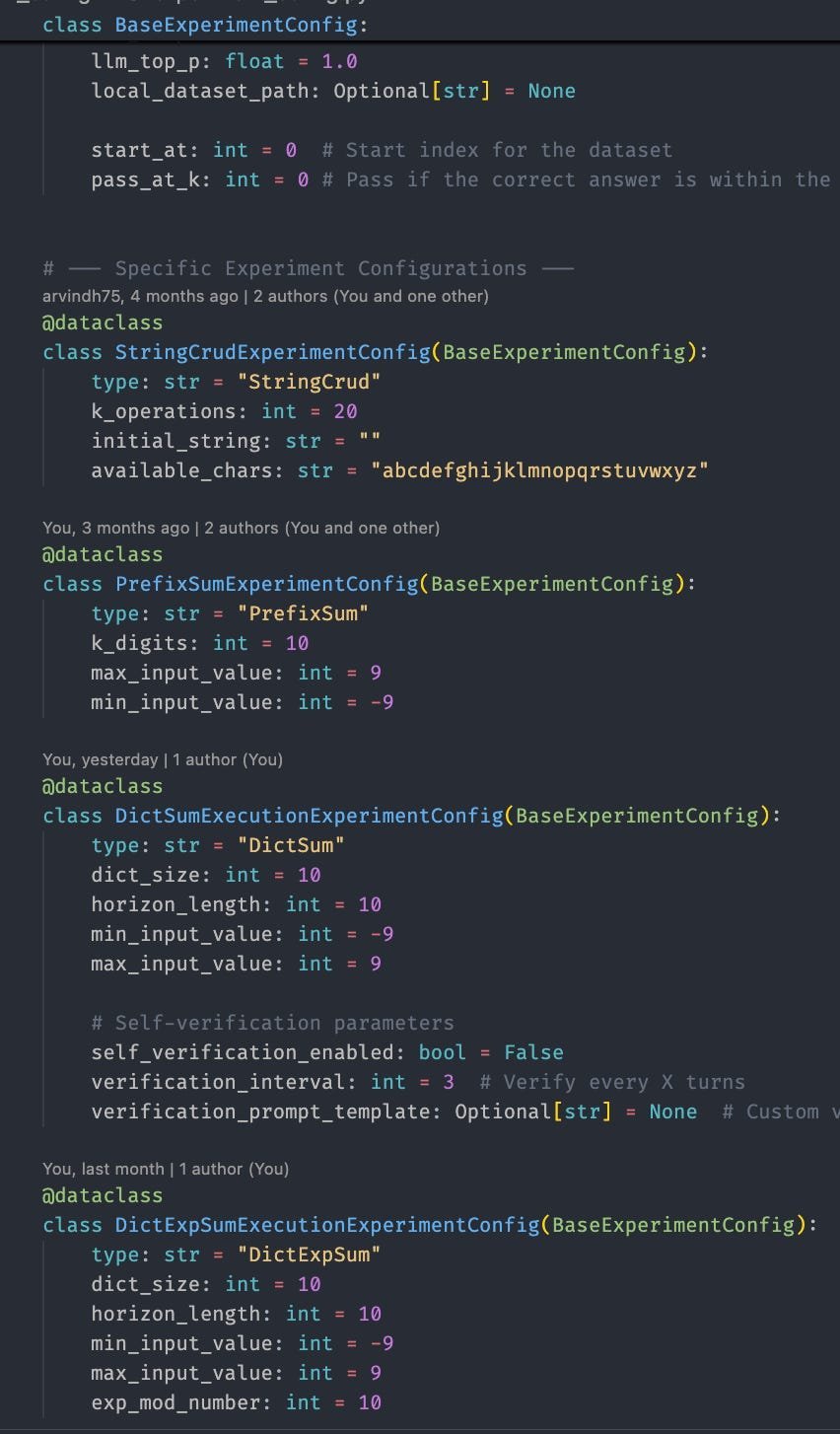

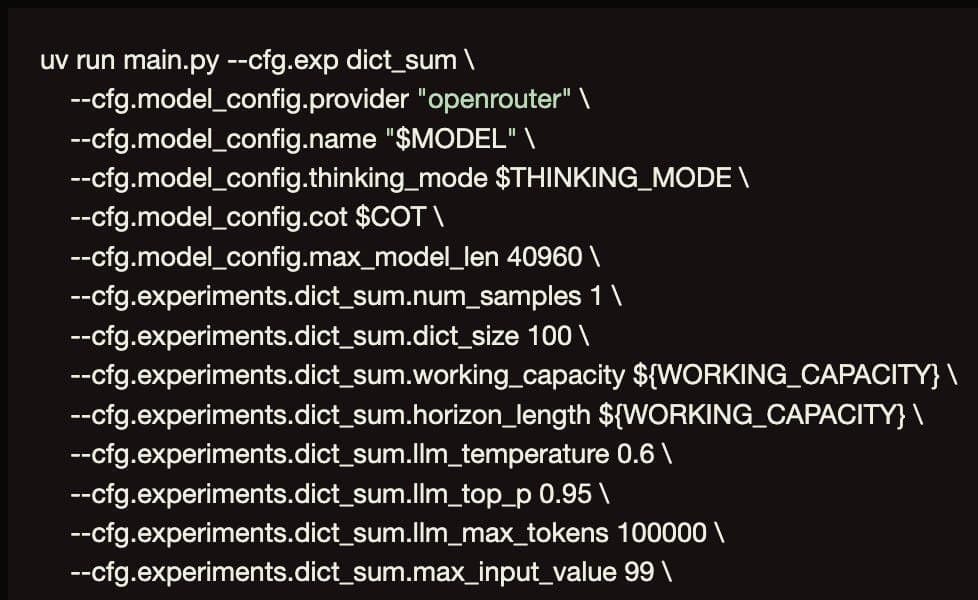

- Use jsonargparse for hierarchical configuration via dataclasses

- Wrap executions in shell script loops for batch processing

Hierarchical configs simplify complex experiments
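As a rough illustration of hierarchical configuration via dataclasses, the sketch below uses only the standard library (`argparse` plus `dataclasses`) as a stand-in. jsonargparse automates this kind of wiring, and its actual API is not reproduced here. The `ModelConfig` and `ExperimentConfig` classes, their fields, and the helper names are all hypothetical.

```python
import argparse
from dataclasses import dataclass, field, fields, is_dataclass


@dataclass
class ModelConfig:
    name: str = "llama3"
    temperature: float = 0.0


@dataclass
class ExperimentConfig:
    strategy: str = "cot"
    seed: int = 0
    model: ModelConfig = field(default_factory=ModelConfig)


def add_dataclass_args(parser, cls, prefix=""):
    """Register one --dotted.flag per leaf field of a (possibly nested) dataclass."""
    for f in fields(cls):
        if is_dataclass(f.type):
            add_dataclass_args(parser, f.type, prefix=f"{prefix}{f.name}.")
        else:
            parser.add_argument(f"--{prefix}{f.name}", type=f.type, default=f.default)


def build(cls, values, prefix=""):
    """Rebuild the nested dataclass from the flat {dotted_name: value} mapping."""
    kwargs = {}
    for f in fields(cls):
        if is_dataclass(f.type):
            kwargs[f.name] = build(f.type, values, prefix=f"{prefix}{f.name}.")
        else:
            kwargs[f.name] = values[f"{prefix}{f.name}"]
    return cls(**kwargs)


def parse_config(argv=None):
    parser = argparse.ArgumentParser()
    add_dataclass_args(parser, ExperimentConfig)
    return build(ExperimentConfig, vars(parser.parse_args(argv)))


# Override two values from the "command line"; everything else keeps its default.
cfg = parse_config(["--model.name", "gpt-4", "--strategy", "scratchpad"])
print(cfg)
```

Keeping defaults in typed dataclasses means every run has a complete, inspectable configuration object, and a sweep only needs to override the fields that change.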

Example shell structure:

for MODEL in "gpt-4" "llama3"; do
  for STRATEGY in "cot" "scratchpad"; do
    uv run main.py --model "$MODEL" --strategy "$STRATEGY"
  done
done
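The same sweep can also be driven from Python, which helps once the grid grows beyond two loops. This is a minimal sketch: the model and strategy names come from the loop above, while `build_commands`, `run_all`, and the `dry_run` switch are hypothetical helpers. With `dry_run=True` (the default here) it only prints the commands it would launch.

```python
import itertools
import shlex
import subprocess

MODELS = ["gpt-4", "llama3"]
STRATEGIES = ["cot", "scratchpad"]


def build_commands(models, strategies):
    """One `uv run` command per (model, strategy) pair, as argument lists."""
    return [
        ["uv", "run", "main.py", "--model", model, "--strategy", strategy]
        for model, strategy in itertools.product(models, strategies)
    ]


def run_all(commands, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            # check=True stops the sweep at the first failing run.
            subprocess.run(cmd, check=True)


run_all(build_commands(MODELS, STRATEGIES))
```

Building the command list separately from executing it makes the sweep easy to inspect, log, or hand to a job scheduler.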

🔁 Ensuring Reproducibility

- uv's lockfile (uv.lock) pins exact dependency versions, so every machine installs the same set

- Rigorous pre-release testing with AI-assisted tools (Copilot/Cursor) to eliminate "works on my machine" bugs

- Containerization as a fallback for GPU/CUDA edge cases

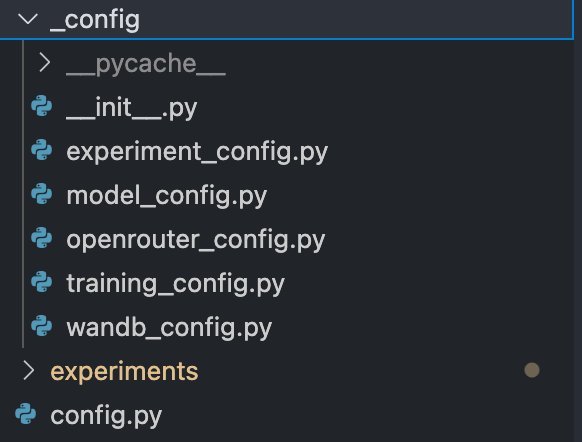
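One inexpensive pre-release check is a smoke test that runs the same experiment twice and asserts identical results. The sketch below assumes all randomness flows from an explicit seed; `run_experiment` is a hypothetical stand-in for a real training or evaluation entry point, not something from the original post.

```python
import random


def run_experiment(seed: int, n_samples: int = 5) -> list[float]:
    """Hypothetical experiment: every random draw comes from a seeded local RNG."""
    rng = random.Random(seed)  # local generator, no hidden global state
    return [round(rng.random(), 6) for _ in range(n_samples)]


def test_same_seed_same_result():
    assert run_experiment(seed=0) == run_experiment(seed=0)


def test_different_seed_changes_result():
    assert run_experiment(seed=0) != run_experiment(seed=1)


test_same_seed_same_result()
test_different_seed_changes_result()
print("reproducibility checks passed")
```

A check like this will not catch GPU or CUDA nondeterminism, which is where the container fallback comes in, but it does flag unseeded randomness before a release.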

💡 Crafting Attractive Code

Structural Principles

- Single-entry orchestration (main.py or train.py)

- Modular inheritance: Base classes for models/evaluations with child-specific implementations

class BaseModel:
    """Shared interface that every model implements."""

    def train(self): ...

    def eval(self): ...


class CustomLLM(BaseModel):
    def train(self):
        # Custom training logic
        ...

Inheritance simplifies extensibility
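The two structural principles combine naturally: a single entry point that only talks to the base-class interface. Here is a minimal sketch, assuming an abstract base class and a small name-to-class registry. `ConstantBaseline`, `MODEL_REGISTRY`, and the returned metric are hypothetical placeholders.

```python
from abc import ABC, abstractmethod


class BaseModel(ABC):
    """Interface the orchestrator relies on; subclasses fill in the logic."""

    @abstractmethod
    def train(self) -> None: ...

    @abstractmethod
    def eval(self) -> dict[str, float]: ...


class ConstantBaseline(BaseModel):
    """Toy model used only to show the shape of a subclass."""

    def __init__(self) -> None:
        self.trained = False

    def train(self) -> None:
        self.trained = True

    def eval(self) -> dict[str, float]:
        return {"accuracy": 0.5 if self.trained else 0.0}


# Adding a new model means adding one class and one registry entry.
MODEL_REGISTRY: dict[str, type[BaseModel]] = {"constant": ConstantBaseline}


def main(model_name: str) -> dict[str, float]:
    """Single entry point: look up the model, train it, evaluate it."""
    model = MODEL_REGISTRY[model_name]()
    model.train()
    return model.eval()


print(main("constant"))
```

Because `main` never references a concrete class directly, someone extending the repository can add a model without touching the orchestration code.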

Operational Excellence

- Logging > print(): Use Python’s logging module for persistent, searchable outputs

- WandB integration: Real-time experiment tracking across teams

- AI coding agents: Automate boilerplate (file structure, docstrings) to focus on high-value logic
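For the logging point, a minimal sketch with the standard library's `logging` module might look like the following. It writes each record both to the console and to a file so that runs stay searchable afterwards. The logger name, the format string, and the temporary log location are illustrative choices, not something the original post prescribes.

```python
import logging
import tempfile
from pathlib import Path


def make_logger(log_path: Path) -> logging.Logger:
    """Return a logger that writes to both the console and log_path."""
    logger = logging.getLogger("experiment")
    logger.setLevel(logging.INFO)
    logger.handlers.clear()  # avoid duplicate output if called more than once
    formatter = logging.Formatter("%(asctime)s %(levelname)s %(name)s: %(message)s")
    for handler in (logging.StreamHandler(), logging.FileHandler(log_path)):
        handler.setFormatter(formatter)
        logger.addHandler(handler)
    return logger


# A real project would more likely log into its run or output directory.
log_path = Path(tempfile.mkdtemp()) / "run.log"
logger = make_logger(log_path)
logger.info("epoch=%d loss=%.4f", 1, 0.4213)
logger.warning("validation set is small: %d examples", 128)
```

Unlike `print()`, each line carries a timestamp and severity level, and the file persists after the terminal session ends.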

Why This Matters

Reproducibility isn’t academic vanity—it’s what transforms papers into platforms. When Stable Diffusion released clean, modular code, it spawned thousands of derivatives. Your research’s impact hinges on others building atop your work. As Akshit notes:

"The lasting impact of any paper isn’t just its findings, but giving people the ability to reproduce and extend them."

Adopt these practices, and your code won’t just run—it’ll catalyze progress.

Source: Akshit's Substack
