Dropbox has unveiled its seventh-generation custom hardware platform, comprising Crush for compute, Dexter for databases, and Sonic for storage, plus two new GPU tiers, Gumby and Godzilla. The platform is built to meet exabyte-scale storage demands and to power AI features. Getting there meant doubling rack power, reworking thermal management, and collaborating closely with suppliers to overcome vibration and heat challenges. The architecture targets higher performance per watt and smoother scaling across Dropbox’s hyperscale fleet.

Fourteen years ago, Dropbox began with a handful of servers. Today, it operates one of the world’s largest custom storage systems, managing exabytes of data across tens of thousands of servers. That evolution, including the pivotal 2015 'Magic Pocket' migration to on-premises infrastructure, has been driven by treating hardware as a strategic asset. The new seventh-generation platform continues that approach, showing how co-designing hardware and software can unlock large efficiency gains for modern workloads, including AI-driven products such as Dropbox Dash.

The Backbone of Scale: From Petabytes to Exabytes

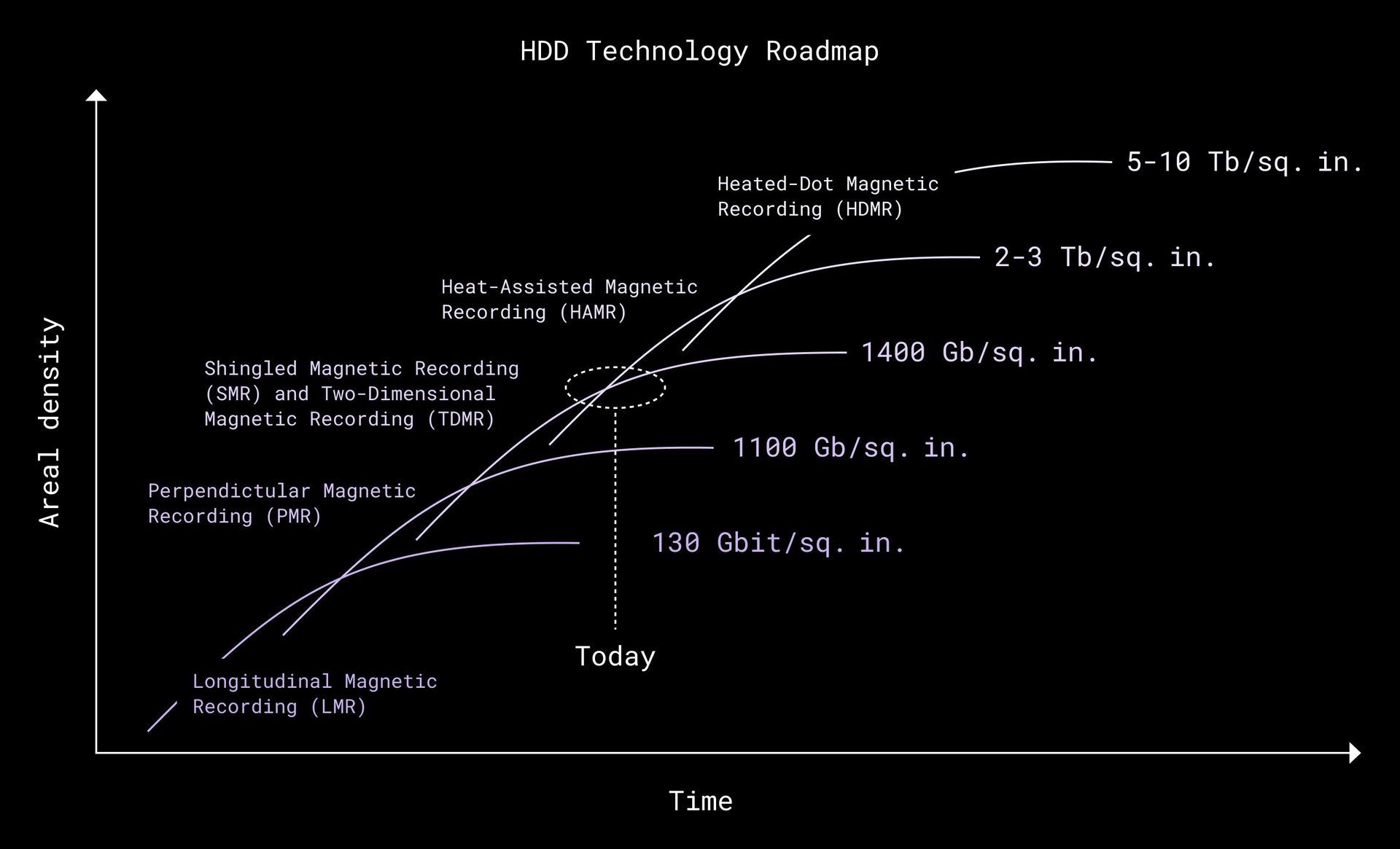

Dropbox stored 40PB in 2012, grew to 600PB by 2016, and now stores exabytes. Growth at that pace forced a rethink of the hardware stack. The shift to custom hardware, including shingled magnetic recording (SMR) drives for higher density, gave Dropbox tighter control over cost and performance. But as AI workloads grew rapidly, Dropbox ran into new bottlenecks: thermal limits, power constraints, and vibration-induced latency. The seventh-generation platform (Crush for compute, Dexter for databases, and Sonic for storage) emerged from three core design principles:

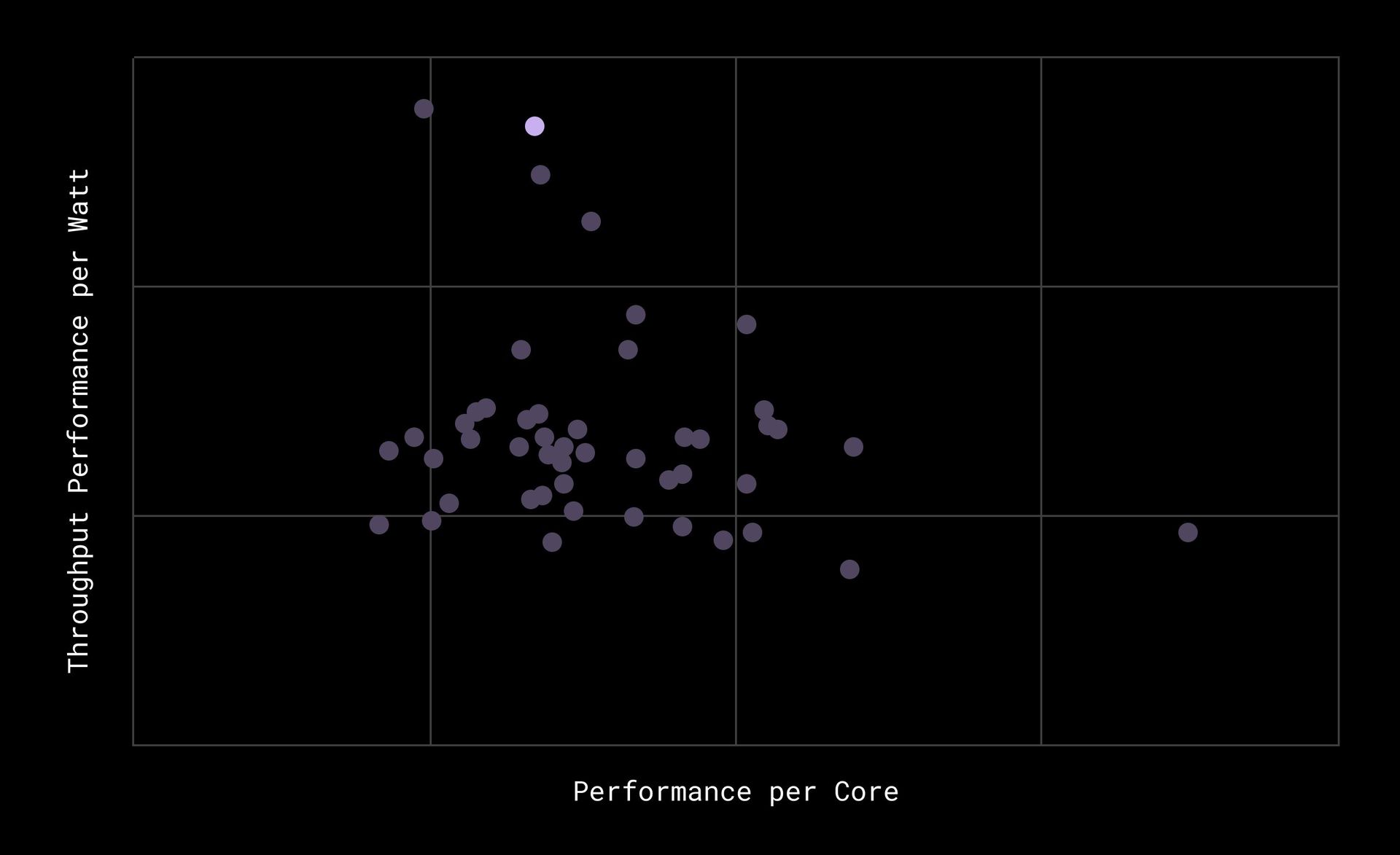

- Embracing Emerging Tech: With CPUs gaining cores and networks hitting 400G, Dropbox prioritized performance-per-watt. As one engineer noted, 'We didn’t max out specs blindly; we focused on what moves the needle for real-world services.'

- Supplier Partnerships: Collaborating early with vendors like Western Digital enabled access to high-density drives (e.g., 32TB SMR) and custom firmware to tackle acoustic and thermal issues.

- Software Co-Design: Involving software teams upfront ensured hardware supported specific needs, like GPU acceleration for AI inference in Dash.
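The storage figures quoted at the start of this section (40PB in 2012, 600PB by 2016) imply a compound annual growth rate that is easy to check. This is a back-of-the-envelope sketch that assumes smooth compound growth between the two data points; actual year-to-year growth was almost certainly uneven, and the `cagr` helper is a hypothetical name for illustration.

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate between two values `years` apart."""
    if start <= 0 or years <= 0:
        raise ValueError("start and years must be positive")
    return (end / start) ** (1.0 / years) - 1.0


# Figures quoted in the article: 40 PB in 2012, 600 PB in 2016.
rate = cagr(40.0, 600.0, 2016 - 2012)
print(f"Implied growth: {rate:.1%} per year")  # roughly doubling every year
```

A 15x increase over four years works out to just under 100% per year, which is why capacity planning rather than incremental upgrades drove the move to custom hardware.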

Crushing Compute and Database Demands

At the heart of the compute upgrade is the Crush platform, which moves from AMD’s 48-core EPYC 7642 to the 84-core EPYC 9634 'Genoa' CPU. The switch delivered a 40% performance boost while keeping a dense 1U form factor. After benchmarking more than 100 chips, Dropbox selected for high throughput and per-core efficiency using SPECint rate metrics.
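A selection process like the one described, screening for per-core efficiency and then ranking by throughput, can be sketched as a small shortlist function. This is a hypothetical illustration: the article does not describe Dropbox’s scoring formula, the candidate names and numbers below are placeholders rather than real benchmark results, and ranking by throughput per watt is an assumption based on the performance-per-watt priority mentioned earlier.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str          # hypothetical label, not a real part number
    rate_score: float  # whole-socket throughput score (e.g. a SPECint rate result)
    cores: int
    watts: float       # socket power budget, e.g. TDP

    @property
    def per_core(self) -> float:
        return self.rate_score / self.cores

    @property
    def per_watt(self) -> float:
        return self.rate_score / self.watts


def shortlist(cands: list[Candidate], min_per_core: float, top_n: int = 3) -> list[Candidate]:
    """Drop parts below a per-core floor, then rank the rest by throughput per watt."""
    eligible = [c for c in cands if c.per_core >= min_per_core]
    return sorted(eligible, key=lambda c: c.per_watt, reverse=True)[:top_n]


# Illustrative placeholder numbers only; these are NOT measured results.
candidates = [
    Candidate("cpu_a", rate_score=600, cores=96, watts=360),
    Candidate("cpu_b", rate_score=520, cores=64, watts=280),
    Candidate("cpu_c", rate_score=450, cores=48, watts=240),
    Candidate("cpu_d", rate_score=300, cores=64, watts=200),
]

for c in shortlist(candidates, min_per_core=6.0):
    print(f"{c.name}: {c.per_core:.2f}/core, {c.per_watt:.2f}/W")
```

The per-core floor keeps latency-sensitive services from landing on many slow cores, while the per-watt ranking favors parts that fit more work into a fixed rack power budget.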

For databases, Dexter moved to a single-socket design, cutting replication lag 3.57-fold. 'By consolidating compute and database platforms, we simplified our stack and eliminated scaling bottlenecks,' explains the team. Dropping the second socket also removed inter-socket delays and boosted instructions per cycle (IPC) by 30%.
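To put the Dexter numbers in more familiar terms: a 3.57-fold reduction leaves about 28% of the original replication lag, and a 30% IPC gain becomes a 30% per-core throughput gain only if clock speed is unchanged, since instructions per second scale with IPC times frequency. The helper names are hypothetical, and the `clock_ratio` parameter is an assumption because the article gives no clock speeds.

```python
def fold_to_percent_reduction(fold: float) -> float:
    """An N-fold reduction leaves 1/N of the original value, i.e. a (1 - 1/N) cut."""
    if fold <= 0:
        raise ValueError("fold must be positive")
    return 1.0 - 1.0 / fold


def relative_throughput(ipc_gain: float, clock_ratio: float = 1.0) -> float:
    """Per-core instructions per second scale with IPC x clock frequency."""
    return (1.0 + ipc_gain) * clock_ratio


print(f"3.57x lower replication lag = {fold_to_percent_reduction(3.57):.0%} reduction")
print(f"+30% IPC at equal clock = {relative_throughput(0.30):.2f}x per-core throughput")
```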

Storage and GPUs: Density Meets Precision

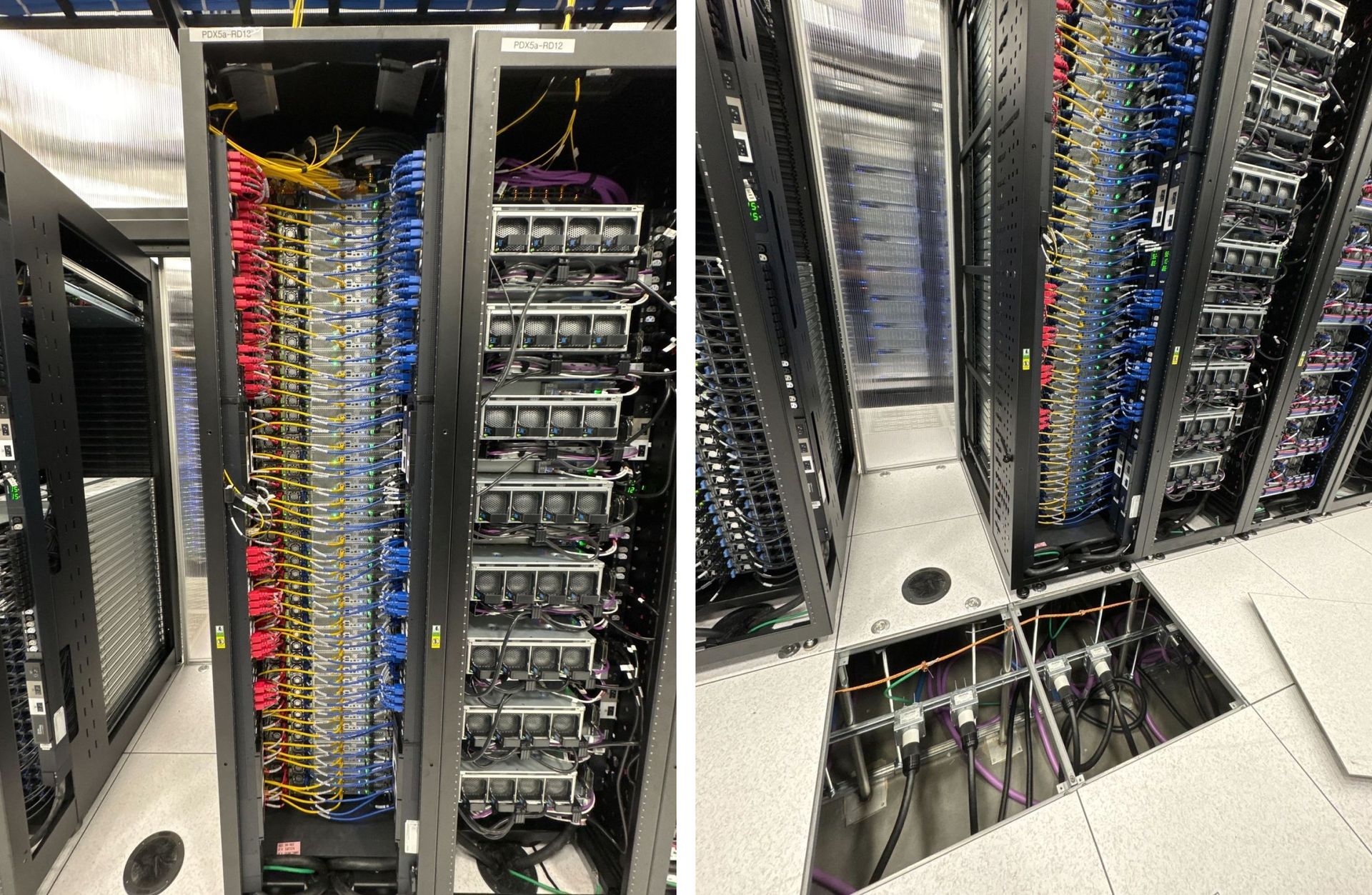

Storage saw the biggest leaps with Sonic, where individual drive capacities now exceed 30TB. But higher density amplified vibration risks, which are critical for SMR drives because their heads must hold position with nanometer-level precision that is easily disrupted. Dropbox’s solution was a co-developed chassis with improved vibration damping and airflow, which allowed it to adopt Western Digital’s Ultrastar HC690 drives.

Meanwhile, AI workloads demanded dedicated acceleration. Enter the Gumby and Godzilla GPU tiers, designed for Dash workloads such as video processing, large language models (LLMs), and real-time search. 'GPUs provide the parallelism and memory bandwidth CPUs can’t match economically,' the team emphasizes. Godzilla, with its eight-GPU configuration, handles intensive tasks like generative AI, while Gumby offers a balanced tier for broader deployment.

Conquering Thermal and Power Frontiers

Power emerged as the bottleneck common to every platform. With the new CPUs and GPUs, projected rack power threatened to exceed the 15kW-per-rack limit. Dropbox’s fix? Four power distribution units (PDUs) per rack instead of two, effectively doubling available power without overhauling the facility infrastructure.
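A minimal sketch of the power arithmetic, assuming the 15kW limit is split evenly across the original two PDUs and that each added PDU brings the same capacity. The per-PDU figure and the server power draws are illustrative assumptions, and the model ignores feed redundancy and electrical derating, both of which reduce usable capacity in practice.

```python
PER_PDU_KW = 7.5  # ASSUMPTION: a 15 kW rack limit split evenly across two PDUs


def rack_budget_kw(pdus: int, per_pdu_kw: float = PER_PDU_KW) -> float:
    """Total rack power budget if every PDU contributes the same capacity."""
    return pdus * per_pdu_kw


def fits(server_watts: list[float], pdus: int) -> bool:
    """True if the summed server draw stays within the rack budget."""
    return sum(server_watts) / 1000.0 <= rack_budget_kw(pdus)


# Hypothetical rack: 4 GPU servers at 3 kW each plus 10 CPU servers at 0.6 kW each (18 kW).
rack = [3000.0] * 4 + [600.0] * 10

for pdus in (2, 4):
    print(f"{pdus} PDUs: budget {rack_budget_kw(pdus):.1f} kW, fits={fits(rack, pdus)}")
```

Under these assumptions the 18kW rack fails on two PDUs and fits comfortably on four, which is the kind of headroom the quad-PDU layout is meant to provide.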

Thermal management was equally critical. Full-load testing drove optimizations in heatsinks, fan curves, and airflow, bringing drive temperatures down to around 40°C, the target zone for drive longevity. The result: a 10% drop in power per petabyte, in line with Dropbox’s sustainability goals.
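A fan curve maps a measured temperature (for example, a drive or inlet sensor) to a fan speed. The sketch below uses piecewise-linear interpolation, a common way to express such curves; the breakpoints are hypothetical, chosen only to show a curve that stays gentle below the 40°C target and ramps steeply above it. It is not Dropbox’s actual tuning.

```python
from bisect import bisect_right

# Hypothetical fan curve: (temperature in °C, fan duty in %). Illustrative only.
FAN_CURVE = [(25.0, 20.0), (35.0, 35.0), (40.0, 55.0), (45.0, 80.0), (50.0, 100.0)]


def fan_duty(temp_c: float, curve: list[tuple[float, float]] = FAN_CURVE) -> float:
    """Linearly interpolate fan duty between breakpoints, clamped at both ends."""
    temps = [t for t, _ in curve]
    if temp_c <= temps[0]:
        return curve[0][1]
    if temp_c >= temps[-1]:
        return curve[-1][1]
    i = bisect_right(temps, temp_c)  # curve[i-1] temp <= temp_c < curve[i] temp
    (t0, d0), (t1, d1) = curve[i - 1], curve[i]
    return d0 + (d1 - d0) * (temp_c - t0) / (t1 - t0)


for t in (30.0, 40.0, 42.5, 55.0):
    print(f"{t:.1f} °C -> {fan_duty(t):.1f}% duty")
```

Because fan power rises roughly with the cube of fan speed (the fan affinity laws), keeping the curve shallow near the target temperature is one way such tuning can save power; the source does not say whether Dropbox’s curves take this shape.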

Lessons for the Next Wave

This generation is more than an upgrade; it’s a blueprint for scaling hyperscale infrastructure quickly. Supplier collaborations granted early access to technology such as 400G networking, while software-hardware co-design ensured that platforms like Godzilla were AI-ready at launch. Looking ahead, Dropbox is eyeing heat-assisted magnetic recording (HAMR) for even greater densities and liquid cooling as power demands climb. For engineers, the takeaway is clear: infrastructure is no longer a support function but an engine of innovation. As Dropbox scales, its hardware-first ethos shows that in the age of AI, every watt and every vibration matters.

Source: Adapted from Dropbox Tech Blog's Seventh-Generation Server Hardware.
