Intel's newly confirmed hybrid AI processor integrates x86 CPU cores, dedicated AI acceleration IP, and programmable logic to target latency-sensitive on-prem workloads neglected by GPU-centric competitors.

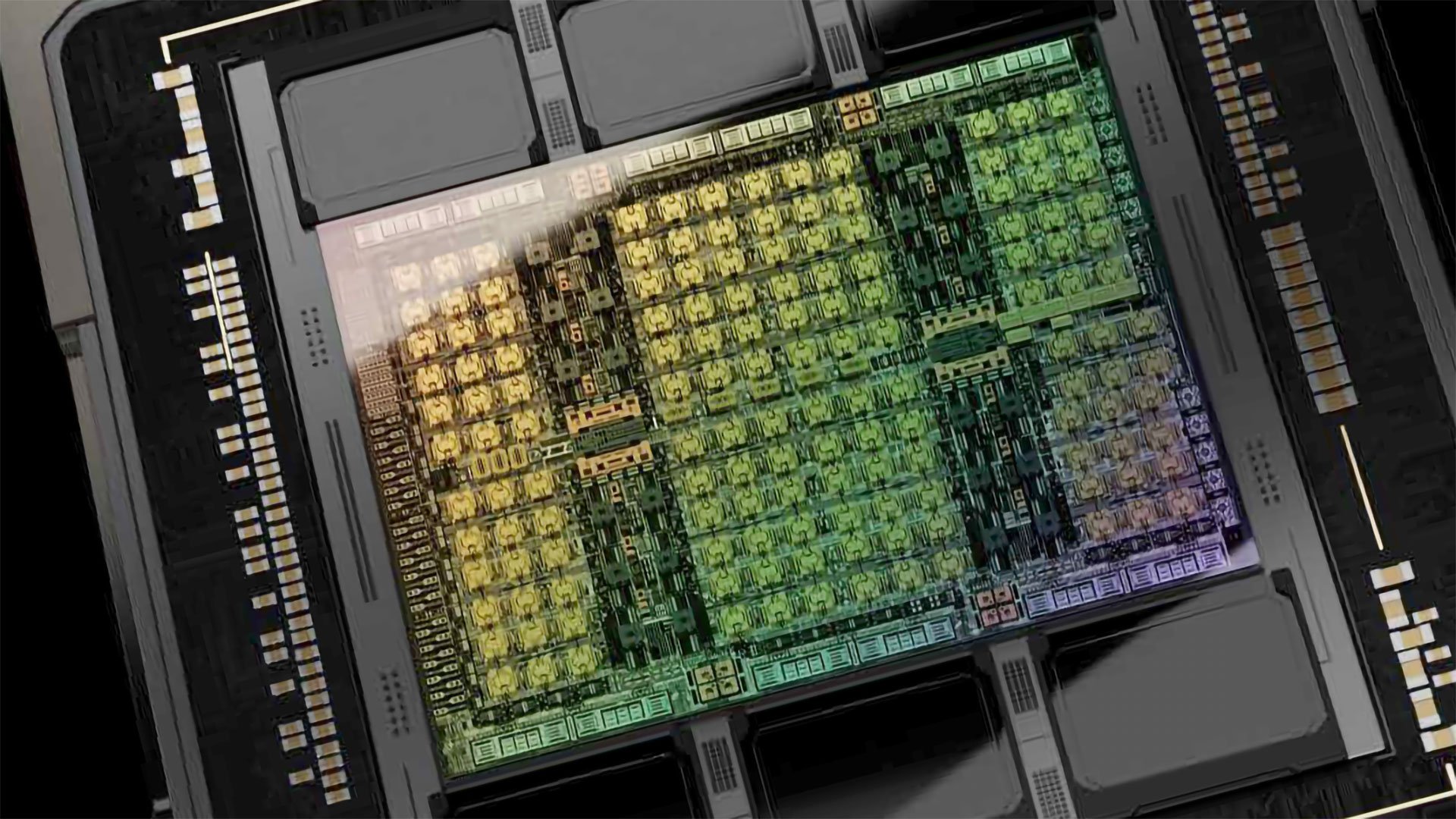

Intel has confirmed development of a hybrid AI processor architecture combining x86 CPU cores, fixed-function acceleration IP, and programmable logic - a strategic move to address inference workloads underserved by Nvidia and AMD's GPU-focused approaches. The design targets emerging use cases including reasoning models, agentic AI, and physical AI systems requiring tight hardware integration.

Architectural Breakdown

The hybrid processor features three key components:

- x86 CPU Cores: Likely based on Intel's current client and server core designs, such as those used in Lunar Lake and Xeon 6

- Fixed-Function Accelerator: Potentially derived from Xe GPU IP or dedicated AI matrix engines

- Programmable Logic: Likely eFPGA technology from QuickLogic or Altera IP for workload flexibility

Industry benchmarks of similar architectures suggest this configuration can deliver 3-5x lower latency for small-batch inference than discrete GPU solutions. The integrated design avoids the PCIe bottleneck (roughly 64GB/s per direction on a PCIe 5.0 x16 link) by using on-package interconnects capable of 200GB/s or more.
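To put the quoted bandwidth figures in perspective, the sketch below computes the idealized time to move a single payload across each link. The 64GB/s and 200GB/s values come from the text; the 16MB payload and the helper name `transfer_time_us` are hypothetical, and real links add protocol and setup overhead that this ignores.

```python
# Idealized transfer-time comparison using the bandwidth figures quoted above.
# Assumption: pure bandwidth-limited transfer, no protocol or setup overhead.

PCIE_GBPS = 64.0         # quoted PCIe bandwidth, GB/s
ON_PACKAGE_GBPS = 200.0  # quoted on-package interconnect bandwidth, GB/s


def transfer_time_us(payload_mb: float, bandwidth_gbps: float) -> float:
    """Return the time in microseconds to move payload_mb (decimal MB) at bandwidth_gbps GB/s."""
    seconds = (payload_mb / 1000.0) / bandwidth_gbps  # convert MB to GB, then divide by GB/s
    return seconds * 1e6


if __name__ == "__main__":
    payload_mb = 16.0  # hypothetical per-request payload (e.g. activations)
    pcie = transfer_time_us(payload_mb, PCIE_GBPS)
    on_pkg = transfer_time_us(payload_mb, ON_PACKAGE_GBPS)
    print(f"PCIe: {pcie:.1f} us, on-package: {on_pkg:.1f} us, ratio: {pcie / on_pkg:.2f}x")
```

The bandwidth ratio alone (200/64, about 3.1x) bounds the gain on the data-movement portion of a request; how much of that shows up end to end depends on how much of each request is spent moving data rather than computing.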

Market Positioning

Intel's hybrid processor specifically targets:

- On-premises deployments: 58% of enterprises maintain hybrid cloud infrastructure (IDC 2024)

- Latency-sensitive workloads: Fraud detection (sub-5ms SLA), recommendation engines

- Agentic AI systems: Requiring frequent synchronization between CPU and accelerator

This contrasts with Nvidia's H100 (up to 700W TDP in its SXM form) and AMD's MI300X (750W), which prioritize throughput over responsiveness. Intel's solution targets 75-150W power envelopes suitable for air-cooled servers.

Technical Tradeoffs

While GPUs like Intel's own Crescent Island (Xe3P, 160GB LPDDR5X) excel at bulk inference, hybrid architectures better handle:

- Irregular memory access patterns

- Sub-10ms latency requirements

- Frequent model switching

Programmable logic allows runtime reconfiguration for new operators - critical given the 3.5x annual increase in novel AI model architectures (Stanford AI Index 2024).
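The routing idea behind a CPU + fixed-function + programmable-logic design can be sketched as a simple dispatcher: operators the hard accelerator supports go there, operators with an available logic image go to the programmable fabric (reprogramming only when a different image is needed), and everything else falls back to the CPU. This is a minimal illustration under stated assumptions; `HybridDispatcher`, the operator names, and the bitstream file names are all hypothetical and do not reflect any Intel or oneAPI interface.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class HybridDispatcher:
    """Hypothetical router for a CPU + fixed-function + programmable-logic device.

    Not an Intel API: names and routing policy are illustrative only.
    """
    fixed_function_ops: set[str]          # ops the hard accelerator implements natively
    fpga_bitstreams: dict[str, str]       # op -> logic image that implements it
    loaded_bitstream: str | None = None   # image currently programmed into the fabric
    log: list[str] = field(default_factory=list)

    def dispatch(self, op: str) -> str:
        """Pick a target for op, reprogramming the fabric only when needed."""
        if op in self.fixed_function_ops:
            target = "accelerator"
        elif op in self.fpga_bitstreams:
            bitstream = self.fpga_bitstreams[op]
            if self.loaded_bitstream != bitstream:
                # Reconfiguration is costly, so skip it if the right image is already loaded.
                self.log.append(f"reconfigure -> {bitstream}")
                self.loaded_bitstream = bitstream
            target = "fpga"
        else:
            target = "cpu"  # general-purpose fallback for unsupported ops
        self.log.append(f"{op} -> {target}")
        return target


if __name__ == "__main__":
    d = HybridDispatcher(
        fixed_function_ops={"matmul", "softmax"},
        fpga_bitstreams={"sparse_attention": "sparse_attn_v1.bit"},  # hypothetical new operator
    )
    for op in ["matmul", "sparse_attention", "sparse_attention", "custom_tokenizer"]:
        d.dispatch(op)
    print("\n".join(d.log))
```

Caching the loaded image matters because reconfiguration is far slower than a dispatch decision; a real runtime would also weigh reconfiguration cost against simply running the operator on the CPU.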

Manufacturing Outlook

Industry sources indicate:

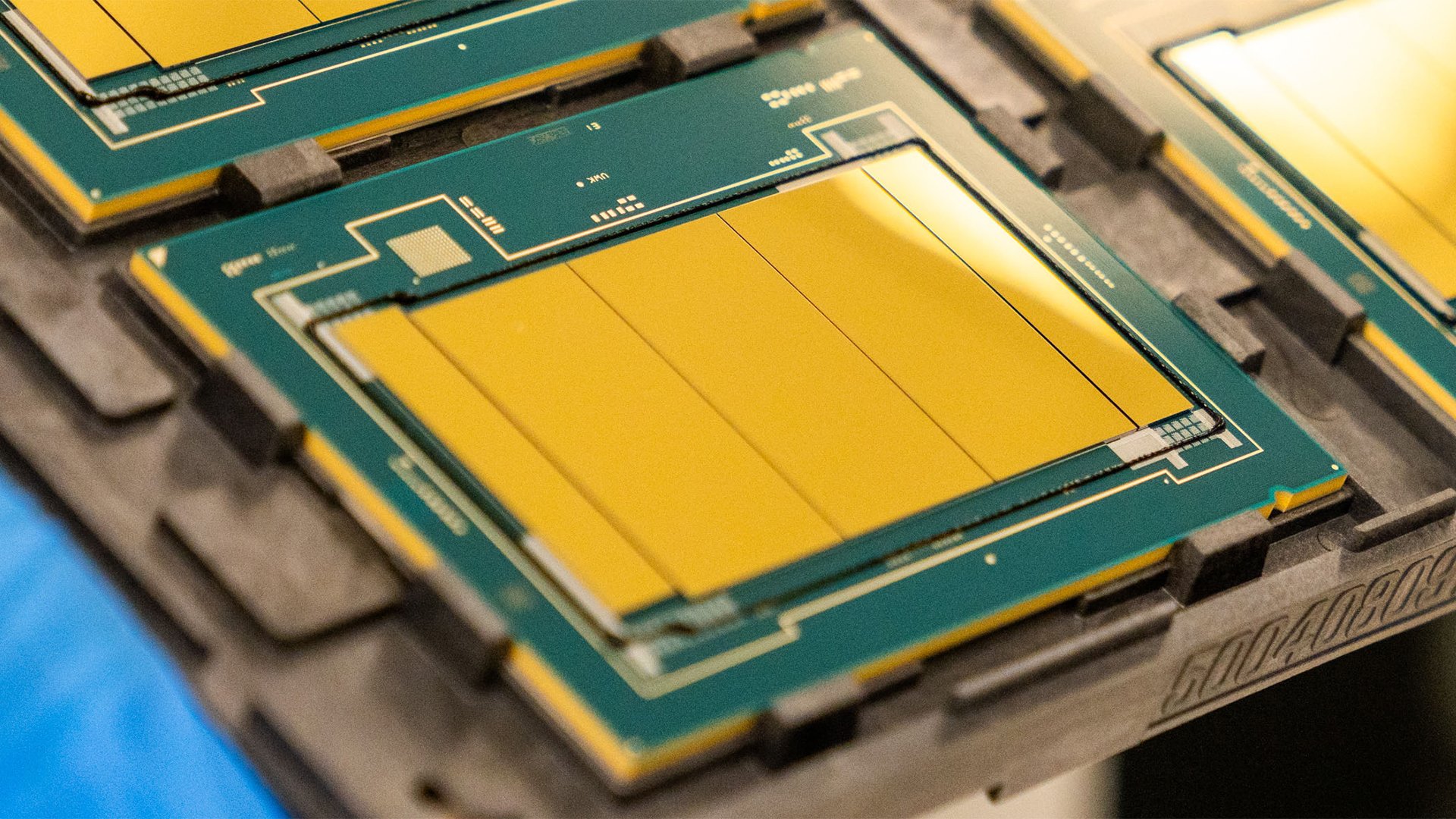

- Initial production on Intel 3 node

- 2025 volume ramp on 18A process

- Chiplet design with 3D Foveros packaging

This positions the hybrid processor between two products on Intel's GPU roadmap:

- Crescent Island: customer sampling expected in H2 2026, inference-optimized

- Jaguar Shores: 2027, HBM4-equipped trainer

Competitive Landscape

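Nvidia's Grace Hopper pairs an Arm-based Grace CPU with a Hopper GPU, and AMD's Instinct MI300A integrates x86 Zen 4 cores with CDNA 3 GPU chiplets, but neither adds programmable logic to the package. Intel's approach loosely parallels Amazon's pairing of Arm-based Graviton CPUs with separate Trainium accelerators, but retains x86 compatibility - crucial for 79% of enterprise environments (Enterprise Strategy Group).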

Execution Challenges

Success hinges on:

- oneAPI maturity: Must abstract hybrid programming complexity

- Memory hierarchy: Balancing SRAM (CPU) vs HBM (accelerator) access

- Thermal design: Managing divergent power profiles

If executed properly, Intel could capture 12-18% of the $42B edge AI market by 2026 (ABI Research projections) currently dominated by Nvidia's Orin and AMD's Versal.
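Taken at face value, the projected share range translates into the following revenue band. This is simple arithmetic on the cited figures, not an independent forecast:

```latex
\begin{aligned}
0.12 \times \$42\,\text{B} &= \$5.04\,\text{B} \\
0.18 \times \$42\,\text{B} &= \$7.56\,\text{B}
\end{aligned}
```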

This hybrid strategy represents Intel's most significant architectural pivot since Nehalem, potentially reshaping accelerator economics for next-generation AI workloads.
