NVIDIA engineers restructured printf operations in Linux's memory controller statistics code, achieving measurable performance gains for data center workloads.

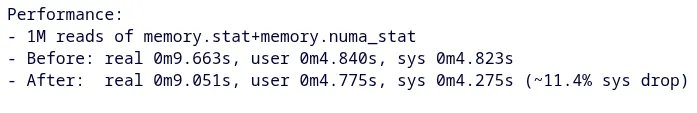

NVIDIA continues expanding its Linux kernel expertise beyond GPU drivers with a new optimization targeting memory resource controller (memcg) statistics handling. The patch restructures the printf-style formatting that runs when the /sys/fs/cgroup/memory.stat and /sys/fs/cgroup/memory.numa_stat files are read, reducing system time by about 11% in NVIDIA's stats collection benchmark.

The Memory Controller Bottleneck

Linux's memory controller (memcg) tracks per-cgroup memory usage statistics critical for containerized environments and cloud infrastructure. When monitoring tools like cAdvisor or custom orchestration systems poll these stats (sometimes thousands of times per second), inefficient formatting becomes noticeable at scale.

NVIDIA's Technical Approach

The optimization replaces generic seq_printf() and seq_buf_printf() calls with specialized helpers (memcg_seq_put_name_val() and memcg_seq_buf_put_name_val()). These bypass printf's format parsing overhead for the specific "name value\n" pattern used in memcg output. Benchmarking over 1 million stat dump operations revealed:

| Implementation | System Time per Million Reads | Relative Change |
|---|---|---|
| Original | 9.0 seconds | Baseline |
| NVIDIA Patch | 8.0 seconds | 11% Reduction |

The saving per read is small: roughly 1 second spread over 1 million reads, or about one microsecond each. The effect adds up in large-scale deployments (e.g., Kubernetes nodes handling hundreds of containers) where these files are polled continuously.
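To make the idea concrete, here is a minimal userspace sketch of emitting the fixed "name value\n" pattern without going through a format-string parser. It is not the kernel patch itself: the function name `put_name_val`, its signature, and its buffer handling are assumptions made for illustration, and the real helpers operate on the kernel's seq_file and seq_buf structures.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/*
 * Hypothetical userspace analogue of a "name value\n" emitter.
 * Writes a NUL-terminated line into buf (capacity cap) and returns the
 * number of bytes written excluding the NUL, or 0 if it does not fit.
 */
static size_t put_name_val(char *buf, size_t cap, const char *name,
                           uint64_t val)
{
    char digits[20];                /* UINT64_MAX has 20 decimal digits */
    size_t ndigits = 0;
    size_t name_len, total;

    assert(buf != NULL && name != NULL);
    name_len = strlen(name);

    /* Convert the value to decimal, least significant digit first. */
    do {
        digits[ndigits++] = (char)('0' + val % 10);
        val /= 10;
    } while (val != 0);

    /* name + ' ' + digits + '\n', plus one byte for the NUL. */
    total = name_len + 1 + ndigits + 1;
    if (total + 1 > cap)
        return 0;

    memcpy(buf, name, name_len);
    buf += name_len;
    *buf++ = ' ';
    while (ndigits > 0)             /* emit digits in reading order */
        *buf++ = digits[--ndigits];
    *buf++ = '\n';
    *buf = '\0';

    return total;
}
```

Calling `put_name_val(out, sizeof(out), "anon", 4096)` fills `out` with the line `anon 4096` followed by a newline. The design point is that the output shape is known in advance, so the work reduces to one copy and one integer-to-decimal conversion, with no per-call scan of a format string.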

Deployment and Compatibility

- Patch Status: Currently under review on Linux kernel mailing lists

- Compatibility: Targets mainline kernel (v6.8+ expected)

- Overhead Reduction: Primarily benefits systems with frequent memcg stat polling

Why This Matters for Homelabs and Data Centers

- Power Efficiency: Reduced CPU time translates to lower power consumption during monitoring operations

- Latency-Sensitive Workloads: Frees CPU cycles for application tasks in container-heavy environments

- NVIDIA's Expanding Kernel Role: Demonstrates investment in foundational Linux infrastructure beyond GPU domains

This optimization exemplifies the "no stone unturned" philosophy in performance tuning. As Intel reduces kernel engineering investments, NVIDIA fills gaps with targeted improvements benefiting the entire Linux ecosystem. Systems administrators deploying containerized workloads should track this patch for future kernel upgrades.

Testing Methodology Note: NVIDIA used isolated benchmarks dumping stats in tight loops on systems under memory pressure to simulate worst-case scenarios.
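For readers who want to try a comparable measurement, the sketch below reads a stats file repeatedly in a tight loop and reports the system (kernel) CPU time consumed, using the standard POSIX `getrusage()` call. This is not NVIDIA's benchmark: the helper names `sys_cpu_seconds` and `sys_seconds_for_reads`, the 64 KiB read buffer, and the error convention are all assumptions, and it does not reproduce the memory-pressure setup described above.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <fcntl.h>
#include <sys/resource.h>
#include <sys/time.h>
#include <unistd.h>

/* System (kernel) CPU time used so far by this process, in seconds. */
static double sys_cpu_seconds(void)
{
    struct rusage ru;

    if (getrusage(RUSAGE_SELF, &ru) != 0)
        return -1.0;
    return (double)ru.ru_stime.tv_sec + (double)ru.ru_stime.tv_usec / 1e6;
}

/*
 * Hypothetical helper: open, fully read, and close `path` `iterations`
 * times. Returns the system time spent in seconds, or -1.0 on error.
 */
static double sys_seconds_for_reads(const char *path, long iterations)
{
    char buf[65536];
    double start, end;

    assert(path != NULL);

    start = sys_cpu_seconds();
    if (start < 0.0)
        return -1.0;

    for (long i = 0; i < iterations; i++) {
        ssize_t n;
        int fd = open(path, O_RDONLY);

        if (fd < 0)
            return -1.0;
        /* Drain the file so the kernel formats the whole stat dump. */
        while ((n = read(fd, buf, sizeof(buf))) > 0)
            ;
        close(fd);
        if (n < 0)
            return -1.0;
    }

    end = sys_cpu_seconds();
    if (end < 0.0)
        return -1.0;
    return end - start;
}
```

A call such as `sys_seconds_for_reads("/sys/fs/cgroup/memory.stat", 1000000)` would give a figure loosely comparable to the table's "System Time per Million Reads" column, though absolute numbers will depend on the hardware, the kernel version, and the number of cgroups present.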
