OpenAI's Open Responses specification introduces standardized agentic AI workflows, enabling developers to switch between proprietary and open-source models without rewriting integration code.

OpenAI has released Open Responses, an open specification designed to standardize agentic AI workflows and reduce API fragmentation across the rapidly evolving LLM ecosystem. The specification, supported by partners including Hugging Face, Vercel, and local inference providers, introduces unified standards for agentic loops, reasoning visibility, and internal versus external tool execution.

The core challenge Open Responses addresses is the current fragmentation in how different LLM providers handle multi-step agentic workflows. Developers today face the burden of writing custom wrappers and glue code to adapt their applications when switching between models from OpenAI, Anthropic, or open-source alternatives. This specification aims to eliminate that overhead by providing a common framework for agentic interactions.

Core Concepts: Items, Tool Use, and Agentic Loops

The specification rests on three core concepts that underpin agentic workflows:

Items are the atomic units of a workflow, each representing model input, model output, a tool invocation, or reasoning state. Base item types include message, function_call, and reasoning. Items are also extensible: providers can emit custom item types beyond the base specification to represent additional workflow states.
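As a rough illustration, the sketch below treats items as plain JSON-like dictionaries and summarizes each one by its `type`. The field names (`role`, `content`, `call_id`, `name`, `arguments`) are modeled on OpenAI's Responses API and are assumptions here, not quotes from the Open Responses schema. `describe_item` and the `acme:search_progress` custom type are hypothetical. The important part is the fallback branch: an unknown type is passed through rather than rejected, which is what extensibility asks of a client.

```python
from typing import Any

def describe_item(item: dict[str, Any]) -> str:
    """Return a one-line summary of an item, tolerating unknown types."""
    kind = item.get("type")
    if kind == "message":
        text = " ".join(part.get("text", "") for part in item.get("content", []))
        return f"{item.get('role', '?')}: {text}"
    if kind == "function_call":
        return f"call {item['name']}({item['arguments']}) [{item['call_id']}]"
    if kind == "reasoning":
        return "reasoning item"
    # Extensibility: provider-specific item types are passed through, not rejected.
    return f"custom item of type {kind!r}"

# Hypothetical items; field names are assumptions modeled on the Responses API.
items = [
    {"type": "message", "role": "user",
     "content": [{"type": "input_text", "text": "What's the weather in Paris?"}]},
    {"type": "reasoning", "summary": []},
    {"type": "function_call", "call_id": "call_1", "name": "get_weather",
     "arguments": '{"city": "Paris"}'},
    {"type": "acme:search_progress", "percent": 50},  # hypothetical custom type
]

for item in items:
    print(describe_item(item))
```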

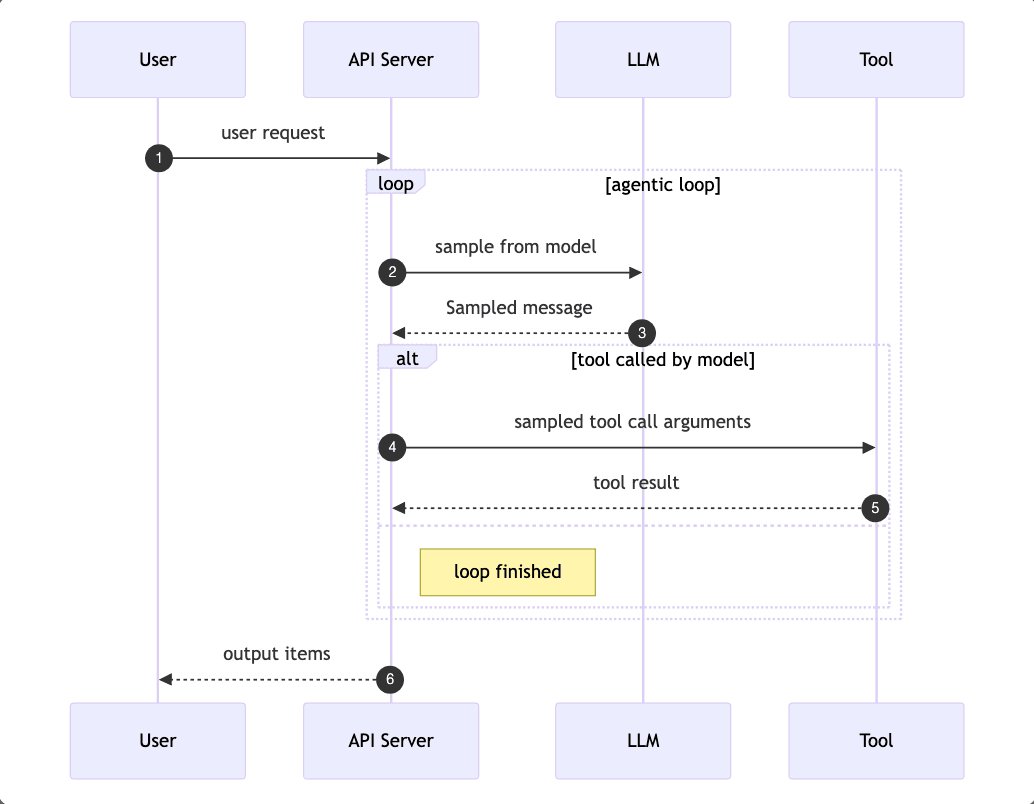

Tool use distinguishes between internal and external execution. Internal tools run directly within the provider's infrastructure, so the model can manage the entire agentic loop on its own. This enables workflows such as searching documents and summarizing the findings before returning a final result, all in a single API round-trip. External tools, by contrast, run in the developer's application code: the provider pauses the loop and requests a tool call, and the developer executes the tool and returns its output so the loop can continue.
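On the client side, the distinction comes down to which output items need action. In this minimal sketch, assuming Responses-style item shapes, any item of type `function_call` is an external tool request that the application must run and answer with a `function_call_output` item carrying the same `call_id`. Other items, including a record of a tool the provider already ran internally (represented here by a hypothetical `web_search_call`), are left alone. `LOCAL_TOOLS`, `get_weather`, and `run_external_calls` are illustrative names, not part of the specification.

```python
import json
from typing import Any, Callable

# Hypothetical external tools: these run in the developer's own process.
LOCAL_TOOLS: dict[str, Callable[..., Any]] = {
    "get_weather": lambda city: {"city": city, "temp_c": 18},  # stubbed result
}

def run_external_calls(output_items: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Execute pending function_call items and build their output items.

    Other item types (messages, reasoning, or records of tools the provider
    already ran internally) need no client action and are skipped.
    """
    results = []
    for item in output_items:
        if item.get("type") != "function_call":
            continue
        tool = LOCAL_TOOLS[item["name"]]
        args = json.loads(item["arguments"])  # arguments arrive as a JSON string
        results.append({
            "type": "function_call_output",
            "call_id": item["call_id"],  # pairs the result with its request
            "output": json.dumps(tool(**args)),
        })
    return results

response_output = [
    {"type": "web_search_call", "status": "completed"},  # hypothetical internal-tool record
    {"type": "function_call", "call_id": "call_1", "name": "get_weather",
     "arguments": '{"city": "Paris"}'},
]
print(run_external_calls(response_output))
```

The returned items would then be appended to the conversation and sent back to the provider to resume the loop.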

Agentic loops formalize the repeating cycles of reasoning, tool invocation, and reflection that characterize modern AI agents. By standardizing how these loops are managed, the specification enables model providers to process complex workflows within their infrastructure and return final results efficiently.
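A loop driven from the developer's side (the external-tool case) can be sketched as follows. `fake_model` is a hypothetical stand-in for a provider call that first requests a tool and then answers once the tool output appears in its input; the item shapes are assumptions modeled on the Responses API. The loop ends when a turn produces no function calls, and `max_turns` bounds it so a misbehaving model cannot cycle indefinitely.

```python
import json
from typing import Any

def fake_model(input_items: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Hypothetical provider stub: request a tool once, then answer."""
    results = [i for i in input_items if i["type"] == "function_call_output"]
    if not results:
        return [{"type": "function_call", "call_id": "call_1",
                 "name": "add", "arguments": '{"a": 2, "b": 3}'}]
    return [{"type": "message", "role": "assistant",
             "content": [{"type": "output_text",
                          "text": f"The sum is {results[0]['output']}."}]}]

TOOLS = {"add": lambda a, b: a + b}  # hypothetical external tool

def agent_loop(user_text: str, max_turns: int = 5) -> str:
    items = [{"type": "message", "role": "user",
              "content": [{"type": "input_text", "text": user_text}]}]
    for _ in range(max_turns):
        output = fake_model(items)
        items.extend(output)  # keep the full transcript as context
        calls = [i for i in output if i["type"] == "function_call"]
        if not calls:
            # No pending tool calls: the loop is done; return the final text.
            return " ".join(part["text"] for i in output if i["type"] == "message"
                            for part in i["content"])
        for call in calls:
            args = json.loads(call["arguments"])
            items.append({"type": "function_call_output", "call_id": call["call_id"],
                          "output": str(TOOLS[call["name"]](**args))})
    raise RuntimeError("agent did not finish within max_turns")

print(agent_loop("What is 2 + 3?"))
```

With internal tools, the equivalent iterations would happen inside the provider, and the client would receive their result from a single call.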

Reasoning Visibility and Provider Control

One of the most significant features is the reasoning item type, which exposes model thought processes in a provider-controlled manner. The payload can include raw reasoning content, protected content, or summaries, giving developers visibility into how models reach conclusions while allowing providers to control disclosure. This balances transparency with the need to protect proprietary reasoning patterns and prevent potential misuse of raw thought processes.
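Handling the three disclosure levels on the client might look like the sketch below. The field names `content`, `summary`, and `encrypted_content` are assumptions modeled on Responses-style reasoning items, and `reasoning_for_display` is a hypothetical helper. It shows raw reasoning when the provider exposes it, falls back to a summary, and otherwise treats protected content as opaque.

```python
from typing import Any

def reasoning_for_display(item: dict[str, Any]) -> str:
    """Return the most detailed reasoning view the provider chose to expose."""
    if item.get("content"):
        # Raw reasoning text, when the provider discloses it.
        return "\n".join(part["text"] for part in item["content"])
    if item.get("summary"):
        # A provider-written summary instead of the raw trace.
        return "Summary: " + " ".join(part["text"] for part in item["summary"])
    if item.get("encrypted_content"):
        # Protected reasoning is opaque to the client; it is typically kept
        # unchanged so it can be sent back to the provider on a later turn.
        return "[reasoning withheld by provider]"
    return "[no reasoning exposed]"

# Hypothetical reasoning items illustrating each disclosure level.
raw = {"type": "reasoning",
       "content": [{"type": "reasoning_text", "text": "Check the units first."}]}
summarized = {"type": "reasoning",
              "summary": [{"type": "summary_text", "text": "Converted units, then compared."}]}
protected = {"type": "reasoning", "summary": [], "encrypted_content": "opaque-token"}

for item in (raw, summarized, protected):
    print(reasoning_for_display(item))
```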

Early Adoption and Ecosystem Impact

The specification has already seen early adoption from key players in the AI ecosystem. Hugging Face, OpenRouter, and Vercel have committed to supporting the standard, while local inference providers like LM Studio, Ollama, and vLLM enable standardized agentic workflows on local machines. This broad support suggests the specification could become a de facto standard for agentic AI development.

The announcement has sparked discussion about vendor lock-in and ecosystem maturity. Rituraj Pramanik, an AI developer, noted the irony of building an "open" standard on top of OpenAI's API but emphasized the practical benefits: "The real nightmare is fragmentation; we waste so much time gluing different schemas together. If this spec stops me from writing another 'wrapper for a wrapper' and makes model swapping painless, you are solving the single biggest headache in agentic development."

Implications for Open Source and Local Models

AI developer and educator Sam Witteveen predicts significant implications for the open-source model landscape: "Expect frontier open model labs (Qwen, Kimi, DeepSeek) to train models compatible with BOTH Open Responses AND the Anthropic API. Ollama just announced Anthropic API compatibility too, meaning high-quality local models running with the ability to use Claude Code tools is not too far away."

This compatibility could enable developers to run sophisticated agentic workflows locally while maintaining access to the same tool ecosystems available in cloud-based solutions. The ability to switch between proprietary and open models without rewriting integration code represents a significant step toward a more open and competitive AI ecosystem.
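To make the portability point concrete: if every provider accepts the same request shape, switching between a hosted model and a local one reduces to a configuration change. The sketch below assumes a Responses-style `POST {base_url}/responses` endpoint that takes `model` and `input` fields, as in OpenAI's Responses API; the URLs, model names, and `build_request` helper are placeholders, not real endpoints. It only builds the request, so it runs without network access.

```python
import json
from dataclasses import dataclass
from typing import Any

@dataclass(frozen=True)
class Provider:
    base_url: str
    model: str

# Hypothetical endpoints and model names; substitute real ones.
PROVIDERS = {
    "cloud": Provider("https://api.example.com/v1", "frontier-model"),
    "local": Provider("http://localhost:8000/v1", "open-weights-model"),
}

def build_request(provider: Provider, items: list[dict[str, Any]]) -> tuple[str, bytes]:
    """Return the URL and JSON body for a Responses-style request.

    Only the base URL and model name differ between providers; the item
    payload is shared unchanged.
    """
    url = provider.base_url.rstrip("/") + "/responses"
    body = json.dumps({"model": provider.model, "input": items}).encode("utf-8")
    return url, body

items = [{"type": "message", "role": "user",
          "content": [{"type": "input_text", "text": "Summarize this repo."}]}]

for name, provider in PROVIDERS.items():
    url, body = build_request(provider, items)
    print(name, url)
```

The body could then be sent with any HTTP client; the application code that produces and consumes items stays the same for both providers.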

Technical Implementation and Developer Experience

The specification supports multimodal inputs, streaming events, and cross-provider tool calling, addressing many of the technical challenges developers face when building agentic applications. By reducing the translation work required when switching between frontier models and open-source alternatives, Open Responses could dramatically simplify the development process.
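Streaming is delivered as a sequence of typed events. The sketch below parses server-sent events and accumulates text deltas. The event names `response.output_text.delta` and `response.completed` and the `delta` field follow OpenAI's Responses API streaming format and are assumed, not confirmed, to match Open Responses; `iter_sse_events` and `collect_text` are hypothetical helpers.

```python
import json
from typing import Any, Iterable, Iterator

def iter_sse_events(lines: Iterable[str]) -> Iterator[dict[str, Any]]:
    """Yield the JSON payload of each server-sent event (its `data:` lines)."""
    data_lines: list[str] = []
    for line in lines:
        line = line.rstrip("\n")
        if line.startswith("data:"):
            data_lines.append(line[len("data:"):].strip())
        elif line == "" and data_lines:
            # A blank line terminates one event.
            yield json.loads("\n".join(data_lines))
            data_lines = []
    if data_lines:
        yield json.loads("\n".join(data_lines))

def collect_text(lines: Iterable[str]) -> str:
    """Concatenate text deltas until the (assumed) completion event."""
    text = []
    for event in iter_sse_events(lines):
        if event.get("type") == "response.output_text.delta":
            text.append(event["delta"])
        elif event.get("type") == "response.completed":
            break
    return "".join(text)

# A hypothetical captured stream.
stream = [
    "event: response.output_text.delta\n",
    'data: {"type": "response.output_text.delta", "delta": "Hel"}\n',
    "\n",
    "event: response.output_text.delta\n",
    'data: {"type": "response.output_text.delta", "delta": "lo"}\n',
    "\n",
    "event: response.completed\n",
    'data: {"type": "response.completed"}\n',
    "\n",
]
print(collect_text(stream))
```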

Hugging Face has released a demo application that shows the specification in action, and a compliance test tool lets providers verify their implementations against the standard. The schema and specification are available on the project's official website, giving implementers a clear path to adoption.

Industry Context and Future Directions

The release of Open Responses comes amid growing recognition that the AI ecosystem needs standardization to achieve its full potential. As agentic AI systems become more sophisticated and widely deployed, the ability to seamlessly integrate different models and tools becomes increasingly critical.

The specification represents a pragmatic approach to standardization, building on existing patterns while providing a clear path for evolution. By focusing on the specific challenges of agentic workflows rather than attempting to standardize all aspects of LLM interaction, Open Responses targets a critical pain point in the developer experience.

For developers building production AI applications, the implications are significant. The ability to switch between models without rewriting integration code could accelerate experimentation and reduce vendor lock-in risks. For the broader ecosystem, increased standardization could lead to more robust tooling, better interoperability, and faster innovation as developers can focus on building applications rather than managing integration complexity.

As the specification gains adoption and more providers implement support, it could become a foundational element of the agentic AI landscape, enabling the kind of interoperability and openness that has characterized successful technology ecosystems in other domains.
