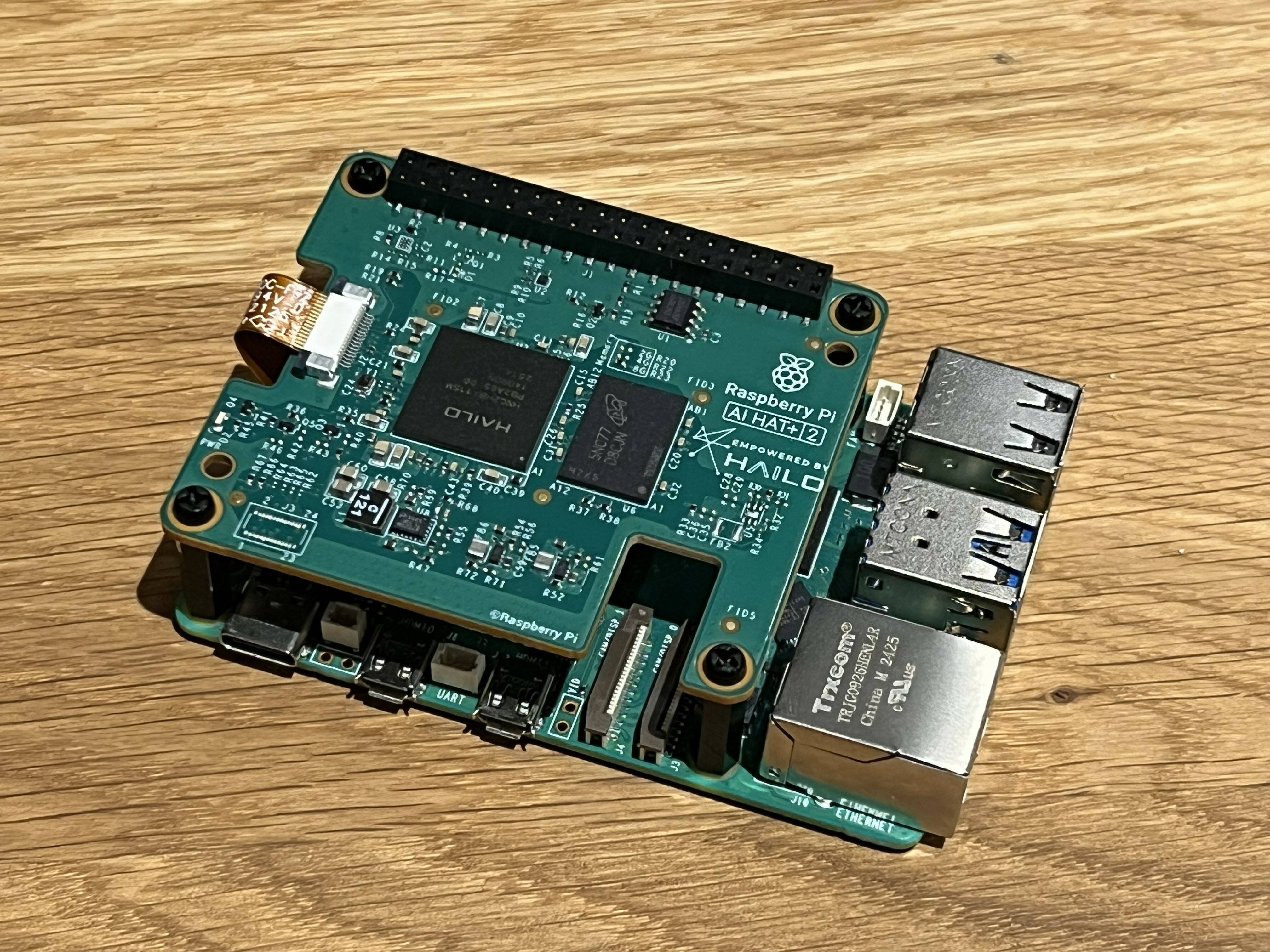

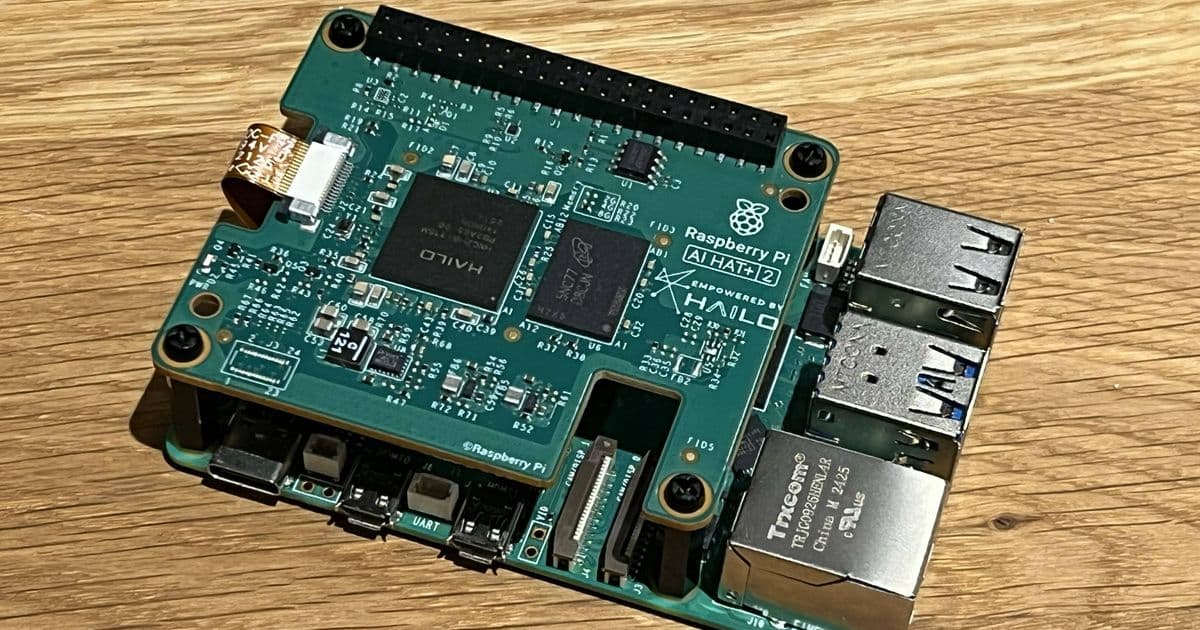

Raspberry Pi's new AI HAT+ 2 brings 40 TOPS of inference performance and 8GB of onboard memory to the Pi 5, targeting local AI workloads. The hardware specifications are impressive for a $130 add-on, but its practical utility depends heavily on the use case, and in particular on whether that is local LLM processing or the more common computer vision tasks.

Raspberry Pi has launched the AI HAT+ 2, a new add-on board that brings significant AI processing capabilities to the Raspberry Pi 5. The board features a Hailo-10H neural network accelerator delivering 40 TOPS (INT4) of inference performance, paired with 8GB of onboard RAM. This combination is specifically designed to accelerate large language models (LLMs), vision language models (VLMs), and other generative AI applications while offloading processing from the host Pi.

The board connects to the Pi's GPIO header and communicates over the PCIe interface, like its predecessor. The 8GB of onboard memory is a notable addition, intended to keep AI applications from consuming the Pi's main system memory. However, 8GB is modest next to the memory demands of modern AI models, which raises questions about how much practical work the board enables.

Hardware Specifications and Performance

The AI HAT+ 2 delivers 40 TOPS of inference performance, up from the 26 TOPS of the previous AI HAT+. That figure is quoted at INT4 precision, a low-bit integer format that trades some accuracy for computational efficiency. For computer vision tasks, performance is roughly equivalent to the earlier board's 26 TOPS, so users focused solely on vision processing are unlikely to see significant benefits from upgrading.
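
To make the INT4 trade-off concrete, the sketch below applies a generic symmetric per-tensor 4-bit quantization to a handful of arbitrary example weights and measures the rounding error. This is a textbook scheme chosen for illustration, not Hailo's actual quantization toolchain, and the weight values are made up.

```python
def quantize_int4(weights):
    """Generic symmetric per-tensor INT4 quantization (illustrative, not Hailo's method).

    Maps each float to an integer in the signed 4-bit range [-8, 7].
    """
    max_abs = max(abs(w) for w in weights)
    # Scale so the largest-magnitude weight maps to 7; guard against all-zero input.
    scale = max_abs / 7 if max_abs > 0 else 1.0
    # Round to the nearest integer step, then clamp to the 4-bit range.
    q = [max(-8, min(7, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from 4-bit integers."""
    return [v * scale for v in q]


weights = [0.12, -0.53, 0.88, -0.07, 0.31]  # arbitrary example weights
q, scale = quantize_int4(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("quantized:", q)
print(f"scale={scale:.4f}, max abs error={max_error:.4f}")
```

Each weight is stored in 4 bits instead of 16 or 32, at the cost of a reconstruction error of up to half a quantization step (`scale / 2`); real toolchains typically narrow this with per-channel scales and calibration data.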

The board includes an optional passive heatsink, though active cooling is strongly recommended given the thermal output of the Hailo-10H chip. Raspberry Pi supplies spacers and screws so the board can be fitted alongside its official Active Cooler.

Software Integration and Model Support

Integration with the Raspberry Pi ecosystem is straightforward. The hardware is natively supported by rpicam-apps applications, and the company reports successful testing with Docker and the hailo-ollama server running the Qwen2 model. At launch, Raspberry Pi announced support for several models, including DeepSeek-R1-Distill, Llama3.2, Qwen2.5-Coder, Qwen2.5-Instruct, and Qwen2.
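
Ollama servers are normally queried over a small HTTP API. The sketch below assumes, without confirmation from the source, that hailo-ollama exposes the same `/api/generate` endpoint as upstream Ollama; the address (upstream Ollama's default port 11434) and the model tag `qwen2:1.5b` are hypothetical placeholders to adjust for an actual installation.

```python
import json
import urllib.request

# ASSUMPTIONS: hailo-ollama is taken to accept Ollama-style /api/generate requests.
# The URL and model tag below are hypothetical placeholders, not documented values.
BASE_URL = "http://localhost:11434"  # upstream Ollama's default port; hailo-ollama may differ
MODEL = "qwen2:1.5b"                 # hypothetical model tag


def build_generate_request(prompt, base_url=BASE_URL, model=MODEL):
    """Build a non-streaming POST request in the Ollama /api/generate format."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, timeout=120):
    """Send the request and return the generated text.

    Upstream Ollama returns the completion in the "response" field of the JSON reply.
    """
    req = build_generate_request(prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

With a compatible server running, `generate("Explain PCIe in one sentence.")` should return the model's reply as a string; if hailo-ollama uses a different route or port, only `build_generate_request` needs to change.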

Notably, all supported models except Llama3.2 are 1.5-billion-parameter models. That is modest next to cloud-based LLMs from OpenAI, Meta, and Anthropic, which are estimated to range from roughly 500 billion to 2 trillion parameters (exact sizes are often undisclosed). Raspberry Pi acknowledges this gap but emphasizes that these models work well within the hardware constraints of edge applications.

Practical Considerations and Use Cases

The fundamental question surrounding the AI HAT+ 2 is its practical utility. For users with computer vision requirements, the existing AI HAT+ or even the $70 AI camera may be more cost-effective options. The $130 price point makes the AI HAT+ 2 a significant investment that needs clear justification.

The primary use case appears to be local LLM processing. The 8GB of onboard RAM can handle model weights that would otherwise consume the Pi's system memory, though this advantage is tempered by the fact that Raspberry Pi 5 is available with up to 16GB of RAM. For many applications, simply purchasing a higher-memory Pi 5 might be more economical than adding the AI HAT+ 2.
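
A rough way to see why 1.5-billion-parameter models suit this board is to estimate the memory their weights occupy: parameters × bits per weight ÷ 8 bytes. The sketch below counts weights only and ignores the KV cache, activations, and runtime overhead, so real usage will be higher; the 500-billion figure is the lower end of the cloud-model range quoted earlier.

```python
def weight_memory_gb(params_billion, bits_per_weight):
    """Approximate memory for model weights alone: params * bits / 8 bytes.

    Ignores KV cache, activations and runtime overhead; returns decimal gigabytes.
    """
    bytes_total = params_billion * 1e9 * bits_per_weight / 8
    return bytes_total / 1e9


ONBOARD_GB = 8  # AI HAT+ 2 onboard RAM

for params in (1.5, 500):
    for bits in (4, 8, 16):
        gb = weight_memory_gb(params, bits)
        fits = "fits" if gb <= ONBOARD_GB else "does not fit"
        print(f"{params:>6}B params @ {bits:>2}-bit: {gb:8.2f} GB ({fits} in {ONBOARD_GB} GB)")
```

At 4 bits, a 1.5B model needs about 0.75 GB for its weights, leaving most of the 8GB for context and runtime buffers, whereas a 500B model would need roughly 250 GB even at that precision.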

Edge Computing Context

The AI HAT+ 2 fits into a broader trend toward edge AI computing, where processing occurs locally rather than in the cloud. This approach offers several advantages: reduced latency, improved privacy (data doesn't leave the device), and lower operational costs. For applications requiring real-time response or operating in environments with limited internet connectivity, local processing is essential.

However, the hardware constraints of edge devices like the Raspberry Pi 5 necessitate compromises. The supported models are significantly smaller than their cloud counterparts, which may limit the complexity of tasks they can handle. For example, while these models can perform text generation, summarization, and basic question-answering, they may struggle with more sophisticated reasoning or specialized domain knowledge.

Cost-Benefit Analysis

The AI HAT+ 2's $130 price represents a substantial portion of a Raspberry Pi 5 system's total cost. A complete setup including a Pi 5 (8GB), power supply, storage, and the AI HAT+ 2 could exceed $250. For comparison, cloud-based AI services offer access to much larger models for a per-use fee, though this introduces ongoing costs and data transmission requirements.

The decision between local and cloud processing depends on several factors:

- Data sensitivity: Local processing keeps sensitive data on-device

- Latency requirements: Local processing eliminates network delays

- Usage volume: High-volume processing may favor local hardware

- Model requirements: If you need models larger than 1.5B parameters, cloud services may be necessary
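
The usage-volume factor can be framed as a break-even calculation: local hardware pays for itself once the per-request savings over a cloud service cover its upfront cost. In the sketch below, the $250 hardware figure echoes the complete-setup estimate above, while the per-request cloud and electricity costs are hypothetical placeholders rather than real prices.

```python
import math


def break_even_requests(hardware_cost, cloud_cost_per_request, local_cost_per_request=0.0):
    """Requests after which owning local hardware becomes cheaper than paying per use."""
    saving_per_request = cloud_cost_per_request - local_cost_per_request
    if saving_per_request <= 0:
        # Local never pays off if each request costs as much locally as in the cloud.
        return math.inf
    return math.ceil(hardware_cost / saving_per_request)


# Illustrative numbers only: $250 approximates the complete-setup estimate;
# the per-request figures are HYPOTHETICAL placeholders, not quoted prices.
HARDWARE_COST = 250.0
CLOUD_PER_REQUEST = 0.002   # hypothetical cloud API fee per request
LOCAL_PER_REQUEST = 0.0002  # hypothetical electricity cost per local request

n = break_even_requests(HARDWARE_COST, CLOUD_PER_REQUEST, LOCAL_PER_REQUEST)
print(f"Break-even after about {n:,} requests")
```

With these placeholder numbers the break-even point is roughly 139,000 requests, and halving the cloud fee more than doubles it. The calculation deliberately ignores the model-size gap, which the last factor in the list covers.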

Future Developments

Raspberry Pi has indicated that larger models will be available in future updates. This suggests the company recognizes the current model size limitation and plans to expand capabilities as hardware and software optimizations allow. The Hailo-10H accelerator is designed for efficiency, which may enable larger models as software frameworks improve.

The broader ecosystem is also evolving. Frameworks like Ollama and specialized libraries are making it easier to run LLMs on edge hardware. As these tools mature, the value proposition of dedicated AI accelerators like the AI HAT+ 2 may become clearer.

Conclusion

The Raspberry Pi AI HAT+ 2 represents a capable piece of hardware for local AI processing, particularly for LLM workloads. Its 40 TOPS performance and dedicated memory make it a genuine option for edge AI applications. However, its practical value depends heavily on specific requirements.

For users focused on computer vision, existing solutions may be more appropriate. For those needing local LLM processing with models up to 1.5B parameters, the AI HAT+ 2 offers a viable solution. The decision ultimately comes down to whether the benefits of local processing—privacy, latency, and cost control—outweigh the limitations of smaller models and the hardware investment.

As the edge AI ecosystem continues to develop, products like the AI HAT+ 2 will likely find clearer use cases and more optimized software stacks. For now, it remains a specialized tool for specific applications rather than a general-purpose upgrade for all Raspberry Pi users.
