A developer's four-hour experiment creating a SQL client exclusively through AI-assisted 'vibe-coding' yields a functional desktop app but exposes critical concerns about maintainability. The Seaquel project demonstrates LLMs' coding capabilities while highlighting the risks of uncurated AI-generated codebases.

The emergence of "vibe-coding"—the practice of creating software exclusively through AI-generated code—promises effortless development. Skeptical of these claims, a developer with two decades of experience conducted an experiment: build a SQL database GUI called Seaquel entirely through LLM interactions without manually writing a single line of code.

The Vibe-Coding Process

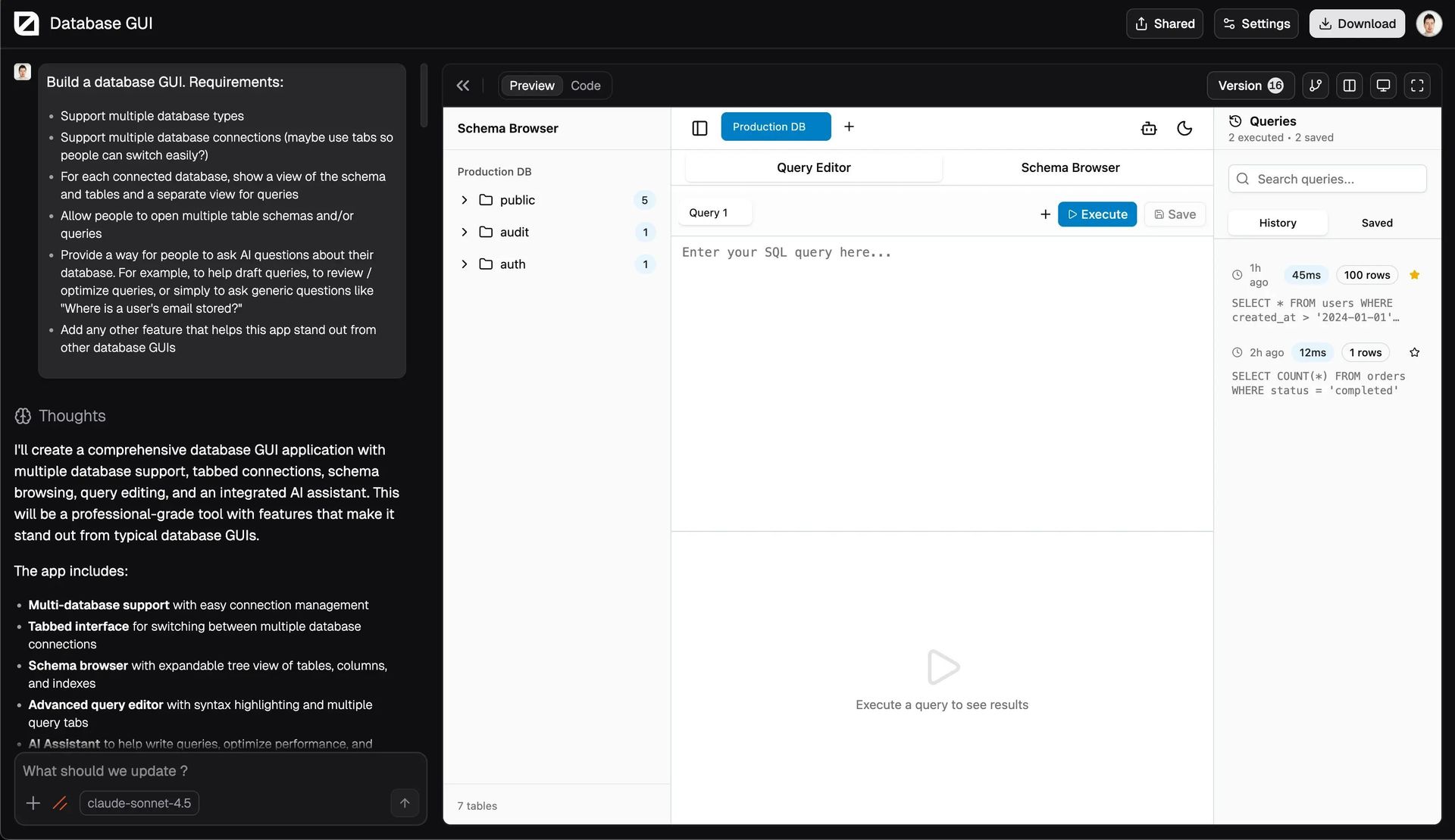

Using Svelte0.com for frontend generation, the developer fed AI systems increasingly specific prompts over 15 iterations to design a database client interface. The requirements included multi-database support, connection management via tabs, schema visualization, and AI-assisted query building. The screenshot above shows the initial UI output using dummy data.

"My laptop had been asleep for a while. Well rested, I woke it up and continued my chat-like conversation with Svelte0"

The project combined Tauri for desktop runtime efficiency and SvelteKit for the frontend. After setting up the project locally, the developer used Zed IDE's integrated LLMs (Claude) to replace dummy data with actual database connectivity:

- Added Tailwind CSS and shadcn-svelte

- Generated connection string handling

- Implemented live schema inspection

- Built SQL query execution
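To make the "connection string handling" step more concrete, here is a minimal sketch of what such a helper might look like. It is not taken from Seaquel's source: the `ConnectionConfig` shape and the `parseConnectionString` function are hypothetical names, and the sketch assumes URL-style connection strings that the standard `URL` class can parse.

```typescript
// Hypothetical shape for a parsed connection; not taken from Seaquel's source.
interface ConnectionConfig {
  engine: string;
  host: string;
  port: number | null;
  user: string;
  password: string;
  database: string;
}

// Parse a URL-style connection string such as
// "postgres://user:secret@localhost:5432/shop" with the standard URL class.
function parseConnectionString(raw: string): ConnectionConfig {
  const url = new URL(raw); // throws a TypeError on malformed input
  return {
    engine: url.protocol.replace(/:$/, ""), // "postgres:" -> "postgres"
    host: url.hostname,
    port: url.port === "" ? null : Number(url.port), // null when no port is given
    user: decodeURIComponent(url.username),
    password: decodeURIComponent(url.password),
    database: url.pathname.replace(/^\//, ""), // drop the leading slash
  };
}

const config = parseConnectionString("postgres://admin:s3cret@localhost:5432/shop");
console.log(config.engine, config.host, config.port, config.database);
```

A real client would likely also need engine-specific defaults and query-string options (for example TLS settings), which this sketch leaves out.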

Within four hours, Seaquel could connect to databases, inspect schemas, and execute queries. AI even generated the product name, logo ("Seaquel" as an SEO-friendly variant of "Sequel"), and landing page at seaquel.app.

The Maintainability Paradox

Despite functional success, examining the generated code revealed significant issues. The desktop application's core logic resided in a single 1,007-line database.svelte.ts file—an architectural anti-pattern. The developer notes:

"My main beef with it... is that it's unmaintainable. Unmaintainable code leads to bugs that are hard to debug, hard to fix, and cause problems beyond slow team velocity."

While acknowledging the approach works for MVPs, the developer rejects claims that AI can adequately address complex concerns like security audits, memory leaks, or privacy violations in production systems. The experiment highlights a critical gap: LLMs generate functional code blocks but lack architectural awareness.
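As a rough illustration of the architectural concern, the sketch below shows one way responsibilities bundled into a single state file could be separated. Every name here (`Connection`, `Driver`, `ConnectionTabs`, `tablesQueryFor`) is hypothetical and chosen for illustration; none of it reflects how Seaquel's `database.svelte.ts` is actually organized.

```typescript
// All names below are hypothetical; this is not Seaquel's actual code.

// Unit 1: a plain description of a connection.
interface Connection {
  id: string;
  label: string;
  engine: "postgres" | "mysql" | "sqlite";
}

// Unit 2: engine-specific behaviour hidden behind a small interface.
interface Driver {
  listTablesQuery(): string;
}

const drivers: Record<Connection["engine"], Driver> = {
  postgres: {
    listTablesQuery: () =>
      "SELECT table_name FROM information_schema.tables WHERE table_schema = 'public'",
  },
  mysql: { listTablesQuery: () => "SHOW TABLES" },
  sqlite: { listTablesQuery: () => "SELECT name FROM sqlite_master WHERE type = 'table'" },
};

// Unit 3: tab bookkeeping, which knows nothing about any database engine.
class ConnectionTabs {
  private tabs: Connection[] = [];
  private activeId: string | null = null;

  open(conn: Connection): void {
    // Re-opening an existing id replaces the old entry instead of duplicating it.
    this.tabs = this.tabs.filter((t) => t.id !== conn.id);
    this.tabs.push(conn);
    this.activeId = conn.id;
  }

  close(id: string): void {
    this.tabs = this.tabs.filter((t) => t.id !== id);
    if (this.activeId === id) {
      // Fall back to the most recently opened remaining tab, if any.
      const last = this.tabs[this.tabs.length - 1];
      this.activeId = last ? last.id : null;
    }
  }

  active(): Connection | null {
    const match = this.tabs.filter((t) => t.id === this.activeId)[0];
    return match ? match : null;
  }
}

// Unit 4: glue that picks the right driver for a given connection.
function tablesQueryFor(conn: Connection): string {
  return drivers[conn.engine].listTablesQuery();
}

const tabs = new ConnectionTabs();
tabs.open({ id: "local", label: "Local DB", engine: "sqlite" });
const current = tabs.active();
if (current) console.log(tablesQueryFor(current));
```

Each unit can be read, tested, and replaced on its own, which is the property a single 1,007-line file makes hard to achieve.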

The Efficiency Trap

The project exposes the tension between AI's coding speed and technical debt accumulation. While vibe-coding enabled rapid prototyping, the monolithic output creates future scalability challenges. LLM providers profit from token-based usage whether or not the output is maintainable, so the experiment suggests that human oversight remains essential for sustainable software, particularly where security, privacy, and long-term maintenance matter.

Seaquel's source code remains available for inspection on GitHub (GUI, website), serving as a case study in AI's current coding capabilities and limitations. As detailed in the original MooToday blog post, this experiment validates both the promise and perils of delegating development to large language models.
