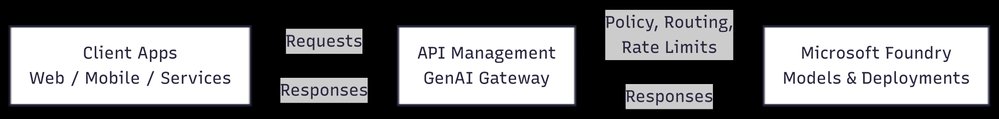

Startups using Microsoft Foundry often reach an inflection point where direct client-to-model integrations become unsustainable because of scaling demands, multi-team usage, and operational complexity. At that point, an API gateway stops being overhead and becomes essential infrastructure.

As startups scale their AI capabilities with Microsoft Foundry, they inevitably reach architectural thresholds where initial simplicity gives way to operational complexity. Early-stage implementations typically have one to three applications calling Foundry endpoints directly, which keeps integration simple and iteration fast. However, three scaling factors eventually force an architectural rethink:

- Client Proliferation: When 5+ services or teams consume the same models

- Traffic Volatility: Unpredictable spikes exceeding 2x baseline capacity

- Governance Requirements: Need for standardized authentication (OAuth2/JWT), tiered rate limits (requests/second), and cost attribution
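To make these thresholds concrete, the following Python sketch checks which of the three scaling factors a deployment currently meets. The `ScalingSignals` type, its field names, and the `gateway_triggers` function are hypothetical illustrations; only the thresholds themselves (5+ consumers, spikes above 2x baseline, a need for centralized governance) come from the list above.

```python
from dataclasses import dataclass


@dataclass
class ScalingSignals:
    """Hypothetical snapshot of the three scaling factors."""
    consuming_services: int          # services or teams calling the same models
    peak_rps: float                  # observed peak requests/second
    baseline_rps: float              # typical requests/second
    needs_central_governance: bool   # shared auth, tiered limits, cost attribution


def gateway_triggers(s: ScalingSignals) -> list[str]:
    """Return the scaling factors that are currently met."""
    triggers = []
    if s.consuming_services >= 5:
        triggers.append("client proliferation")
    # Guard against a zero baseline before computing the spike ratio.
    if s.baseline_rps > 0 and s.peak_rps / s.baseline_rps > 2:
        triggers.append("traffic volatility")
    if s.needs_central_governance:
        triggers.append("governance requirements")
    return triggers


signals = ScalingSignals(consuming_services=6, peak_rps=250,
                         baseline_rps=100, needs_central_governance=False)
print(gateway_triggers(signals))  # ['client proliferation', 'traffic volatility']
```

Any non-empty result suggests it is worth evaluating a gateway; how many triggers justify the move is a judgment call the text leaves open.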

The Scaling Threshold: Direct vs. Gateway Architectures

| Metric | Direct Integration | Gateway-Mediated |
|---|---|---|
| Endpoint Management | Per-client updates required | Single stable API surface |
| Rate Limiting | Client-side enforcement | Centralized policies |
| Model Version Migration | Breaking changes propagate | Zero-downtime canary deployments |
| Observability | Fragmented logs across services | Unified request tracing |
| Auth Complexity | Per-application credentials | Service principal federation |
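Zero-downtime canary deployments depend on the gateway deciding, per request, which model deployment serves it. The Python sketch below shows one common way to do that: weighted routing on a hash of a stable key such as a user or session ID. It is not APIM policy syntax; `pick_deployment` and the deployment names `model-v1`/`model-v2` are hypothetical, and in practice this logic would live in gateway configuration rather than in client code.

```python
import hashlib


def pick_deployment(key: str, stable: str, canary: str, canary_percent: int) -> str:
    """Deterministically send roughly canary_percent% of keys to the canary deployment."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # stable bucket in [0, 100)
    return canary if bucket < canary_percent else stable


# Send about 10% of users to the new model version.
print(pick_deployment("user-42", "model-v1", "model-v2", canary_percent=10))
```

Hashing a stable key, rather than choosing randomly per request, keeps each caller pinned to one version, which makes side-by-side comparison cleaner. Raising `canary_percent` to 100 completes the migration without any client change, which is the property the table attributes to the gateway.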

At this point, Azure API Management (APIM) can serve as the central control plane, providing:

- AI-Specific Policies: Model-aware routing, GenAI prompt inspection, and compute-unit quotas

- Progressive Governance: Start with basic routing (see configuration examples), then add:
  - Cost attribution tags
  - Response caching
  - Regional failover

- Financial Control: Per-team usage dashboards showing compute expenditure
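Centralized rate limits (in requests/second) and per-team cost attribution can be pictured as one token bucket per team plus a running usage counter held at the gateway. The sketch below is a minimal, single-process illustration under that assumption; the `TeamRateLimiter` class, team names, and limits are hypothetical, and it does not reproduce APIM's built-in rate-limiting policies.

```python
import time
from collections import defaultdict
from typing import Callable


class TeamRateLimiter:
    """Hypothetical centralized limiter: one token bucket per team, plus usage totals."""

    def __init__(self, rate_per_sec: dict[str, float], burst: dict[str, float],
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate_per_sec
        self.burst = burst
        self.clock = clock
        self.tokens = dict(burst)              # each bucket starts full
        self.last = {team: clock() for team in burst}
        self.usage = defaultdict(int)          # team -> model tokens consumed

    def allow(self, team: str) -> bool:
        """Consume one request slot for the team, or refuse if the bucket is empty."""
        now = self.clock()
        elapsed = now - self.last[team]
        self.last[team] = now
        # Refill in proportion to elapsed time, capped at the burst size.
        self.tokens[team] = min(self.burst[team],
                                self.tokens[team] + elapsed * self.rate[team])
        if self.tokens[team] >= 1:
            self.tokens[team] -= 1
            return True
        return False

    def record(self, team: str, model_tokens: int) -> None:
        """Accumulate usage so it can feed per-team cost dashboards."""
        self.usage[team] += model_tokens


limiter = TeamRateLimiter({"search": 5.0}, {"search": 10.0})
if limiter.allow("search"):
    limiter.record("search", 1200)  # e.g. tokens reported by the model response
```

The burst size absorbs short spikes while the refill rate caps sustained throughput. An unknown team raises `KeyError` here; a real gateway would have rejected such a caller at authentication.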

Business Impact Analysis

Startups implementing this pattern report:

- 70% reduction in client-side integration errors during model updates

- 40% decrease in unexpected overage charges through centralized rate limits

- 3x faster incident resolution via unified distributed tracing (OpenTelemetry integration)

Crucially, this approach preserves Foundry's core value proposition while adding enterprise-grade operational controls. As Contoso's case study demonstrates, delaying gateway implementation until the following thresholds are reached results in 50% lower technical debt than adopting a gateway early:

- 3+ teams consume models

- Monthly inference costs exceed $15k

- Production SLAs require 99.9% uptime

The gateway then becomes not just infrastructure but a competitive accelerator, enabling startups to scale AI capabilities without compromising velocity.
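The 99.9% uptime threshold is easier to reason about as a downtime budget: over a 30-day month (43,200 minutes), 99.9% availability allows (1 - 0.999) x 43,200 = 43.2 minutes of downtime. The Python sketch below computes that budget and reports which of the adoption thresholds above are met. The function names are hypothetical, and checking each threshold independently (rather than requiring all three) is an assumption, since the text does not say whether they apply jointly.

```python
MINUTES_PER_30_DAY_MONTH = 30 * 24 * 60  # 43,200 minutes


def downtime_budget_minutes(sla_target: float,
                            period_minutes: int = MINUTES_PER_30_DAY_MONTH) -> float:
    """Allowed downtime for an availability target over a period."""
    return (1 - sla_target) * period_minutes


def thresholds_met(teams: int, monthly_inference_usd: float, sla_target: float) -> list[str]:
    """Report which of the gateway adoption thresholds are currently met."""
    met = []
    if teams >= 3:
        met.append("3+ teams")
    if monthly_inference_usd > 15_000:
        met.append("inference > $15k/month")
    if sla_target >= 0.999:
        met.append("99.9% SLA")
    return met


print(round(downtime_budget_minutes(0.999), 1))  # 43.2
print(thresholds_met(4, 12_000, 0.999))          # ['3+ teams', '99.9% SLA']
```

A 43-minute monthly budget leaves little room for a single regional outage, which is one reason regional failover appears among the governance steps.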

Implementation Roadmap

- Phase 1: Basic reverse proxy with Azure APIM

- Phase 2: Add authentication pre-validation

- Phase 3: Implement model version routing

- Phase 4: Enable cost attribution markers
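The four phases can be read as steps layered onto one request pipeline: Phase 1 is the pass-through proxy, and each later phase adds a check or transformation before the request is forwarded. The sketch below models that layering in Python with plain dictionaries and no network calls. Every name in it (`validate_auth`, `route_model`, `tag_cost`, the `api-key` and `x-cost-center` headers, and the key and deployment tables) is hypothetical; in APIM these steps would be expressed as gateway policies.

```python
from typing import Callable, Optional

Request = dict  # hypothetical shape: {"headers": {...}, "model": "...", ...}
Step = Callable[[Request], Optional[Request]]  # returning None means "reject"

# Hypothetical configuration; real values would live in gateway policy/config.
KNOWN_KEYS = {"key-search-team": "search", "key-support-team": "support"}
MODEL_ROUTES = {"chat-default": "chat-model-v2", "chat-legacy": "chat-model-v1"}


def validate_auth(req: Request) -> Optional[Request]:
    """Phase 2: reject unknown callers before any model capacity is spent."""
    team = KNOWN_KEYS.get(req["headers"].get("api-key", ""))
    return None if team is None else {**req, "team": team}


def route_model(req: Request) -> Optional[Request]:
    """Phase 3: map a stable model alias to a concrete deployment version."""
    deployment = MODEL_ROUTES.get(req["model"])
    return None if deployment is None else {**req, "deployment": deployment}


def tag_cost(req: Request) -> Optional[Request]:
    """Phase 4: attach a cost attribution marker (assumes validate_auth ran first)."""
    return {**req, "headers": {**req["headers"], "x-cost-center": req["team"]}}


def run_pipeline(req: Request, steps: list[Step]) -> Optional[Request]:
    """Phase 1 is the proxy itself; each later phase is appended as a step."""
    for step in steps:
        req = step(req)
        if req is None:
            return None
    return req


request = {"headers": {"api-key": "key-search-team"}, "model": "chat-default"}
print(run_pipeline(request, [validate_auth, route_model, tag_cost]))
```

Each step returns a new dictionary rather than mutating its input, so phases can be added or reordered one at a time, mirroring the graduated rollout described above.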

Teams adopting this graduated approach maintain innovation velocity while systematically reducing operational risk, which is the hallmark of sustainable AI scaling.
