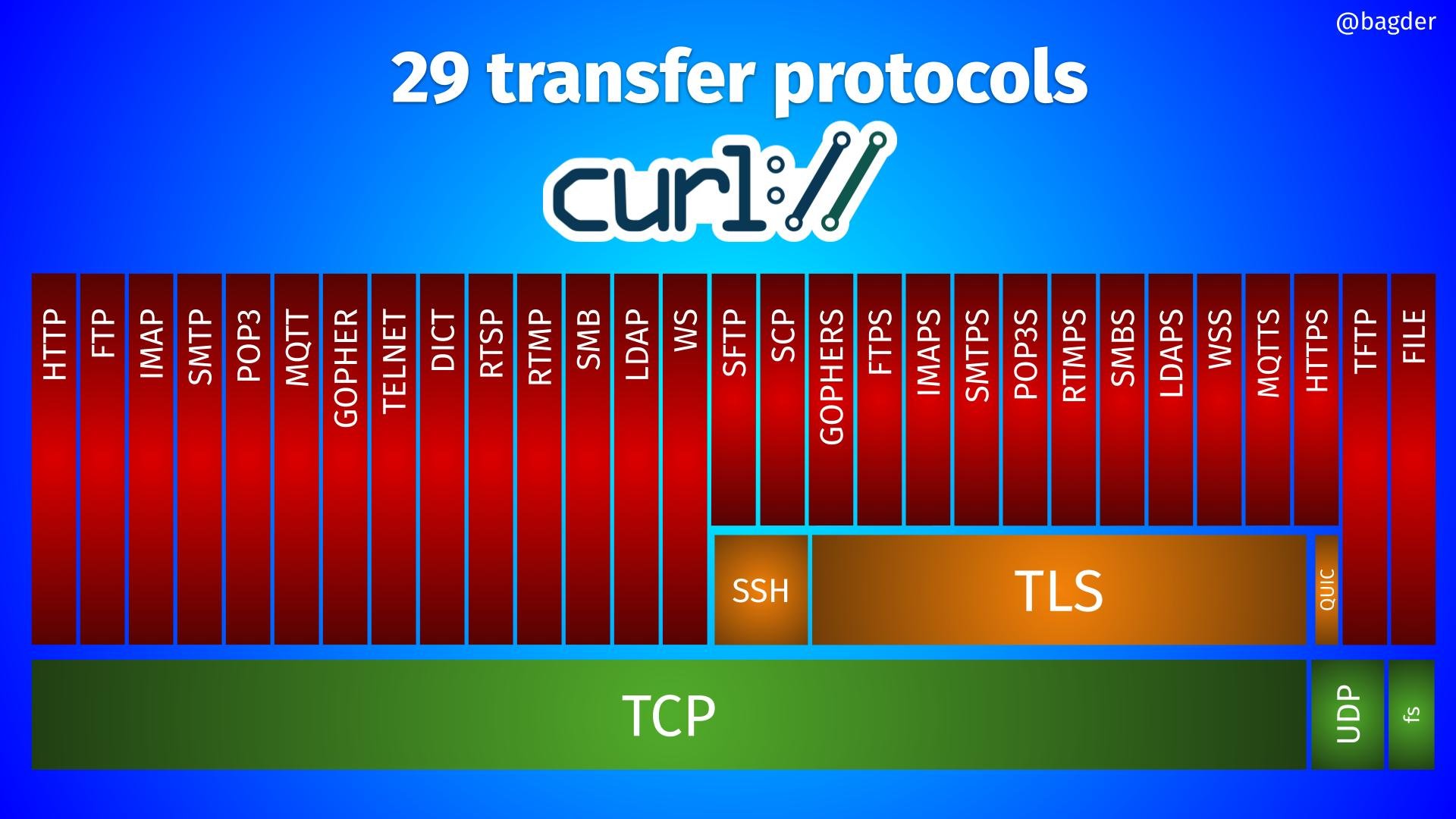

An analysis of how curl maintains memory efficiency despite continuous feature development, examining structural changes and allocation patterns over five years.

In software development, resource consumption tends to grow gradually and go unnoticed until it becomes a critical problem. Daniel Stenberg's examination of libcurl's memory usage reveals a conscious, ongoing effort to balance feature expansion with resource constraints in one of the world's most widely deployed networking libraries. This case study shows how deliberate architectural choices and monitoring mechanisms can prevent the slow creep of memory bloat.

Structuring the Battle Against Bloat

The curl team implemented a proactive safeguard in 2025: test case 3214 establishes fixed upper limits for fifteen critical struct sizes. This mechanism acts as an early warning system, requiring developers to consciously justify any structural expansion rather than allowing accidental growth through routine development. Such institutionalized constraints represent mature software stewardship, acknowledging that without explicit guardrails, entropy inevitably increases resource consumption.
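The idea behind such a size guard can be illustrated with a minimal sketch. curl's actual test is written against its C structs; the Python below only mimics the logic. The limits in `SIZE_LIMITS` are hypothetical values chosen for illustration, not the limits used by test 3214, and the measured sizes are the current struct sizes reported later in this article.

```python
# Hypothetical size budgets in bytes. These are illustrative assumptions,
# NOT the actual limits enforced by curl's test case 3214.
SIZE_LIMITS = {
    "multi handle": 850,
    "easy handle": 5400,
    "connectdata": 1000,
}

# Current struct sizes in bytes, as reported in this article.
MEASURED = {
    "multi handle": 816,
    "easy handle": 5352,
    "connectdata": 912,
}


def over_budget(measured, limits):
    """Return {name: (size, limit)} for every struct larger than its limit."""
    return {
        name: (size, limits[name])
        for name, size in measured.items()
        if size > limits[name]
    }


violations = over_budget(MEASURED, SIZE_LIMITS)
if violations:
    for name, (size, limit) in violations.items():
        print(f"FAIL: {name} is {size} bytes, limit is {limit}")
else:
    print("OK: all structs are within their size budgets")
```

With a check like this in the test suite, growing a struct past its budget fails the build, so the limit has to be raised deliberately rather than drifting upward unnoticed.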

The Five-Year Memory Landscape

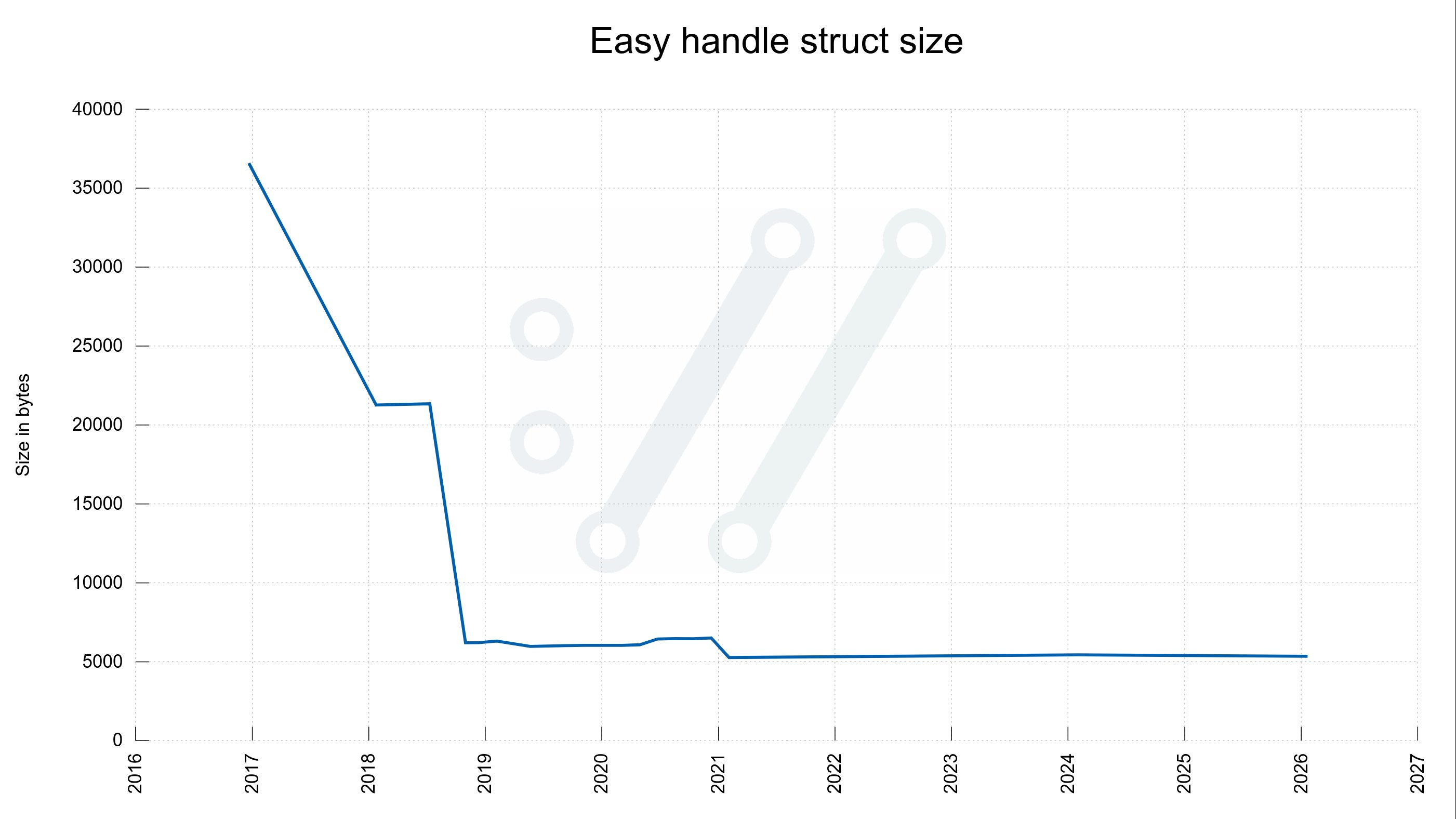

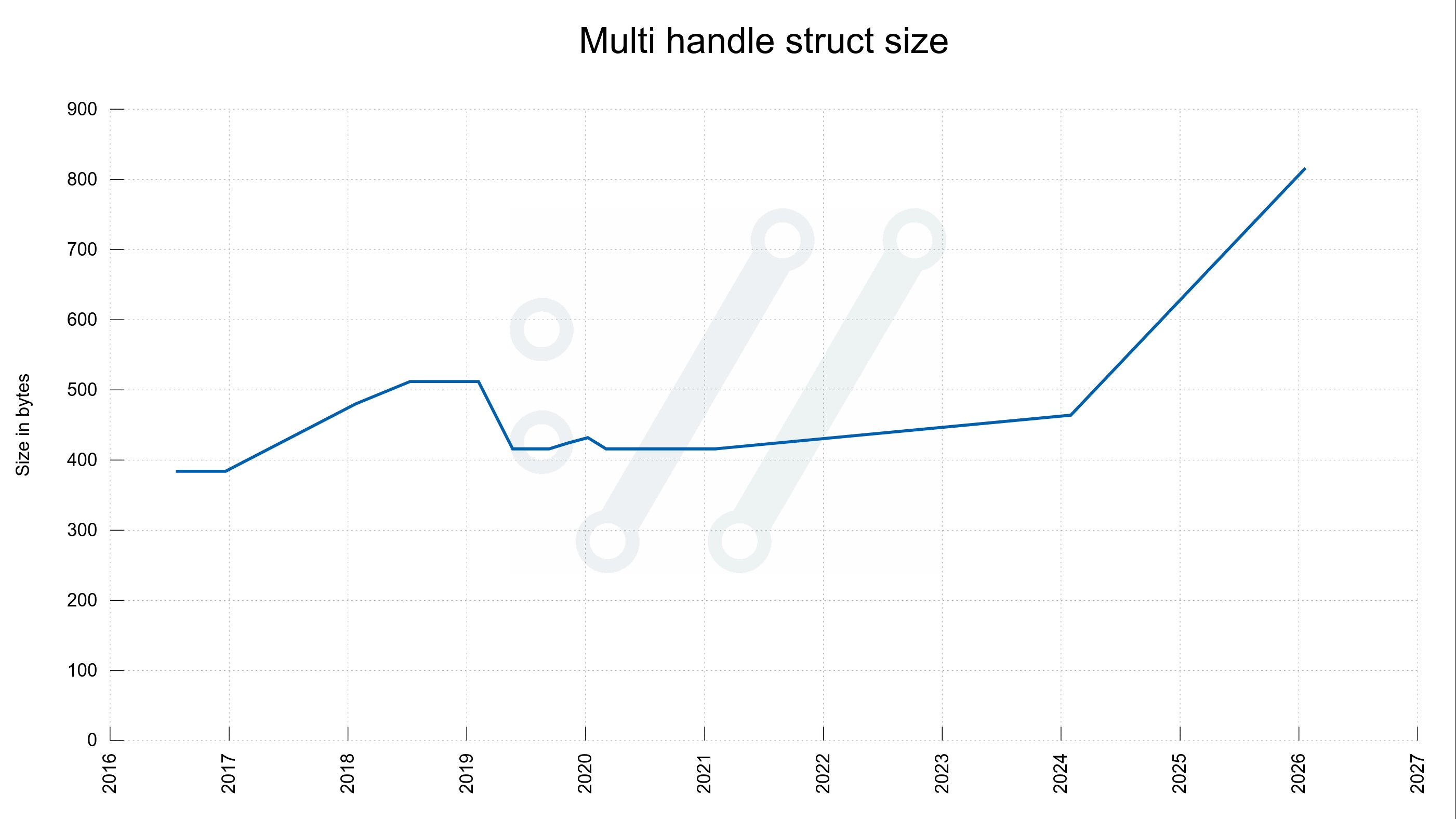

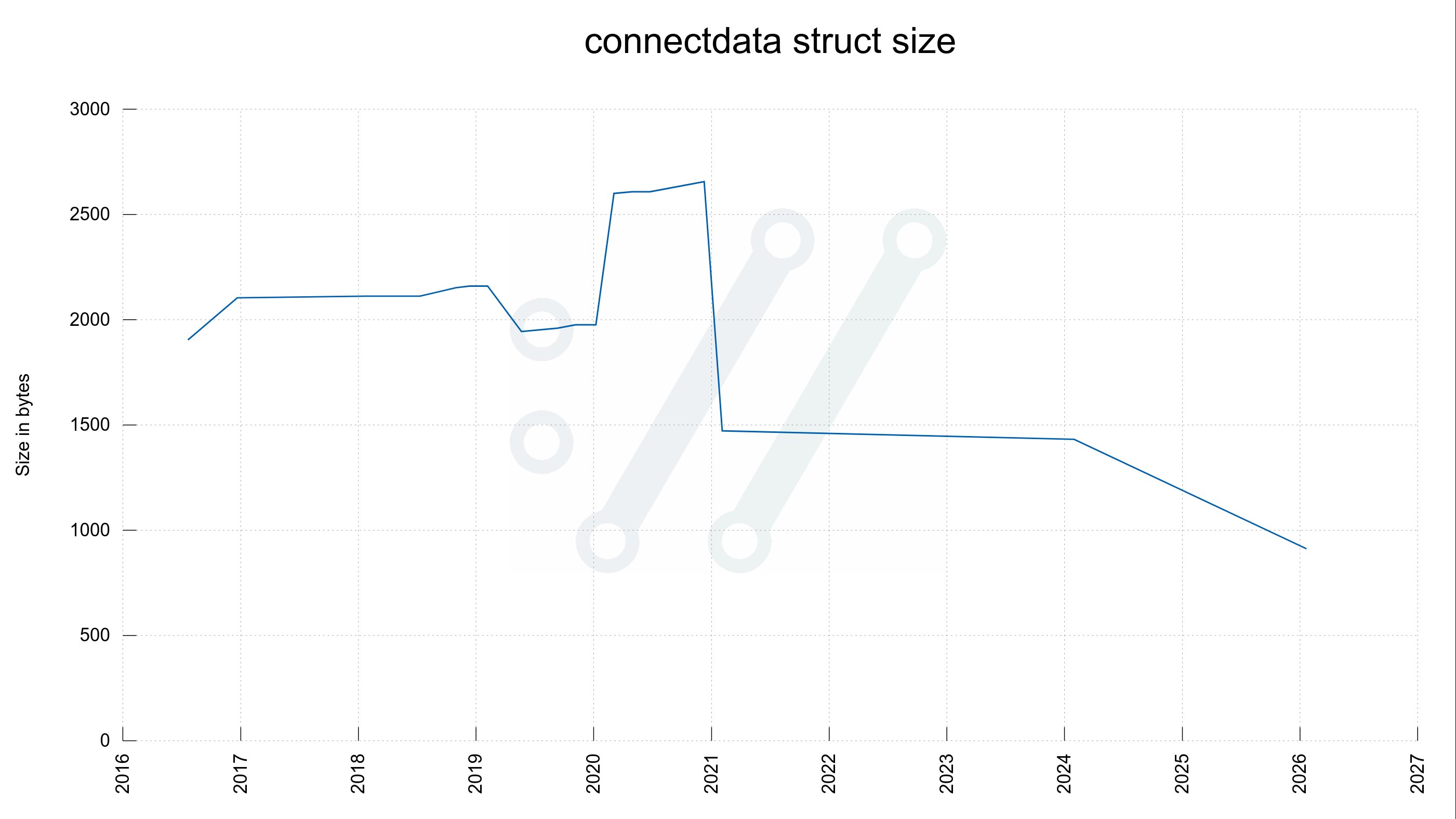

Comparative analysis between curl 7.75.0 (2021) and current development versions reveals nuanced structural evolution. Three core components show divergent trajectories:

- Multi handle: Grew from 416 to 816 bytes

- Easy handle: Modest increase from 5272 to 5352 bytes

- Connectdata: Significant reduction from 1472 to 912 bytes

The net effect is a clear reduction. A hypothetical setup with one multi handle, 10 parallel transfers (easy handles) and 20 connections now needs 72,576 bytes for these three structs, down from 82,576 bytes in 7.75.0: exactly 10,000 bytes less than five years ago. This improvement occurs despite substantial feature additions including HTTP/3 support, improved HTTP/2 multiplexing, and enhanced security capabilities. The connectdata struct's shrinkage illustrates successful data structure optimization, where responsibilities were redistributed more efficiently across the architecture.
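The totals follow directly from the three struct sizes listed above. The short sketch below reproduces the arithmetic, assuming the scenario uses a single multi handle; the function and dictionary names are made up for this example.

```python
# Struct sizes in bytes, taken from the list above.
SIZES_2021 = {"multi": 416, "easy": 5272, "conn": 1472}  # curl 7.75.0
SIZES_NOW = {"multi": 816, "easy": 5352, "conn": 912}    # current development


def footprint(sizes, transfers=10, connections=20, multis=1):
    """Bytes used by the core structs for the given number of handles."""
    return (
        multis * sizes["multi"]
        + transfers * sizes["easy"]
        + connections * sizes["conn"]
    )


before = footprint(SIZES_2021)
after = footprint(SIZES_NOW)

print(before)          # 82576
print(after)           # 72576
print(before - after)  # 10000
```

The multi handle nearly doubled, but there is only one of it, while the 560 bytes saved per connection are multiplied by 20. That is why the connectdata reduction dominates the result.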

Allocation Patterns Under Microscope

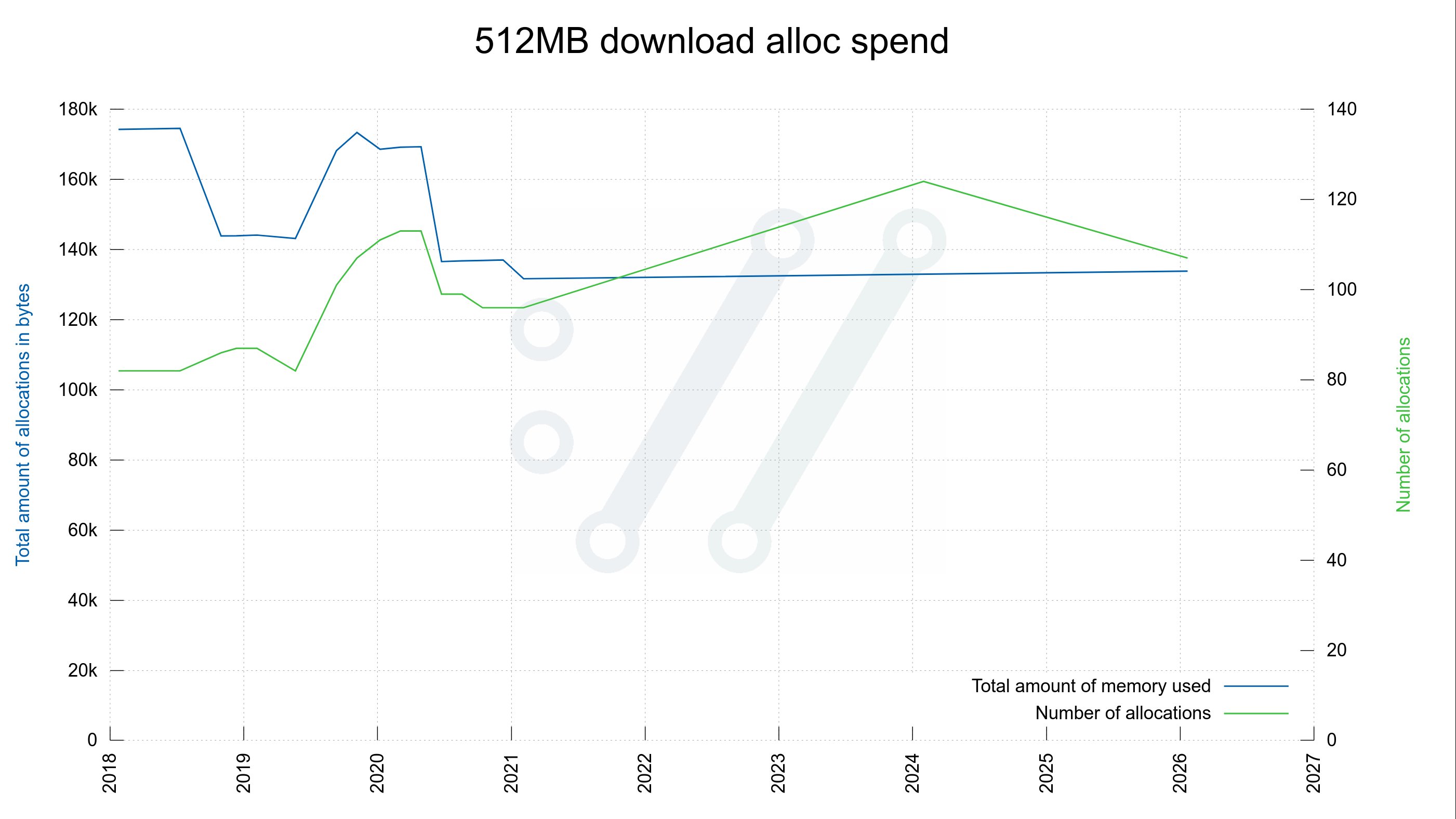

Benchmarking a 512MB HTTP download reveals subtle shifts in memory behavior:

| Metric | 2021 (7.75.0) | 2026 (dev) | Change |
|---|---|---|---|
| Allocations | 96 | 107 | +11% |
| Peak memory | 131,680 bytes | 133,856 bytes | +1.6% |

While the number of allocation calls increased moderately, peak memory remains nearly the same, a testament to careful memory management practices. Parallel stress testing (20 concurrent downloads) shows linear scaling in allocation count but sublinear memory growth, suggesting efficient reuse of connection resources.
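For readers who want to check the relative changes in the single-download benchmark, here is a small sketch of the calculation using the table's raw numbers. The helper name `pct_change` is just for this example.

```python
def pct_change(old, new):
    """Relative change from old to new, in percent."""
    return (new - old) / old * 100.0


# (2021 value, current value) from the benchmark table above.
ALLOCATIONS = (96, 107)
PEAK_BYTES = (131_680, 133_856)

alloc_change = pct_change(*ALLOCATIONS)
peak_change = pct_change(*PEAK_BYTES)
extra_bytes = PEAK_BYTES[1] - PEAK_BYTES[0]

print(f"allocations: +{alloc_change:.2f}%")                       # +11.46%
print(f"peak memory: +{peak_change:.2f}% ({extra_bytes} bytes)")  # +1.65% (2176 bytes)
```

In absolute terms the peak grew by 2,176 bytes across eleven additional allocations, which matches the table's approximate percentages.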

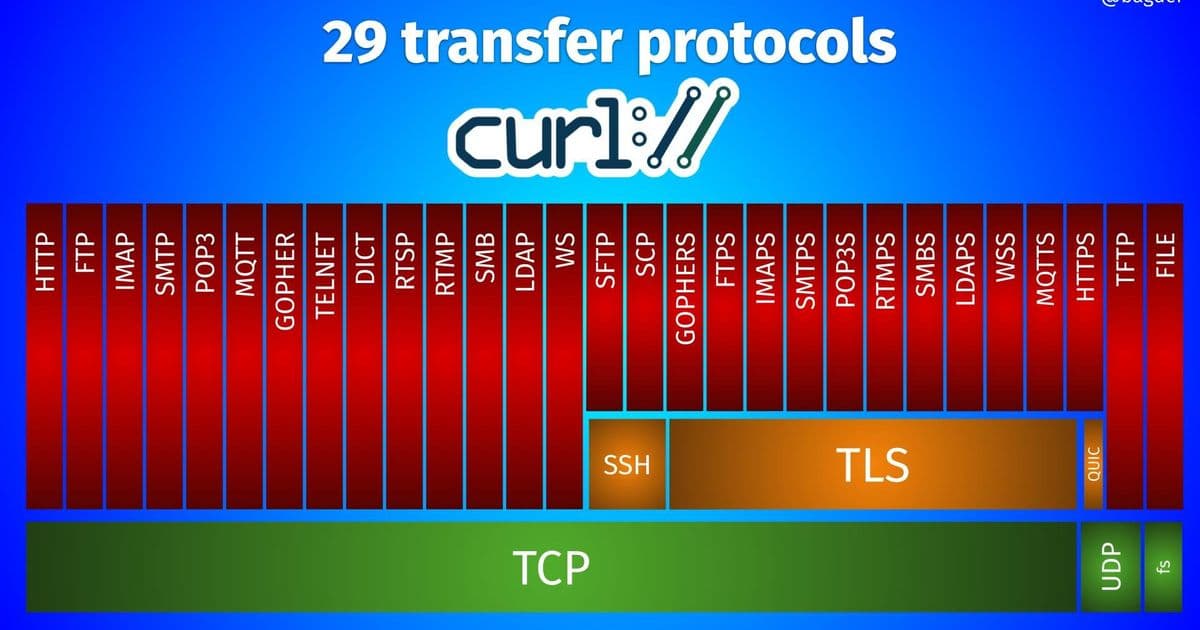

The TLS Wildcard

Stenberg rightly notes the analysis focuses on plain HTTP, acknowledging that TLS operations introduce external memory factors. OpenSSL and other cryptographic libraries operate outside curl's memory jurisdiction, creating variable overhead that can dwarf curl's internal allocations. This delineation of responsibility highlights the ecosystem nature of modern software, where optimization efforts must recognize component boundaries.

Philosophical Implications

This case exemplifies sustainable software evolution. The 1.6% increase in peak memory for the single-download benchmark over five years is a very favorable exchange rate for the functionality added in that time. More significantly, it demonstrates how:

- Quantitative benchmarks prevent subjective degradation assessments

- Structural decomposition enables targeted optimization

- Institutionalized checks counteract entropy

In an era where developers often prioritize velocity over efficiency, curl's approach offers a counter-model: continuous improvement without compromising core efficiency values. The project maintains compatibility with embedded systems while scaling to cloud environments, proving that resource consciousness needn't impede capability expansion.

The slight increase in allocation count warrants monitoring but doesn't undermine curl's achievement. As Stenberg concludes: "We offer more features and better performance than ever, but keep memory spend at a minimum." This statement captures the equilibrium all long-lived systems must maintain, a philosophy that extends well beyond networking libraries to the broader challenge of sustainable software evolution.
