A developer's attempt to optimize a Dart lexer uncovered an unexpected truth: processing thousands of small files incurs syscall overhead that dwarfs computational gains, which helps explain why package repositories such as pub.dev distribute packages as archives.

When optimizing performance, we instinctively focus on computational bottlenecks: algorithms, data structures, compiler optimizations. Yet a recent optimization effort shows how infrastructure costs can eclipse even large computational improvements. After the developer built an ARM64 assembly lexer that processed Dart code 2.17× faster than Dart's official scanner, the benchmark delivered a sobering result: the computational speedup barely mattered. Input/output consumed more than 80% of total processing time when scanning 104,000 files.

The Syscall Tax

The benchmark involved reading 1.13 GB of Dart source spread across 104,000 files. While the custom lexer processed the files in 2,807 ms versus the official scanner's 6,087 ms, disk I/O consumed 14,126 ms, roughly five times longer than lexing itself. Despite an NVMe SSD rated for 5-7 GB/s, actual read throughput plateaued at about 80 MB/s (1.13 GB in 14.1 s). The culprit wasn't the storage hardware but operating system overhead: each file required open(), read(), and close() syscalls, about 312,000 system calls in total (3 × 104,000). Each syscall costs roughly 1-5 microseconds for the transition between user and kernel space, on top of the filesystem metadata work the kernel performs for every file. Cumulatively, this "syscall tax" consumed seconds before any substantive work occurred.
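To make the per-file pattern concrete, here is a minimal Python sketch, assuming a plain POSIX-style read loop (the function names `read_files_individually` and `estimate_syscall_tax_seconds` are hypothetical, not from the original benchmark). It issues the open/read/close sequence explicitly and counts the calls, plus a back-of-envelope estimate of pure transition cost using the 1-5 µs range quoted above.

```python
import os


def read_files_individually(paths):
    """Read each file with explicit open/read/close calls and count them.

    Returns (total_bytes, call_count). Note that a read loop issues one
    extra read() that returns b"" to detect EOF, so a small non-empty file
    costs about four calls (open, read, EOF read, close), not three.
    """
    total_bytes = 0
    calls = 0
    for path in paths:
        fd = os.open(path, os.O_RDONLY)  # open() syscall
        calls += 1
        try:
            while True:
                chunk = os.read(fd, 1 << 20)  # read() in 1 MiB chunks
                calls += 1
                if not chunk:  # empty result signals end of file
                    break
                total_bytes += len(chunk)
        finally:
            os.close(fd)  # close() syscall
            calls += 1
    return total_bytes, calls


def estimate_syscall_tax_seconds(n_files, syscalls_per_file, cost_us):
    """Back-of-envelope time spent only on user/kernel transitions."""
    return n_files * syscalls_per_file * cost_us / 1e6


# Pure transition cost for 104,000 files x 3 calls per file:
#   estimate_syscall_tax_seconds(104_000, 3, 1)  -> 0.312 s
#   estimate_syscall_tax_seconds(104_000, 3, 5)  -> 1.56 s
```

Even at the pessimistic 5 µs per call, the transitions alone come to about 1.6 s, well short of the 14 s measured. That gap suggests, though the benchmark does not break it down, that most of the per-file cost lies in the work done inside those calls (path lookup, metadata, many small reads) rather than in the mode switch itself.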

Archives as Antidote

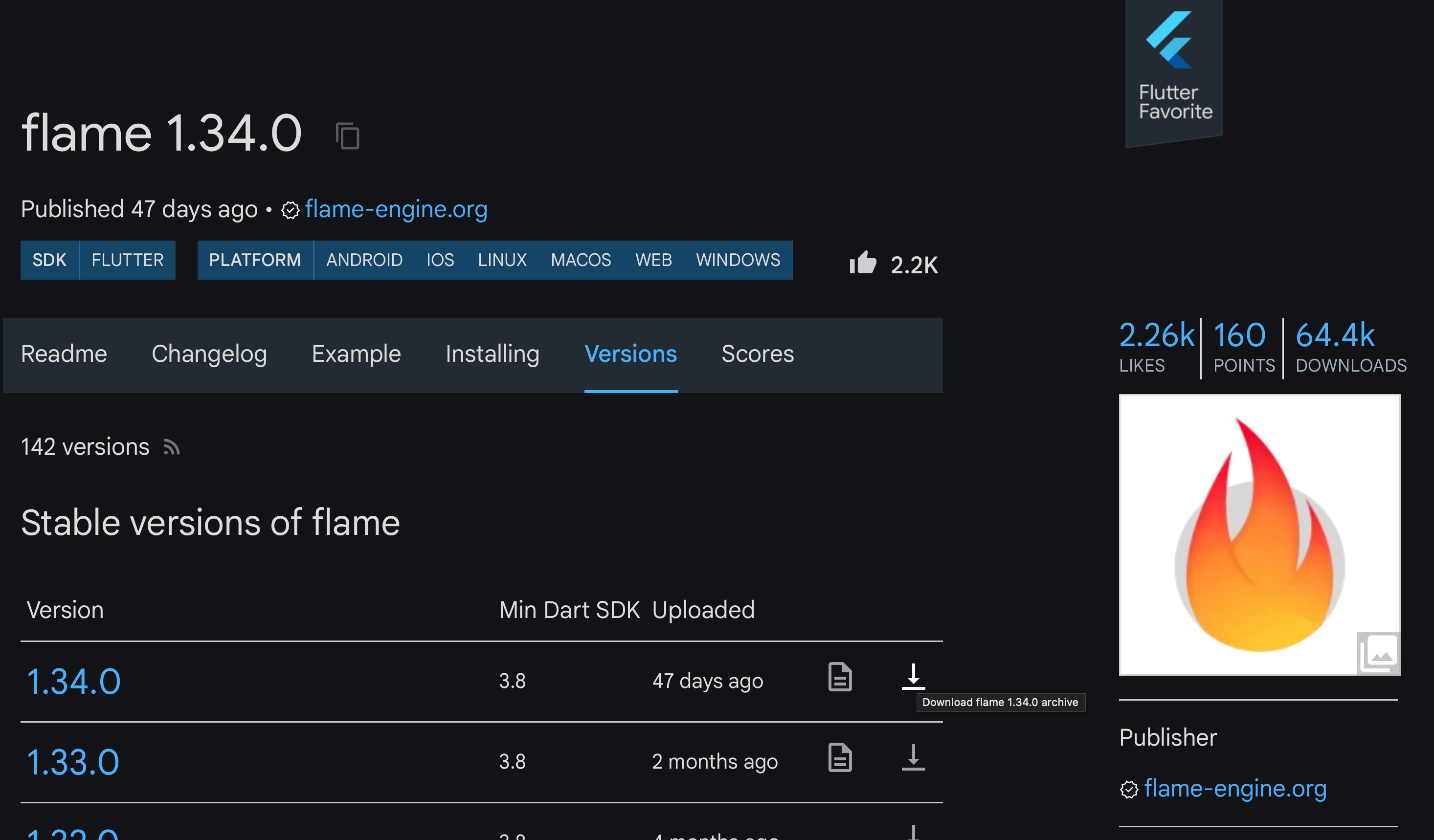

The observation that pub.dev distributes packages as tar.gz archives inspired an experiment: repackaging the 104,000 files into 1,351 compressed archives. The results were transformative:

| Metric | Individual Files | tar.gz Archives | Improvement |
|---|---|---|---|
| I/O Time | 14,525 ms | 339 ms | 42.85× |
| Data on Disk | 1.13 GB | 169 MB | 6.66× compression |
| Total Time | 17,493 ms | 7,713 ms | 2.27× |

Compression reduced the bytes read from disk by about 85%, but the dominant gain came from cutting the syscall count from over 300,000 to roughly 4,000 (3 × 1,351 archives). Large sequential archive reads made better use of the SSD while minimizing transitions between user and kernel space. Although gzip decompression added 4,507 ms, total time still fell by more than half (2.27×).
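The archive approach can be sketched in Python with the standard `tarfile` module. This is an illustration under stated assumptions, not the benchmark's actual tooling: `pack_per_directory` and `read_archive_members` are hypothetical names, and grouping one archive per top-level directory (for example, one per package) is an assumption, since the article only reports the final count of 1,351 archives.

```python
import tarfile
from pathlib import Path


def pack_per_directory(root, out_dir):
    """Write one .tar.gz per top-level subdirectory of `root`.

    Grouping by top-level directory is an illustrative assumption.
    Returns the list of archive paths created, sorted by name.
    """
    root, out_dir = Path(root), Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    archives = []
    for pkg_dir in sorted(p for p in root.iterdir() if p.is_dir()):
        archive_path = out_dir / f"{pkg_dir.name}.tar.gz"
        with tarfile.open(archive_path, mode="w:gz") as tar:
            # tar.add recurses into subdirectories; arcname keeps member
            # paths relative to the package root.
            tar.add(pkg_dir, arcname=pkg_dir.name)
        archives.append(archive_path)
    return archives


def read_archive_members(archive_path):
    """Yield (member_name, contents) for each regular file in a .tar.gz.

    The archive is opened once and read front to back, so the kernel sees
    a comparatively small number of sequential reads instead of an
    open()/read()/close() triple per source file. Gzip decompression
    runs in user space.
    """
    with tarfile.open(archive_path, mode="r:gz") as tar:
        for member in tar:
            if not member.isfile():  # skip directory entries
                continue
            f = tar.extractfile(member)
            if f is not None:
                yield member.name, f.read()
```

A lexer would consume the `(name, bytes)` pairs directly from memory. The trade-off matches the measurements above: far fewer file opens in exchange for CPU time spent on decompression, which is why I/O time collapsed while gzip added several seconds of its own.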
