As AI agents gain access to our email accounts, security experts warn of a dangerous combination of untrusted content, sensitive information, and external communication that creates unprecedented vulnerabilities.

The promise of AI assistants handling our overflowing inboxes is undeniably appealing. Imagine an intelligent agent that reads all your emails, decides which ones to ignore, drafts responses for your approval, and even manages your calendar autonomously. For anyone drowning in digital communication, this sounds like salvation.

But beneath this convenience lies a security nightmare that's already unfolding.

The Lethal Trifecta of Agentic Email

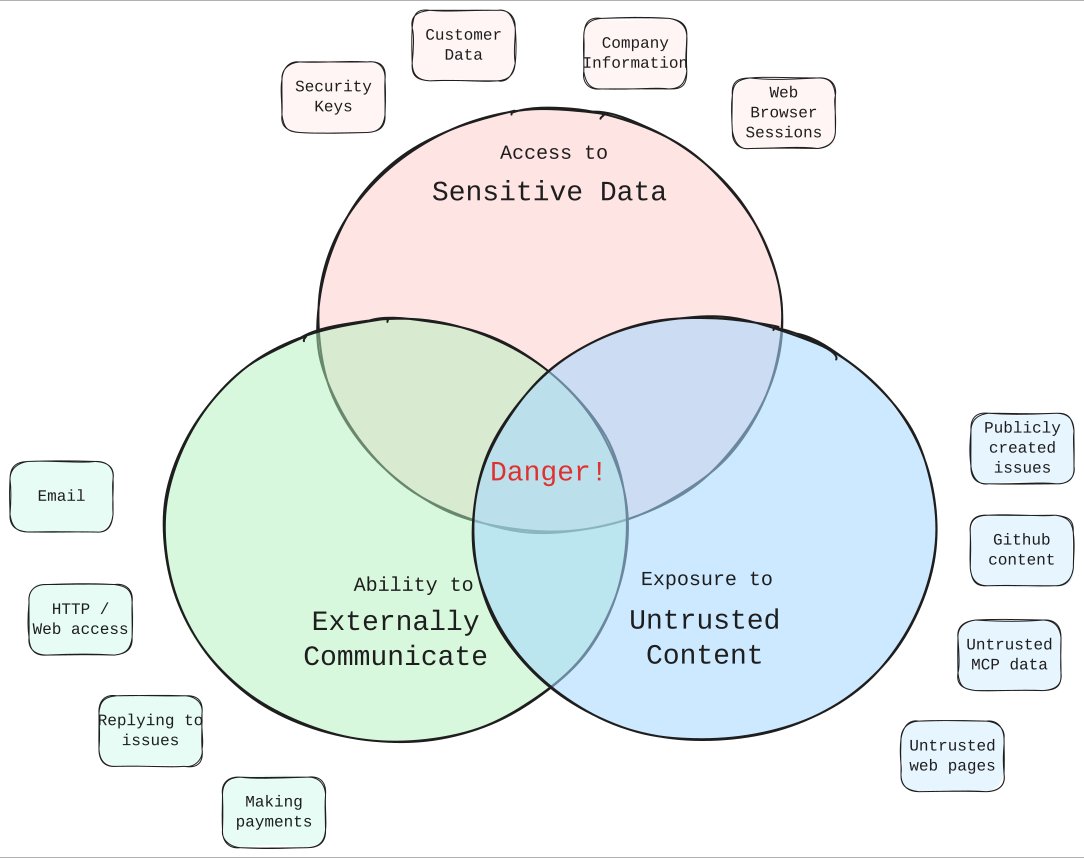

When you give an AI agent direct access to your email account, you're creating what security experts call "The Lethal Trifecta": untrusted content, sensitive information, and external communication all converging in one vulnerable system.
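To make the trifecta concrete, here is a minimal Python sketch that checks whether an agent's configuration combines all three elements. The `AgentCapabilities` class and its field names are hypothetical, invented for illustration; they don't correspond to any real agent framework.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentCapabilities:
    """Hypothetical description of what an email agent is allowed to do."""
    reads_untrusted_content: bool   # e.g. processes inbound mail from anyone
    accesses_sensitive_data: bool   # e.g. can read the full mailbox
    communicates_externally: bool   # e.g. can send mail or make web requests


def trifecta_elements(caps: AgentCapabilities) -> int:
    """Count how many of the three risk elements are present."""
    return sum([
        caps.reads_untrusted_content,
        caps.accesses_sensitive_data,
        caps.communicates_externally,
    ])


def has_lethal_trifecta(caps: AgentCapabilities) -> bool:
    """True only when all three elements converge in one agent."""
    return trifecta_elements(caps) == 3


# An agent with full mailbox access and the ability to send mail.
full_access = AgentCapabilities(True, True, True)
# The same agent with its outbound channel removed.
boxed = AgentCapabilities(True, True, False)
```

A check like this proves nothing about safety on its own, but it forces the question of which of the three elements a deployment could give up.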

Email isn't just another communication tool—it's the central nervous system of our digital lives. It contains everything from casual conversations to financial statements, password resets to legal documents. An agent with email access has unprecedented context about your life, work, and relationships.

The problem is compounded by what we know about current AI systems: they're surprisingly gullible. A language-model agent has no reliable way to tell its owner's instructions apart from instructions embedded in the content it reads, so it can be tricked into revealing sensitive information, executing harmful actions, or becoming a vector for attacks that bypass traditional security measures.

Real-World Scenarios That Keep Security Experts Awake

Consider this scenario: an attacker crafts an email that appears to come from you, instructing your agent to forward all password reset emails to a different address. The agent, following instructions, complies—and suddenly the attacker has control of multiple accounts.

Or imagine a sophisticated phishing attack that exploits the agent's trust in email content. A human might spot the suspicious patterns, but an agent can be manipulated by carefully crafted text that reads like a legitimate instruction.

What makes this particularly concerning is that we're hearing reports of very senior and powerful individuals implementing these systems without fully understanding the risks. When high-profile targets are compromised, the consequences ripple far beyond individual inconvenience.

The Password Reset Vulnerability

One of the most alarming attack vectors involves email-based password reset workflows. Since many services use email as the primary method for account recovery, compromising an agent's email access can lead to a cascade of account takeovers.

An attacker could send an email that appears to be from the legitimate user, claiming they've forgotten their password and need the reset emails forwarded. The agent, lacking the human judgment to recognize social engineering, might execute this request without question.
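One way to blunt this attack is to put a policy check between the agent and its forwarding tool, so the decision doesn't rest on the agent's judgment. The Python sketch below is illustrative only: `ForwardRequest`, `allow_forward`, and the keyword pattern are assumptions made for this example, and a real deployment would need a far more robust classifier than a regular expression.

```python
import re
from dataclasses import dataclass

# Assumed keyword pattern for account-recovery mail; deliberately simplistic.
RESET_PATTERN = re.compile(
    r"(password\s+reset|reset\s+your\s+password|verification\s+code)",
    re.IGNORECASE,
)


@dataclass(frozen=True)
class ForwardRequest:
    """Hypothetical record of a forward the agent wants to perform."""
    subject: str
    body: str
    destination: str


def is_account_recovery(subject: str, body: str) -> bool:
    """Crude check for password-reset or verification mail."""
    return bool(RESET_PATTERN.search(subject) or RESET_PATTERN.search(body))


def allow_forward(req: ForwardRequest, trusted_destinations: set[str]) -> bool:
    """Deny account-recovery mail outright, and any forward to an unlisted address.

    trusted_destinations is expected to contain lowercase addresses.
    """
    if is_account_recovery(req.subject, req.body):
        return False
    return req.destination.lower() in trusted_destinations
```

Note that the check never looks at who the requesting email claims to be from. A sender name can be forged, so the guard decides only on what is being forwarded and where it is going.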

A Safer Alternative: The Read-Only Box

Some security-conscious users are implementing a more cautious approach. By placing the agent in a "box" with only read-only access to emails and no internet connectivity, they significantly reduce the attack surface.

In this configuration, the agent can still analyze emails and draft responses, but it cannot execute actions directly. Instead, it outputs its work to a text file for human review. This eliminates the external communication vector while preserving much of the analytical benefit.
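The shape of such a box can be sketched in a few lines of Python. Everything here is hypothetical: `ReadOnlyMailbox`, `Message`, and `write_drafts_for_review` are names made up for this example, and `draft_reply` stands in for whatever model call produces a draft. The code also only restricts what the agent's own interface offers; cutting off internet access has to be enforced separately, at the operating system or container level.

```python
from dataclasses import dataclass
from pathlib import Path
from typing import Callable, Iterable, Iterator


@dataclass(frozen=True)
class Message:
    sender: str
    subject: str
    body: str


class ReadOnlyMailbox:
    """Exposes messages for reading; deliberately has no send, delete, or forward method."""

    def __init__(self, messages: Iterable[Message]) -> None:
        self._messages = tuple(messages)  # immutable snapshot of the inbox

    def __iter__(self) -> Iterator[Message]:
        return iter(self._messages)


def write_drafts_for_review(
    mailbox: ReadOnlyMailbox,
    draft_reply: Callable[[Message], str],
    out_path: Path,
) -> int:
    """Draft a reply for each message and write all drafts to a text file.

    Nothing is sent. A human reads out_path and decides what to do with it.
    Returns the number of drafts written.
    """
    count = 0
    with out_path.open("w", encoding="utf-8") as fh:
        for msg in mailbox:
            fh.write(f"To: {msg.sender}\nRe: {msg.subject}\n\n{draft_reply(msg)}\n---\n")
            count += 1
    return count
```

The design choice is that the dangerous operations don't exist in the interface at all, rather than existing behind a rule the agent is asked to follow.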

This approach removes external communication from the trifecta, leaving only two elements: untrusted content and sensitive information. That is still risky, but no longer in the "danger zone." It's a classic security trade-off: reduced capability for significantly improved safety.

The False Sense of Security

Perhaps most concerning is the complacency that comes from not yet having seen a major security breach from agentic email systems. Just because attackers don't appear to be exploiting these vulnerabilities today doesn't mean they won't tomorrow.

Security researchers worry that we're being lulled into a false sense of security, waiting for the inevitable moment when sophisticated attackers realize the goldmine they've been handed.

The Responsibility of Implementation

Anyone considering agentic email needs to approach it with eyes wide open. The convenience of automated email management must be weighed against the very real possibility of security breaches that could compromise not just your data, but your entire digital identity.

The technology is advancing faster than our understanding of its security implications. What seems like a helpful assistant today could become tomorrow's security disaster.

Looking Forward

As AI agents become more capable and more integrated into our daily workflows, we need to develop security frameworks that account for their unique vulnerabilities. The traditional security models designed for human users don't adequately address the risks of autonomous agents with access to sensitive systems.

Until we have more robust security measures specifically designed for agentic systems, the safest approach may be to keep our most sensitive communications firmly in human hands—at least until we can trust our digital assistants as much as we trust ourselves.

For those who do proceed with agentic email, strict access controls, monitoring of agent behavior, and human oversight for sensitive actions aren't just good practice; they're essential for survival in an increasingly hostile digital landscape.
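As a rough illustration of how monitoring and human oversight might fit together, the Python sketch below logs every action the agent proposes and runs sensitive ones only after a person approves. The action names in `SENSITIVE_ACTIONS`, the `ProposedAction` type, and the `approve` callback are all assumptions made for this example, not part of any particular agent product.

```python
import logging
from dataclasses import dataclass
from typing import Callable

logger = logging.getLogger("agent_audit")

# Assumed set of action names treated as sensitive; adjust to the agent's real tools.
SENSITIVE_ACTIONS = frozenset({"send", "forward", "delete", "create_filter"})


@dataclass(frozen=True)
class ProposedAction:
    name: str
    detail: str


def execute_with_oversight(
    action: ProposedAction,
    run: Callable[[ProposedAction], None],
    approve: Callable[[ProposedAction], bool],
) -> bool:
    """Log every proposed action; run sensitive ones only after human approval.

    Returns True if the action was executed, False if it was denied.
    """
    logger.info("proposed action=%s detail=%s", action.name, action.detail)
    if action.name in SENSITIVE_ACTIONS and not approve(action):
        logger.warning("denied action=%s", action.name)
        return False
    run(action)
    logger.info("executed action=%s", action.name)
    return True
```

In practice `approve` would prompt a person, for example through a confirmation dialog, and the audit log would be stored somewhere the agent itself cannot modify.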
