On January 22, 2026, an automated routing policy configuration error at Cloudflare's Miami data center caused an IPv6 route leak that lasted 25 minutes, impacting both Cloudflare customers and external networks. The incident reveals how even sophisticated network automation can produce unexpected failures when policy logic interacts with BGP's complex routing rules.

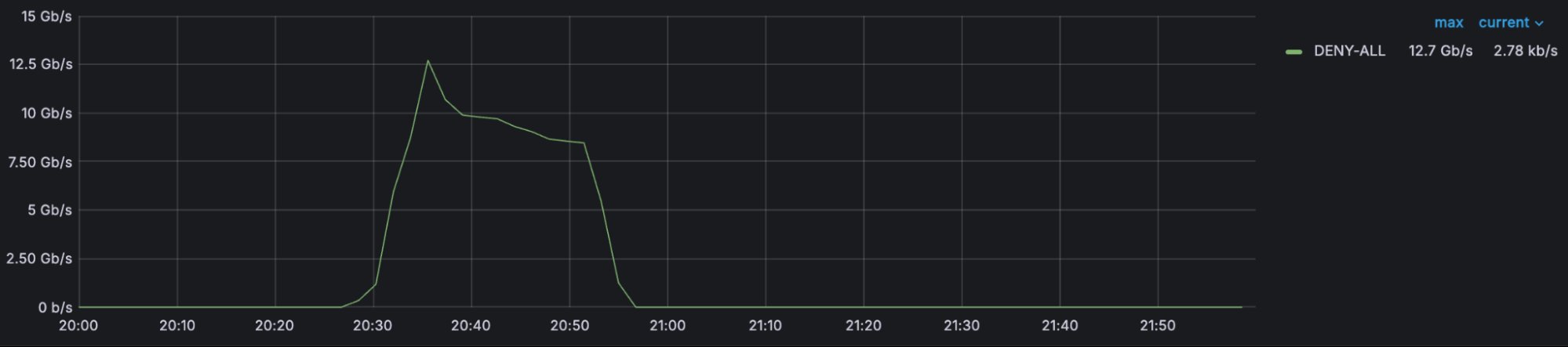

On January 22, 2026, at 20:25 UTC, a seemingly routine configuration change at Cloudflare's Miami data center triggered an unexpected cascade of BGP route advertisements. The change, intended to remove IPv6 prefixes for a Bogotá data center from various routing policies, instead left a policy that was too permissive, and Cloudflare inadvertently advertised internal IPv6 routes to transit providers and peers. The route leak lasted 25 minutes. It congested backbone links between Miami and Atlanta, which raised packet loss and latency for some Cloudflare customer traffic. In addition, firewall filters on Cloudflare's routers discarded approximately 12 Gbps of traffic that entered the Miami router destined for non-downstream prefixes.

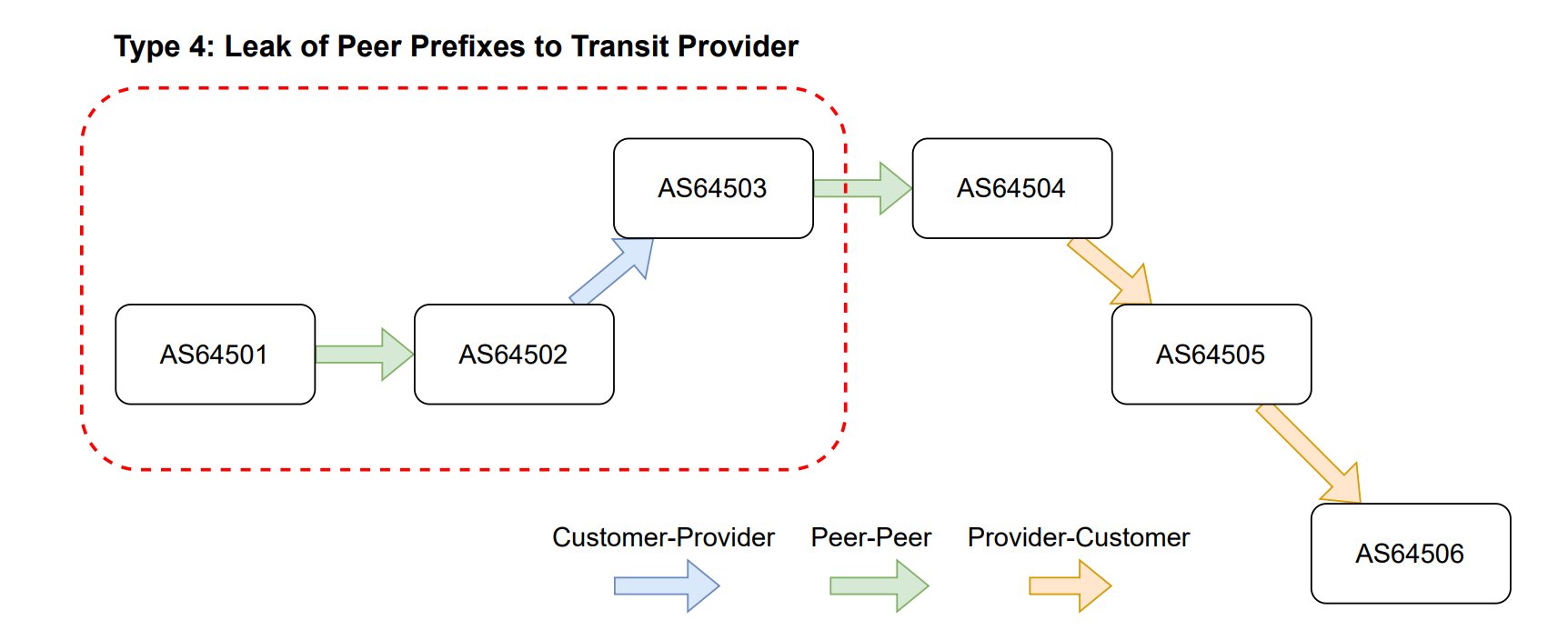

This incident is a rare case in which Cloudflare caused a route leak itself rather than reporting on someone else's. The company has written extensively about route leaks and maintains a public record of such events on Cloudflare Radar. Under the definitions in RFC 7908, the incident was a mixture of Type 3 and Type 4 route leaks: routes received from peers were redistributed to other peers and to upstream providers, violating the valley-free principle that governs which routes a network may re-advertise to which neighbors.

The Configuration Error

The problematic change was part of Cloudflare's network automation system, designed to manage BGP policies across their infrastructure. The specific modification aimed to remove IPv6 prefixes associated with their Bogotá data center (BOG04) from multiple export policies. The diff showed deletions across several policy statements including those for transit providers like Cogent, Level3, and Telia, as well as peer policies.

The change appeared straightforward, since it only removed a prefix list from several policies, but it had an unintended consequence: after the deletions, the remaining policy terms became too permissive. Specifically, the policy statement 6-TELIA-ACCEPT-EXPORT contained a term that matched routes of type "internal" and accepted them for export. In Juniper's JunOS and JunOS EVO operating systems, the route-type internal match criterion matches any non-external route, which includes routes learned over internal BGP (IBGP).

This subtle semantic difference in the routing policy language proved critical. The policy, now stripped of its specific prefix list, defaulted to matching all internal-type routes and accepting them for advertisement. Consequently, all IPv6 prefixes that Cloudflare redistributes internally across its backbone were suddenly eligible for external advertisement to BGP neighbors in Miami.
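The effect of removing one match condition can be illustrated with a small model. This is a minimal sketch of first-match policy evaluation, not the JunOS engine; the `Route`, `Term`, and `evaluate` names, the term name, and the `2001:db8:` documentation prefixes are all hypothetical stand-ins.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Route:
    prefix: str
    route_type: str  # "internal" or "external"


@dataclass
class Term:
    name: str
    # None means "this condition is absent"; absent conditions do not restrict the match.
    prefix_list: Optional[frozenset] = None
    route_type: Optional[str] = None
    action: str = "accept"

    def matches(self, route: Route) -> bool:
        if self.prefix_list is not None and route.prefix not in self.prefix_list:
            return False
        if self.route_type is not None and route.route_type != self.route_type:
            return False
        return True


def evaluate(terms, route, default="reject"):
    """Return the action of the first matching term, else the default."""
    for term in terms:
        if term.matches(route):
            return term.action
    return default


# Hypothetical prefixes: one for the site being removed, one carried over IBGP.
site_prefix = "2001:db8:b06::/48"
backbone_route = Route("2001:db8:ffff::/48", "internal")

before = [Term("ADV-SITE", prefix_list=frozenset({site_prefix}), route_type="internal")]
# After the change the prefix-list condition is gone; only route-type remains.
after = [Term("ADV-SITE", prefix_list=None, route_type="internal")]

print(evaluate(before, backbone_route))
print(evaluate(after, backbone_route))
```

In this model the term that used to be scoped to one site now accepts every internal route, which mirrors the behavior described above.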

The Ripple Effect

When the automation pushed this configuration at 20:25 UTC, a series of unintended BGP updates were sent from Cloudflare's AS13335 to its peers and providers in Miami. These updates were captured by route collectors and can be analyzed using tools like monocle or RIPE BGPlay. The monocle output reveals the problematic AS paths, such as 64112 22850 174 3356 13335 32934 for prefix 2a03:2880:f077::/48.

This path shows that Cloudflare (AS13335) took a prefix received from Meta (AS32934)—a peer—and advertised it to Lumen (AS3356), an upstream transit provider. This violates BGP's valley-free routing rules, which dictate that routes learned from peers should only be readvertised to downstream (customer) networks, not laterally to other peers or upward to providers.
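The valley-free rule can be checked mechanically once the business relationships along a path are known. The sketch below is an illustration only: the `find_leaks` helper is hypothetical, and the relationship table contains just the two relationships stated above (Meta as a peer of Cloudflare, Lumen as a provider). Hops with unknown relationships are skipped rather than guessed.

```python
PEER, PROVIDER, CUSTOMER = "peer", "provider", "customer"


def find_leaks(as_path, role):
    """Find ASes that passed a peer- or provider-learned route to a peer or provider.

    as_path is in collector order (origin AS last).
    role[(a, b)] is what AS b is from AS a's point of view.
    """
    hops = list(reversed(as_path))  # origin first, in propagation order
    leaks = []
    for learned_from, asn, sent_to in zip(hops, hops[1:], hops[2:]):
        src = role.get((asn, learned_from))
        dst = role.get((asn, sent_to))
        if src in (PEER, PROVIDER) and dst in (PEER, PROVIDER):
            leaks.append((asn, learned_from, sent_to))
    return leaks


# AS path from the article for 2a03:2880:f077::/48.
path = [64112, 22850, 174, 3356, 13335, 32934]

# Only the relationships described in the text are filled in.
role = {
    (13335, 32934): PEER,      # Meta is a peer of Cloudflare
    (13335, 3356): PROVIDER,   # Lumen is a transit provider of Cloudflare
}

for asn, src, dst in find_leaks(path, role):
    print(f"AS{asn} leaked a route learned from AS{src} to AS{dst}")
```

A production detector would need a full relationship dataset; the point here is only that the leak is visible in the path itself.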

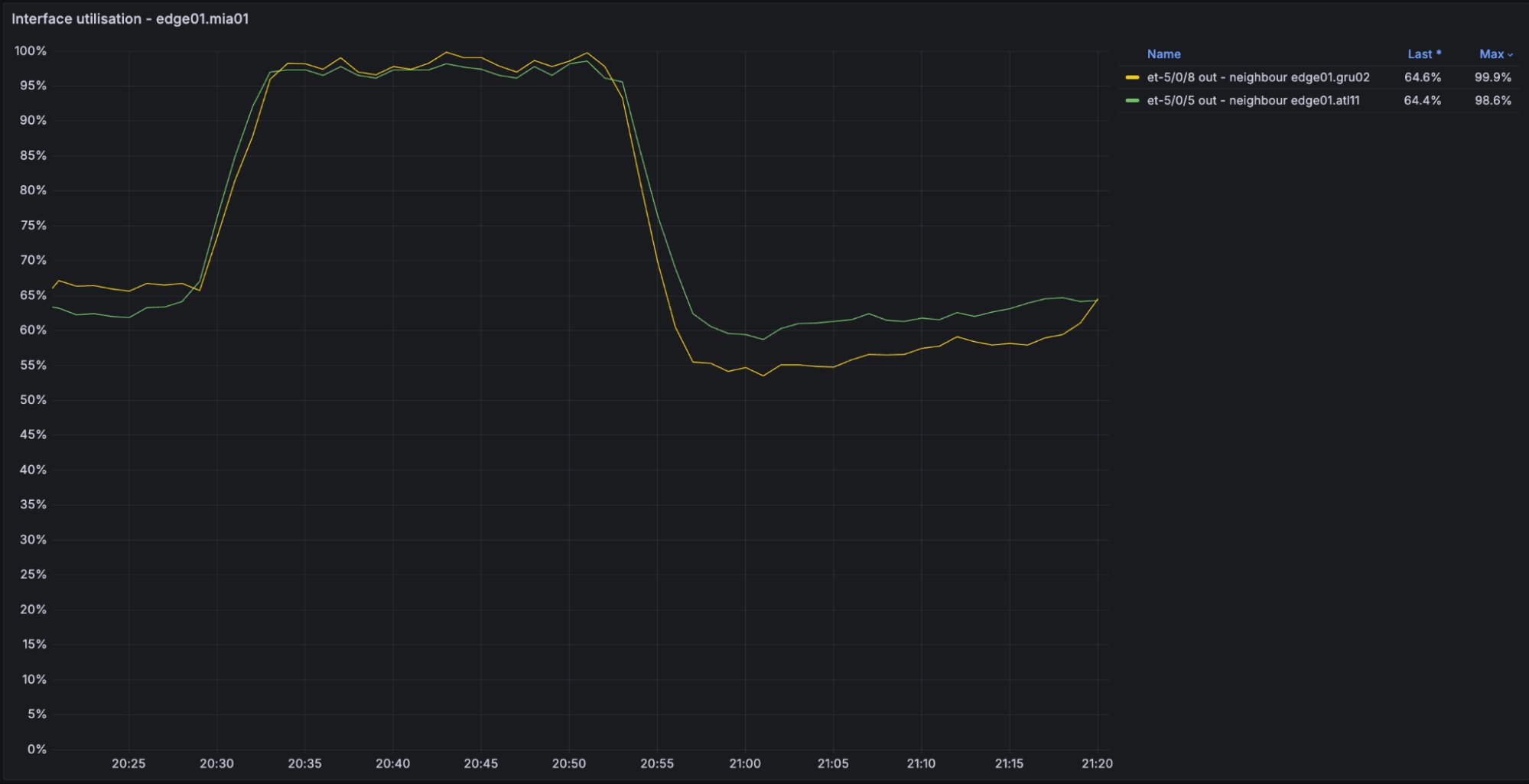

The unintended traffic redirection caused significant congestion on Cloudflare's backbone between Miami and Atlanta. The graph shows the spike in utilization during the incident window. This congestion resulted in elevated packet loss for Cloudflare customer traffic and increased latency for traffic traversing these links. Additionally, the networks whose prefixes were leaked experienced traffic being discarded by Cloudflare's firewall filters, which are designed to only accept traffic destined for Cloudflare services and its customers.
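The filtering behavior described above amounts to a destination-prefix allow list. The following is a rough sketch, assuming a hypothetical `make_filter` helper and using the documentation prefix `2001:db8::/32` as a stand-in for the prefixes Cloudflare actually serves; it is not a representation of Cloudflare's real filters.

```python
import ipaddress


def make_filter(downstream_prefixes):
    """Build a predicate that accepts only destinations inside the given prefixes."""
    networks = [ipaddress.ip_network(p) for p in downstream_prefixes]

    def accept(destination: str) -> bool:
        addr = ipaddress.ip_address(destination)
        return any(addr in net for net in networks)

    return accept


# Hypothetical set of prefixes the router should carry traffic for.
accept = make_filter(["2001:db8::/32"])

print(accept("2001:db8::1"))         # inside the allowed prefix
print(accept("2a03:2880:f077::1"))   # inside the leaked prefix from the article
```

Traffic attracted by a leaked route has a destination outside the allow list, so it is dropped at ingress instead of being forwarded.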

Timeline and Response

The sequence from merge to full recovery took just under three hours, although the impact itself lasted 25 minutes:

- 19:52 UTC: The change that triggered the routing policy bug was merged into Cloudflare's network automation code repository

- 20:25 UTC: Automation ran on the single Miami edge router, resulting in unexpected advertisements to BGP transit providers and peers (impact begins)

- 20:40 UTC: Network team began investigating unintended route advertisements from Miami

- 20:44 UTC: Incident was formally raised to coordinate response

- 20:50 UTC: The bad configuration change was manually reverted by a network operator, and automation was paused for the router to prevent reoccurrence (impact stops)

- 21:47 UTC: The triggering change was reverted from the code repository

- 22:07 UTC: Automation was confirmed healthy to run again on the Miami router without the routing policy bug

- 22:40 UTC: Automation was unpaused on the single Miami router

The 25-minute impact window demonstrates both the speed at which automation can propagate errors and the effectiveness of Cloudflare's incident response procedures in identifying and containing the issue.
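The intervals implied by the timeline can be derived directly from the timestamps. This small sketch uses only the times listed above; the event labels and the `minutes_between` helper are names chosen here for illustration.

```python
from datetime import datetime


def utc(hhmm: str) -> datetime:
    """Parse an HH:MM timestamp on the incident date (all times are UTC)."""
    return datetime.strptime(f"2026-01-22 {hhmm}", "%Y-%m-%d %H:%M")


events = {
    "merged": utc("19:52"),
    "impact_start": utc("20:25"),
    "investigation": utc("20:40"),
    "incident_raised": utc("20:44"),
    "impact_end": utc("20:50"),
    "repo_reverted": utc("21:47"),
    "automation_unpaused": utc("22:40"),
}


def minutes_between(start: str, end: str) -> int:
    return int((events[end] - events[start]).total_seconds() // 60)


print("merge to deployment:", minutes_between("merged", "impact_start"), "min")
print("impact duration:", minutes_between("impact_start", "impact_end"), "min")
print("impact start to investigation:", minutes_between("impact_start", "investigation"), "min")
print("investigation to mitigation:", minutes_between("investigation", "impact_end"), "min")
```

Read this way, 15 of the 25 minutes of impact passed before the investigation began, and the manual revert followed 10 minutes later.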

Pattern Recognition: Echoes of 2020

This incident bears striking similarities to Cloudflare's 2020 outage, which was also caused by a routing policy misconfiguration. In both cases, seemingly minor changes to policy statements created overly permissive rules that allowed internal routes to be advertised externally. The 2020 incident involved IPv4 routes, while this 2026 incident specifically affected IPv6 traffic.

The recurrence highlights a fundamental challenge in network automation: routing policy languages such as the one in Juniper's JunOS provide powerful matching capabilities, but the semantics of terms like route-type internal can be counterintuitive. Combined with the complexity of BGP itself, even small configuration changes can have outsized effects on routing behavior.

Prevention Strategies

Cloudflare has identified multiple areas for improvement, both immediate and long-term, to prevent similar incidents:

Immediate Technical Fixes

Patching the Automation Failure: The specific failure in the routing policy automation that caused the route leak is being patched to prevent this exact scenario from recurring.

BGP Community Safeguards: Implementing additional BGP community-based safeguards in routing policies that explicitly reject routes received from providers and peers on external export policies. BGP communities allow networks to tag routes with metadata that can be used for policy decisions.
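One way to picture a community-based safeguard is to tag each route on import with where it was learned and to check that tag on export. The sketch below is an assumption about how such a scheme could look, not Cloudflare's design; the community values, `import_route`, and `export_allowed` are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical community values marking where a route was learned.
FROM_PEER = "65000:100"
FROM_PROVIDER = "65000:200"
FROM_CUSTOMER = "65000:300"

TAG_BY_ROLE = {"peer": FROM_PEER, "provider": FROM_PROVIDER, "customer": FROM_CUSTOMER}


@dataclass
class Route:
    prefix: str
    communities: set = field(default_factory=set)


def import_route(prefix: str, neighbor_role: str) -> Route:
    """Tag the route on ingress according to the neighbor it came from."""
    return Route(prefix, {TAG_BY_ROLE[neighbor_role]})


def export_allowed(route: Route, neighbor_role: str) -> bool:
    """Reject peer- or provider-learned routes on sessions to peers and providers."""
    if neighbor_role in ("peer", "provider"):
        if route.communities & {FROM_PEER, FROM_PROVIDER}:
            return False
    return True


leaked = import_route("2a03:2880:f077::/48", "peer")
print(export_allowed(leaked, "provider"))
print(export_allowed(leaked, "customer"))
```

Because the check depends on the tag rather than on prefix lists or route type, it still holds if another term in the export policy becomes too permissive.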

CI/CD Pipeline Validation: Adding automatic routing policy evaluation into CI/CD pipelines that specifically checks for empty or erroneous policy terms. This would catch cases where policy modifications leave overly permissive default rules.
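A pipeline check of this kind could be as simple as linting the rendered policy for accept terms that no longer constrain prefixes. The following is a minimal sketch under assumed conventions: policies are represented as lists of dictionaries, and the term names, the `lint_policy` function, and the prefix-list name are hypothetical. Only the policy name 6-TELIA-ACCEPT-EXPORT comes from the incident description.

```python
# Conditions that restrict a term to specific prefixes (assumed names).
PREFIX_CONDITIONS = {"prefix-list", "route-filter"}


def lint_policy(name, terms):
    """Flag accept terms that are empty or that lack any prefix constraint."""
    findings = []
    for term in terms:
        if term.get("then") != "accept":
            continue
        conditions = term.get("from", {})
        if not conditions:
            findings.append(f"{name}/{term['name']}: accept with no match conditions")
        elif PREFIX_CONDITIONS.isdisjoint(conditions):
            findings.append(f"{name}/{term['name']}: accept without a prefix constraint")
    return findings


before = [
    {"name": "ADV-SITE",
     "from": {"prefix-list": "SITE-PREFIXES-V6", "route-type": "internal"},
     "then": "accept"},
]
# Same term after the prefix list has been removed by the change.
after = [
    {"name": "ADV-SITE", "from": {"route-type": "internal"}, "then": "accept"},
]

for finding in lint_policy("6-TELIA-ACCEPT-EXPORT", after):
    print(finding)
```

A check like this would fail the build on the modified policy while passing the original one, which is the property the validation step is meant to provide.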

Improved Change Detection: Enhancing early detection of issues with network configurations and the negative effects of automated changes before they propagate widely.

Broader Industry Initiatives

RFC9234 Implementation: Validating routing equipment vendors' implementation of RFC9234 (BGP roles and the Only-to-Customer Attribute). This RFC defines BGP roles that can prevent route leaks at the local AS level, independent of routing policy configuration. Cloudflare is preparing to roll out this feature.
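The Only-to-Customer (OTC) rules in RFC 9234 are compact enough to sketch. The code below is a simplified illustration of the ingress and egress procedures as this editor understands them, not a vendor implementation; the function names and the use of `None` for "attribute absent" are choices made here, and `remote_role` is the role of the neighbor on the session.

```python
from typing import Optional, Tuple


def otc_on_ingress(remote_role: str, remote_asn: int,
                   otc: Optional[int]) -> Tuple[bool, Optional[int]]:
    """Return (eligible, otc_after) for a route received from a neighbor."""
    if otc is not None and remote_role in ("customer", "rs-client"):
        return False, otc  # OTC arriving from below indicates a leak
    if otc is not None and remote_role == "peer" and otc != remote_asn:
        return False, otc  # a peer forwarded a route that was not its own to send
    if otc is None and remote_role in ("provider", "peer", "rs"):
        return True, remote_asn  # mark the route as only-to-customer
    return True, otc


def otc_on_egress(remote_role: str, local_asn: int,
                  otc: Optional[int]) -> Tuple[bool, Optional[int]]:
    """Return (may_send, otc_after) for a route about to be advertised."""
    if otc is not None and remote_role in ("provider", "peer", "rs"):
        return False, otc  # marked routes must not go up or sideways
    if otc is None and remote_role in ("customer", "peer", "rs-client"):
        return True, local_asn
    return True, otc


# A route received from Meta (AS32934), a peer of AS13335, without OTC set.
eligible, otc = otc_on_ingress("peer", 32934, None)
print(eligible, otc)
print(otc_on_egress("provider", 13335, otc))  # advertising to a transit provider
print(otc_on_egress("customer", 13335, otc))  # advertising to a customer
```

Under these rules the peer-learned route is marked on receipt and cannot be sent to a provider, regardless of what the export policy says.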

RPKI ASPA Adoption: Encouraging the long-term adoption of RPKI Autonomous System Provider Authorization (ASPA). ASPA provides cryptographic validation of AS path relationships, allowing networks to automatically reject routes containing anomalous AS paths indicative of route leaks.

The Broader Context

This incident occurs within a larger conversation about routing security on the internet. Route leaks remain a persistent threat to internet stability, often caused by misconfigurations rather than malicious intent. The complexity of BGP, combined with the distributed nature of internet routing, makes these incidents difficult to prevent entirely.

Cloudflare's transparency in documenting this incident aligns with their previous approach to sharing lessons learned from network failures. By providing detailed technical analysis, configuration examples, and timeline data, they contribute to the collective understanding of how routing failures occur and how they might be prevented.

The incident also underscores the trade-offs inherent in network automation. While automation reduces human error and enables rapid, consistent configuration management, it can also propagate errors at machine speed. The challenge lies in building validation systems that can catch subtle semantic errors in policy languages before they reach production.

Looking Forward

For network operators and engineers, this incident serves as a reminder of several key principles:

Policy Language Semantics Matter: Understanding the precise meaning of policy match terms is critical. What appears to be a narrow match might actually be quite broad.

Test Changes in Isolation: Even seemingly safe changes should be tested in environments that closely mirror production, with careful monitoring of BGP advertisements.

Defense in Depth: Multiple layers of validation—automated checks, peer review, staged rollouts—can help catch errors before they cause widespread impact.

Community Standards: Industry-wide standards like RFC9234 and ASPA offer promising paths toward more robust routing security, but adoption requires collective effort.

The route leak incident of January 22, 2026, ultimately serves as both a cautionary tale and a learning opportunity. It demonstrates how sophisticated automation systems can still produce unexpected failures when interacting with complex protocols like BGP. More importantly, it shows how transparency and detailed post-mortem analysis can turn a failure into valuable knowledge for the broader networking community.

For those interested in the technical details, Cloudflare's previous writings on route leaks provide additional context, and tools like monocle offer ways to analyze BGP routing data. The incident reinforces that internet routing security remains a work in progress, requiring ongoing vigilance, collaboration, and innovation from the entire networking community.
