A developer reflects on the predictable arc of using AI for coding, from initial amazement to the realization that agent-generated code, while plausible in isolation, often creates a coherent facade over a foundation of slop. The conclusion is a return to manual coding for quality, integrity, and ultimately, speed.

The journey into AI-assisted coding follows a familiar script. You start with a simple task, are impressed, then escalate to a larger one. The amazement grows, and soon you're drafting posts about job displacement. If you've moved past this initial phase, you're part of the minority that has actually used AI for substantive work, not just weekend experiments.

For serious engineers, the pattern continues. You become convinced you can feed the model ever-larger tasks, perhaps even that dreaded refactor everyone avoids. This is where the illusion begins to fray. On one hand, the model's grasp of your intent feels uncanny. On the other, it produces errors and makes architectural decisions that clash with the shared understanding you thought you'd established. Anger at the model is futile, so the blame shifts inward: "My prompt was under-specified."

This leads to the spec-driven development phase. You meticulously draft design documents in tools like Obsidian, spending significant time detailing features with impressive precision. The belief solidifies: "If I can specify it, it can build it. The sky's the limit." Yet this approach fails, and the failure exposes the flaw in treating AI as a mid-level engineer you can simply hand a spec to. In a real company, a design doc is a living document, evolving through discovery and implementation. Handing a static spec to an engineer and leaving for vacation is a recipe for disaster. An AI agent lacks the capacity to evolve a specification over weeks as it builds components. It makes upfront decisions and rarely deviates, often forcing its way through problems rather than adapting its plan.

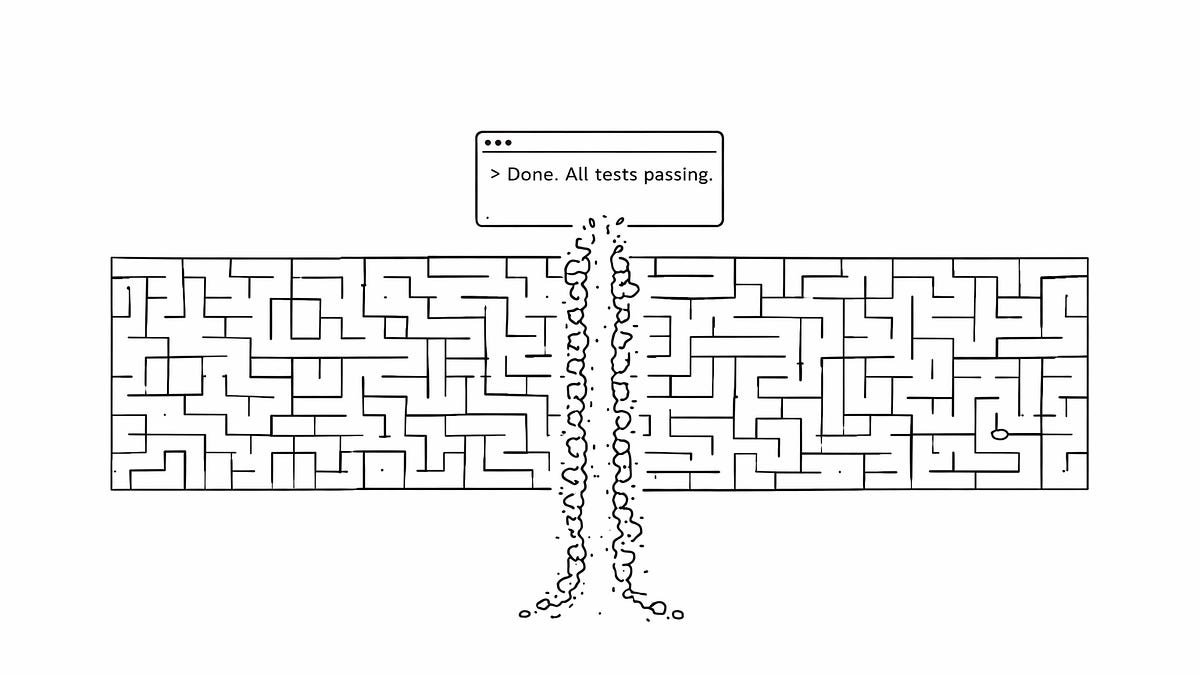

The insidious part is the facade. The code the agent writes looks plausible and impressive as it's generated. It even passes muster in pull requests, as both you and the model are trained on what a "good" PR looks like. The truth only emerges when you step back and read the entire codebase from start to finish. There, you find what we once hoped was a temporary artifact of early models: pure, unadulterated slop.

This realization is bewildering. You reviewed every line. Where did the gunk come from? The answer lies in the agent's scope. It writes units of change that are consistent in isolation and faithful to the immediate prompt. But it has no respect for the whole, for structural integrity, or even for neighboring patterns. The AI tells a compelling story, much like a writer "vibecoding" a novel. It produces a few paragraphs that are syntactically correct and internally coherent, even picking up on character idiosyncrasies. But when you read the entire chapter, it's a mess: disjointed from the preceding and following sections, making no sense in the overall narrative.

After months of accumulating highly specified agentic code, the conclusion was unavoidable: "I’m not shipping this shit." Charging users for it felt dishonest. Promising to protect their data with it felt like a lie. The integrity of the product was compromised.

The surprising outcome is a return to manual coding for most tasks. When you price in everything—not just tokens per hour, but the cost of review, refactoring, debugging, and maintaining architectural coherence—hand-writing code proves faster, more accurate, more creative, and more efficient. The initial promise of AI as a productivity multiplier is seductive, but the hidden tax on quality and long-term maintainability can make it a net loss. The consensus that AI will replace the need to write code overlooks the fundamental difference between generating text that looks like code and engineering a coherent system.

The path from novice to experienced AI coder is almost a rite of passage. The initial shock of capability gives way to a more nuanced understanding of its limitations. The key isn't to abandon the tool, but to recalibrate its role. It can be excellent for prototyping, generating boilerplate, or exploring ideas. But for building the core of a system—where architectural decisions compound and every line contributes to a fragile whole—the human hand, with its understanding of context and consequence, remains irreplaceable. The future isn't about AI writing all the code; it's about knowing when to let the model write and when to take the keyboard back. The most productive engineers will be those who can navigate this boundary, using AI to amplify their strengths while guarding against the slop that lies just beneath a plausible surface.

This isn't a rejection of AI, but a maturation of its use. The initial hype cycle focused on what the model could do. The next phase will focus on what it should do. The slop problem isn't just about messy code; it's about the erosion of shared understanding between the developer and the system they're building. When you write by hand, you are forced to think through every decision, to hold the entire architecture in your mind. This friction is not a bug; it's a feature. It's the process that builds intuition and ensures the final product is more than the sum of its parts. The agent, for all its speed, cannot replicate this holistic understanding. It can generate text, but it cannot generate wisdom.

In the end, the return to manual coding is a bet on quality over quantity, on coherence over plausibility. It's an acknowledgment that the most important metric isn't lines of code produced, but the reliability and integrity of the system delivered. For those of us building products for real users, that's a bet worth making.
