A new systematic approach to agentic AI development addresses the prototype-to-production gap through standardized life cycles, version-controlled artifacts, and behavioral testing methodologies tailored for nondeterministic systems.

Core Architecture Patterns for Production-Ready Agents

Modern agentic systems require development practices fundamentally different from those of traditional software. ISO/IEC 5338:2023 (AI system life cycle processes) defines formal life cycle processes for AI systems, including risk management, while three architectural patterns dominate production implementations:

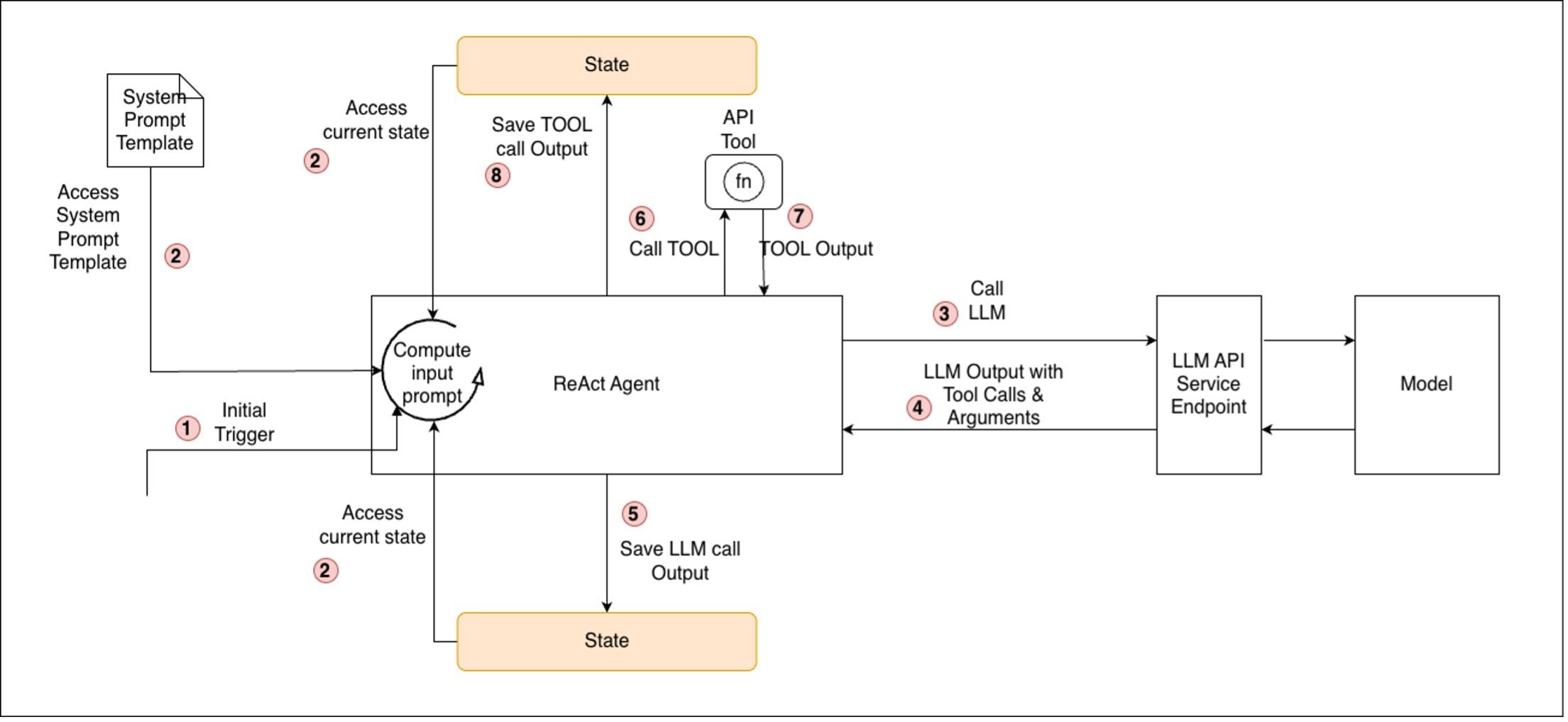

ReAct Loop Architecture (Figure 1):

- Implements reasoning/action/observation cycles

- Incurs 5-8x the latency of a single-shot LLM call

- Requires strict loop termination conditions (max iterations/confidence thresholds)
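
A minimal sketch of such a loop follows. It assumes a `policy` callable that stands in for the LLM and returns a structured step. The names `Step`, `react_loop`, and `scripted_policy` are hypothetical and not part of any framework. Both termination conditions (an iteration cap and a confidence threshold) are enforced outside the model, so a confused model cannot keep the loop running.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Step:
    """One step proposed by the policy (a stand-in for an LLM call)."""
    thought: str
    action: str        # a tool name, or "finish"
    argument: str      # tool input, or the final answer when action == "finish"
    confidence: float  # self-reported confidence in [0, 1]


@dataclass
class LoopResult:
    answer: Optional[str]
    iterations: int
    stop_reason: str   # "finish", "low_confidence", or "max_iterations"
    observations: List[str] = field(default_factory=list)


def react_loop(
    policy: Callable[[str, List[str]], Step],
    tools: Dict[str, Callable[[str], str]],
    task: str,
    max_iterations: int = 5,
    confidence_threshold: float = 0.8,
) -> LoopResult:
    observations: List[str] = []
    for i in range(1, max_iterations + 1):
        step = policy(task, observations)                 # reason
        if step.action == "finish":
            if step.confidence >= confidence_threshold:
                return LoopResult(step.argument, i, "finish", observations)
            # Too unsure to answer: stop so the caller can escalate.
            return LoopResult(None, i, "low_confidence", observations)
        tool = tools.get(step.action)
        if tool is None:
            observations.append(f"error: unknown tool {step.action!r}")
        else:
            observations.append(tool(step.argument))      # act, then observe
    # Hard iteration cap: the loop cannot run forever.
    return LoopResult(None, max_iterations, "max_iterations", observations)


def scripted_policy(task: str, observations: List[str]) -> Step:
    # Hypothetical stand-in for an LLM: look something up once, then answer.
    if not observations:
        return Step("I need data", "lookup", task, 0.5)
    return Step("I can answer now", "finish", observations[-1], 0.95)


tools = {"lookup": lambda q: f"result for {q}"}
result = react_loop(scripted_policy, tools, "capital of France")
print(result.stop_reason, result.iterations)  # finish 2
```

A low-confidence "finish" deliberately returns no answer. This gives the caller a clean signal to escalate instead of shipping a guess.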

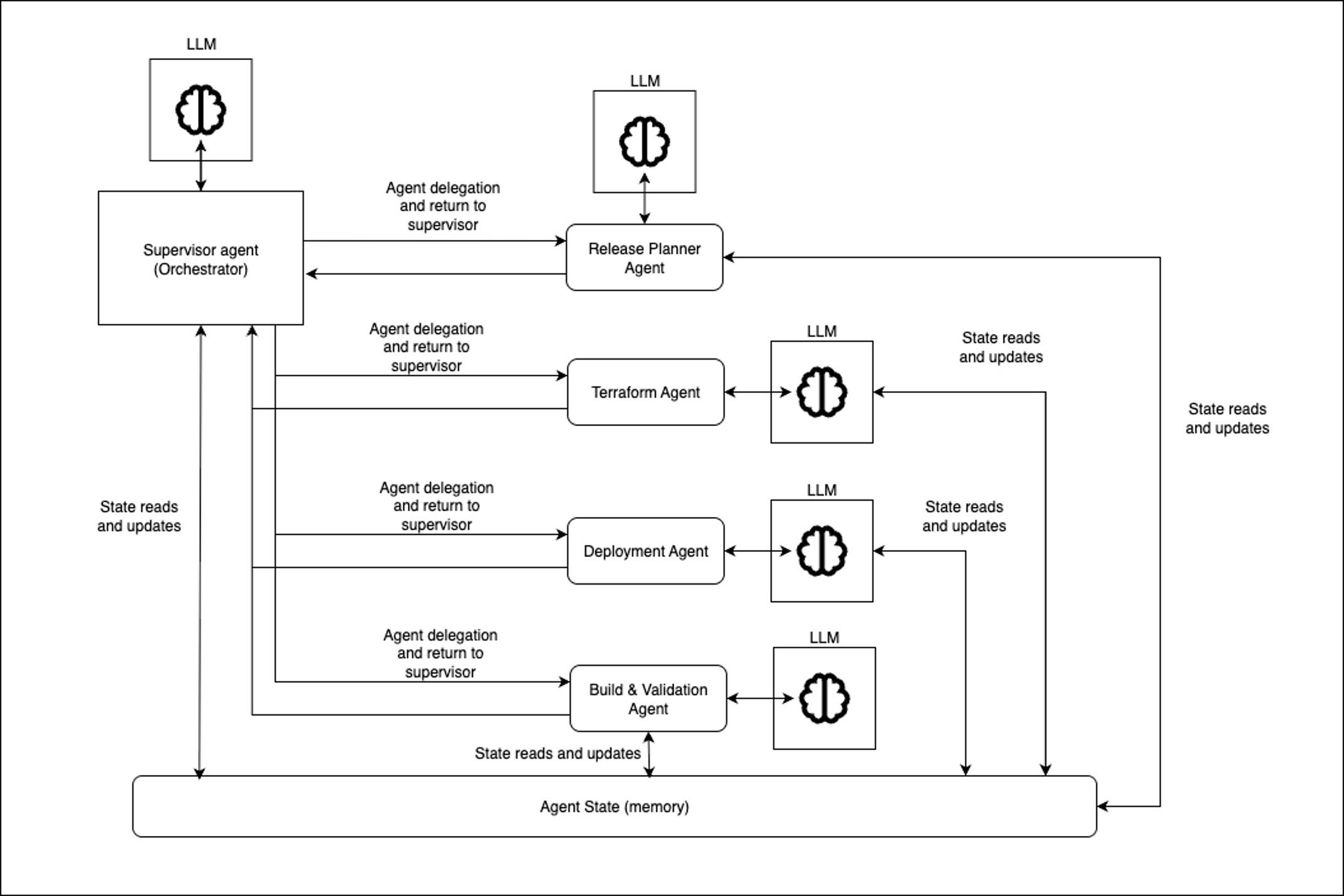

Supervisor-Worker Hierarchy (Figure 2):

- Central planning agent coordinates specialized workers

- Adds 300-500ms overhead per delegation

- Anthropic's research system demonstrates a 32% accuracy improvement
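
The delegation pattern can be outlined as below. This is an illustrative sketch, not Anthropic's implementation. `Supervisor`, `keyword_planner`, and the two worker lambdas are hypothetical, and the planner is a simple keyword rule standing in for an LLM planning call.

```python
from typing import Callable, Dict, List, Tuple

Worker = Callable[[str], str]
Planner = Callable[[str], List[Tuple[str, str]]]  # task -> [(skill, subtask), ...]


class Supervisor:
    """Central planner that routes each subtask to a specialist worker."""

    def __init__(self, workers: Dict[str, Worker], planner: Planner) -> None:
        self.workers = workers
        self.planner = planner
        self.delegations = 0  # each delegation costs a round trip in practice

    def run(self, task: str) -> List[Tuple[str, str]]:
        results: List[Tuple[str, str]] = []
        for skill, subtask in self.planner(task):
            worker = self.workers.get(skill)
            if worker is None:
                raise KeyError(f"no worker registered for skill {skill!r}")
            self.delegations += 1
            results.append((skill, worker(subtask)))
        return results


def keyword_planner(task: str) -> List[Tuple[str, str]]:
    # Stand-in for an LLM planning call: split on "and", route by leading verb.
    parts = [p.strip() for p in task.split(" and ")]
    return [("search" if p.startswith("find") else "summarize", p) for p in parts]


supervisor = Supervisor(
    workers={
        "search": lambda s: f"found: {s}",
        "summarize": lambda s: f"summary: {s}",
    },
    planner=keyword_planner,
)
print(supervisor.run("find recent papers and summarize the findings"))
```

Counting delegations makes the per-delegation overhead visible. In a real deployment, each increment would correspond to one extra model or network round trip.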

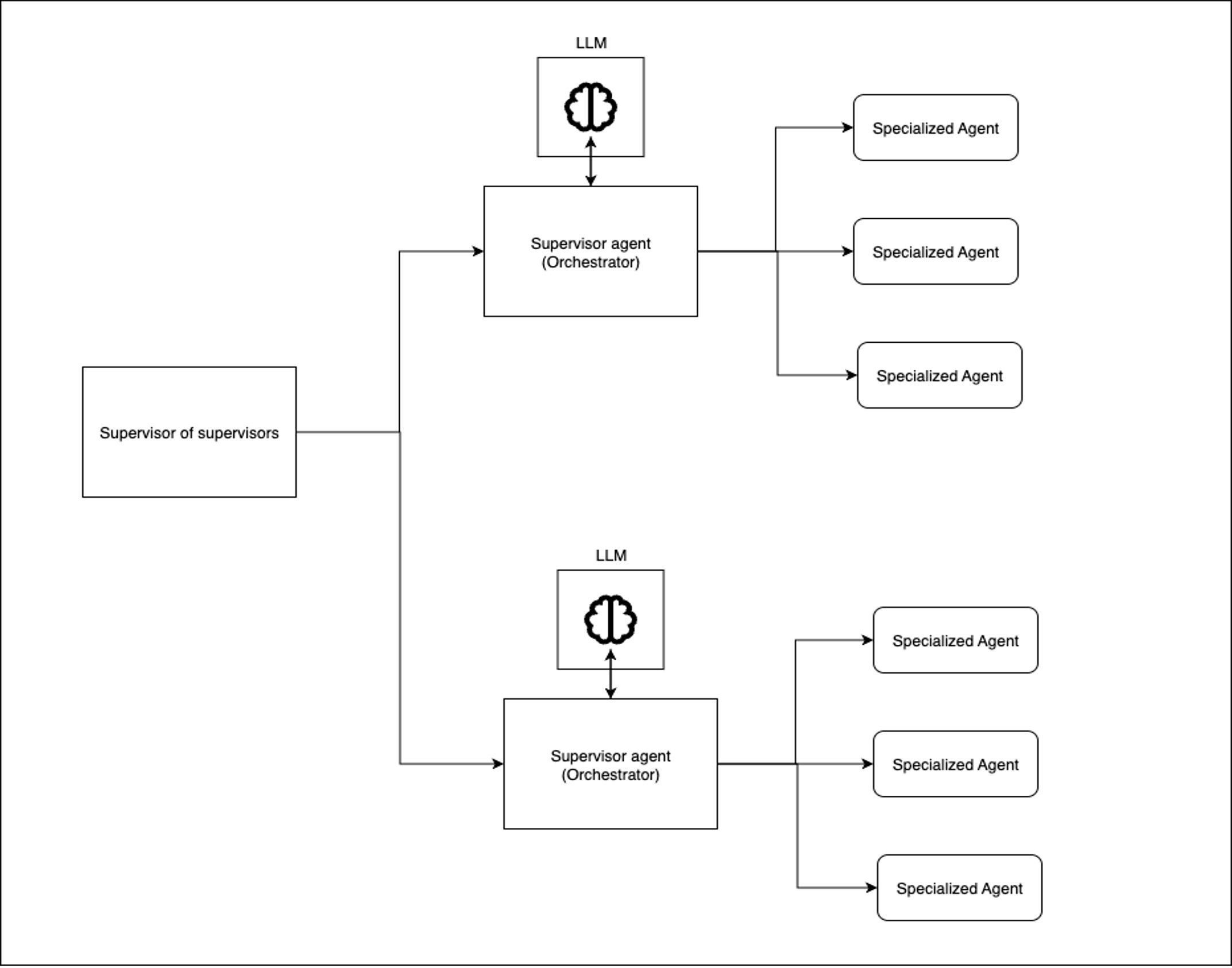

Hierarchical Multi-Team Orchestration (Figure 3):

- Regional supervisors manage domain-specific agents

- Adds 2-3x complexity over flat architectures

- An e-commerce fulfillment case study shows a 22% throughput gain
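
A two-level routing sketch for an e-commerce fulfillment setting follows. The regions, domains, SKUs, and agent lambdas are hypothetical placeholders. Each regional supervisor knows only its own domain agents, and requests it cannot handle are escalated instead of failing silently.

```python
from dataclasses import dataclass
from typing import Callable, Dict

Order = Dict[str, str]
Agent = Callable[[Order], str]


@dataclass
class RegionalSupervisor:
    """Manages the domain-specific agents for one region."""
    region: str
    agents: Dict[str, Agent]  # domain -> agent

    def handle(self, order: Order) -> str:
        agent = self.agents.get(order["domain"])
        if agent is None:
            # Unknown domain: escalate rather than guess.
            return f"{self.region}: escalated (no {order['domain']} agent)"
        return agent(order)


class Orchestrator:
    """Top level: routes each request to the supervisor for its region."""

    def __init__(self, supervisors: Dict[str, RegionalSupervisor]) -> None:
        self.supervisors = supervisors

    def dispatch(self, order: Order) -> str:
        supervisor = self.supervisors.get(order["region"])
        if supervisor is None:
            return f"escalated (no supervisor for {order['region']})"
        return supervisor.handle(order)


orchestrator = Orchestrator({
    "eu": RegionalSupervisor("eu", {
        "inventory": lambda o: f"eu inventory: reserved {o['sku']}",
        "shipping": lambda o: f"eu shipping: label for {o['sku']}",
    }),
    "us": RegionalSupervisor("us", {
        "inventory": lambda o: f"us inventory: reserved {o['sku']}",
    }),
})
print(orchestrator.dispatch({"region": "eu", "domain": "shipping", "sku": "A1"}))
print(orchestrator.dispatch({"region": "us", "domain": "shipping", "sku": "B2"}))
```

The extra layer is where the added complexity over a flat design comes from. Every request now passes through two routing decisions, and each layer needs its own escalation path.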

Infrastructure-as-Code Requirements

Agentic systems introduce five version-critical components requiring GitOps treatment:

| Component | Versioning Strategy | Failure Risk Without Version Control |
|---|---|---|
| Prompt Templates | Semantic diffing + A/B deployment | 63% behavioral drift (RisingWave) |
| Tool Manifests | Schema versioning + dependency management | Broken tool chains |
| Policy Configs | Policy-as-Code with OPA | Compliance violations |
| Memory Schemas | Backward-compatible migrations | Data corruption |
| Evaluation Datasets | Snapshot versioning | Metric degradation |
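
One way to put these artifacts under version control is to pin each one to a content hash in a lockfile committed alongside the code. Any unreviewed change then shows up as drift. The sketch below assumes artifacts can be serialized as JSON. `fingerprint`, `build_lock`, and `detect_drift` are hypothetical helpers, and the artifact contents are illustrative values. SHA-256 over canonical JSON is one reasonable choice, not a prescribed scheme.

```python
import hashlib
import json
from typing import Any, Dict, List


def fingerprint(artifact: Any) -> str:
    # Canonical JSON (sorted keys, no extra spaces) so formatting noise
    # does not change the hash; only real content changes do.
    canonical = json.dumps(artifact, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def build_lock(artifacts: Dict[str, Any]) -> Dict[str, str]:
    return {name: fingerprint(a) for name, a in sorted(artifacts.items())}


def detect_drift(lock: Dict[str, str], artifacts: Dict[str, Any]) -> List[str]:
    """Names whose content differs from the lock, plus added or removed ones."""
    current = build_lock(artifacts)
    return sorted(n for n in lock.keys() | current.keys()
                  if lock.get(n) != current.get(n))


artifacts = {
    "prompt_template": {"system": "You are a fulfillment agent.", "version": 3},
    "tool_manifest": {"tools": [{"name": "lookup_order", "schema_version": 2}]},
    "policy_config": {"max_refund_usd": 100},
    "memory_schema": {"fields": ["order_id", "customer_tier"], "version": 5},
    "eval_dataset": {"snapshot": "2024-06-01", "cases": 250},
}
lock = build_lock(artifacts)           # commit this alongside the code

artifacts["prompt_template"]["system"] = "You are a helpful fulfillment agent."
print(detect_drift(lock, artifacts))   # a CI check could fail on non-empty drift
```

In a GitOps flow, a pipeline step might regenerate the lock and reject the merge when `detect_drift` is non-empty but the lockfile was not updated in the same commit.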

The Model Context Protocol (MCP) standardizes tool integration via JSON-RPC 2.0, reducing agent-tool coupling by 70% in early adopters like JPMorgan Chase's COiN system.
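
For reference, an MCP tool invocation is a JSON-RPC 2.0 request that uses the `tools/call` method with the tool's `name` and `arguments`. The sketch below only builds and parses messages. A real client would also perform MCP's `initialize` handshake and send requests over a transport such as stdio. The tool name `lookup_order`, its arguments, and the sample response are hypothetical.

```python
import itertools
import json
from typing import Any, Dict, Tuple

_request_ids = itertools.count(1)


def make_tool_call(name: str, arguments: Dict[str, Any]) -> Tuple[int, str]:
    """Build an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    request_id = next(_request_ids)
    message = {
        "jsonrpc": "2.0",        # mandatory protocol marker
        "id": request_id,        # lets the client match the response
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }
    return request_id, json.dumps(message)


def parse_tool_result(raw: str, expected_id: int) -> str:
    """Return the text content of a `tools/call` response, or raise on errors."""
    message = json.loads(raw)
    if message.get("jsonrpc") != "2.0" or message.get("id") != expected_id:
        raise ValueError("not a response to this request")
    if "error" in message:  # protocol-level JSON-RPC error
        err = message["error"]
        raise RuntimeError(f"JSON-RPC error {err['code']}: {err['message']}")
    result = message["result"]
    text = "".join(c["text"] for c in result["content"] if c.get("type") == "text")
    if result.get("isError"):  # the tool ran but reported a failure
        raise RuntimeError(f"tool error: {text}")
    return text


req_id, payload = make_tool_call("lookup_order", {"order_id": "A-1001"})
print(payload)

# A hand-written sample response, as a server might return it.
sample = json.dumps({
    "jsonrpc": "2.0",
    "id": req_id,
    "result": {"content": [{"type": "text", "text": "A-1001: shipped"}],
               "isError": False},
})
print(parse_tool_result(sample, req_id))
```

Because the agent depends only on this message shape, a tool server can be replaced without changing agent code. This is the decoupling the protocol is meant to provide.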

Behavioral Testing Framework

Traditional unit testing fails for nondeterministic agents. Production systems require:

- Golden Trajectories: Execution traces captured with LangSmith that serve as behavioral baselines

- Metamorphic Testing: Validating input/output relationships rather than fixed outputs

- Chaos Injection: Simulating 40% tool failure rates with tools like Chaos Monkey for AI
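
The three techniques can be combined in a small, dependency-free harness. Every component here is a hypothetical stand-in. `toy_agent` replaces a real LLM-driven agent, and `lookup_status` replaces a real tool. `with_chaos` is a hand-rolled fault injector, not any particular chaos-engineering product.

```python
import random
from typing import Callable, List, Tuple

Tool = Callable[[str], str]


def lookup_status(query: str) -> str:
    # Toy tool standing in for a real order-status API (hypothetical).
    return f"status of {query.strip().lower()}: shipped"


def toy_agent(query: str, tool: Tool, retries: int = 3) -> Tuple[str, List[str]]:
    """Hypothetical agent: calls one tool with retries, degrades gracefully."""
    trace: List[str] = []
    for _ in range(retries):
        trace.append("lookup_status")
        try:
            return tool(query), trace
        except TimeoutError:
            trace.append("retry")
    return "unavailable: please try again later", trace


def with_chaos(tool: Tool, failure_rate: float, rng: random.Random) -> Tool:
    """Wrap a tool so a fraction of calls raise, simulating flaky dependencies."""
    def wrapped(arg: str) -> str:
        if rng.random() < failure_rate:
            raise TimeoutError("injected failure")
        return tool(arg)
    return wrapped


# 1. Golden trajectory: compare the sequence of tool calls, not exact wording.
GOLDEN = ["lookup_status"]
answer, trace = toy_agent("Order 42", lookup_status)
assert trace == GOLDEN

# 2. Metamorphic relation: case and surrounding whitespace must not change the answer.
assert toy_agent("Order 42", lookup_status)[0] == toy_agent("  order 42 ", lookup_status)[0]

# 3. Chaos injection at a 40% failure rate: the agent must never crash.
rng = random.Random(0)
flaky = with_chaos(lookup_status, failure_rate=0.4, rng=rng)
outcomes = [toy_agent("Order 42", flaky)[0] for _ in range(200)]
assert all(o.startswith(("status of", "unavailable")) for o in outcomes)
print(sum(o.startswith("status of") for o in outcomes), "of 200 succeeded")
```

None of the checks pins the exact answer text. Each one asserts a relationship or invariant, which stays stable even when a real model's wording varies between runs.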

Microsoft's research shows property-based testing with Hypothesis finds 3.8x more critical bugs than unit testing alone in agentic systems.
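
Property-based testing checks invariants over many generated inputs instead of a few hand-picked cases. To stay dependency-free, this sketch hand-rolls the input generator with Python's `random` module rather than using Hypothesis. The function under test, `truncate_context`, is a hypothetical context-window trimmer, and token counts are approximated by word counts.

```python
import random
from typing import List


def count_tokens(text: str) -> int:
    # Crude assumption: one token per whitespace-separated word.
    return len(text.split())


def truncate_context(messages: List[str], budget: int) -> List[str]:
    """Keep the most recent messages whose combined token count fits the budget."""
    kept: List[str] = []
    used = 0
    for msg in reversed(messages):
        cost = count_tokens(msg)
        if used + cost > budget:
            break
        kept.append(msg)
        used += cost
    return list(reversed(kept))


def random_messages(rng: random.Random) -> List[str]:
    words = ["plan", "call", "tool", "result", "retry", "done"]
    return [" ".join(rng.choices(words, k=rng.randint(0, 8)))
            for _ in range(rng.randint(0, 10))]


def check_properties(trials: int = 1000, seed: int = 1) -> None:
    rng = random.Random(seed)
    for _ in range(trials):
        msgs = random_messages(rng)
        budget = rng.randint(0, 40)
        out = truncate_context(msgs, budget)
        # Property 1: never exceed the budget.
        assert sum(map(count_tokens, out)) <= budget
        # Property 2: output is a suffix of the input (most recent context kept).
        assert out == msgs[len(msgs) - len(out):]
        # Property 3: if everything fits, nothing is dropped.
        if sum(map(count_tokens, msgs)) <= budget:
            assert out == msgs


check_properties()
print("all properties held")
```

With Hypothesis installed, `random_messages` would typically be replaced by strategies such as `st.lists(st.text())` and `st.integers()` passed to `@given`. Hypothesis would also shrink any failing input to a minimal counterexample.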

Deployment Considerations

- Hybrid Orchestration: Combine sequential and concurrent patterns using Azure's Orchestrator SDK

- Cold Start Mitigation: Pre-warm agent pools using historical golden trajectories

- Token Budgeting: Enforce strict per-request token limits (agent tasks average about 15k tokens)

- Human Escalation Gates: Implement a four-tier oversight model with handoffs under 100 ms
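
Token budgeting and escalation gates can share a single control point, as sketched below. The four tiers and their risk thresholds are hypothetical, because the article does not define its four-tier model. The 20,000-token cap is arbitrary, and `risk` is assumed to come from some upstream classifier.

```python
from dataclasses import dataclass
from enum import IntEnum


class BudgetExceeded(Exception):
    """Raised when a request would overrun its token allowance."""


@dataclass
class TokenBudget:
    limit: int     # hard per-request cap (value chosen per deployment)
    used: int = 0

    def charge(self, prompt_tokens: int, completion_tokens: int) -> None:
        cost = prompt_tokens + completion_tokens
        if self.used + cost > self.limit:
            raise BudgetExceeded(f"{self.used + cost} > {self.limit} tokens")
        self.used += cost

    @property
    def remaining(self) -> int:
        return self.limit - self.used


class Oversight(IntEnum):
    """Hypothetical four-tier oversight ladder."""
    AUTONOMOUS = 0  # act without review
    AUDIT = 1       # act, but log for asynchronous human review
    APPROVAL = 2    # pause until a human approves
    HANDOFF = 3     # stop and hand the task to a human


def gate(budget: TokenBudget, prompt_tokens: int, completion_tokens: int,
         risk: float) -> Oversight:
    """Charge the step's tokens, then pick an oversight tier from its risk."""
    try:
        budget.charge(prompt_tokens, completion_tokens)
    except BudgetExceeded:
        return Oversight.HANDOFF          # out of budget: a human takes over
    if risk >= 0.9:
        return Oversight.HANDOFF
    if risk >= 0.6:
        return Oversight.APPROVAL
    if risk >= 0.3:
        return Oversight.AUDIT
    return Oversight.AUTONOMOUS


budget = TokenBudget(limit=20_000)
print(gate(budget, 8_000, 2_000, risk=0.1).name)   # cheap, low-risk step
print(gate(budget, 9_000, 3_000, risk=0.1).name)   # would overrun the cap
```

A step that would overrun the budget is never charged. The budget therefore still reflects only the work actually performed when the human takes over.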

Benchmarks show that properly instrumented agents achieve 99.95% uptime versus 92.3% for ad-hoc implementations, underscoring the operational impact of structured development.
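
To make the two uptime figures concrete, assume a 365-day year of 8,760 hours:

$$
\begin{aligned}
\text{downtime}_{99.95\%} &= (1 - 0.9995) \times 8760\,\text{h} = 4.38\,\text{h} \\
\text{downtime}_{92.3\%} &= (1 - 0.923) \times 8760\,\text{h} = 674.52\,\text{h} \approx 28.1\,\text{days}
\end{aligned}
$$

The ad-hoc implementations therefore incur 154 times as much annual downtime (0.077 / 0.0005 = 154).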

Image credits: All diagrams sourced from original research papers and documentation referenced in the article.
