For developers accustomed to deterministic systems, AI represents a fundamental shift from executing instructions to evaluating possibilities. This article explores how AI functions as an architectural layer with its own boundaries, failure modes, and responsibilities—requiring a new mental model that complements rather than replaces traditional software patterns.

For most Java and web developers, the systems we work on tend to follow a familiar pattern. A request comes in. Code runs. A response goes out. Even in larger enterprise setups—Spring services, Oracle databases, Kafka pipelines—the underlying assumption is usually the same: Given the same input, the system behaves the same way.

AI-powered systems start to stretch this assumption. Not because they are unreliable, but because they are built around a different way of producing results. Recognizing this difference helps when thinking about how AI fits into modern application architecture.

This shift is not really about using AI to generate code faster. It's more about understanding AI as another part of the system—one that has boundaries, failure modes, and responsibilities, similar to APIs, databases, or message brokers.

Traditional Software: Deterministic Execution

It helps to start with what's already familiar. In a typical Java web application:

- Business rules are defined explicitly

- Control flow is predetermined

- Outputs are predictable

- Errors surface through exceptions or validation failures

At an architectural level, it often looks like this:

| Aspect | Traditional Web Systems |
|---|---|
| Core behavior | Deterministic execution |
| Logic location | Code (services, rules engines) |
| Input handling | Strictly validated |
| Output | Predictable and repeatable |
| Failure mode | Errors, exceptions |

This model has worked well because:

- System behavior is easy to reason about - You can trace through code and understand exactly what will happen

- Tests can assert exact outcomes - Unit and integration tests verify specific outputs

- Debugging follows clear cause-and-effect paths - Stack traces and logs point directly to the problem
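To make the contrast concrete, here is a minimal sketch of the kind of rule a traditional service encodes. The class name `PriceCalculator` and the "10% off orders of 100.00 or more" rule are hypothetical and chosen only for illustration. Because the rule is explicit, the check can demand one exact value.

```java
import java.math.BigDecimal;
import java.math.RoundingMode;

final class PriceCalculator {

    private static final BigDecimal DISCOUNT_THRESHOLD = new BigDecimal("100.00");
    private static final BigDecimal DISCOUNT_FACTOR = new BigDecimal("0.90");

    // Explicit, hypothetical business rule: 10% off orders of 100.00 or more, rounded to cents.
    static BigDecimal finalPrice(BigDecimal subtotal) {
        BigDecimal total = subtotal.compareTo(DISCOUNT_THRESHOLD) >= 0
                ? subtotal.multiply(DISCOUNT_FACTOR)
                : subtotal;
        return total.setScale(2, RoundingMode.HALF_EVEN);
    }

    public static void main(String[] args) {
        // Same input, same output on every run, so the check can demand one exact value.
        BigDecimal result = finalPrice(new BigDecimal("120.00"));
        if (!result.equals(new BigDecimal("108.00"))) {
            throw new AssertionError("expected 108.00 but got " + result);
        }
        System.out.println(result); // prints 108.00
    }
}
```

Running `main` either prints `108.00` or fails with a clear message. There is no third outcome, which is exactly the property that makes this style of system easy to test and debug.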

AI systems don't replace this model. They tend to exist alongside it, serving a different purpose.

AI Systems: Reasoning Based on Likelihood

AI-powered systems—especially those built around large language models—don't operate by following fixed instructions step by step. Instead, they look at the information provided and determine what response is most likely to be useful in that context.

One way to think about the difference:

- Traditional software answers: "What should I do?"

- AI systems answer: "What response makes sense here?"

Because of this:

- The same input may not always result in the exact same output

- Context plays a larger role than predefined paths

- Outputs are based on likelihood rather than certainty
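A toy model makes "likelihood rather than certainty" easier to see. The sketch below is a deliberate simplification, not how any specific LLM or vendor API works internally: it assumes a hypothetical model has already scored three candidate replies, turns those scores into probabilities with a temperature-scaled softmax, and samples one. `ToySampler` and the candidate replies are illustrative names only.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Random;

final class ToySampler {

    // Converts raw scores (logits) into probabilities using a temperature-scaled softmax.
    static Map<String, Double> softmax(Map<String, Double> logits, double temperature) {
        double max = logits.values().stream().mapToDouble(Double::doubleValue).max().orElse(0.0);
        Map<String, Double> probs = new LinkedHashMap<>();
        double sum = 0.0;
        for (Map.Entry<String, Double> e : logits.entrySet()) {
            // Subtracting the max keeps Math.exp from overflowing; it does not change the result.
            double weight = Math.exp((e.getValue() - max) / temperature);
            probs.put(e.getKey(), weight);
            sum += weight;
        }
        for (Map.Entry<String, Double> e : probs.entrySet()) {
            e.setValue(e.getValue() / sum);
        }
        return probs;
    }

    // Draws one candidate with probability proportional to its weight.
    static String sample(Map<String, Double> probs, Random rng) {
        double r = rng.nextDouble();
        double cumulative = 0.0;
        String last = null;
        for (Map.Entry<String, Double> e : probs.entrySet()) {
            cumulative += e.getValue();
            last = e.getKey();
            if (r < cumulative) {
                return last;
            }
        }
        return last; // guards against floating-point rounding at the top end
    }

    public static void main(String[] args) {
        // Hypothetical scores a model might assign to candidate replies for one fixed input.
        Map<String, Double> logits = new LinkedHashMap<>();
        logits.put("Your order has shipped.", 2.0);
        logits.put("Your package is on its way.", 1.6);
        logits.put("The order is in transit.", 0.8);

        Map<String, Double> probs = softmax(logits, 0.7);
        Random rng = new Random();
        for (int i = 0; i < 5; i++) {
            System.out.println(sample(probs, rng)); // same input, varying output
        }
    }
}
```

Lower temperatures concentrate probability on the top-scoring candidate, which is why many LLM APIs expose a temperature setting. It narrows the variation rather than turning the system into a rules engine. Fixing the `Random` seed, as a unit test might, makes this toy version repeatable.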

From an architectural point of view:

| Aspect | AI-Powered Systems |
|---|---|
| Core behavior | Reasoning based on likelihood |
| Logic location | Model + orchestration layer |
| Input handling | Context-heavy |
| Output | Usually correct, not guaranteed |
| Failure mode | Degraded or unclear responses |

This doesn't mean the system behaves randomly. It means the system is making judgments instead of executing rules. For developers used to strict control flow, this difference can feel unfamiliar, not because it's incorrect, but because it addresses a different kind of problem.

Why This Isn't a Step Backwards

At first glance, AI systems can feel harder to trust:

- Outputs aren't exact

- Testing isn't always binary

- Behavior may vary slightly
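"Testing isn't always binary" can be made concrete with a pass-rate check: instead of asserting one exact output, run the same input many times and assert that the share of acceptable outputs clears a threshold. This is a minimal sketch. `StatisticalCheck`, the roughly 90%-accurate fake classifier, and the 0.85 threshold are hypothetical placeholders for a real model call and a team-chosen quality bar.

```java
import java.util.Random;
import java.util.function.Predicate;
import java.util.function.Supplier;

final class StatisticalCheck {

    // Calls the generator `trials` times and returns the fraction of outputs the check accepts.
    static <T> double passRate(Supplier<T> generator, Predicate<T> check, int trials) {
        if (trials <= 0) {
            throw new IllegalArgumentException("trials must be positive");
        }
        int passed = 0;
        for (int i = 0; i < trials; i++) {
            if (check.test(generator.get())) {
                passed++;
            }
        }
        return (double) passed / trials;
    }

    public static void main(String[] args) {
        Random rng = new Random(7);
        // Hypothetical stand-in for a model call: returns the expected intent about 90% of the time.
        Supplier<String> fakeClassifier = () -> rng.nextDouble() < 0.9 ? "REFUND_REQUEST" : "OTHER";
        double rate = passRate(fakeClassifier, "REFUND_REQUEST"::equals, 1_000);
        System.out.printf("pass rate = %.3f%n", rate);
        if (rate < 0.85) {
            throw new AssertionError("pass rate below threshold: " + rate);
        }
    }
}
```

The threshold becomes an explicit design decision, similar to a service-level objective, rather than something the code can derive on its own.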

At the same time, they handle scenarios where traditional systems often struggle. AI systems tend to work well for:

- Interpreting ambiguous or unstructured input - Natural language, images, audio

- Connecting information across many sources - Synthesizing from disparate data

- Supporting decisions when rules are incomplete - Filling gaps in business logic

They are generally not suitable replacements for:

- Financial calculations

- Authorization logic

- Transactional consistency

That separation is an architectural choice, not a limitation of tooling. In practice, many systems benefit from:

- Deterministic software for control and correctness

- AI systems for interpretation and decision support
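One way to keep that separation explicit in Java is to let the model influence only routing while authorization and money stay in plain code. In this sketch, `IntentSuggester`, `RefundService`, the role names, and the 50% refund rate are all hypothetical. In a real system the suggester would wrap a call to whatever model you use.

```java
import java.math.BigDecimal;
import java.math.RoundingMode;

// Hypothetical AI-facing boundary: it interprets free text and only *suggests* an intent.
interface IntentSuggester {
    String suggestIntent(String customerMessage);
}

final class RefundService {
    private final IntentSuggester suggester;

    RefundService(IntentSuggester suggester) {
        this.suggester = suggester;
    }

    // Deterministic: authorization is decided by code, never by the model.
    static boolean isAuthorized(String role) {
        return "SUPPORT_AGENT".equals(role) || "ADMIN".equals(role);
    }

    // Deterministic: money is computed with BigDecimal and explicit rounding.
    static BigDecimal refundAmount(BigDecimal orderTotal, int percent) {
        return orderTotal.multiply(BigDecimal.valueOf(percent))
                .divide(BigDecimal.valueOf(100), 2, RoundingMode.HALF_EVEN);
    }

    // The model's suggestion selects a path; it never sets the amount or grants access.
    String handle(String role, String message, BigDecimal orderTotal) {
        if (!isAuthorized(role)) {
            return "DENIED";
        }
        String intent = suggester.suggestIntent(message);
        if (!"REFUND_REQUEST".equals(intent)) {
            return "ROUTE_TO_HUMAN";
        }
        return "REFUND " + refundAmount(orderTotal, 50).toPlainString();
    }
}
```

If the model misreads intent, the request goes down the wrong path, but who is authorized and how much is refunded never come from the model.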

A Practical Mental Model

One simple way to frame the shift is:

Traditional software executes instructions. AI software evaluates possibilities.

Both approaches can coexist within the same application. AI doesn't replace backend systems. It changes where and how certain decisions are made.

Once that distinction is clear, many AI architecture discussions become easier to follow.

What This Series Is Really About

This series is not focused on:

- Prompt techniques

- Model comparisons

- Replacing Java with AI

It is more about:

- Mapping familiar concepts to newer ones - How does an AI service compare to a REST API?

- Understanding how architecture is evolving - Where do traditional boundaries bend?

- Exploring how existing skills carry forward - What patterns from distributed systems apply?

Each part will relate new ideas back to systems many developers already know—REST APIs, databases, events, and observability.

What's Next

In Part 2, we'll look at how system boundaries change when outputs are no longer guaranteed—and why well-defined interfaces still matter in AI-powered systems. This is where traditional API thinking starts to bend, without completely breaking.

Debugging AI Systems: A Practical Example

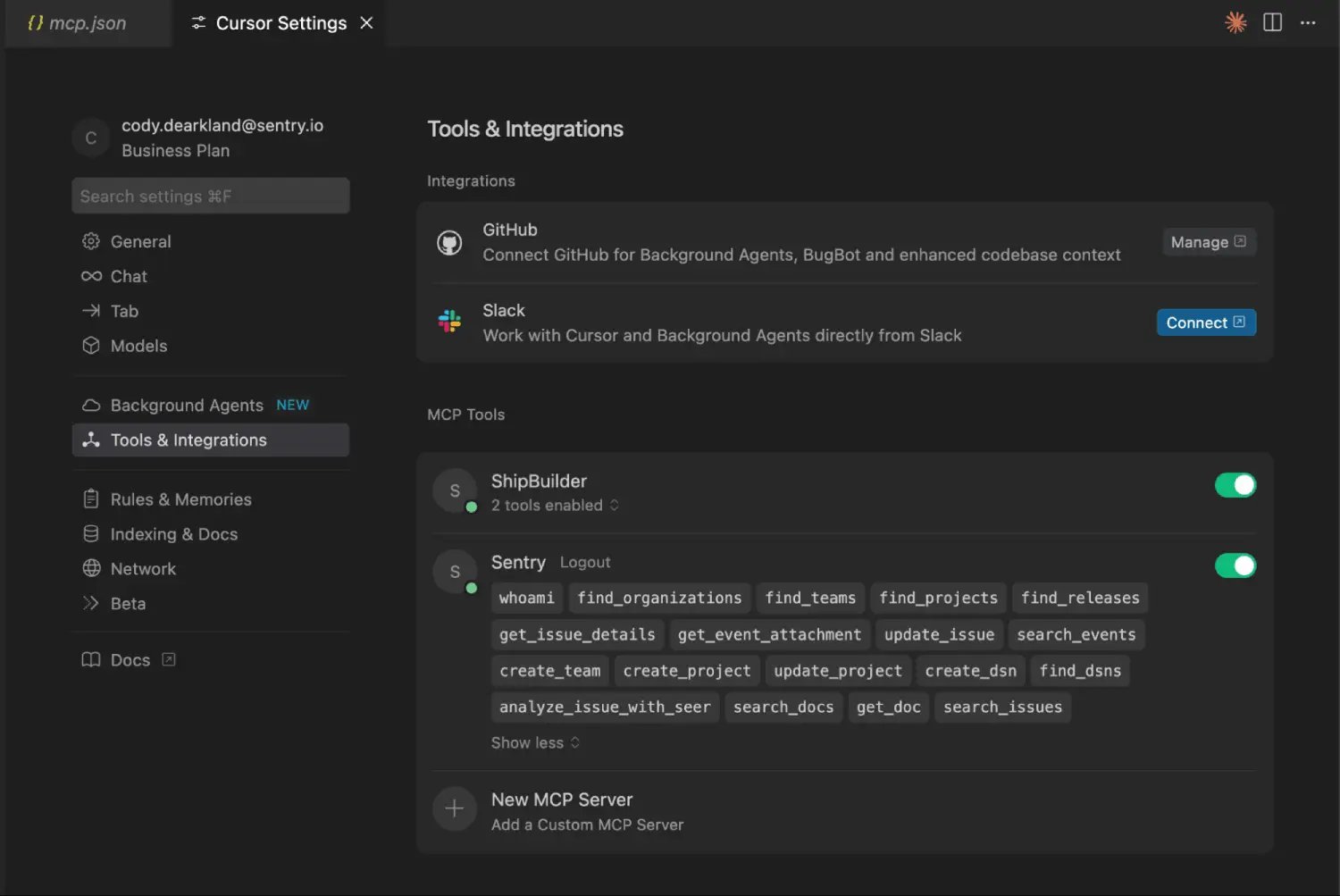

When working with AI-powered systems, traditional debugging approaches often fall short. Consider a scenario where an AI service is generating inconsistent responses for similar inputs. Unlike traditional software, where you can trace through deterministic code paths, AI systems require different investigative approaches.

This is where tools like Sentry become particularly valuable. Sentry's new MCP (Model Context Protocol) integration allows AI systems to directly investigate production issues, understand their impact, and suggest fixes based on actual context—no more copying and pasting error messages or trying to describe distributed tracing setups in chat.

The Sentry MCP tutorial demonstrates how AI can participate in its own debugging process, creating a feedback loop where the system helps diagnose its own behavior. This represents a shift from AI as a black box to AI as a component with observable boundaries.
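Independent of any particular tool, a useful first step with inconsistent responses is to record enough about each call to compare runs that should have matched. The sketch below assumes Java 17 or later (for records and `HexFormat`). `AiCallRecord`, `InconsistencyFinder`, and the choice of fields are hypothetical. It hashes each prompt with SHA-256, groups logged calls by model, temperature, and prompt hash, and flags any group that produced more than one distinct response.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.HexFormat;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;
import java.util.stream.Collectors;

// Hypothetical log entry: the minimum needed to compare or replay one AI call.
record AiCallRecord(String modelId, double temperature, String promptHash, String response) {}

final class InconsistencyFinder {

    // Hash the exact text sent to the model, including any injected context, so identical inputs share a key.
    static String sha256(String prompt) {
        try {
            byte[] digest = MessageDigest.getInstance("SHA-256")
                    .digest(prompt.getBytes(StandardCharsets.UTF_8));
            return HexFormat.of().formatHex(digest);
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-256 must be available on every Java platform", e);
        }
    }

    // Returns the keys (model | temperature | prompt hash) that produced more than one distinct response.
    static Set<String> inconsistentKeys(List<AiCallRecord> calls) {
        Map<String, Set<String>> responsesByKey = calls.stream().collect(Collectors.groupingBy(
                c -> c.modelId() + "|" + c.temperature() + "|" + c.promptHash(),
                Collectors.mapping(AiCallRecord::response, Collectors.toSet())));
        return responsesByKey.entrySet().stream()
                .filter(e -> e.getValue().size() > 1)
                .map(Map.Entry::getKey)
                .collect(Collectors.toCollection(TreeSet::new));
    }
}
```

Groups logged at higher temperatures may simply reflect sampling. Groups with low temperature and still-divergent answers are usually the more interesting ones to investigate.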

Architectural Implications

Understanding AI as an architectural layer means recognizing that:

- AI services have failure modes - They can produce degraded responses, hallucinate, or fail to respond

- AI services need boundaries - Clear interfaces for input/output, rate limiting, and fallback mechanisms

- AI services require monitoring - Different metrics than traditional services (confidence scores, token usage, latency distributions)

- AI services need testing strategies - Statistical testing, A/B comparisons, and human-in-the-loop validation

These considerations mirror how we approach other distributed system components. Just as we design for database failures, network partitions, and service timeouts, we must design for AI-specific failure modes.
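For AI calls, the familiar timeout-and-fallback pattern carries over almost unchanged. This is a minimal sketch using only `CompletableFuture` from the JDK (Java 11 or later). `GuardedAiCall` and the fallback text are hypothetical, and the `Supplier` stands in for whatever client actually calls the model. Slow responses, exceptions, and blank output all degrade to a fallback instead of failing the request.

```java
import java.time.Duration;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

final class GuardedAiCall {

    // Wraps a (hypothetical) model call with a timeout and a deterministic fallback.
    static String callWithFallback(Supplier<String> modelCall, Duration timeout, String fallback) {
        return CompletableFuture.supplyAsync(modelCall)
                // Treat slow responses like any other dependency timeout.
                .orTimeout(timeout.toMillis(), TimeUnit.MILLISECONDS)
                // Treat empty output as a degraded response, not a success.
                .thenApply(reply -> (reply == null || reply.isBlank()) ? fallback : reply)
                // Exceptions and timeouts both degrade to the fallback instead of failing the request.
                .exceptionally(ex -> fallback)
                .join();
    }

    public static void main(String[] args) {
        String reply = callWithFallback(
                () -> "Here is a summary of your ticket.",
                Duration.ofSeconds(2),
                "Summary unavailable right now.");
        System.out.println(reply);
    }
}
```

One caveat of this design: `orTimeout` completes the future exceptionally but does not stop the underlying task, so in production the model client's own timeout (or a dedicated, bounded executor) should also limit the call.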

Bridging Traditional and AI Systems

The most effective architectures often combine both approaches:

- Traditional layers handle business rules, data validation, and transactional consistency

- AI layers handle interpretation, generation, and decision support

- Orchestration manages the handoffs between these layers

For example, a customer support system might:

- Use traditional software to validate user authentication and authorization

- Pass structured context to an AI service for intent recognition and response generation

- Apply business rules to validate AI outputs before delivery

- Log all interactions for audit and improvement
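The four steps above map fairly directly onto code. The sketch below assumes Java 17 or later. `SupportContext`, `AiDraft`, `DraftService`, the allowed intents, and the 500-character limit are hypothetical stand-ins for your own types and business rules. Authentication gates the call, the AI layer drafts a reply from structured context, deterministic rules decide whether that draft may be delivered, and every interaction is logged.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

// Hypothetical structured context handed to the AI layer.
record SupportContext(String userId, String plan, String message) {}

// Hypothetical AI output: a classified intent plus a draft reply.
record AiDraft(String intent, String reply) {}

// Hypothetical boundary around the model; a real implementation would call an LLM client.
interface DraftService {
    AiDraft draft(SupportContext context);
}

final class SupportPipeline {
    static final String FALLBACK_REPLY = "Thanks for reaching out. A support agent will follow up shortly.";
    private static final int MAX_REPLY_CHARS = 500; // assumed business rule
    private static final Set<String> ALLOWED_INTENTS = Set.of("BILLING", "SHIPPING", "TECHNICAL");

    private final DraftService ai;
    private final List<String> auditLog = new ArrayList<>();

    SupportPipeline(DraftService ai) {
        this.ai = ai;
    }

    String respond(boolean authenticated, SupportContext ctx) {
        // 1. Deterministic gate: no model call happens for unauthenticated users.
        if (!authenticated) {
            auditLog.add("user=" + ctx.userId() + " rejected=unauthenticated");
            return "Please sign in to continue.";
        }
        // 2. AI layer: intent recognition and response generation from structured context.
        AiDraft draft = ai.draft(ctx);
        // 3. Business rules decide whether the draft may be delivered.
        boolean valid = draft != null
                && draft.intent() != null
                && ALLOWED_INTENTS.contains(draft.intent())
                && draft.reply() != null
                && !draft.reply().isBlank()
                && draft.reply().length() <= MAX_REPLY_CHARS;
        // 4. Log every interaction for audit and later improvement.
        auditLog.add("user=" + ctx.userId()
                + " intent=" + (draft == null ? "none" : draft.intent())
                + " valid=" + valid);
        return valid ? draft.reply() : FALLBACK_REPLY;
    }

    List<String> auditLog() {
        return List.copyOf(auditLog);
    }
}
```

Keeping validation outside the model means a rejected draft degrades to a safe canned reply rather than reaching the customer.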

This pattern leverages the strengths of each approach while mitigating their weaknesses.

The Developer's Role Evolves

As AI becomes an architectural layer, developer responsibilities expand:

- Prompt engineering becomes part of interface design

- Model evaluation becomes part of testing strategy

- Cost optimization becomes part of performance tuning

- Ethical considerations become part of security reviews

These aren't replacements for traditional skills—they're additions. The developer who understands both deterministic systems and probabilistic AI systems will be better equipped to design robust architectures.
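Treating prompts as part of interface design can be as simple as giving them a name, a version, and a fail-fast contract for their inputs, the same way a REST endpoint declares its parameters. In this sketch (Java 17 or later), `PromptTemplate` and the `{{placeholder}}` syntax are hypothetical conventions, not a specific library's API.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical: a prompt treated like a named, versioned interface contract.
record PromptTemplate(String name, int version, String template) {

    private static final Pattern PLACEHOLDER = Pattern.compile("\\{\\{(\\w+)\\}\\}");

    // Fills {{placeholders}} and fails fast when a required value is missing.
    String render(Map<String, String> values) {
        Matcher m = PLACEHOLDER.matcher(template);
        StringBuilder out = new StringBuilder();
        while (m.find()) {
            String key = m.group(1);
            String value = values.get(key);
            if (value == null) {
                throw new IllegalArgumentException(name + " v" + version + " is missing '" + key + "'");
            }
            // quoteReplacement keeps characters such as '$' and '\' from being read as group references.
            m.appendReplacement(out, Matcher.quoteReplacement(value));
        }
        m.appendTail(out);
        return out.toString();
    }
}
```

Bumping `version` whenever the wording changes makes it possible to tie evaluation results and production behavior to a specific prompt, much as API versioning does for endpoints.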

Conclusion

AI is no longer just a tool we call from our code. It's becoming an architectural layer with its own characteristics, requirements, and failure modes. By recognizing this shift, we can apply familiar distributed systems thinking to AI-powered applications.

The key insight is that AI doesn't replace traditional software architecture—it extends it. The same principles that make distributed systems reliable (clear boundaries, observability, graceful degradation) apply to AI systems, even if the implementation details differ.

In the next part, we'll explore how to design these boundaries effectively, ensuring that AI systems integrate smoothly with the deterministic components that remain essential for business logic and data integrity.
