Anthropic launches Claude for Healthcare, enabling U.S. subscribers to securely analyze health records through integrations with HealthEx and Function platforms.

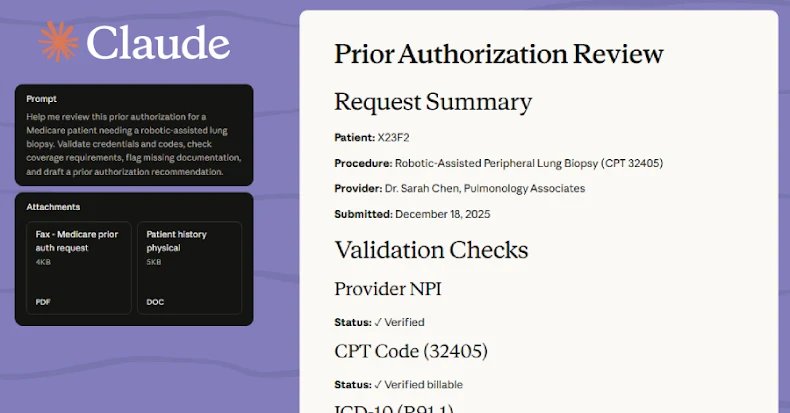

Anthropic has launched Claude for Healthcare, positioning its AI assistant as a medical information interpreter for U.S. subscribers. This move comes just days after OpenAI's ChatGPT Health announcement, accelerating the race to deploy AI in sensitive healthcare environments.

Claude Pro and Claude Max subscribers can now grant the AI access to their lab results and health records through integrations with HealthEx and Function, with Apple Health and Android Health Connect support rolling out this week. When connected, Claude can:

- Generate plain-language explanations of medical terminology

- Identify patterns across health metrics

- Prepare questions for doctor appointments

- Summarize complex medical histories

"The aim is to make patients' conversations with doctors more productive," Anthropic stated, while emphasizing that Claude "is not a substitute for professional healthcare advice."

Security Architecture

Privacy protections include:

- Opt-in data sharing: Users explicitly choose what information to share

- Instant revocation: Permissions can be edited or disconnected anytime

- Training exclusion: Health data isn't used to train Claude's models

- Contextual disclaimers: Outputs include reminders to consult professionals

The offering operates under Anthropic's Acceptable Use Policy, which requires a qualified professional to review Claude's output before it is used for medical diagnosis, patient care, or mental health guidance.

Industry Context

The expansion comes amid heightened scrutiny of medical AI accuracy. Recent developments include:

- Google removing AI-generated health answers found to contain dangerous inaccuracies

- Multiple studies showing LLMs hallucinating drug interactions and medical terminology

- Regulatory bodies drafting new frameworks for AI clinical validation

Practical Guidance

For healthcare organizations evaluating similar AI implementations:

- Verify disclaimers: Ensure all AI outputs contain visible warnings about potential inaccuracies

- Audit API connections: Regularly review the security of third-party integrations (such as HealthEx and Function)

- Implement human review gates: Require clinician validation for treatment suggestions

- Monitor bias: Test outputs across diverse patient demographics
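The disclaimer and review-gate items above can be illustrated with a small sketch. This is a hypothetical Python example, not Anthropic's, OpenAI's, or any integration partner's API: the `AIOutput` type, the `DISCLAIMER` wording, and the `release_output` and `with_disclaimer` helpers are invented for illustration, and it assumes some upstream step has already flagged which outputs count as treatment suggestions.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical disclaimer text; real wording would come from clinical and legal review.
DISCLAIMER = "This is not a substitute for professional healthcare advice."


@dataclass
class AIOutput:
    text: str
    # Assumed to be set upstream (e.g. by a rule or classifier); not a real library field.
    is_treatment_suggestion: bool
    # Identifier of the clinician who validated the output, if any.
    reviewed_by: Optional[str] = None


def with_disclaimer(text: str) -> str:
    """Return the text with the disclaimer appended, unless it is already present."""
    return text if DISCLAIMER in text else f"{text}\n\n{DISCLAIMER}"


def release_output(output: AIOutput) -> str:
    """Human review gate: block unreviewed treatment suggestions, then add the disclaimer."""
    if output.is_treatment_suggestion and output.reviewed_by is None:
        raise PermissionError("Treatment suggestion requires clinician validation")
    return with_disclaimer(output.text)


if __name__ == "__main__":
    summary = AIOutput("Your LDL result is above the lab's reference range.", False)
    print(release_output(summary))
```

Keeping the gate in a single release function means every output passes through the same check, which is simpler to audit than scattering review logic across the application.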

Both Anthropic and OpenAI explicitly position their healthcare offerings as supplemental tools rather than diagnostic systems. As Claude's iOS and Android apps add health integrations this week, medical professionals caution that AI interpretation layers should augment, not replace, clinical judgment.

"These systems excel at pattern recognition but lack contextual understanding of individual patient circumstances," notes Dr. Alicia Chang, biomedical ethicist at Stanford. "Any AI-generated health insight should undergo the same scrutiny as unverified online medical information."
