Anthropic introduces Claude Code Security, an AI-powered vulnerability scanning tool that analyzes codebases like human researchers, detects overlooked flaws, and suggests patches while maintaining human oversight.

As attackers increasingly leverage AI to automate vulnerability discovery, Anthropic has countered with Claude Code Security, a new AI-powered scanning capability designed to help defenders identify and patch security flaws before they are exploited. Currently available in limited research preview for Enterprise and Team customers, the system analyzes entire codebases using contextual reasoning rather than pattern matching alone.

"It scans codebases for security vulnerabilities and suggests targeted software patches for human review, allowing teams to find and fix security issues that traditional methods often miss," Anthropic stated in its announcement. The tool responds to a growing concern: attackers are using AI for rapid vulnerability discovery, which gives them an asymmetric advantage that Claude Code Security aims to neutralize.

How Claude Code Security Works

Unlike conventional static analysis tools, which match code against known patterns, Claude Code Security approaches a codebase the way a human security researcher would, by:

- Mapping component interactions across the application architecture

- Tracing data flows to identify unexpected pathways

- Detecting logic flaws and contextual vulnerabilities missed by rules-based scanners
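
To make "tracing data flows" concrete, here is a minimal Python sketch of the general idea: follow untrusted input through an application and report any route that reaches a sensitive operation without passing through a sanitizer. It is a toy illustration only and not a description of how Claude Code Security works internally. The graph, node names, and the `unsanitized_paths` helper are all hypothetical.

```python
from collections import deque

# Hypothetical data-flow graph: each key passes data to the nodes in its list.
# Every node name here is invented for illustration.
DATA_FLOW = {
    "http_request.params": ["parse_filters"],
    "parse_filters": ["build_query", "escape_sql"],
    "escape_sql": ["build_query"],
    "build_query": ["db.execute"],
    "config_file": ["build_query"],
}

UNTRUSTED_SOURCES = {"http_request.params"}
SENSITIVE_SINKS = {"db.execute"}
SANITIZERS = {"escape_sql"}


def unsanitized_paths(graph, sources, sinks, sanitizers):
    """Return every acyclic source-to-sink path that bypasses all sanitizers."""
    found = []
    queue = deque([src] for src in sources)
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node in sinks:
            found.append(path)
            continue
        for nxt in graph.get(node, []):
            # Data that enters a sanitizer is treated as clean, so that branch
            # is dropped; nodes already on the path are skipped to avoid cycles.
            if nxt in sanitizers or nxt in path:
                continue
            queue.append(path + [nxt])
    return found


if __name__ == "__main__":
    for path in unsanitized_paths(
        DATA_FLOW, UNTRUSTED_SOURCES, SENSITIVE_SINKS, SANITIZERS
    ):
        print(" -> ".join(path))
```

In this toy graph, `parse_filters` feeds `build_query` both directly and through `escape_sql`. Only the direct route is reported, which is the kind of unexpected pathway that matching on a single line of code would not reveal.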

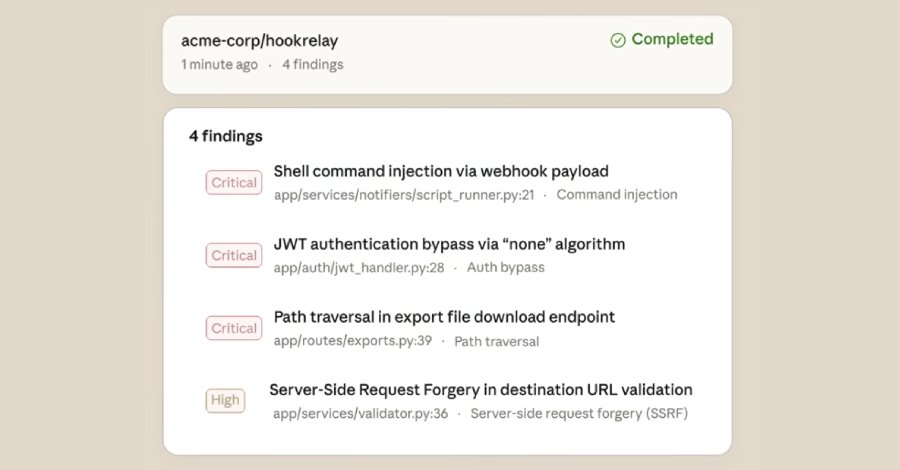

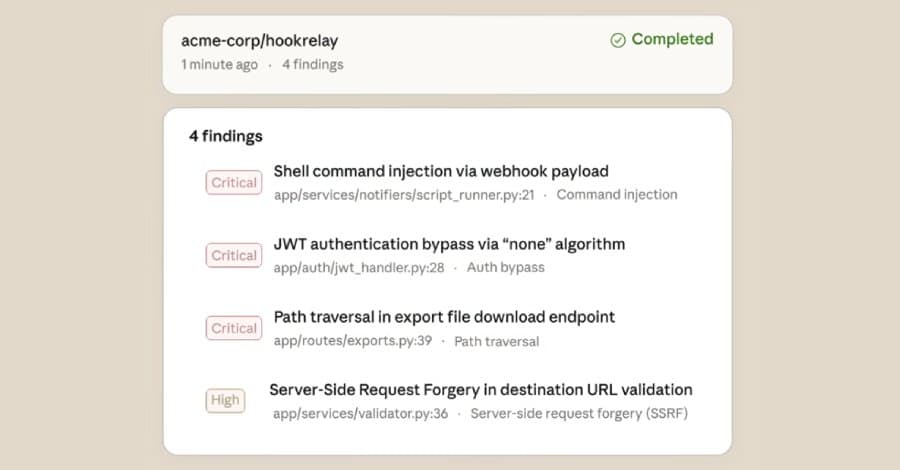

Each finding undergoes multi-stage verification to reduce false positives and receives a severity rating for prioritization. The dashboard then presents vulnerabilities alongside AI-generated patch suggestions, which developers can approve or modify.
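
The verify-then-prioritize flow described above can be sketched in a few lines of Python. Anthropic has not published the verification stages or the rating scale, so the two checks, the `Severity` levels, and the `Finding` fields below are assumptions chosen purely for illustration.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    # Hypothetical four-level scale; the real rating scheme is not documented here.
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


@dataclass(frozen=True)
class Finding:
    title: str
    severity: Severity
    reachable: bool            # can the flagged code be reached from an entry point?
    attacker_controlled: bool  # does untrusted input actually reach it?


def stage_reachable(finding):
    return finding.reachable


def stage_attacker_controlled(finding):
    return finding.attacker_controlled


# Assumed verification stages; a finding must pass all of them to be reported.
STAGES = [stage_reachable, stage_attacker_controlled]


def triage(findings, stages=STAGES):
    """Drop findings that fail any stage, then order the rest most severe first."""
    verified = [f for f in findings if all(stage(f) for stage in stages)]
    return sorted(verified, key=lambda f: f.severity, reverse=True)


if __name__ == "__main__":
    sample = [
        Finding("Verbose error page", Severity.LOW, True, True),
        Finding("SQL injection in dead code", Severity.CRITICAL, False, True),
        Finding("Auth bypass in password reset", Severity.HIGH, True, True),
    ]
    for finding in triage(sample):
        print(finding.severity.name, finding.title)
```

The critical-rated finding in unreachable code is filtered out as a likely false positive, so reviewers see the reachable high-severity issue first.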

The Human-in-the-Loop Imperative

Anthropic emphasizes that developers retain full control: "Because these issues often involve nuances difficult to assess from source code alone, Claude provides a confidence rating for each finding. Nothing is applied without human approval." This division of labor keeps contextual judgment with human reviewers while using AI to cover more code than manual review alone could.
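
A human approval gate of this kind can be sketched as follows. This is an assumed design, not Anthropic's implementation: the `PatchSuggestion` fields, the 0.0 to 1.0 confidence scale, and the `review` and `apply_approved` helpers are hypothetical. The point it illustrates is that confidence informs the reviewer but never substitutes for an explicit approval.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Decision(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class PatchSuggestion:
    finding: str
    diff: str
    confidence: float  # assumed 0.0 to 1.0 scale; shown to the reviewer only
    decision: Decision = Decision.PENDING
    reviewer: Optional[str] = None


def review(patch, reviewer, approve):
    """Record an explicit human decision on a suggested patch."""
    patch.decision = Decision.APPROVED if approve else Decision.REJECTED
    patch.reviewer = reviewer
    return patch


def apply_approved(patches, apply_fn):
    """Apply only patches a human approved, regardless of confidence."""
    applied = []
    for patch in patches:
        if patch.decision is Decision.APPROVED:
            apply_fn(patch)
            applied.append(patch)
    return applied


if __name__ == "__main__":
    unreviewed = PatchSuggestion("SQL injection", "diff-a", confidence=0.99)
    approved = review(
        PatchSuggestion("Insecure direct object reference", "diff-b", confidence=0.60),
        reviewer="alice",
        approve=True,
    )
    for patch in apply_approved([unreviewed, approved], apply_fn=lambda p: None):
        print("applied:", patch.finding, "approved by", patch.reviewer)
```

Note that the unreviewed patch stays unapplied even at 0.99 confidence, while the approved patch goes through at 0.60.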

Practical Implications for DevSecOps Teams

- Threat mitigation: Counters AI-powered offensive security tools by accelerating defensive discovery

- Integration: Fits into existing CI/CD pipelines alongside SAST/DAST tools

- Adoption path: Currently in limited research preview for Enterprise and Team customers; evaluate it during the preview to plan for a future rollout

- Limitations: Effectiveness varies by codebase complexity; human validation remains essential

Security experts note that while AI-assisted scanning marks significant progress, it complements rather than replaces traditional methods. Teams should combine Claude's contextual analysis with dynamic testing and manual review, particularly for critical systems. As AI security tools evolve, maintaining this balanced approach will be crucial for effective defense.

For organizations in Anthropic's preview program, initial testing shows promise in identifying business logic flaws and complex chain-of-trust vulnerabilities in web applications and cloud services. Wider availability timelines remain unconfirmed, but the preview offers early insight into AI's growing role in secure development lifecycles.
