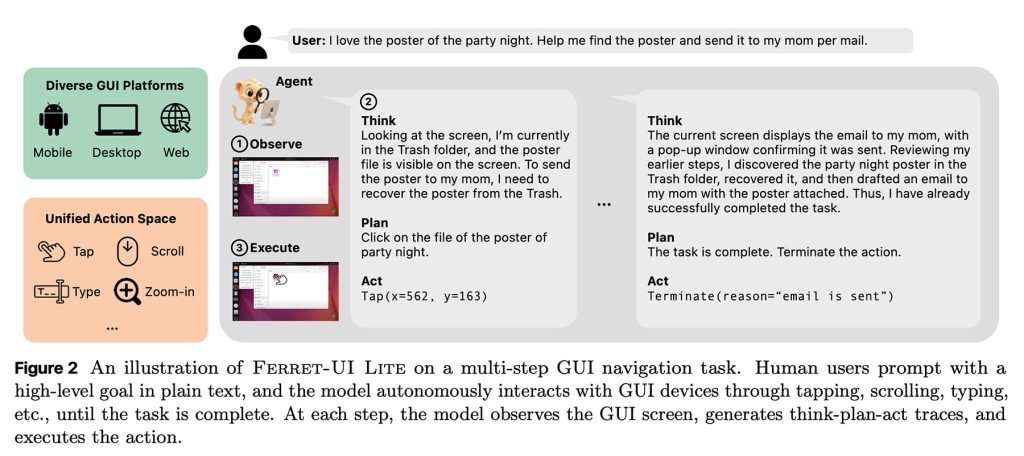

Apple researchers developed Ferret-UI Lite, a 3-billion-parameter AI model that runs locally on devices to interact with app interfaces. Despite its small size, it performs comparably to models up to 24 times larger, helped by techniques such as inference-time cropping and zooming and synthetic training data.

Apple researchers have introduced Ferret-UI Lite, a compact 3-billion-parameter model that can understand and interact with app interfaces directly on mobile devices. Described in a new study titled "Ferret-UI Lite: Lessons from Building Small On-Device GUI Agents", the model performs comparably to GUI agents with up to 24 times as many parameters.

The Evolution of Apple's Ferret Models

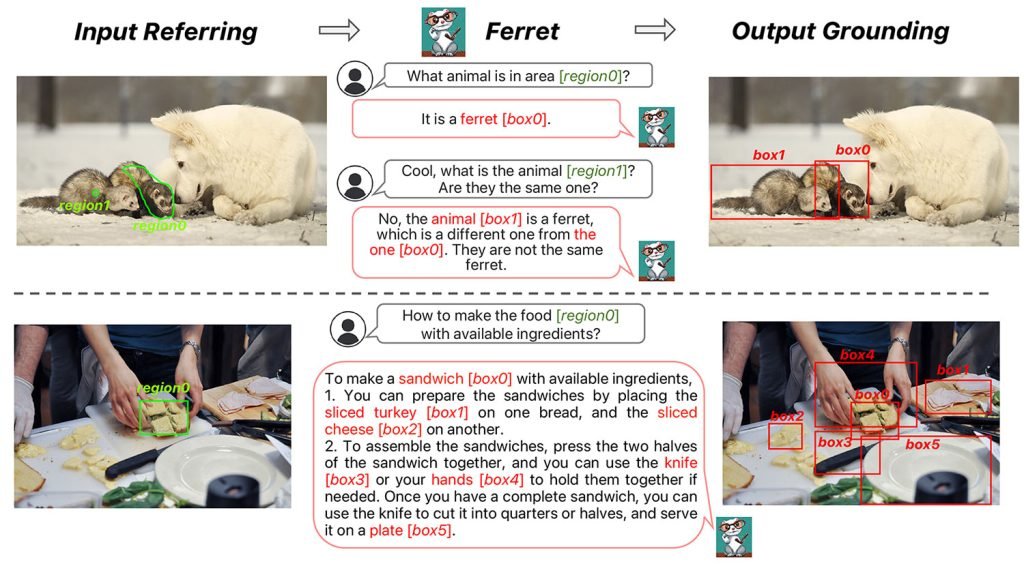

The Ferret lineage began in December 2023 with FERRET (Refer and Ground Anything Anywhere at Any Granularity), a multimodal model that could interpret natural language references within images. Subsequent iterations evolved specifically for user interfaces:

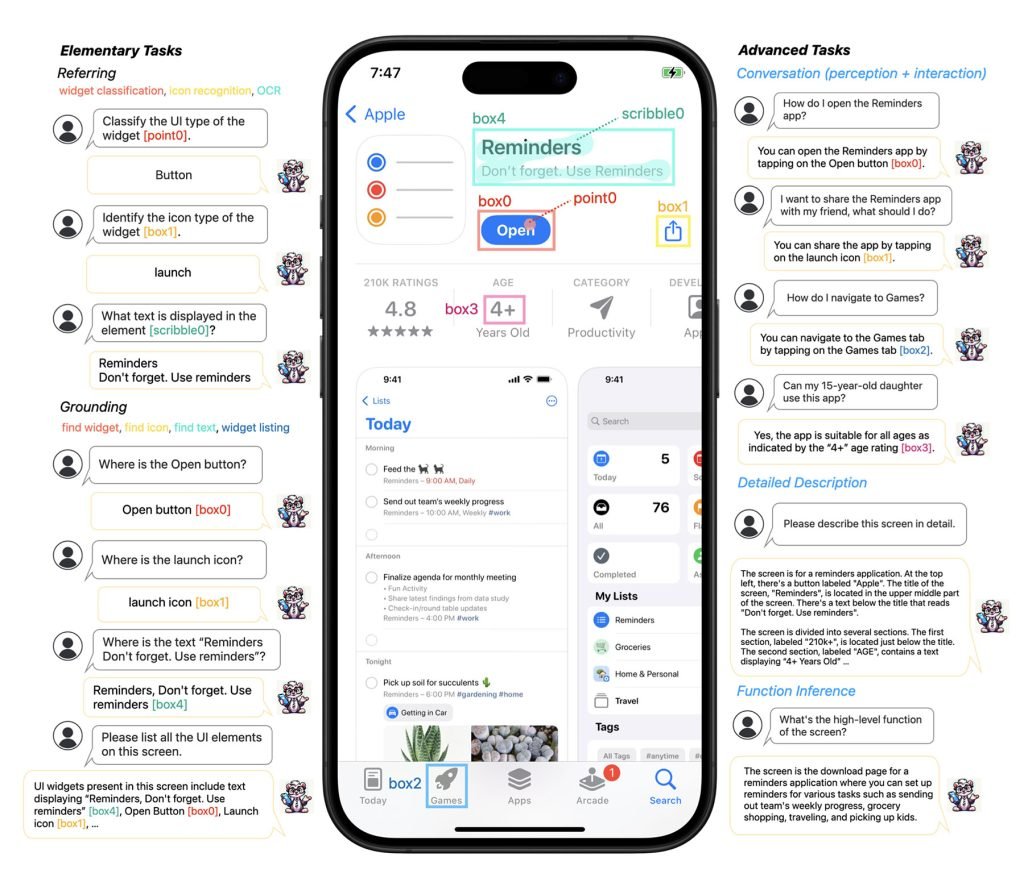

- Ferret-UI (2024): Tailored for mobile screens with "any resolution" processing to handle elongated aspect ratios and small UI elements

- Ferret-UI 2: Added multi-platform support and higher-resolution processing

- Ferret-UI Lite: The new 3B-parameter variant optimized for on-device execution

"Recent general-domain multimodal models often fall short in comprehending user interfaces," Apple researchers noted in their original Ferret-UI paper. This limitation prompted specialized development for GUI interaction.

Caption: The original Ferret-UI demonstrated natural language interaction with app interfaces (right)


Technical Innovations Behind Ferret-UI Lite

What makes Ferret-UI Lite remarkable isn't just its small size, but how it compensates for limited parameters:

Dynamic Cropping & Zooming: During inference, the model makes an initial prediction, crops the screen around the predicted region, and then re-analyzes the magnified crop to refine its answer. This lets it examine small interface elements in detail without processing the entire screen at high resolution.
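The coordinate bookkeeping behind a crop-and-zoom pass can be sketched in a few lines. This is a minimal illustration, not the paper's implementation: the function names, the fixed crop size, the `zoom` factor, and the `coarse` and `refine` callables that stand in for the two model passes are all assumptions made for the example.

```python
from typing import Callable, Tuple

Box = Tuple[int, int, int, int]   # left, top, right, bottom in screen pixels
Point = Tuple[float, float]       # x, y


def crop_box_around(point: Point, screen: Tuple[int, int], crop: Tuple[int, int]) -> Box:
    """Return a crop window centred on `point`, shifted so it stays on screen."""
    (x, y), (sw, sh) = point, screen
    cw, ch = min(crop[0], sw), min(crop[1], sh)
    left = min(max(int(x - cw / 2), 0), sw - cw)
    top = min(max(int(y - ch / 2), 0), sh - ch)
    return (left, top, left + cw, top + ch)


def to_screen(point_in_zoomed_crop: Point, box: Box, zoom: float) -> Point:
    """Map a point predicted on the magnified crop back to screen coordinates."""
    px, py = point_in_zoomed_crop
    return (box[0] + px / zoom, box[1] + py / zoom)


def locate(
    coarse: Callable[[], Point],             # hypothetical first pass over the full screen
    refine: Callable[[Box, float], Point],   # hypothetical second pass over the magnified crop
    screen: Tuple[int, int],
    crop: Tuple[int, int],
    zoom: float = 2.0,
) -> Point:
    rough = coarse()                              # 1. initial prediction
    box = crop_box_around(rough, screen, crop)    # 2. crop around it
    return to_screen(refine(box, zoom), box, zoom)  # 3. re-predict, then map back


# Toy stand-ins for the two model passes on a 1080x2400 screen.
result = locate(
    coarse=lambda: (1050, 30),
    refine=lambda box, zoom: (740.0, 56.0),
    screen=(1080, 2400),
    crop=(400, 400),
)
print(result)  # (1050.0, 28.0)
```

The clamping in `crop_box_around` matters for targets near a screen edge: the window slides inward rather than shrinking, so the second pass always sees a crop of the same size.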

Automated Training Pipeline: Researchers created a multi-agent system that generates synthetic training data by interacting with live GUIs:

- Curriculum generator creates progressively difficult tasks

- Planning agent breaks tasks into steps

- Grounding agent executes on-screen actions

- Critic model evaluates outcomes
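The four roles above can be wired together as a simple data-collection loop. The sketch below is only an illustration of that control flow under stated assumptions: the `Trajectory` type, the `collect` function, and the toy stand-ins for each agent are hypothetical, and dropping trajectories the critic rejects is an assumed design choice rather than something the article confirms.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Trajectory:
    task: str
    steps: List[str] = field(default_factory=list)
    actions: List[str] = field(default_factory=list)
    accepted: bool = False


def collect(
    curriculum: Callable[[int], str],      # difficulty level -> task description
    planner: Callable[[str], List[str]],   # task -> ordered steps
    grounder: Callable[[str], str],        # step -> concrete on-screen action
    critic: Callable[[Trajectory], bool],  # trajectory -> did it succeed?
    levels: int,
) -> List[Trajectory]:
    """Run one task per difficulty level and keep the critic-approved trajectories."""
    kept = []
    for level in range(levels):
        task = curriculum(level)
        traj = Trajectory(task=task, steps=planner(task))
        traj.actions = [grounder(step) for step in traj.steps]
        traj.accepted = critic(traj)
        if traj.accepted:  # assumption: rejected runs are discarded
            kept.append(traj)
    return kept


# Toy stand-ins so the loop runs without any model or live GUI.
def toy_curriculum(level: int) -> str:
    return " then ".join(f"open item {i}" for i in range(level + 1))

def toy_planner(task: str) -> List[str]:
    return task.split(" then ")

def toy_grounder(step: str) -> str:
    return f"tap({step!r})"

def toy_critic(traj: Trajectory) -> bool:
    return len(traj.actions) <= 2  # pretend longer tasks fail

data = collect(toy_curriculum, toy_planner, toy_grounder, toy_critic, levels=3)
print(len(data))  # 2
```

In a real pipeline each callable would wrap a model call and the grounder would act on a live interface; the plain-function version here only makes the hand-offs between the roles explicit.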

Hybrid Learning Approach: Combines supervised fine-tuning with reinforcement learning, capturing real-world interaction nuances like error recovery.

Performance and Trade-offs

Benchmarked on the AndroidWorld and OSWorld environments, Ferret-UI Lite achieves:

- Near parity with 70B+ parameter models on basic UI tasks (button identification, text recognition)

- Competitive results on single-step interactions

- Reduced effectiveness on complex, multi-step workflows

Interestingly, this iteration was trained and evaluated on Android, web, and desktop environments rather than Apple's ecosystem. Researchers cited better availability of reproducible testbeds for these platforms.

Implications for Mobile Developers

Ferret-UI Lite represents significant progress toward practical on-device AI agents:

- Privacy Preservation: Local processing eliminates the need to send screen data to the cloud

- Resource Efficiency: Enables sophisticated UI interaction without server dependencies

- Cross-Platform Potential: Demonstrates capabilities across Android, web, and desktop interfaces

While larger models still handle complex workflows better, Ferret-UI Lite shows that small models can deliver competent GUI interaction. The researchers summarize what that trade-off buys: "Ferret-UI Lite offers a local, private agent that autonomously interacts with app interfaces based on user requests."

For developers, this research signals Apple's investment in practical on-device AI that could eventually power features like:

- Automated UI testing

- Accessible interaction helpers

- Contextual in-app assistants

- Cross-platform automation tools

The complete study details Ferret-UI Lite's architecture, training methodology, and benchmark comparisons across various GUI interaction tasks.
